Vibe Coding Conundrums

A look at the rise of "vibe coding" and its implications for the state of Application Security (AppSec)

One of the most recent trends arising from the wave of GenAI, LLMs and AI more broadly is “vibe coding”. While I’ve seen several interpretations and definitions of what it means, the term is primarily attributed to Andrej Karpathy, who defined it in the tweet below:

This has been discussed in depth on podcasts from venture capital firms such as Y Combinator and a16z (see below):

This was further corroborated by commentary from YC, which stated that 25% of startups in its current cohort have codebases that are almost entirely AI-generated. This comes on the back of what is being called “vibe coding”, where developers (whether we should even call them that remains an open question) use LLMs to describe what they want created without actually writing most, or any, of the code themselves.

Now don’t get me wrong, this is a testament to the real and promised potential of AI and LLMs for use cases including coding. It also offers an incredible path not just to a “productivity” boon for existing developers, many of whom say they are more productive thanks to AI coding copilots, but to the broad democratization of development for those with no development background at all.

As Karpathy quipped, “the hottest new programming language is English”. The point, of course, is that as LLMs and AI coding tools become more capable, coding will largely be done via prompt engineering rather than rote coding and traditional software development.

Adoption is indeed soaring, with GitHub, for example, reporting millions of paid GitHub Copilot subscribers and double-digit YoY growth, a trend that seems to be accelerating. There are of course several other leading AI-driven coding tools and platforms, such as Cursor, which also reports tens of thousands of paying customers, but I use GitHub as an example because it is one of the largest enterprise players.

Interested in sponsoring an issue of Resilient Cyber?

This includes reaching over 45,000 subscribers, ranging from Developers, Engineers, Architects, CISOs/Security Leaders and Business Executives.


Potential Challenges

While vibe coding is definitely riding a wave of excitement, there are some potential challenges that lie ahead too.

In the bastardized words of John Mayer, we may be vibe coding in a burning room.

Put succinctly by Ben South on X:

This, of course, is from the operational perspective, but there are security concerns as well. If the vibe coding wave, and code-by-prompt-engineering more broadly, isn’t accompanied by specific instructions related to secure coding and applications, what does the cybersecurity landscape look like for these nonchalantly developed applications?

While one may assume that developers will take the time to understand how something has been developed and why it works, that is similar to assuming that developers/organizations will prioritize things like security on par with speed to market and revenue.

It’s naive at best.

We know developers, and vendors more broadly, are incentivized to prioritize code and product velocity, speed to market, revenue and keeping pace with competitors over slowing down, implementing engineering rigor and, for the purposes of this article, cybersecurity.

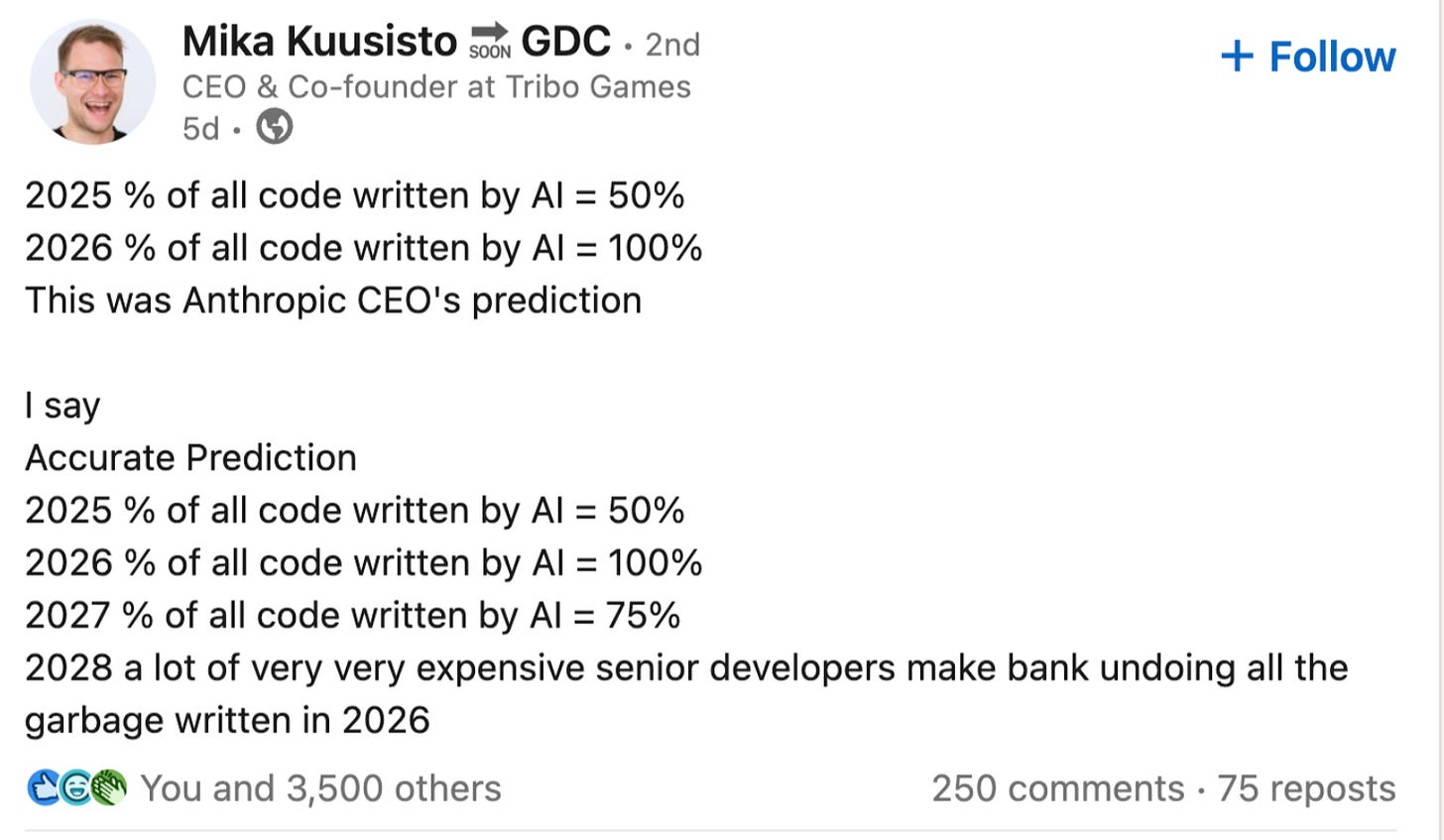

I’ve seen a couple of posts recently that really articulate where I think we may be headed.

The one above highlights how we will likely see increasing amounts of development outsourced entirely to AI. Over time, however, security and technical debt will accumulate, and someone who actually knows how software works, and can debug and fix underlying systemic issues in applications, will have to step in to address fundamental problems that went unnoticed or were simply ignored while we rode the wave of “vibes”.

Some have also voiced a growing sentiment that “new junior Developers can’t actually code”, pointing out that junior devs are using Copilot and other AI coding tools but lack any deep understanding of what they’re shipping or how it actually works.

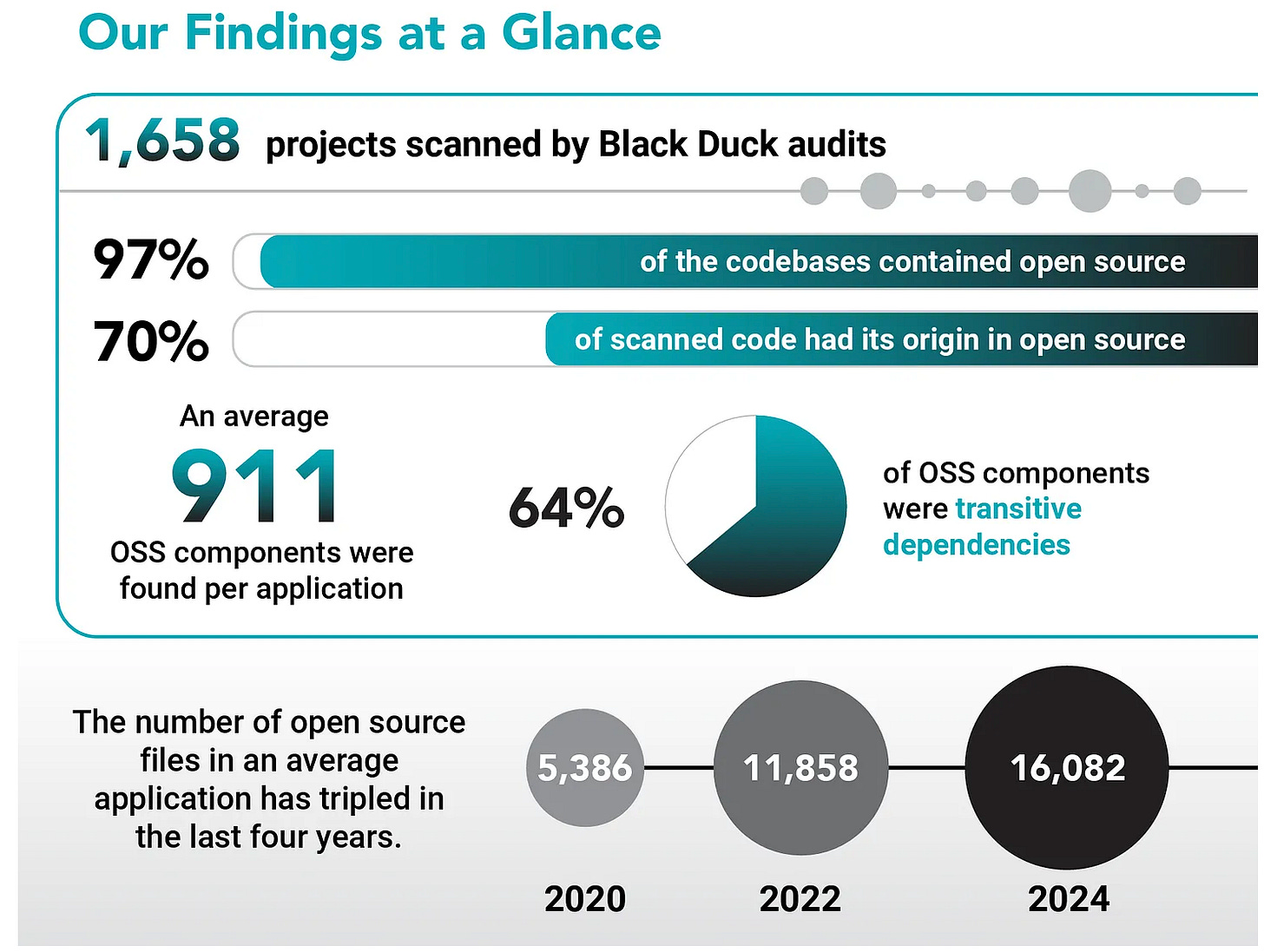

Another challenge here is that many of the frontier models are trained on large swaths of open source software, rather than on proprietary databases of “secure” code (assuming we as an industry could even define such a thing).

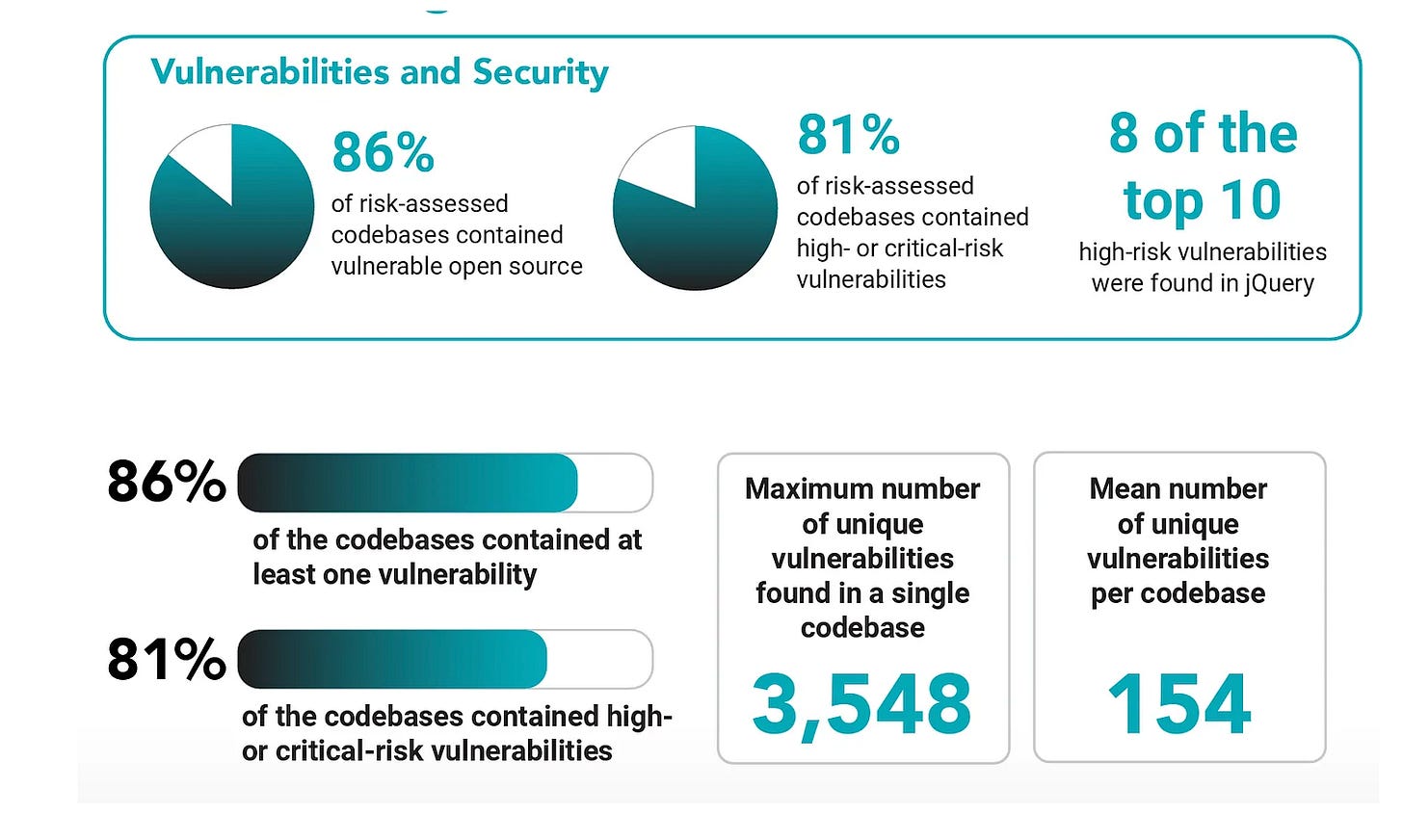

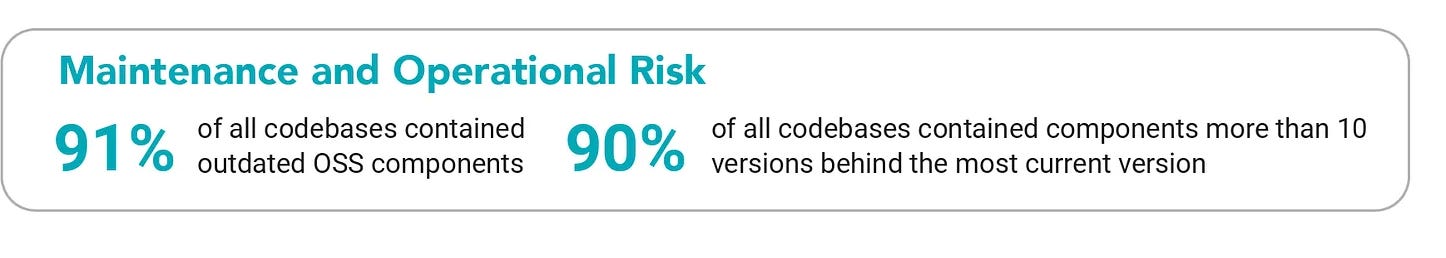

As I have discussed in other articles, such as “The 2025 Open Source Security Landscape”, not only is open source pervasive, but it is porous too.

These codebases are major problems, both in terms of vulnerabilities in the open source code, many of which have no available patches or remediations, and in terms of “code rot”, lending credibility to the saying that software ages like milk: codebases carry open source components that are many versions behind, years out of date, no longer seeing active development and more.

As developers vibe their way to production, they will likely bring along many of these same characteristics, perhaps unknowingly, if they never slow down to implement any engineering or security rigor, or if they lack any experience in software development entirely.

Opportunities

I don’t want to just complain about problems and challenges without also discussing opportunities. While vibe coding and AI-driven development may present quite a few challenges, the same technologies offer some incredible opportunities too.

While I don’t want to bore you with a long diatribe about the state of AppSec and vulnerability management as it stands now, the reality is that most organizations are drowning in massive vulnerability backlogs, with hundreds of thousands or millions of vulnerabilities.

In fact, more than half of organizations remediate only about 10% of the vulnerabilities they encounter, leading to a perpetual spiral of security technical debt and vulnerability backlogs.

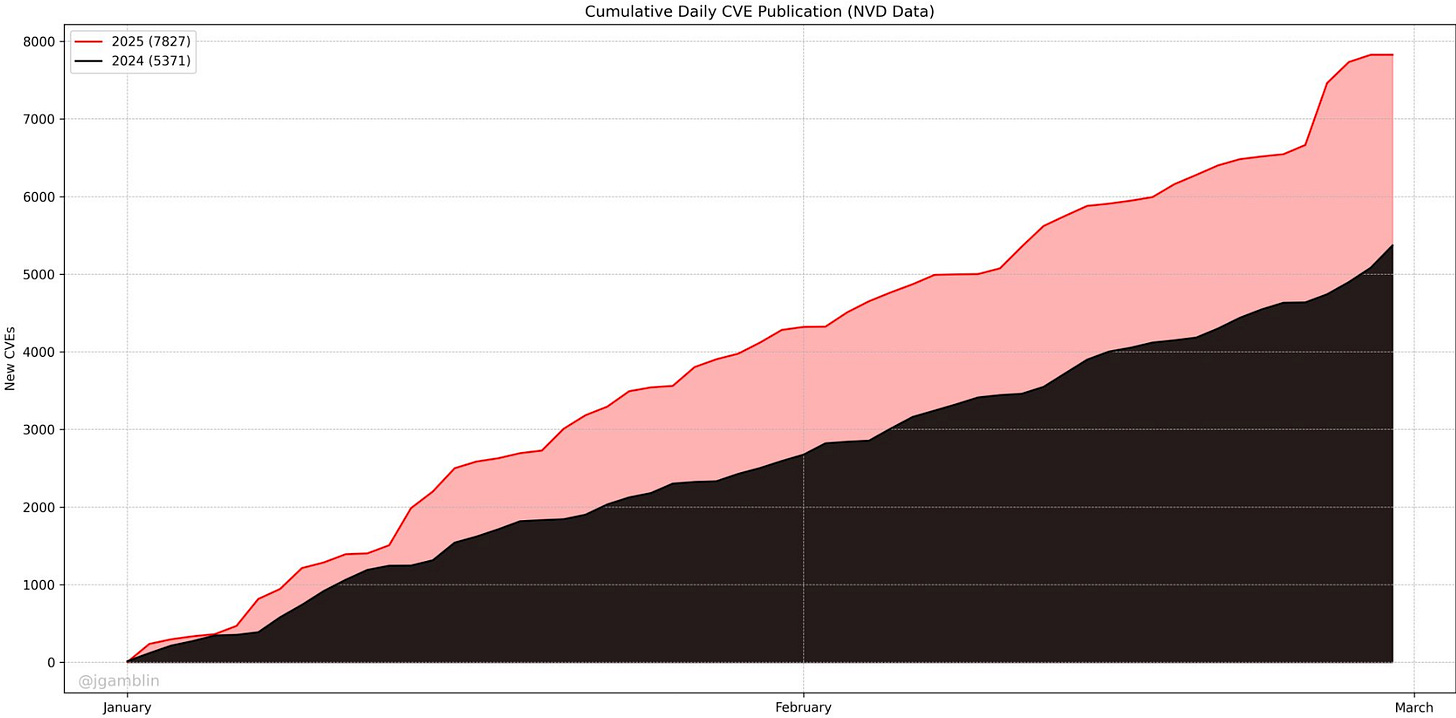

The annual number of vulnerabilities, or CVEs if you’re orienting around the NIST National Vulnerability Database (NVD), continues to see double-digit YoY growth, and organizations’ capacity for remediation is far outpaced by vulnerability backlogs that just continue to climb.

Per vulnerability researcher Jerry Gamblin, as of March 2025 we are already seeing 48.37% YoY growth in CVEs over 2024, which also saw double-digit growth (as did the year prior, and the year before that; you get the picture).
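To make the backlog arithmetic concrete, here is a minimal Python sketch under purely hypothetical assumptions: a team starts with 1,000 new findings per year, that inflow grows 15% YoY, and the team fixes 10% of each year’s new findings. The function name `project_backlog` and all of the numbers are illustrative, not data from any report.

```python
def project_backlog(new_per_year: float, growth: float, fix_rate: float, years: int) -> list[float]:
    """Toy model: each year's new findings grow by `growth`; only `fix_rate` of them
    get fixed, and the rest accumulate in the backlog."""
    backlog = 0.0
    inflow = new_per_year
    history: list[float] = []
    for _ in range(years):
        backlog += inflow * (1 - fix_rate)  # unfixed findings carry over to next year
        history.append(backlog)
        inflow *= 1 + growth                # next year's inflow compounds
    return history


if __name__ == "__main__":
    # Hypothetical inputs: 1,000 new findings/year, 15% YoY growth, 10% fixed.
    print([round(b) for b in project_backlog(1000, 0.15, 0.10, 5)])
    # [900, 1935, 3125, 4494, 6068]
```

Even in this toy model the backlog grows faster than linearly, because a fixed fix rate cannot absorb an inflow that itself compounds every year.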

I’ve discussed this at length in many articles, such as:

And many others.

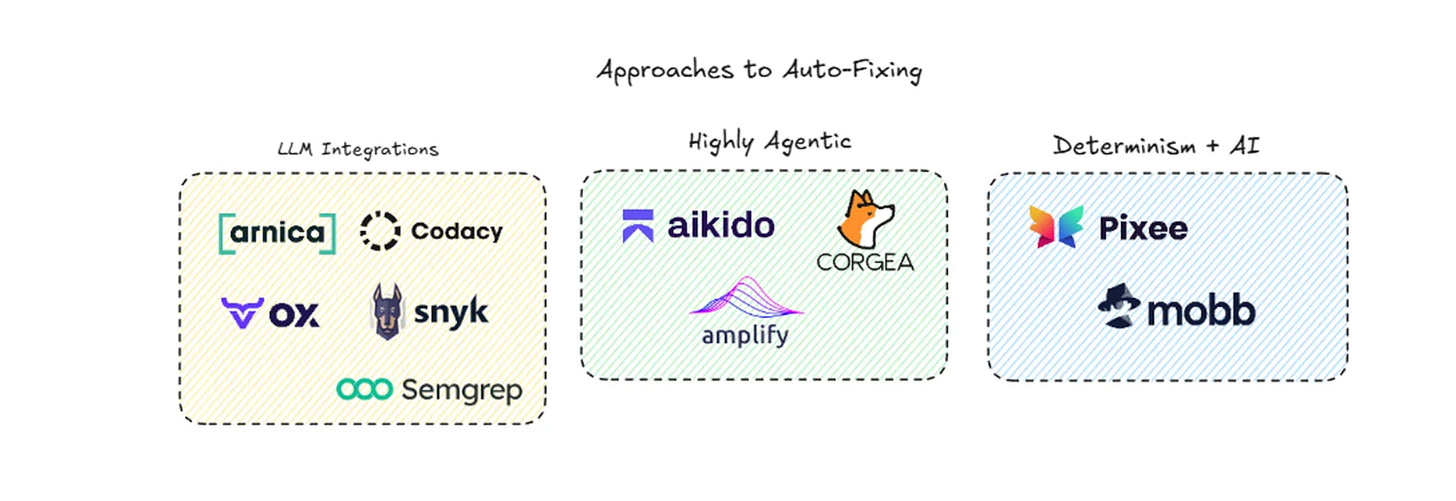

That said, many startups and innovators are looking to leverage the same AI technology to address these systemic challenges in AppSec. James Berthoty at Latio Tech recently wrote a piece, “Evaluating the AI AppSec Engineering Hype”, discussing the concept of “Auto-Fixing” and various companies and their approaches, spanning LLM Integrations, Highly Agentic Workflows, and Determinism + AI.

I share this not as an endorsement of any of the companies, but just to show that several are looking to use AI for AppSec, rather than just leaving AppSec to deal with the potential fallout associated with vibe coding and AI-driven development.

Others, such as Ghost Security, are using AI and agents for activities such as proactive risk identification, dynamic testing and even autonomous remediation (to the extent that organizations have the appetite for auto-remediation, which will likely need to grow over time if we have any hope of keeping pace with threats and vulnerabilities).

However, I suspect it will take quite some time for many organizations to feel comfortable with auto-remediation, especially since these very same LLMs and AI tools can hallucinate, are non-deterministic and may make mistakes, including ones that could impact applications and systems running in production and, in turn, customers and consumers.

There’s also the opportunity for LLMs to act as a “judge”, not just writing code but reviewing what has been written, functioning as AppSec copilots for developers, especially given that security staff are often outnumbered by developers 100:1 (the ratio depends on the organization, but it is often significant). This allows LLMs to work in tandem in agentic workflows, with some developing code and others reviewing it, potentially remediating findings and/or surfacing them to developers, pushing us closer to the Secure-by-Design paradigm we continue to hear so much about and long for.
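The writer/judge pattern described above can be sketched as a small control loop. This is a minimal Python sketch, not any vendor’s implementation: `generate` and `review` are hypothetical stand-ins for calls to a code-writing model and a reviewing model, and the toy stubs simply flag SQL built with an f-string.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ReviewResult:
    code: str
    findings: list[str] = field(default_factory=list)
    rounds: int = 0
    escalate: bool = False  # True when findings remain and a developer should look


def write_and_review(
    task: str,
    generate: Callable[[str, list[str]], str],
    review: Callable[[str], list[str]],
    max_rounds: int = 3,
) -> ReviewResult:
    """Hypothetical writer/judge loop; `generate` and `review` stand in for LLM calls."""
    findings: list[str] = []
    code = ""
    for round_no in range(1, max_rounds + 1):
        code = generate(task, findings)  # writer sees the judge's previous findings
        findings = review(code)          # judge returns a list of security issues
        if not findings:
            return ReviewResult(code, [], round_no, escalate=False)
    # Out of rounds: surface the remaining findings to a developer instead of shipping.
    return ReviewResult(code, findings, max_rounds, escalate=True)


# Toy stubs standing in for the two models (hypothetical, for illustration only).
def toy_generate(task: str, feedback: list[str]) -> str:
    if any("SQL injection" in f for f in feedback):
        return 'cur.execute("SELECT * FROM users WHERE id = ?", (user_id,))'
    return 'cur.execute(f"SELECT * FROM users WHERE id = {user_id}")'


def toy_review(code: str) -> list[str]:
    if 'execute(f"' in code:
        return ["possible SQL injection: query built with an f-string"]
    return []


if __name__ == "__main__":
    result = write_and_review("look up a user by id", toy_generate, toy_review)
    print(result.rounds, result.escalate)  # 2 False
```

Capping the number of rounds and escalating to a human, rather than looping until the judge is satisfied, is a deliberate choice in this sketch given how non-deterministic both models can be.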

A great paper on the topic is “A Deep Dive into LLMs for Automated Bug Localization and Repair”, and there is also news about Google’s efforts, such as Big Sleep and using AI to level up fuzzing and find more vulnerabilities. These efforts let us identify and remediate vulnerabilities at a scale and pace that wasn’t possible before the current wave of LLMs and AI.

All that aside, I firmly believe the AppSec vendors that will have the most success going forward will be those that don’t just point out problems and create more work, but actively solve problems, fix issues, proactively burn down technical debt and provide business value.

Closing Thoughts

This is far from an exhaustive discussion of vibe coding and its implications, or of the potential for AI and agents in AppSec, but I did want to get some thoughts out there on this evolving topic.

Will we see a boon for development, productivity and applications due to AI?

Will we also see issues with tech debt, a lack of inherent knowledge of how things were built, how they work, or how to fix them?

Will we see exponential growth of the attack surface as existing developers become more productive and others are able to vibe things to production, security be damned?

Much of this remains to be seen, but we can certainly get a sense of where it is headed.

I want to close by sharing a hypothetical exchange posted by Simon Wardley, whom I recently followed on LinkedIn and who has some great posts:

Conversations from the future ...

X : I built this system, it's critical for our company. No-one understands how it works. It has stopped working.

Me : Ok, don't worry. Have you tried asking the AI to fix it?

X : It keeps saying it has fixed it but it's still broken. It's stuck in a loop.

Me : Ah ha, so that's why you called me. Do you have the test scripts?

X : What are those?

Me : No problem. Do you have the specification docs?

X : I used prompts.

Me : Ok, well prompts are in fact a Turing complete language when the AI has memory but they also provide the specification - which is great. They are pure gold. Can I get a record of your prompts?

X : Why would I?

Me : Would what?

X : Record the prompts.

Me : So, we have a broken system with no tests, no specification, no prompts and no way of knowing what a working system looks like other than vague half remembered notions in your head. But we do have code which we don't understand and isn't working. I have had worse - at least you are still alive and I can ask questions. Is this a fair summary?

X : You seem to be talking down to me. You know, I'm a certified Vibe Engineer. I get paid more than you.

Me : The certified part I can definitely believe; as for the pay, well, that's how mad our society is.

X : What should I do?

Me : This is fixable. Have you tried switching the machine off and on again?

X : Will that help?

Me : I just wanted to watch you try. It's a distributed remote AI.

X : Are you taking the mick?

Me : The primary role of engineering is decision making and you're not making any informed decisions - the AI is. You're more of a Vibe Eloi rather than a Vibe Engineer. To fix this I'm going to need to wrangle your AI into building some context specific tools in order to try and understand what you have built and where the problem is. Think of me, as a Vibe Whisperer. What you have been doing is sacrificing understanding for convenience. You deserve a bit of gentle ribbing. You need to learn some basic practices.

X : You're a dinosaur.

Me : Do you want some help or do you want to find that on/off switch? Whilst you are at it, can you buy me a pack of bubbles for my spirit level. It's also broken.