Resilient Cyber Newsletter #70

The End of Cybersecurity, UK’s Costliest Cyber Attack EVER, AWS’s US-East-1 Crashes the Internet, Building Secured Agents, Decade of AI Agents, Cisco’s AI “CodeGuard” & Closing Supply Chain Risks

Welcome!

Welcome to Issue #70 of the Resilient Cyber Newsletter.

I would say it’s been a crazy week, but this is starting to feel like the new status quo in cybersecurity and tech, from nation-state cyber incidents, widespread outages, and costly cyberattacks to the continued push of AI, Agents, and their intersection with AppSec.

I found many of the resources and topics this week to be both fascinating and concerning, and I suspect you will as well.

Interested in sponsoring an issue of Resilient Cyber?

This includes reaching over 40,000 subscribers, ranging from Developers, Engineers, and Architects to CISOs/Security Leaders and Business Executives.

Reach out below!

Effective Cloud Security Requires a Unified Platform

Cloud-native speed comes with cloud-sized risk. As teams spin up and down resources at record pace, security gets buried in complexity. Point solutions for prevention, posture, and response just can’t keep up.

ESG reveals why organizations need a consolidated, integrated approach that streamlines security, reduces risk in real time, and delivers runtime protection where it matters most.

Cyber Leadership & Market Dynamics

The End of Cybersecurity: America’s Digital Defenses Are Failing, but AI Can Save Them

Industry leader Jen Easterly took to Foreign Affairs to make the case (again) that the U.S. does not have a cybersecurity problem; it has a software quality problem. This is a point she has made in various prior talks, including during her time leading CISA.

Thankfully, she calls out the real crux of the problem, which is that vendors have few, if any, incentives to produce secure products or software, or to prioritize security more broadly. This incentive problem is well known, both from a market perspective and a regulatory one, and I have written extensively about it in prior articles, such as “Software’s Iron Triangle: Cheap, Fast and Good - Pick Two”.

But Jen takes an optimistic view, pointing to recent advancements in AI to highlight how we can fix systemic software problems across the digital landscape. She argues that U.S. government agencies, companies, and investors must shift their economic incentives and adopt AI early to enhance the U.S. cyber posture.

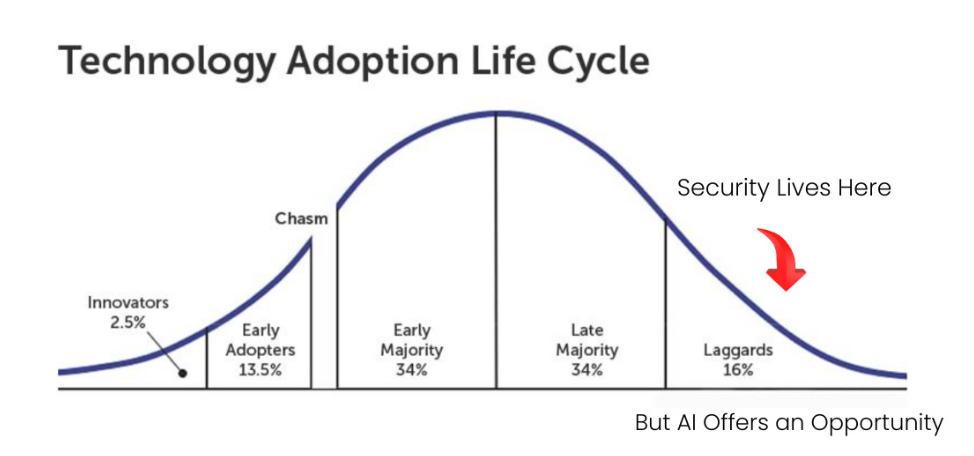

I made this same point in an article of mine titled “Security’s AI-Driven Dilemma”, where I made the case that the cyber community must shift from being a late adopter and laggard of emerging technology to being an early adopter and innovator if we hope to keep pace with developers and attackers, both of whom are eagerly adopting this promising technology.

Speaking of Jen and her role in the industry, she recently shared that she is joining other industry leaders such as Chris Inglis and Marcus Hutchins in the first full-length documentary created by a cybersecurity company (Semperis), titled “Midnight in the War Room”. I have to say, the trailer looks incredible and I’m psyched to check out the whole film when it is live!

China Accuses US of a Cyberattack of the National Time Center

In a story that caught my attention due to its irony, the Chinese Ministry of State Security alleged that the U.S.’s NSA exploited vulnerabilities in messaging services of a foreign mobile phone brand to steal sensitive information.

I found this story interesting because we typically can’t go more than a week without a U.S. government and/or technology leader discussing the ongoing cyber attacks by China against U.S. companies, critical infrastructure, and software. In a bit of a reverse uno, China is now levying similar claims against the U.S., with these dating back to 2022 and 2023.

Undoubtedly, both nations are doing exactly what they are accusing the other of; however, from the public’s perspective, we will never truly know to what extent, how often, and by what means.

JLR hack is costliest cyber attack in UK history

I recently shared how Jaguar Land Rover (JLR) had a cyber incident that led to the UK Government stepping in with what amounts to a bailout. The incident is now estimated to cost £1.9bn and to be the “most economically damaging cyber event in UK history”, according to some researchers.

It halted JLR’s car production for five weeks and rippled across its entire supply chain, affecting 5,000 businesses. A full recovery isn’t expected until January 2026.

Your third party’s breach costs as much as your own

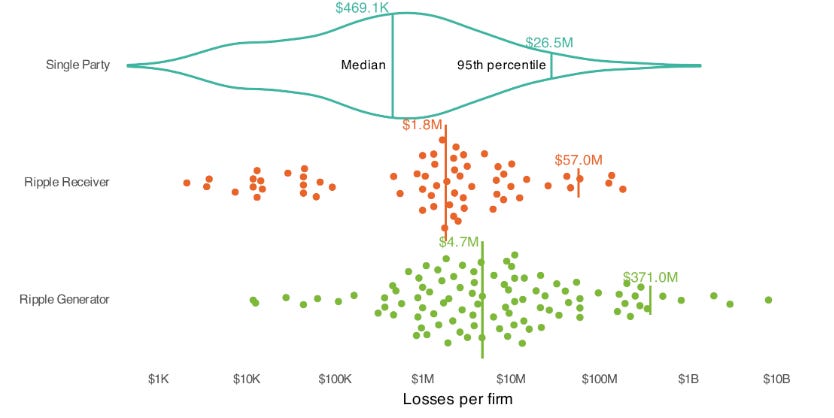

Year after year, we continue to see third-party risk management be a problem for organizations, but we often don’t have good insight into how much of a problem it is financially. This piece from Cyentia Institute shines a light on the fact that an organization’s third party having a breach can cost almost as much as the organization having one itself.

In their research, Cyentia refers to the organization that is the source of the incident as the “ripple generator” and those that are impacted as the “ripple receiver”. They compare reported financial losses across three groups: single-party incidents, the generating organization of a multi-party incident, and the downstream organizations impacted by those events (receivers).

What is surprising in the data is that organizations on the receiving end of a multi-party ripple event have higher losses than typical single-party incidents (e.g., median of $1.8M vs. $470k), showing just how impactful these third-party incidents can be for those caught in the ripple.

Cyentia goes on to show how the impact of these incidents is changing over time:

“The per-firm costs of ripple receivers have been increasing and are now roughly equal to those of generators”.

The Internet Hinges on AWS’s US-East-1

Headlines and panic broke out this past Monday amid outages associated with AWS’s US-East-1 region, which reportedly underpins large portions of the Internet. The outages impacted many downstream organizations built on AWS and the US-East-1 region, leading to a cascading impact across the supply chain.

Some even took to LinkedIn to borrow the original XKCD comic to poke fun at the absurdity of our reliance on this one single location from a single hosting provider.

The headlines of the event even made mainstream news sources, such as CNN and others.

This is as good a time as any for organizations to review their business continuity and disaster management plans, as well as to determine the fault tolerance of their operations and single points of failure.

Today is when Amazon brain drain finally sent AWS down the spout

While the full details of the incident haven’t been disclosed quite yet, some are speculating that it could be the result of an exodus of seasoned senior engineering talent from AWS due to layoffs and various rounds of return to the office (RTO). This piece from The Register cites senior AWS engineers who have left and who even predicted that incidents and outages would come in the near future due to a “brain drain” at AWS.

Learned Helplessness is Hurting the Security Industry

If you’ve been in cybersecurity for more than a month, you inevitably know the collective sense of learned helplessness our industry suffers from: the feeling that we can never stop the attacks, hackers will inevitably get in, consumers/markets don’t give a sh*t about security, and more.

It can all be a bit depressing, and it certainly doesn’t do anything to make us more secure, even if the collective commiserating somehow makes us feel better. My friend Ross Haleliuk penned a great piece discussing how this sense of learned helplessness is hurting the security industry.

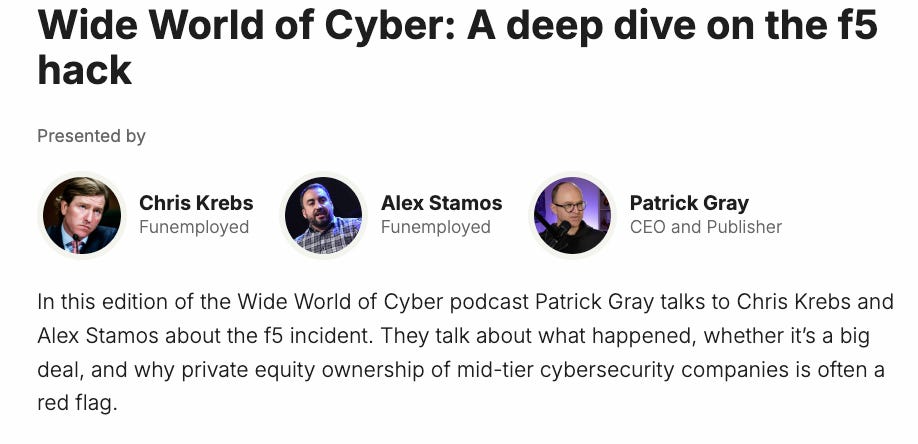

Wide World of Cyber: A deep dive on the F5 Hack

Last week, I shared how CISA had issued an emergency directive due to the exposure of the source code of a large security vendor, F5, in an incident. This issue continues to be urgently discussed in the community, and I found the recent episode of Risky Biz, with its usual host Patrick Gray, along with industry leaders Chris Krebs and Alex Stamos, to be a valuable conversation.

They dove into not only the details and potential implications of the incident, but also similar incidents Chris and Alex have been involved in, what it takes to recover from an incident like this as a vendor (e.g., F5), including parallels to past incidents such as SolarWinds and others. They discussed an often-overlooked aspect, which is the role of private equity, M&A, and Frankenstein legacy products, among others.

Additionally, they touched on the potential of AI to rewrite legacy insecure code and products, which is something Jen Easterly emphasized in her “The End of Cybersecurity” Foreign Affairs piece I shared above.

AI

Building Secured Agents

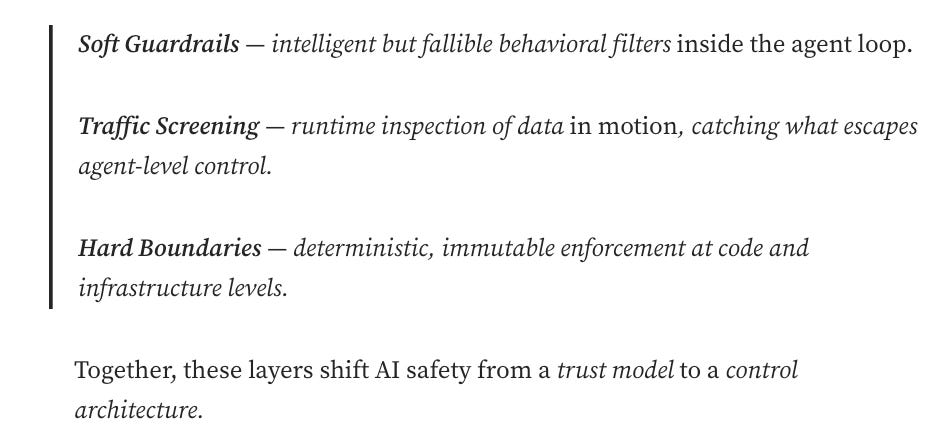

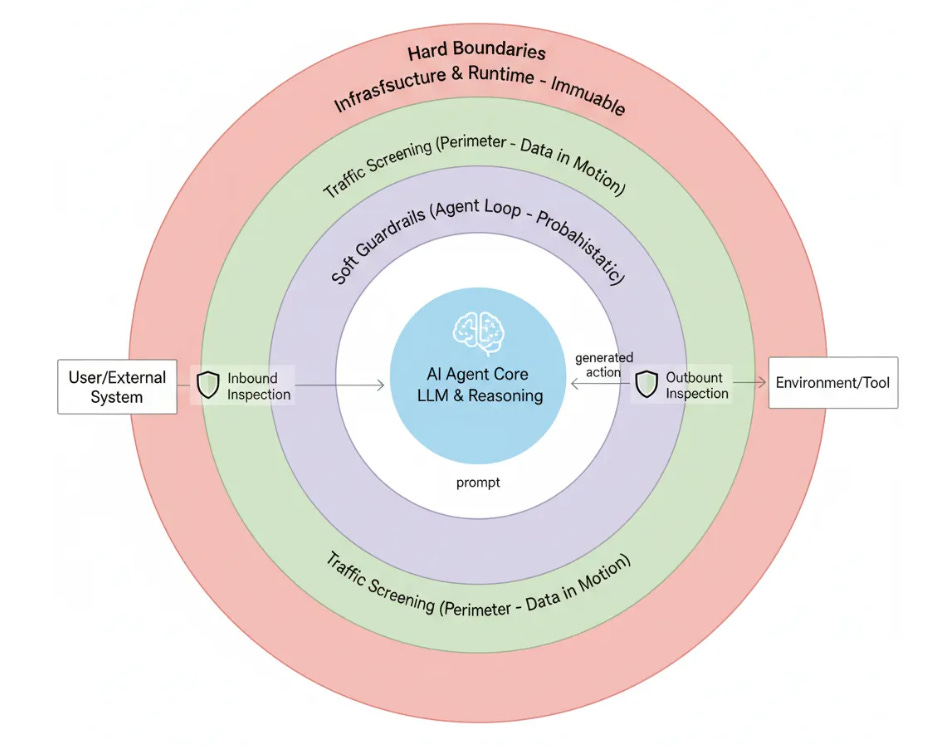

As we continue to explore the intersection of Agentic AI and Cybersecurity, key terms such as soft guardrails and hard boundaries have emerged when discussing the security of Agentic AI.

This recent blog by Idan Habler, Guy Shtar, and Ron F. Del Rosario covers these concepts, emphasizing that none of these concepts in isolation is sufficient, and we need all three layers to function together to secure Agentic AI. They summarize the three concepts below, as well as provide a conceptual visual model:

Governing Agentic AI Internationally

We often discuss AI and Agentic AI Governance, but what does that look like on a global scale, particularly as it blurs international nation-state boundaries? The Partnership on AI (PAI) has published a policy brief outlining a framework for addressing such challenges.

The publication examines existing and emerging policies worldwide regarding AI and AI governance, as well as existing international law, non-binding norms, and accountability mechanisms.

It goes on to discuss moving from Principles to Action and provides detailed steps governments and companies can take.

The LLM Dependency Trap

Everyone continues to acknowledge how AI and LLMs are reshaping modern software development. That said, these amazing tools also introduce new complexity and risks. Brian Fox of Sonatype wrote a good piece recently discussing the challenges of how LLMs choose their dependencies during development.

Brian stressed that LLMs don’t understand context to know what is secure, what is maintained, and what organizational policies may or may not allow, leading to dependencies that are often outdated, vulnerable, or have licensing risks as well. While Brian didn’t state it, the outdated and vulnerable part is further complicated by the fact that models’ training data often is 6-12 months old, all but ensuring the dependencies will indeed be outdated, if not vulnerable.

Brian discusses how attackers are well aware that developers implicitly trust AI-generated code, leading them to take advantage of LLM hallucinations and abuse prompt patterns to plant malicious dependencies across the OSS ecosystem, which are subsequently incorporated into training data for models.

One line of Brian’s sums up what I think the core of the problems around AI-driven development will be for some time to come:

“The AI doesn’t know better, and if your devs aren’t questioning the suggestion, the attacker wins by default”.

The above point summarizes the challenge we face. Age-old conflicts in priorities mean that speed to market, revenue, productivity, etc., outweigh security concerns and will continue to do so, and the problem is exacerbated by the “boost” developers receive from AI coding.

This means faster iterative development, an exponential scale, and a more porous attack surface, as developers inherently trust the code recommended by AI. Brian argues that teams must use guardrails at the point of generation, context-aware dependency evaluation, and enforceable policies to mitigate AI-driven development risks, and I tend to agree with him.

The problem is, most teams won’t do these things.
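For readers wondering what an enforceable policy check on AI-suggested dependencies might look like, here is a minimal sketch. Everything in it is hypothetical: the `Dependency` record, the license allowlist, the one-year freshness threshold, and the sample values are assumptions for illustration, not Sonatype’s tooling or any real registry API. In practice, the metadata would come from whatever software composition analysis or registry data a team already has.

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical policy values; an organization would set its own.
ALLOWED_LICENSES = {"MIT", "Apache-2.0", "BSD-3-Clause"}
MAX_DAYS_SINCE_RELEASE = 365


@dataclass
class Dependency:
    """Illustrative metadata for a dependency suggested by an AI assistant."""
    name: str
    version: str
    license: str
    last_release: date
    known_vulnerabilities: int
    exists_in_registry: bool  # False models a hallucinated package name


def policy_violations(dep: Dependency, today: date) -> list[str]:
    """Return the reasons a suggested dependency fails policy (empty if it passes)."""
    reasons = []
    if not dep.exists_in_registry:
        reasons.append("package not found in registry (possible hallucination)")
    if dep.license not in ALLOWED_LICENSES:
        reasons.append(f"license {dep.license} not allowed")
    if (today - dep.last_release).days > MAX_DAYS_SINCE_RELEASE:
        reasons.append("no release in the past year (possibly unmaintained)")
    if dep.known_vulnerabilities > 0:
        reasons.append(f"{dep.known_vulnerabilities} known vulnerabilities")
    return reasons
```

A gate like this only helps if it blocks the build or the suggestion when the list is non-empty; as an optional report, it is easy to ignore.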

Andrej Karpathy - “We’re summoning ghosts, not building animals.”

In an interview that set the Internet ablaze (albeit not in the way AWS’s outage did), AI industry leader Andrej Karpathy sat down on a podcast with Dwarkesh Patel to discuss the state of AI, LLMs, the industry, and more. Several of the comments Andrej made sent X, LinkedIn, and the broader AI social ecosystem into a fury, as some came to agree with him, and some to disagree. A few of the notable quotes are below:

“This is not the year of agents. It is the decade of agents”.

“I feel like the industry is making too big of a jump and is trying to pretend like this is amazing, and it’s not. It’s slop. They’re not coming to terms with it, and maybe they’re trying to fundraise or something like that... We’re at this intermediate stage”.

“My critique of the industry is more in overshooting the tooling w.r.t. present capability... The industry lives in a future where fully autonomous entities collaborate in parallel to write all the code, and humans are useless”.

“They just don’t work. They don’t have enough intelligence, they’re not multimodal enough... They’re cognitively lacking and it’s just not working”.

Now, when someone with a track record in AI and tech, such as Andrej, speaks, I, along with many others, take notice, and I have learned a lot from his perspectives on AI and its progress, challenges, opportunities, and more, including his prior framing of “Software 3.0”.

The comments he made in this episode seemed to reiterate we indeed may be in a bubble and the industry is over-hyping AI capabilities due to needing to fundraise, having financial incentives tied to AI’s progress, and other reasons, none of which change the state of where we stand with AI in reality.

I can’t pretend to understand some of the more nuanced and technical aspects of LLMs and AI that Andrej and Dwarkesh discussed at points of the discussion, but I found the entire interview fascinating given the current market dynamics around AI, including within cybersecurity.

Failing to Understand the Exponential, Again

In prior newsletters, I shared pieces in which some raised concerns that we are in an AI bubble, as well as pieces on the U.S. economy’s dependence on the AI boom that we’re experiencing. In a somewhat contrarian take, Julian Schrittwieser penned an interesting piece discussing how people are failing to grasp the exponential growth in capabilities that AI has achieved.

Julian argues that people look at some of the mistakes or gaps AI has and jump to conclusions that progress is slowing down or even going in the wrong direction, and that AI will never be able to do tasks at human levels, at least anytime soon. He makes the case that what AI is capable of now would have been seen as science fiction just a few years ago.

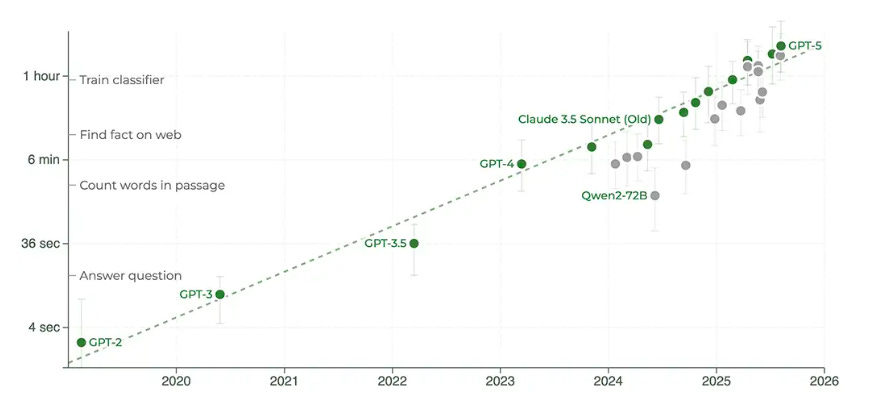

To make his case, he points to METR, an organization built to study AI capabilities, and its study “Measuring AI Ability to Complete Long Tasks”, which measures the length of software engineering tasks AI can autonomously perform. The study shows that the length of tasks AI can do is doubling every 7 months.
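To make the doubling claim concrete, here is a small worked sketch of the arithmetic. The 7-month doubling period is the figure cited above; the one-hour starting point is an assumed placeholder chosen for easy math, not a number from METR.

```python
DOUBLING_PERIOD_MONTHS = 7  # doubling period cited from the METR study above


def projected_task_length(start_hours: float, months_elapsed: float) -> float:
    """Task length after months_elapsed, assuming steady exponential doubling."""
    return start_hours * 2 ** (months_elapsed / DOUBLING_PERIOD_MONTHS)


# Assumed starting point of 1 hour, purely for illustration.
for months in (0, 7, 14, 21, 28):
    hours = projected_task_length(1.0, months)
    print(f"after {months:2d} months: {hours:.0f} hours")
```

Under that assumption, three doublings (21 months) turn a one-hour task horizon into eight hours, which is why a trend that looks modest month to month compounds quickly if it holds.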

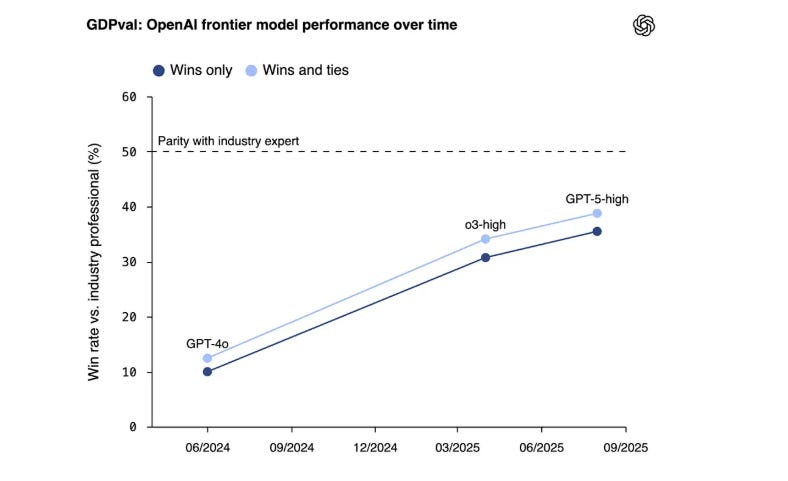

Granted, that is just activities associated with software development and so on, so Julian goes on to point to GDPVal from OpenAI, which looks at performance in 44 different occupations across 9 industries, as seen below:

The evaluation includes 30 tasks per occupation, for over 1,300 tasks in total, contributed by industry experts averaging 14 years of experience. OpenAI found that GPT-5 had incredible performance, nearly on par with that of a human.

Julian closes by making the case that if current trends hold, 2026 will be a breakout year for AI: models will be able to perform a full 8-hour working day autonomously, and a single model will match the performance of human experts across many industries. By 2027, he argues, models will surpass many human experts on many of the tasks.

I’m not sure whether I agree, but the exponential growth in capability is definitely something worth discussing.

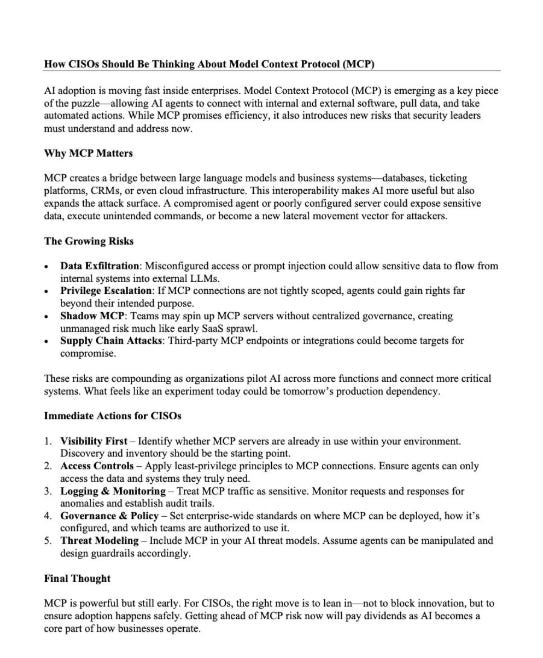

How CISOs Should Be Thinking About Model Context Protocol (MCP)

One of the aspects of AI and Agentic AI in particular that has dominated discussions in 2025 is that of MCP from Anthropic. It enables AI agents to connect with internal and external software, data, tools, and more, helping give AI agents “arms and legs”. That said, it has also brought its own security risks and concerns, which I have previously shared, including novel attacks such as “rug pulls” and teams discovering intentionally malicious MCP servers in the wild.

Fred Kneip recently shared an excellent one-pager aimed at CISOs on how they should be thinking about MCP.

AppSec

Cisco Unveils Project “CodeGuard”, an open-source framework to secure AI-written software

As we all know by now, code generation is among the top use cases of early AI and LLM adoption. That said, as I have written about and shared many times, the quality (security) of AI-generated code is highly suspect, and the code is vulnerable more than half the time.

This is leading to challenges among those who are security conscious as well as a likely explosion of the porous attack surface in the years to come as “vibe-coded” applications enter production environments.

The industry continues to explore solutions to this challenge, to enable secure AI coding adoption, and the latest example comes from industry leader Cisco, in an open-sourced framework they shared called Project CodeGuard, which is a unified, model-agnostic framework that can apply guardrails before, during, and after AI-assisted code generation.

It includes a core rule set aligned with standards such as OWASP, CWE, etc., to counter recurring flaws such as hardcoded secrets, missing input validation, crypto gaps, and more. They state the rule set can be used in planning and specification to help guide the AI coding agents to safer patterns and development.
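To illustrate the kind of recurring flaw such rule sets target, here is a minimal sketch of a hardcoded-secret check. To be clear, this is not CodeGuard’s rule format or implementation; the two regex patterns and the `find_hardcoded_secrets` helper are my own assumptions, and real rule sets cover far more cases.

```python
import re

# Illustrative patterns only; real rule sets are far broader.
SECRET_PATTERNS = {
    # AWS access key IDs follow a well-known "AKIA" + 16 characters shape.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # A credential-like name assigned a quoted literal of 8+ characters.
    "hardcoded_credential": re.compile(
        r"(?i)\b(password|passwd|secret|api_key|token)\b\s*=\s*['\"][^'\"]{8,}['\"]"
    ),
}


def find_hardcoded_secrets(source: str) -> list[tuple[int, str]]:
    """Return (line_number, rule_name) pairs for lines matching a pattern."""
    findings = []
    for line_number, line in enumerate(source.splitlines(), start=1):
        for rule_name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((line_number, rule_name))
    return findings
```

A pattern check like this is cheap to run on generated code before it is committed, but it will miss plenty and flag some false positives, which is consistent with the point that such guardrails supplement review rather than replace it.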

That said, thankfully, they also pragmatically point out that it isn’t a replacement for human oversight or review, but can help minimize the initial vulnerabilities and flaws in AI code.

Why LLMs Aren’t Ready for Predicting Vulnerability Exploitation (Yet)

Everywhere we turn, we see LLMs and AI being proposed as a panacea. Alert fatigue, compliance, research, triage, and more. Some are even saying it will find a cure for cancer. But predicting vulnerability exploitation?

Not quite, at least not yet.

This is a good piece from Michael Roytman of Empirical Security discussing the topic. Mike lays out how LLMs perform poorly compared to purpose-built approaches such as the Exploit Prediction Scoring System (EPSS), and cost more as well. They tested leading models such as GPT, Claude, and Gemini on a dataset of 50,000 CVEs. None of the models exceeded a 70% efficiency threshold, and if they kept the process up in an ongoing fashion hourly, it would run up to $288,000 PER DAY.
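To put that daily figure in perspective, here is a quick back-of-the-envelope breakdown. The $288,000 per day and hourly cadence come from the piece as summarized above; the per-CVE number rests on my assumption that each hourly run rescans the full 50,000-CVE dataset and that cost scales linearly.

```python
DAILY_COST_USD = 288_000  # figure quoted above
RUNS_PER_DAY = 24         # hourly runs
CVES_PER_RUN = 50_000     # assumption: each run rescans the full test dataset

cost_per_run = DAILY_COST_USD / RUNS_PER_DAY
cost_per_cve = cost_per_run / CVES_PER_RUN  # only valid under the assumption above
annual_cost = DAILY_COST_USD * 365

print(f"per hourly run: ${cost_per_run:,.0f}")
print(f"per CVE scored: ${cost_per_cve:.2f}")
print(f"per year:       ${annual_cost:,.0f}")
```

That works out to $12,000 per hourly run and roughly $105 million per year, or about 24 cents per CVE scored under the stated assumption.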

So while AI may cure cancer, it isn’t ready to predict vulnerability exploitation, not yet.

Closing the Chain: How to reduce your risk of being SolarWinds, Log4j or XZ Utils

If you’re reading the headline of the above link, you inevitably recognize the names, because they are some of the most notable software supply chain incidents we’ve experienced in recent years. From commercial software vendors (SolarWinds), or open source software (Log4j and XZ Utils), we’ve seen a ton of software supply chain attacks, and I’ve even written an entire book on the topic titled “Software Transparency” where I covered the rise of these attacks, how they can be prevented, the role of regulation, software supply chain security frameworks and much more.

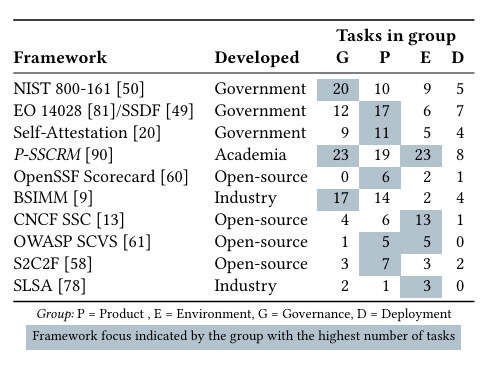

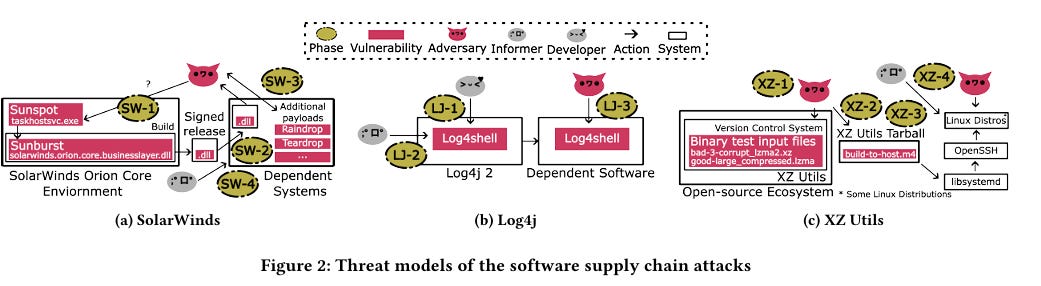

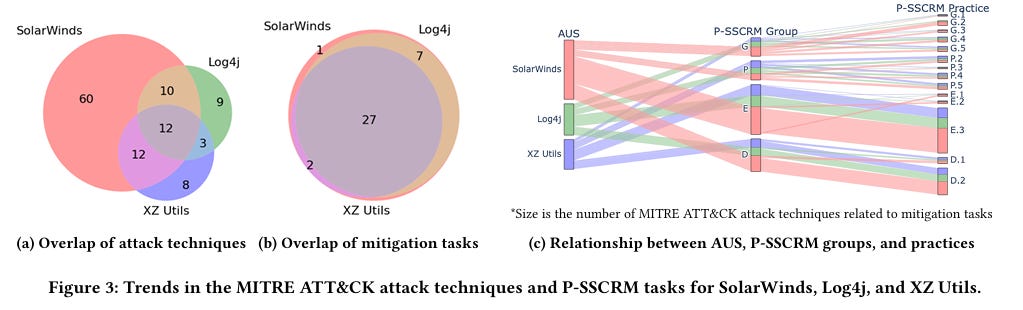

This is an interesting piece of research out of North Carolina State University, in partnership with others, diving into the attacks and supply chain security frameworks. They synthesized 73 tasks across 10 different software supply chain security frameworks and rank-prioritized the tasks that might mitigate the relevant attack techniques from the 3 incidents in the title.

The three mitigation tasks that scored the highest were role-based access control, system monitoring and boundary protection.

Below you can see the 10 frameworks they used in the research, who developed them and where the tasks got grouped:

They also provided a notional threat model of the 3 different software supply chain attacks:

Lastly, they analyzed the trends in MITRE ATT&CK techniques and the P-SSCRM tasks for the 3 incidents:

There are some good insights in here regarding software supply chain attack mitigations and controls, as well as the leading industry frameworks in this space. I will say, no single control can prevent future incidents, and there is also a reality that attackers often go for the low-hanging fruit, so in the presence of one control, they may alter their attack techniques, which of course colors the incident and techniques used and subsequently researched.

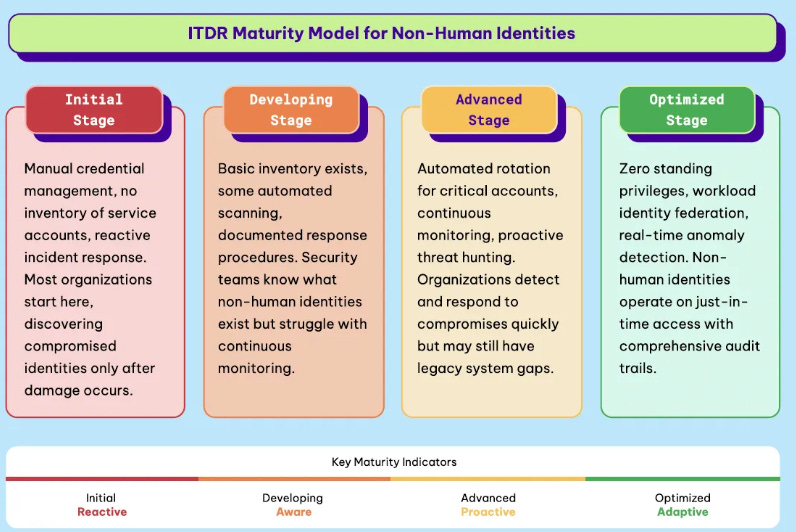

NHI Identity Threat Detection & Response (ITDR)

In the last few years, we’ve heard a lot about non-human identities (NHI) and NHI security. However, it often focuses on topics such as visibility, governance, etc. But what about Threat Detection & Response?

What does “right” look like?

I dive into that in my latest Resilient Cyber deep dive, using Permiso Security’s NHI ITDR Playbook: Detecting and Responding to NHI Compromise. Permiso’s NHI ITDR Playbook provides a lot of great insights, including:

What the compromised NHI indicators look like, including credential exposure events, privilege accumulation, and cross-boundary usage

The importance of logging, from secret management systems, CI/CD pipelines, Cloud IAM services, code repositories, and certificate authorities

An NHI risk classification scale, ranging from production data, infrastructure, and customer-facing systems down to dormant accounts and test environments

Measuring potential business impact, including mission-critical through non-essential, and estimating financial costs as well

How to contain and investigate NHI incidents and steps organizations can take to improve their NHI security overall, including key security controls with outsized ROI

My latest article, which uses the Permiso NHI ITDR Playbook as a reference, dives into this and much more, so be sure to check it out!
