Security's AI-Driven Dilemma

A discussion on the rise of AI-driven development and security's challenge and opportunity to cross the chasm

By now, it is clear that AI is fundamentally changing the landscape and future of software development, with GenAI and LLMs drastically reshaping the ecosystem over the last several years.

NVIDIA’s CEO Jensen Huang has quipped, “Kids shouldn’t learn to code.” Industry leaders such as Google say 25% of their code is now written by AI, VC firm Y Combinator has stated that 25% of its latest cohort has codebases that are 95% written by AI, and Anthropic’s CEO has claimed that within six months 90% of all code will be written by AI. Google’s 2024 DORA Report also found that 75% of developers now rely on AI coding assistants.

Even setting aside trends such as “Vibe Coding,” which I wrote about recently in an article titled “Vibe Coding Conundrums,” it is clear that AI is rewiring modern software development, from the workforce to tooling to output and much more.

That said, this has inherent security implications, too, which we will discuss below.

Additionally, security has a chance to be an early adopter, rather than a laggard, and quit perpetuating the problem of bolting on security rather than baking it in.

Velocity, Volume, and Vulnerabilities

We have already discussed how coding assistants and AI-driven development tools are seeing rapid and widespread adoption. Beyond general adoption, these tools are driving “productivity” booms. While figures vary, claimed gains in both delivery speed and code output generally range from 35% to 50%.

On the surface, this is great for businesses and developers, who are primarily focused on incentives such as revenue and speed to market rather than security. But this increased velocity and volume have implications for something else: vulnerabilities.

To level set, let’s examine the vulnerability landscape as it exists. Common Vulnerabilities and Exposures (CVE) and NIST National Vulnerability Database (NVD) meltdowns aside, security has struggled terribly to keep pace with software development for years.

As recently as March 2025, CVE growth was up 48.37% year over year (2024 to 2025), with no signs of slowing down.

This is due to various factors, such as improved and increased vulnerability discovery and disclosure, and a maturing of the vulnerability management ecosystem, but also to basic math. Historically, more applications, software, and lines of code mean more vulnerabilities and more attack surface.
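The "basic math" can be made concrete with a rough sketch. It assumes vulnerability density per thousand lines of code stays constant as output grows, which is a simplification. The baseline size and density below are hypothetical placeholders, not measured figures; only the 35% and 50% uplifts come from the productivity estimates cited earlier.

```python
def projected_vulnerabilities(
    baseline_loc: int, output_uplift: float, vulns_per_kloc: float
) -> float:
    """Estimate vulnerabilities after code output grows by `output_uplift`.

    Assumes vulnerability density per 1,000 lines stays constant,
    which is a simplification rather than a measured fact.
    """
    projected_loc = baseline_loc * (1 + output_uplift)
    return projected_loc / 1000 * vulns_per_kloc


# Hypothetical inputs, chosen only to make the arithmetic concrete.
BASELINE_LOC = 1_000_000
VULNS_PER_KLOC = 0.5

for uplift in (0.0, 0.35, 0.50):
    estimate = projected_vulnerabilities(BASELINE_LOC, uplift, VULNS_PER_KLOC)
    print(f"output uplift {uplift:.0%}: ~{estimate:.0f} expected vulnerabilities")
```

Under these assumptions the vulnerability count scales linearly with code output, so any backlog grows at least as fast as delivery speed unless remediation capacity grows with it.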

This has manifested in massive vulnerability backlogs in the hundreds of thousands in many enterprise environments, with security unable to keep pace with the growing attack surface, increased pace of software development, and competing priorities for the business, such as feature releases, customer satisfaction, speed to market, and revenue.

Security has tried various methods such as “shift left” and “DevSecOps,” but these have largely been implemented by throwing an acronym soup of security tooling (e.g., SAST, DAST, IaC scanning, container scanning, et al.) into a CI/CD pipeline. Much of that tooling suffers from low fidelity, data quality issues, and little to no application or organizational context, bolstering the silos between Security and Development, ironically the same silos that DevSecOps was supposed to break down.

You don’t need to be a genius to see the likely implications for this problem and its future exacerbation when we consider the widespread adoption of Copilots, Coding Assistants, and general AI-driven development.

You might be asking, won’t the coding assistants and AI tooling produce more secure code?

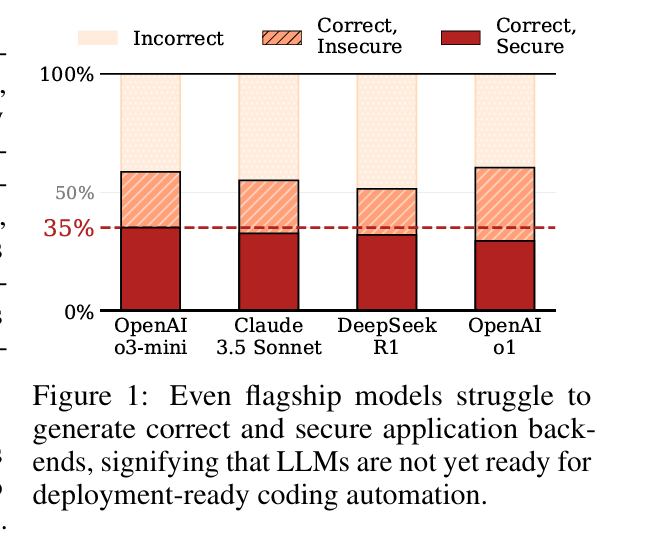

Well, not quite, at least not yet.

Various researchers and interested parties across the industry are looking into this, and right now, it’s problematic. One source found that 62% of solutions generated by AI aren’t correct and/or contain security vulnerabilities. Another study found that 29.6% of AI-generated code snippets have security weaknesses.

Despite these findings, surveys show that developers overestimate the security of AI-developed code and often inherently trust its output without further validation or verification from a security perspective. Additional studies claim that the widespread adoption of AI coding tools may cause skills to rot, from critical thinking to expertise in the nuances of software development.

Additionally, there is an “AI Feedback Loop”: as AI generates a growing share of modern codebases, that AI-generated code is subsequently used to train future AI models. Training on potentially insecure and vulnerable code has a reverberating impact, amplifying future security ramifications.

This problem admittedly isn’t unique to AI-developed code, either. In fact, the foundational AI models behind modern coding assistants don’t produce organic code created from the ether; instead, they were trained on the massive open-source software ecosystem.

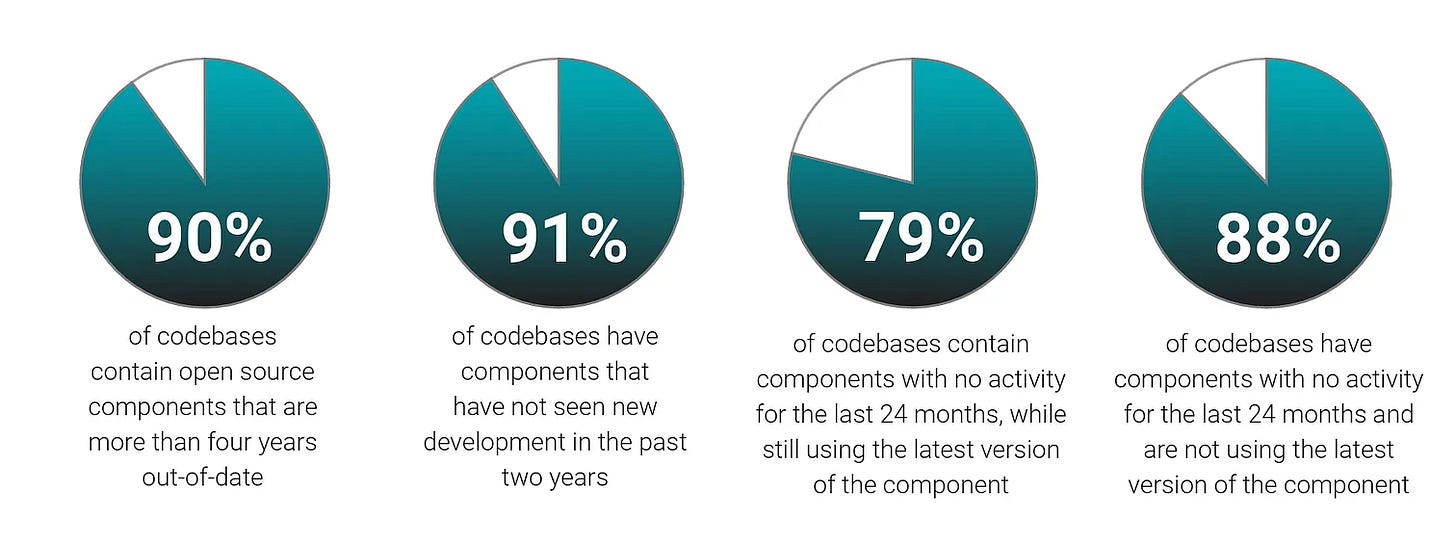

As I wrote in an article titled “The 2025 Open Source Security Landscape,” open source adoption is already seeing exponential year-over-year growth, with 97% of codebases containing open source and the number of open source files in an average application tripling in just the last four years. This open-source code suffers from both vulnerability and sustainability issues.

As seen above, vulnerabilities in the open source code are pervasive. The overwhelming majority of the open source components are several years out of date and, in many cases, haven’t seen new development in years, demonstrating that the vulnerabilities are unlikely to be remediated anytime soon.

None of this is to say open source is inherently bad or less secure than proprietary code. In fact, as evidenced above, the majority of commercial code has its origins in open source.

The same applies to the leading frontier AI models and the code they’ve been trained on, and now subsequently help developers create and distribute into the modern software ecosystem.

Governance - Models, Code, and Integrations

A fundamental part of the AI-driven development challenge is governance. This includes models, code, and integrations. On the models front, as demonstrated by research such as BaxBench, not all AI models are created equal in their ability to write code, including secure code. Organizations will need governance around which Copilots, Coding Assistants, and Models are used for their software development activities.
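As a minimal sketch of what model governance could look like in practice, the snippet below checks a model against an allowlist and a minimum secure-coding score. Everything here is an assumption for illustration: the `ModelPolicy` class, the model names, and the score (imagined as coming from an internal benchmark on a 0.0 to 1.0 scale) are hypothetical and do not reflect BaxBench's format or any vendor's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelPolicy:
    """Hypothetical organizational policy for AI coding tools."""

    approved_models: frozenset
    min_secure_coding_score: float  # threshold on an internal benchmark, 0.0 to 1.0


def is_model_allowed(
    policy: ModelPolicy, model_name: str, secure_coding_score: float
) -> bool:
    """Allow a model only if it is on the allowlist and meets the score threshold."""
    return (
        model_name in policy.approved_models
        and secure_coding_score >= policy.min_secure_coding_score
    )


# Placeholder model names and scores, not real products or results.
policy = ModelPolicy(
    approved_models=frozenset({"example-model-a", "example-model-b"}),
    min_secure_coding_score=0.6,
)

print(is_model_allowed(policy, "example-model-a", 0.72))   # approved and above threshold
print(is_model_allowed(policy, "example-model-a", 0.41))   # approved but below threshold
print(is_model_allowed(policy, "unreviewed-model", 0.90))  # not on the allowlist
```

Keeping the policy as data rather than scattered conditionals makes it easier to review and update as new models and benchmark results arrive.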

On the code front, this includes risk-based decision making around what code to include in applications, products, and systems based on deep context, well beyond just known vulnerabilities. This includes known exploitation, exploitation probability, reachability, business context, and more. The legacy vulnerability management approach of burdening development teams with low-context, poor-quality security scan outputs and dragging velocity to a crawl, functioning as a business tax rather than an enabler, is dead. Developers know it, and it’s exactly why they proactively work around, rather than with, security.
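To illustrate the difference between low-context scan output and risk-based prioritization, here is a rough sketch that ranks findings using the signals named above: known exploitation, exploitation probability, reachability, and business context. The `Finding` fields, the weights, and the identifiers are all hypothetical; a real program would tune these against its own data rather than adopt them as given.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    """A vulnerability finding enriched with context (hypothetical schema)."""

    identifier: str
    known_exploited: bool       # evidence of exploitation in the wild
    exploit_probability: float  # estimated likelihood of exploitation, 0.0 to 1.0
    reachable: bool             # is the vulnerable code actually callable?
    business_critical: bool     # does it sit in a critical application?


def priority_score(finding: Finding) -> float:
    """Combine context signals into one score; the weights are illustrative only."""
    score = 1.0 if finding.known_exploited else finding.exploit_probability
    if not finding.reachable:
        score *= 0.1  # unreachable code is heavily down-weighted, not ignored
    if finding.business_critical:
        score *= 2.0
    return score


def prioritize(findings: list) -> list:
    """Return findings ordered from highest to lowest priority."""
    return sorted(findings, key=priority_score, reverse=True)


findings = [
    Finding("FINDING-C", known_exploited=False, exploit_probability=0.9,
            reachable=False, business_critical=False),
    Finding("FINDING-A", known_exploited=True, exploit_probability=0.5,
            reachable=True, business_critical=True),
    Finding("FINDING-B", known_exploited=False, exploit_probability=0.3,
            reachable=True, business_critical=False),
]

for finding in prioritize(findings):
    print(finding.identifier, round(priority_score(finding), 2))
```

Note that the finding with the highest raw exploitation probability ranks last here because it is unreachable, which is the kind of context a severity-only scan cannot express.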

When it comes to integrations, we’re seeing explosive growth and excitement around the supporting protocols and methodologies that empower not just AI-driven development but entire agentic architectures, with the rise of things such as the Model Context Protocol (MCP) and the Agent2Agent Protocol.

We will continue to see widespread adoption of AI-driven development, along with entire agentic architectures and workflows in rapidly developed applications and software that coordinate internally among agents and externally with data sources, tools, and services.

Security’s Opportunity to Cross the Chasm

Security has historically been a friction point for the business, dubbed a “soul-withering chore” by developers. It is often seen as the “office of no” and is generally viewed as a necessary evil by the business.

This is due to many of the issues I discussed above, coupled with security’s continued use of legacy methodologies, paper-based compliance processes, inability to articulate value to the business, and an inherently risk-averse culture.

The classic technology marketing and sales book “Crossing the Chasm” outlines a technology adoption lifecycle comprising Innovators, Early Adopters, Early Majority, Late Majority, and Laggards.

Security almost always functions as a laggard.

While the business is quickly adopting Cloud, Mobile, SaaS, AI, and whatever comes next, security historically sits back, hand-wringing over hypothetical and real risks, emphasizing excessive caution, and inevitably being left behind.

This is why we hear phrases like “security needs to be built in, not bolted on.” While everyone in security will hear that phrase and nod their head, our behaviors, interactions with development and engineering peers, and how we carry out “security” within and across the business tell a different story.

We perpetuate problems of the past by living as laggards.

However, we have a real decision and opportunity ahead of us: continue to perpetuate our problems from the past, or instead become an early adopter, and even an innovator, by moving ourselves earlier in the technology adoption lifecycle.

We already know developers and organizations are quickly adopting AI and AI-driven development. A recent report shows that enterprise adoption of AI agents is also growing rapidly, with pilots nearly doubling from 37% to 65% in just one quarter.

I’ve discussed Agentic AI’s Intersection with Cybersecurity in a piece with the same title. Startups, innovators, and investors are exploring the potential for Agentic AI in areas such as GRC, SecOps, and AppSec, the last of which is the primary focus of this article.

Agentic AI offers security the opportunity to address systemic challenges, such as vulnerability management, workforce constraints, and longstanding friction and frustration.

This includes identifying misconfigurations and vulnerabilities and actively maintaining application security in source code and runtime through proactive identification, recommendations, and, in time, remediation.

Multiple AI agents can be used to scale activities such as secure code reviews across thousands of pull requests, bring rich code context with deep security insights, and reduce the manual review workload that has plagued security teams, who are substantially outnumbered by developers across their organizations.

Much as AI is increasing developer productivity, it can function as a force multiplier for security, scaling security tasks and activities to a level that was historically impossible with human labor alone.

The rise of protocols such as MCP allows security tooling to integrate with AI coding assistants and embed directly into native developer workflows, catching flaws and vulnerabilities before they’re committed and providing in-context code reviews and suggestions for developers in areas such as safe/secure libraries, upgrades, and remediations.

One key point to emphasize about MCP and Agentic AI amid all the industry crazes is that the extensibility of MCP and these workflows is only as valuable as the quality of the data, tools, and services you’re integrating with.

If you integrate with legacy, low-fidelity, low-quality tooling and services, you’re just replicating the problems I discussed above. Instead of low-quality security tools and findings living in CI/CD workflows, those problems are now brought to the IDE, arguably making developers feel the frustration and pain more acutely than before, rather than improving organizational security outcomes.

One such example comes from AppSec leader Endor Labs, where I serve as the Chief Security Advisor. The demo below shows Endor Labs' MCP Server capability working with VSCode and Copilot: a user can ask plain-language questions about code vulnerabilities and even instruct that vulnerabilities in code dependencies be remediated, all natively without disrupting the developer workflow.

This includes in-context code review within leading IDEs, accurate and prioritized results for remediation, suggestions for safer libraries, and upgrades with context on potential breaking changes.

This demonstrates the ability, via MCP and LLMs, to integrate with developer tooling and workflows to improve an organization's security outcomes.

Closing Thoughts

As I have discussed throughout the article, AI-driven development is drastically changing how modern software development occurs. Security now finds itself at a critical crossroads. We can perpetuate the problems of the past and stay a laggard, or we can cross the chasm and position ourselves as early adopters and innovators with this emerging technology.

We know what our business, development, and engineering peers, as well as malicious actors, are doing.

From this point forward, what we do will determine whether security stays bolted on as an afterthought or becomes built in by design.

Do we really want our industry to have a legacy as a laggard?

The idea that "security is always a laggard" misses the point. Security must cover everything, old and new, while others pursue the latest trend. Diffusion models like "Crossing the Chasm" are business concepts, not operational realities. Security has to defend legacy and cutting-edge tech at the same time.

This isn’t about falling behind; it’s about managing risk everywhere, all the time, often with limited and fixed resources. New technology, pushed by broken incentives, offloads risk onto security teams and users: a textbook moral hazard.

Not all security teams lag, and some businesses invest in resilience and involve security early. But the “chasm” narrative isn’t natural law. It’s an unquestioned cycle that traps security in an impossible role. Maybe it’s time to question the premise itself, rather than just play along. Follow the money: who benefits from it? All this "AI".. who is it really for?

This comment by Chris is so spot on and succinct. He captures the DevSecOps movement perfectly.

"Security has tried various methods such as “shift left” and “DevSecOps”, but this has largely been implemented as throwing a slew of acronym soup security tooling (e.g. SAST, DAST, IaC, Container Scanning et. al) into a CI/CD pipeline, much of the tooling with low fidelity, data quality issues and little to no application or organizational context, bolstering silos between Security <> Development, ironically the same silos that DevSecOps was supposed to improve."