Software's Iron Triangle: Cheap, Fast and Good - Pick Two

A look at the ongoing discussion around Security being a subset of Software Quality

There is an ongoing discussion in the cybersecurity ecosystem that we don’t have a security problem, we have a quality problem.

In fact, CISA’s Director, Jen Easterly, just spoke at Black Hat and is quoted as saying:

“We don’t have a cybersecurity problem. We have a software quality problem.”

“We have a multi-billion dollar cybersecurity industry because for decades, technology vendors have been allowed to create defective, insecure, flawed software.”

To be clear, the idea that security is a subset of quality isn’t new. It has long been said in our industry, but it is getting renewed focus amid the never-ending onslaught of security incidents, data breaches, supply chain attacks and more - all predicated on software, products and services which are rife with vulnerabilities, misconfigurations and other characteristics facilitating their exploitation.

In this article we will take a look at the related topics, some of the challenges leading to this situation and potential paths forward on addressing ongoing poor software quality (including insecurity).


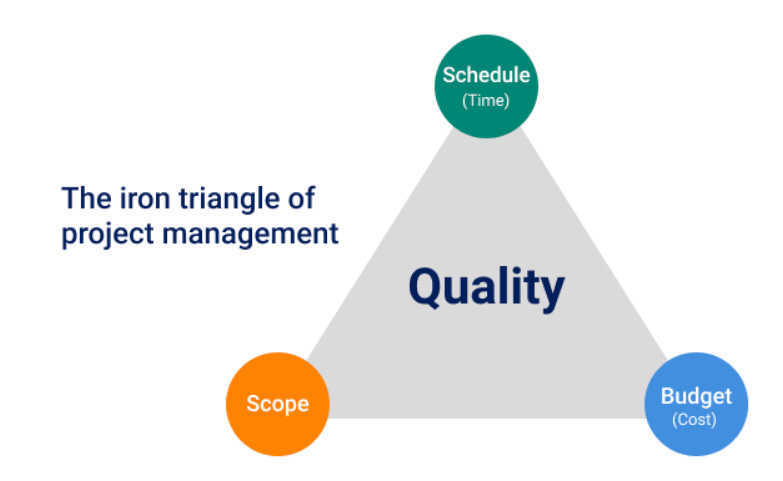

The Iron Triangle

Much like everything else in cybersecurity, we tend to borrow concepts from other fields, domains and aspects of society. The iron triangle is no different, as it is a concept long used in the field of Project Management and business when discussing quality.

At its core, it is a triangle laying out the constraints of quality, being Schedule (Time), Budget (Cost) and Scope.

Less formally, it is often quipped in business “good, cheap, and fast: pick two”.

It is further expanded as below:

Fast and cheap: The quality will be poor

Fast and good: It will be expensive

Good and cheap: It will take a long time

Or, as the joke goes, the combination of all three is like finding a unicorn: it’s a fantasy.
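The pick-two rule can be sketched as a small lookup. This is purely illustrative: the function name `sacrificed` and the property labels are assumptions made for the example, not part of any project management standard.

```python
from itertools import combinations

# The three desirable properties from the "pick two" quip.
PROPERTIES = ("fast", "cheap", "good")

# What you give up when a given property is the one left out
# (wording taken from the list in the article).
CONSEQUENCE = {
    "good": "the quality will be poor",
    "cheap": "it will be expensive",
    "fast": "it will take a long time",
}


def sacrificed(picked):
    """Return the consequence of picking exactly two properties."""
    chosen = set(picked)
    if len(chosen) != 2 or not chosen <= set(PROPERTIES):
        raise ValueError("pick exactly two of: fast, cheap, good")
    # Exactly one property remains once two have been chosen.
    (left_out,) = set(PROPERTIES) - chosen
    return CONSEQUENCE[left_out]


if __name__ == "__main__":
    for pair in combinations(PROPERTIES, 2):
        print(f"{pair[0]} and {pair[1]}: {sacrificed(pair)}")
```

Asking for all three raises an error, which is the unicorn case in code form.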

The same applies in cybersecurity when it comes to the software industry. While we stress that we want high-quality (secure) products, we’re dealing with constraints, and there are a number of other unique factors at play as well.

These include examples such as information asymmetries between producers and consumers, constraints and competing interests, and a lack of a universal definition of quality - all of which contribute to the reality that cybersecurity is a market failure.

Information Asymmetries

One of the largest problems in the cybersecurity industry is the information asymmetry that exists between producers and consumers.

This occurs on multiple fronts, some related to the broader security ecosystem and its dynamics, and some related directly to the relationship between software and product suppliers and consumers, and to the products themselves.

In the fallout from software supply chain attacks such as SolarWinds and Log4j, along with the Cybersecurity Executive Order (EO), the industry woke up to the realization that most organizations don’t know what the hell is in the products they purchase and consume, nor the rigor of the process that produced them.

We’ve seen pushes for artifacts such as Software Bills of Materials (SBOMs) to try to address the fact that software manufacturers have not historically provided consumers and customers with transparency about what is in their products, including the extensive use of third-party and open source components.

This has been met with mixed emotions, as some claim it is a “roadmap for the attacker” and others have pointed out issues with SBOM data quality, tool maturity and more.

The truth is much closer to the fact that suppliers often don’t know the full extent of the components that make up their products, and/or are reluctant to share that information given it may reveal outdated components, extensive vulnerabilities and other defects and deficiencies that don’t reflect well on them.

Given that they historically haven’t been required, or even asked, to provide this level of transparency, they aren’t inclined to give it.

We also generally have a lack of transparency around the rigor, methodology and maturity of how software was developed. The industry historically has oriented around static, irrelevant questionnaires and checklists which are largely bemoaned by both parties to the sales transaction. Even so, those inquiries have historically placed little emphasis on the software development methodology itself or its focus on security and governance.

That has also started to shift, much like SBOM, led by the U.S. Federal government, which has pushed for all of its software suppliers to begin to self-attest to adherence to the NIST Secure Software Development Framework (SSDF).

While there are inevitable concerns around self-attestations, and how artifacts like the self-attestations and SBOMs will be used, they do demonstrate an effort by the U.S. Federal government to use its immense purchasing power (tens of billions annually on IT) to drive systemic changes.

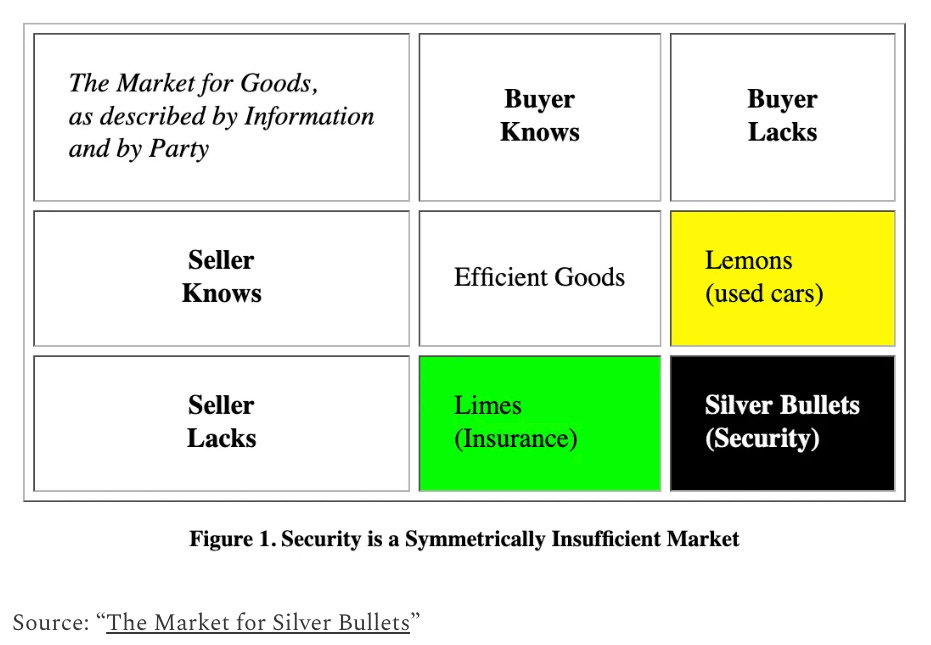

Another fundamental challenge is that cybersecurity is a market for silver bullets.

Many are familiar with the concept of “a market for lemons”, where a supplier/seller has more information than the consumer/buyer, such as in used car sales. As discussed above regarding SBOM, SSDF and software transparency, the argument can be made that this is indeed true of software to some extent.

However, in cybersecurity, not only does the consumer lack information, the seller also lacks significant substantiated data too, such as how truly effective their products, solutions and software are.

That is why we see so many outlandish, marketing-driven buzzword claims from security and software product vendors.

For a much deeper dive on this topic, I recommend reading “Cybersecurity is not a market for lemons. It is a market for silver bullets” at Venture in Security, which was written by Ross Haleliuk and Mayank Dhiman, building on previous pieces such as Ian Grigg’s “The Market for Silver Bullets” and thoughts from security luminary Gene Spafford.

Constraints & Competing Interests

Among the challenges to the software iron triangle are constraints and competing interests.

These include the challenge that security leaders, like others, have finite budgets. Unlike many other business areas, however, cybersecurity is historically viewed as a cost center, or even a necessary evil.

Many do not enjoy engaging with security teams (much of which is our own fault for the way we behave and engage business peers, but that’s another article all of its own). Even developers have called security a “soul withering chore”. This is due to the fact that it can be cumbersome, frustrating, and simply not as exciting as working on and building something new.

We also have competing interests with the business such as speed to market, revenue, feature velocity, customer acquisition and broader competition. While some have cited increasing cybersecurity budgets in 2024, others point out that historically, security budgets get increased after an incident, because beforehand it is easy to push back with arguments such as “nothing bad has happened yet, so we must be fine”.

We’re also seeing a massive debate around best of breed vs. platforms in the cybersecurity space, as cybersecurity tool sprawl continues to be a challenge, with some estimates saying organizations have between 70 and 90 security tools.

Security implications aside, having this many tools has cost ramifications, as well as time commitments, leaning into the software iron triangle of time, cost and scope (capability) across the tools.

Software and security buyers have to consider each constraint, how quickly they need software, how much it will cost, and what capabilities and quality it must have - including how “secure” it is (assuming they can even measure such a thing, which we will discuss below).

Security has a cost

Within the conversation around security being a subset of quality is the truth that building a higher quality (more secure and capable) product has a cost.

While not a perfect example, one such case that has come into the limelight lately is that of Single Sign-On (SSO). Many, including Government leaders, have taken to social media and traditional media outlets to share frustrations with product vendors who up-charge for features such as SSO.

The scenario is so widely discussed that it has been dubbed an “SSO tax”, with CISA even citing it as a factor that can inhibit SMBs from deploying SSO, ultimately hindering their security posture. There is also a site called “The SSO Wall of Shame”, which is a list of vendors that treat SSO as a luxury feature, not a core security requirement.

On one hand the criticism makes sense, as SSO is easily one of the most fundamental security controls for mitigating widespread identity-based attacks. On the other, it raises a bigger question of what counts as a “core security requirement” and, most importantly, whether we as customers and consumers are collectively willing to pay for it.

Some have even gone so far as to call Cybersecurity a “Human Right”. However, much like Healthcare, if something is claimed to be a human right, someone actually has to pay for it.

Ethical and philosophical discussions aside, if we want to claim specific security features, functionality and capabilities as core security requirements, we have to be prepared to pay additional costs for organizations to develop and integrate them into their software and products.

Not only do secure products cost more financially, they also take time to develop.

Are we willing to pay the costs financially and/or incur delays to the latest product releases and iterations if it means obtaining a higher quality more secure product?

This of course is a corner of the software iron triangle.

On the flip side, vendors are faced with similar constraints. Many innovative software vendors are venture backed, with pressures from investors, shareholders and others to grow, acquire customers, gain market share, chase metrics such as Annual Recurring Revenue (ARR) and more.

Those stakeholders aren’t interested in hearing how “secure” a product is, unless it can be tangibly tied to some sort of ROI in the market.

These vendors also have finite time, resources, expertise and capital. All of which must be strategically deployed to grow the organization and drive return for investors and stakeholders.

Making a product more “secure” when it isn’t being demanded by customers or forced via regulation is hard to justify as having a ROI on the deployment and utilization of those scarce resources.

What is “Quality”?

Underpinning all of the discussion of the software iron triangle is the question of what is “secure”?

As an industry we have no universal definition of what “secure” means.

When you look across the industry you see countless perspectives on how to make something secure, or how to measure something’s security.

Guidance comes from Center for Internet Security (CIS) Benchmarks, DISA Security Technical Implementation Guides (STIGs), vendor guides and best practices, and publications from the Cloud Security Alliance (CSA), the Cloud Native Computing Foundation (CNCF), the National Security Agency (NSA), the Cybersecurity and Infrastructure Security Agency (CISA) and countless others.

Aside from the fact that we’re “best practices” rich and implementation poor, we don’t even have a universal agreement on what secure means.

This is due to the fact that cybersecurity is ultimately risk management.

And risk management exists on a spectrum, with each individual, organization, consumer, and supplier having varying levels of risk tolerance.

We’re not in the business of eliminating risk, we’re in the business of managing it.

Couple that with the harsh truth that as an industry we’re god awful at even managing it. We largely use opaque subjective qualitative risk measurement methodologies like Low, Moderate, High or Critical, based on low quality data.

There are of course attempts to quantify risk in cybersecurity, with leaders such as Richard Seiersen and Doug Hubbard publishing books such as “How to Measure Anything in Cybersecurity Risk”, or Jack Jones and the FAIR Institute/Model, which seek to enable cybersecurity risk quantification.

However, these efforts are often outliers rather than industry standards, as most organizations still struggle with adopting and implementing them at scale.
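To make the contrast with Low/Moderate/High labels concrete, here is a minimal sketch of what a quantitative estimate can look like. It is not the FAIR model, nor the method from the book mentioned above; the function name, the example probability and the loss range are all hypothetical, and the uniform loss distribution is a simplifying assumption.

```python
import random


def simulate_annual_loss(event_probability, loss_low, loss_high,
                         trials=10_000, seed=0):
    """Monte Carlo estimate of annual loss from a single risk scenario.

    Each trial represents one simulated year: the event either occurs
    (with probability `event_probability`) or it does not. If it occurs,
    the loss is drawn uniformly between `loss_low` and `loss_high`
    (an assumed distribution chosen only for simplicity).
    """
    rng = random.Random(seed)  # seeded so repeated runs give the same result
    losses = []
    for _ in range(trials):
        if rng.random() < event_probability:
            losses.append(rng.uniform(loss_low, loss_high))
        else:
            losses.append(0.0)
    losses.sort()
    return {
        "mean": sum(losses) / trials,
        # Loss that is exceeded in only about 5% of simulated years.
        "p95": losses[int(0.95 * trials)],
    }


if __name__ == "__main__":
    # Hypothetical scenario: 10% chance per year, loss of $50k to $500k.
    result = simulate_annual_loss(0.10, 50_000, 500_000)
    print(f"expected annual loss: ${result['mean']:,.0f}")
    print(f"95th percentile loss: ${result['p95']:,.0f}")
```

For the hypothetical scenario shown, the expected annual loss works out analytically to 0.10 × $275,000 = $27,500, and the simulated mean should land near that figure. Unlike a "Moderate" label, a dollar range like this can be compared directly against the cost of a control.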

Even in the discussion around secure software development we lack a clear path forward. The latest U.S. National Cybersecurity Strategy (NCS) re-introduced concepts such as Software Liability and Safe Harbor (which got revitalized recently with the CrowdStrike outage), but those topics are far from settled.

Many have speculated about what a Software Liability regime would even look like, what would be used as a universal standard to measure software security, who would measure it, and what level of “secure” would grant a vendor safe harbor from litigation for not being “secure” enough or not doing enough due diligence.

For deeper dives on these topics, see Jim Dempsey’s publication with Lawfare titled “Standards for Software Liability: Focus on the Product for Liability, Focus on the Process for Safe Harbor”.

In the paper Jim tackles topics such as:

How buggy is too buggy

Comparing software to other fields

What frameworks could (and couldn’t) work for liability

So if as a whole we can’t even define a universal standard of quality (security), how can we reasonably expect to hold vendors to a standard of quality that we cannot even articulate?

We can’t.

Trying to do so would create a scenario where every vendor is held to some subjective, opaque definition, which is far from just.

Market Failure

Another longstanding concept is that cybersecurity is a market failure.

Market failures occur when individuals acting in rational self-interest produce a sub-optimal outcome, resulting in an inefficient allocation of resources.

In this case, the inefficient allocation concerns the resources devoted to producing secure software (should we be able to define it): vendors act in their rational self-interest to maximize profits, while externalizing the cost and consequences of insecure software and products onto consumers.

The cost is essentially externalized onto the whole-of-society now, given software powers everything from consumer goods to critical infrastructure and national security - all of which is underpinned by digital technologies.

This is something that has been publicly discussed for a long time, with Jane Chong publishing a series of posts at Lawfare titled “Bad Code: The Whole Series” and Paul Rosenzweig publishing an article titled “Cybersecurity and the Least Cost Avoider”.

It is worth pointing out that both of these resources are a decade old.

The pieces make the argument that software vendors should be liable and responsible for unreasonable defects in their code/products (should we be able to define unreasonable).

This argument resurfaces in the U.S. National Cybersecurity Strategy (NCS), specifically Pillar Three, “Shape Market Forces to Drive Security and Resilience”, which discusses:

Hold the stewards of our data accountable

Shift liability for insecure software products and services

The challenge, of course, as discussed above, is that we can’t define what an insecure product is, how to measure it, or how someone should be held liable, or safeguarded, when it comes to their product’s security, or lack thereof.

In relation to the theme of the software iron triangle, vendors are clearly optimizing to select schedule (speed to market) and cost (neglecting sufficient security) in exchange for rational self-interests such as market share, revenue, customer acquisition/retention, feature velocity and competition with similar products and vendors.

If the customer isn’t demanding (and willing to pay for) security, and there aren’t sufficient regulatory requirements or consequences for not investing in quality (security), why would they?

They won’t.

It’s not in their rational self-interest, which is maximizing shareholder value.
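The externality argument above can be made concrete with a little arithmetic. The sketch below is illustrative only: the function name and every number in it are hypothetical, chosen to show how the same investment can look irrational to a vendor and worthwhile to society when most of the harm lands on someone else.

```python
def net_benefit_of_security(security_cost, expected_breach_loss,
                            risk_reduction, share_of_loss_borne):
    """Net payoff of a security investment to one decision maker.

    All inputs are hypothetical illustration values, not real data.
    - security_cost: what the vendor spends on security
    - expected_breach_loss: total expected loss across vendor and customers
    - risk_reduction: fraction of that loss the investment avoids (0 to 1)
    - share_of_loss_borne: fraction of the loss that lands on the decision maker
    """
    avoided_loss = expected_breach_loss * risk_reduction
    return avoided_loss * share_of_loss_borne - security_cost


if __name__ == "__main__":
    cost, loss, reduction = 2_000_000, 10_000_000, 0.5

    # Vendor bears only a quarter of the harm; customers absorb the rest.
    vendor_view = net_benefit_of_security(cost, loss, reduction, 0.25)
    # Society as a whole bears all of the harm.
    society_view = net_benefit_of_security(cost, loss, reduction, 1.0)

    print(f"vendor:  {vendor_view:,.0f}")
    print(f"society: {society_view:,.0f}")
```

With these made-up figures the vendor comes out 750,000 behind by investing, while society comes out 3,000,000 ahead. Raising `share_of_loss_borne` toward 1.0, which is roughly what shifting liability onto vendors would do, is what flips the vendor's answer.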

Role for Regulation?

All of the discussions above raise the inevitable question: what is the role here for regulation?

We’re seeing tremendous amounts of discussion around why and how the software industry should be regulated, and how to build on existing regulations and requirements.

Some, such as the EU, have charged ahead with fairly rigorous requirements, especially in areas like AI, but others have speculated that this will hinder the region economically more than it helps.

Here in the U.S. the software and vendor community is already wrestling with an enormously complex and cumbersome regulatory landscape, leading the U.S. Office of the National Cyber Director (ONCD) to release a Request for Information (RFI) to the public, focused on “Cybersecurity Regulatory Harmonization”.

Within it, concepts such as regulatory overlap, complexity and reciprocity are discussed.

The ONCD and White House recently released their “Summary of the 2023 Cybersecurity Regulatory Harmonization RFI”.

So while we’re seeing a push to harmonize the existing regulatory landscape due to its complexity and challenges, concurrent pushes to implement Software Liability and Safe Harbor inevitably further muddy the waters.

We also need to be careful what we wish for.

As we have seen with other efforts (e.g. Border, Drugs, Education and more) the outcome can be the inverse of our aspirations.

In short, the cure can be worse than the disease.

Conclusion

So while it is widely agreed that we don’t have a cybersecurity problem, but a quality problem, it is also clear that we have constraints in the software iron triangle for the various entities involved (e.g. vendors/suppliers and consumers).

We also have fundamental challenges such as a lack of universal definition of what “secure” is, how to measure it, who should measure it, and how claims should be validated, coupled with challenges around liability and safe harbor.

These discussions don’t even touch on broader discussions such as the ever present cybersecurity workforce challenge, the role of customers in configurations, usage and security incidents and much more.

If our goal is higher quality software, we should start by defining what quality is, and how we intend to measure it.

Until then, it feels good to say, but doesn’t mean much.