Resilient Cyber Newsletter #48

Coinbase Incident - Markets Yawn, Palo Alto Earnings Call, MCP Potential & Pitfalls, Security for High Velocity Engineering, & AI Slop

Welcome!

Welcome to issue #48 of the Resilient Cyber Newsletter.

Things continue to be incredibly active in the community, with earnings calls, incidents, AI resources, an emphasis on MCP’s integral role, and insights around the vulnerability and AppSec space.

I hope you enjoy all of the resources this week!

Interested in sponsoring an issue of Resilient Cyber?

This includes reaching over 45,000 subscribers, ranging from Developers, Engineers, and Architects to CISOs/Security Leaders and Business Executives.

Reach out below!

Break Security Silos. Accelerate Cloud Defense.

Unified security from code to cloud to SOC.

Tool sprawl and team silos don’t just slow response—they increase risk. This guide dives into how AI, automation, and unified data bring AppSec, CloudSec, and SecOps together to detect threats faster and act with precision. It’s a smart read for security leaders rethinking their architecture.

Cyber Leadership & Market Dynamics

Coinbase Incident - Markets Yawn

If you’ve been following me or others like Kelly Shortridge, you’ve likely heard the phrase “Markets DGAF about Cybersecurity”. Public markets don’t care much about cybersecurity, including incidents impacting organizations, and I’ve shared research before demonstrating that markets often shrug when incidents occur. Research shows that share prices may dip slightly, but they usually recover to pre-incident levels in less than 50 days.

That seems to be the case again with Coinbase's recent incident. Coinbase was involved in an insider-driven compromise via social engineering, including a $20M ransom demand. Following the incident, Coinbase’s stock rose.

In this article from X-Analytics, they mention that the projected financial impact to Coinbase is about $50M, or 0.8% of its annual revenue, despite public headlines that it could be $400M.
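
As a quick sanity check on those figures, the sketch below back-computes the annual revenue implied by the cited numbers. It uses only the $50M, 0.8%, and $400M values quoted above; the variable names are illustrative, and the implied revenue is a derived estimate rather than a reported figure.

```python
# Figures quoted in the X-Analytics article (as cited above).
projected_loss = 50_000_000      # projected financial impact, USD
share_of_revenue = 0.008         # 0.8% of annual revenue
headline_loss = 400_000_000      # upper figure from public headlines, USD

# Derived estimate: the annual revenue implied by "$50M is 0.8% of revenue".
implied_revenue = projected_loss / share_of_revenue

# What the headline figure would represent against that same revenue base.
headline_share = headline_loss / implied_revenue

print(f"Implied annual revenue: ${implied_revenue / 1e9:.2f}B")
print(f"Headline figure as share of revenue: {headline_share:.1%}")
```

Even the headline number would land in the single-digit percent range of implied revenue, which helps explain why the market reaction was muted.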

In the article, they make the argument that cyber events, even the “serious” ones, aren’t priced in as being “material” to the business value, unless:

Massive consumer trust erosion occurs

Regulatory punishment escalates

Financial performance takes a hit

The closing quote is the most damning:

“Cyber risk is still mostly noise unless it hits revenue, reputation or regulation at scale.”

Cybersecurity Salaries in 2025: Shifting priorities, rising demand for specialized roles

We have discussed the cyber workforce in several recent newsletters and articles, including widespread layoffs that impacted many in the community. This latest article examines CyberSN’s “Cybersecurity Salary Data Report 2025” and some of the key findings.

Some of the key findings include:

Specialists and leaders see pay increases

GRC and compliance roles hold steady

Generalist and support roles plateau

Skill-based hiring gains ground

The report indicates that key areas such as Cloud, IAM, DevSecOps, and Product Security are seeing intense competition for talent and strong compensation. GRC has also stayed relatively stable, which isn’t surprising given the increased regulatory and compliance requirements organizations face.

That said, generalist cyber roles have begun to stagnate, with lower salary growth overall. Organizations are slowly shifting towards skill-based hiring, meaning years of experience (YoE) aren’t an automatic slam dunk, and employers want to evaluate the skills of the people they hire.

Palo Alto Networks (PANW) Strong Q3’25 Earnings Call

Several folks in my network have begun sharing some highlights from PANW’s recent earnings call, and overall, the firm had a stellar quarter.

This includes:

Several deals in the tens of millions of dollars, largely driven by platformization and XSIAM; they attribute ~90 of the net new deals to the platformization push

200% ARR Growth (YoY) for XSIAM with a >$1M average ARR, and the CEO even stated, “on a trailing 12-month basis, XSIAM bookings are approaching $1 Billion.”

6,000 SASE customers

~3M Access Browser Seats, which is +1,100% YoY growth

$700M Acquisition of Protect AI, which was announced during RSA, and which they state represents a $15B TAM

PANW’s CEO recently went live with Jim Cramer, during which they discussed a range of topics, including the firm's performance and its Protect AI acquisition.

Russian GRU Targeting Western Logistics Entities and Technology Companies

CISA and partners released a publication explaining how Russian state-sponsored cyber campaigns have been targeting Western logistics entities and technology companies, continuing the trend of cyber being a modern pillar of warfare. This included organizations involved in providing assistance to Ukraine, which makes sense given the ongoing conflict between Russia and Ukraine.

The publication provides:

Description of targets

Initial Access TTPs

Post-Compromise TTPs

Indicators of Compromise (IoC)

And more, as well as resources organizations can use to mitigate risks from this threat actor.

AI

Resilient Cyber w/ Vineeth: Model Context Protocol (MCP) - Potential & Pitfalls

In this episode, I discuss the Model Context Protocol (MCP) with Vineeth, the OWASP GenAI Co-Lead for Agentic Application Security.

We dig into MCP's potential and pitfalls, its role in the emerging Agentic AI ecosystem, and how security practitioners should think about secure MCP enablement.

Topics include:

MCP 101, what it is and why it matters

The role of MCP as a double-edged sword, offering opportunities but additional risks and considerations from a security perspective

Vineeth's work on the "Vulnerable MCP" project, a repository of MCP risks, vulnerabilities, and corresponding mitigations

How MCP is also offering tremendous opportunities on the security-enablement side, extending security capabilities into AI-native platforms such as Claude and Cursor, with security vendors releasing their own MCP servers

Where we see MCP heading from a research and implementation perspective

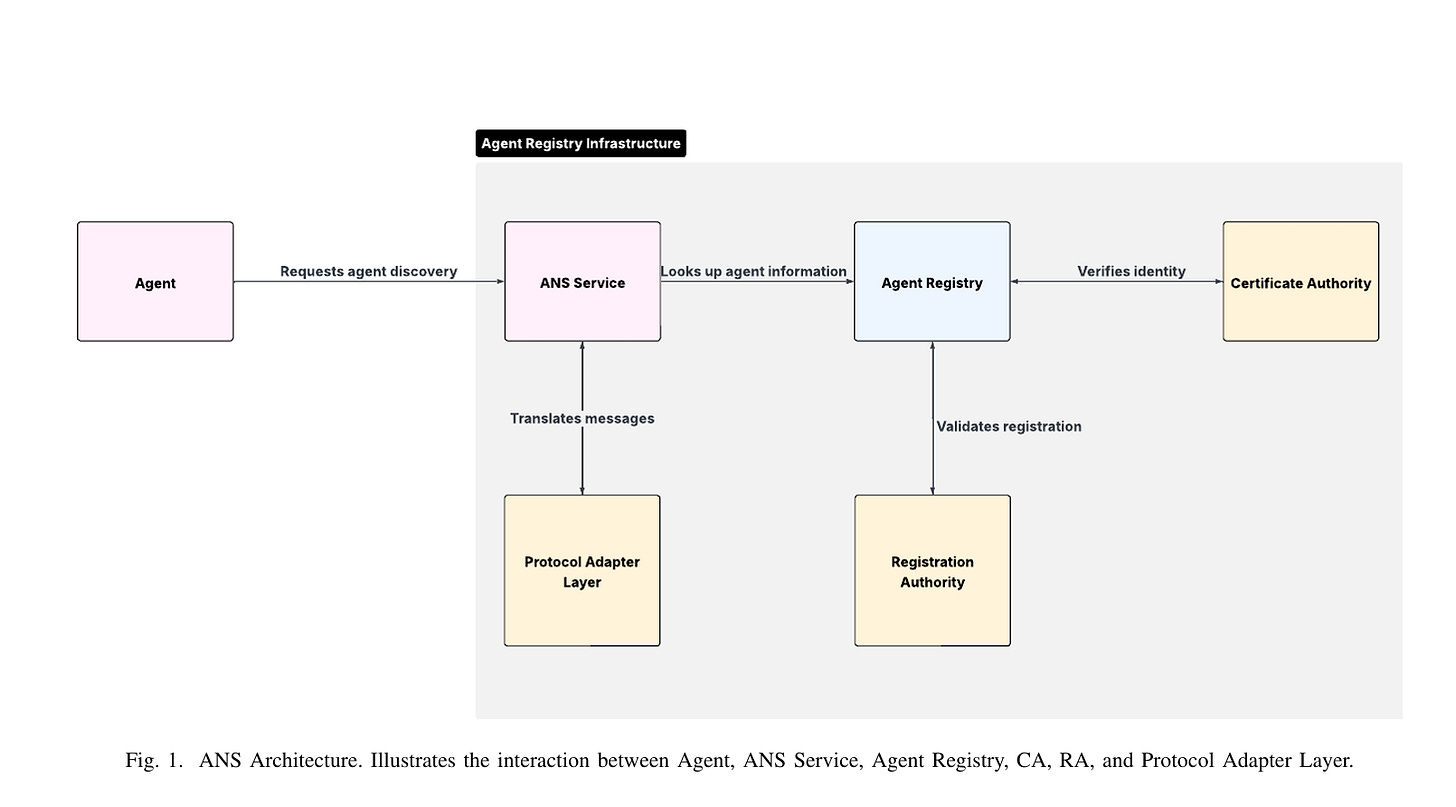

Agent Name Service (ANS): A Universal Directory for Secure AI Agent Discovery and Interoperability

Speaking of Vineeth, another major project he is involved in is the ANS effort, which is being positioned as “DNS” for agents.

This informative paper explains what ANS is and why it is needed as agents, and agentic architectures more broadly, become more commonplace, drawing inspiration from DNS. It covers core components such as an Agent Registry, Registration Authority, Certificate Authority, and more.

Awesome GenAI Cyber Hub

I stumbled across this repository, a collection of LLM applications in cybersecurity.

It includes key areas such as:

AI SOC

Vulnerability and Malware Analysis

Detection Engineering

OffSec

And more.

Threat Modeling with LLMs: Two Years In - Hype, Hope, and a Look at Gemini 2.5 Pro

We continue to see the exploration of use cases for LLMs, including in security. In this case, the researcher has spent the last two years tinkering with LLMs for threat modeling and shares thoughts on how they perform for the use case.

They cite LLMs' substantial value and utility for threat modeling and the need for expert human analysis and involvement. They also cite open-source resources such as TM Bench and others that show how various models perform on threat modeling and associated tasks.

The researcher provides a lot of great thoughts based on testing and experimentation, but also concludes that they do not see threat modeling being fully automated by LLMs in their current state and with their current capabilities.

Integrating AI Agents into Existing SOC Workflows: Best Practices

Filip Stojkovski continues to share excellent AI content, specifically around SecOps. His latest piece is more of the same, focusing on best practices for integrating AI agents into SOC workflows.

He recommends starting with the “ugliest, most soul-crushing tasks”, such as:

Alert triage in noisy, context-heavy sources such as EDR

Context gathering from 5+ tools for every incident

Enrichment that analysts always forget to do

He also discusses the need to train the team rather than just the model, including demystifying the tech, teaching prompting and delegation, participating in hands-on labs, and formalizing the role of “Agent Supervisor.”

Refreshingly, he also lays out several pitfalls to expect, especially on the integration front with changing APIs and even pushback from analysts worried about job security.

AI Agents vs. Agentic AI

There's a TON of discussion right now about AI Agents and Agentic AI. The two concepts are closely related but have some unique considerations and characteristics. This paper does a great job of helping the reader understand AI Agents and Agentic AI, what distinguishes one from the other, and the role the former plays in the latter.

It discusses their architecture, scope/complexity, the way interactions occur in Agentic AI, and the role of autonomy.

Key differentiators, such as whether a system is specific to a single task or handles complex multi-step tasks that require coordination, and whether learning is specific to a domain or spans environments.

The importance of specialized agents collaborating, decomposing tasks, persistent memory, and orchestration.

Security and Adversarial risks, including the expanded attack surface, tool manipulation, model poisoning, and more.

This is a good read for those looking to understand AI Agents and Agentic AI better and see where the industry is headed across countless verticals and use cases.

AppSec, Vulnerability Management, and Supply Chain Security

Quantifying AI's Impact on Data Risk 📊

The discussion around AI and cybersecurity can be overwhelming. It spans everything from models and prompts to open vs. closed source, supply chain, data exposure, and more.

That's why I was excited to check out Varonis's "2025 State of Data Security Report." It looked at over 1,000 real-world environments and investigated AI's impact on data risk.

Some of the key findings include:

📖 90% of organizations have exposed sensitive cloud data that can be surfaced and trained on by AI, for better or worse

👻 88% of organizations have stale ghost users, dormant accounts that still have access to applications and data, making them prime targets for compromise, lateral movement, and more.

👁️ 98% of organizations have unverified and unsanctioned AI apps, e.g., "Shadow AI", with little oversight or involvement from security teams

The report also has much more, including overly permissive accounts, unencrypted data, exposure to agentic and AI threats, and the list goes on. This is an insightful report that shows the intersection of AI, cloud, and security risks across over a thousand organizations, with a large potential for compromise and impact.
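
To make the ghost-user finding concrete, here is a minimal sketch of how a team might flag dormant but still-enabled accounts. The record fields (`name`, `enabled`, `last_login`), the sample data, and the 90-day idle threshold are all assumptions for illustration, not details from the Varonis report.

```python
from datetime import datetime, timedelta, timezone


def find_ghost_users(accounts, now, max_idle_days=90):
    """Return names of enabled accounts with no login inside the idle window.

    `accounts` is a hypothetical list of dicts; the 90-day default is an
    assumed threshold, not a value taken from the report.
    """
    cutoff = now - timedelta(days=max_idle_days)
    return [
        account["name"]
        for account in accounts
        if account["enabled"] and account["last_login"] < cutoff
    ]


# Made-up sample records for illustration only.
now = datetime(2025, 6, 1, tzinfo=timezone.utc)
accounts = [
    {"name": "alice", "enabled": True,
     "last_login": datetime(2025, 5, 20, tzinfo=timezone.utc)},
    {"name": "old-contractor", "enabled": True,
     "last_login": datetime(2024, 11, 2, tzinfo=timezone.utc)},
    {"name": "former-employee", "enabled": False,
     "last_login": datetime(2024, 1, 15, tzinfo=timezone.utc)},
]

ghosts = find_ghost_users(accounts, now)
print(ghosts)
```

Disabled accounts are skipped on purpose here: the risk described above comes from dormant accounts that still have access.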

I dive into everything in my article below, so check it out!

ASPM ’verse Virtual Conference

You’ve likely heard of “Application Security Posture Management (ASPM)”. If not, you can check out my article with Francis Odum titled “The Rise of Application Security Posture Management (ASPM) Platforms”.

The AppSec space continues to become more complex, from vulnerability management and prioritization to tooling. Throw AI into the mix, and it is poised for some fundamental transformations in how we approach AppSec.

That is why I am excited to share that I am joining Cycode for their ASPM ’verse event, where I’ll be speaking in a fireside chat on:

“The Future of Application Security: 3 Ways Agentic AI is Changing Security in 2025” 🔐🤖

We’ll dig into:

✅ How AI is reshaping modern AppSec strategies

✅ Defending against evolving vulnerabilities and supply chain threats

✅ Transforming your security posture to meet the pace of innovation

🗓 June 4, 2025

🕚 11AM ET | 8AM PT

💻 Virtual & FREE to attend!

Join me and other AppSec leaders as we explore what’s next for security in the age of AI - with this LINK.

Cyber Hard Problems 🧩

I saw Phil Venables' post about this last week, so I grabbed a physical copy and am looking forward to digging into it.

As the book points out, cyber systems now power everything from consumer goods to critical infrastructure, even playing a key role in modern geopolitics and nation-state conflicts.

The study was sponsored by the Office of the National Cyber Director, The White House, and includes support from the National Science Foundation (NSF).

It looks at some of the "cyber hard problems", including:

- The role of cyber in modern digital society

- Key considerations for cyber resiliency from engineering to complexity

- Problem spaces such as Risk Assessment and Trust, Secure Development, System Composition, Supply Chain, and Policy & Economic Incentives

- The producer perspective, including the role of software vendors and suppliers in the broader ecosystem

The research and publication aim to help inform and empower a community response.

When I saw that it involves folks such as Hyrum Anderson, Josiah Dykstra, Wendy Nather, and other industry leaders, I knew it was going to be a good one.

Security for High Velocity Engineering

I finally got the chance to read this piece from Jason Chan, which I've been wanting to dive into for a week. It didn't disappoint 🔥

Jason talks about the fundamental shifts over the years (decades) in software development and technology/business more broadly, and key methodologies to enable secure high-velocity engineering.

It covers:

🚫 Shutting down the tired mantra of "security is everyone's job!"

👌 The importance of context, strategy, and execution in effective security

🧠 How critical it is to align with the developer experience to maximize flow state, enable effective feedback loops, and minimize cognitive load.

Most security teams fail to do this effectively, which is why we get avoided and worked around, and why we perpetuate the age-old "bolted on, not built-in" security paradigm.

📈 Real-world challenges include security's sublinear growth compared to overall company growth, despite the need to support the entire business, emphasizing the need for scalability.

🛑 The reality that gates are anti-patterns for developer productivity, while guardrails facilitate flow (and engagement), along with the role of paved roads.

This is a pragmatic and informed approach to security, focusing on the importance of Context, Strategy, and, most importantly, Execution to enable secure engineering velocity and break with longstanding security anti-patterns that impede it.

curl Sick of Users Submitting “AI Slop” Vulnerabilities

We, of course, know that there is a lot of excitement around AI use cases, including potentially helping find vulnerabilities. That is promising and has a lot of potential to identify and even potentially remediate vulnerabilities one day.

However, right now, the process is far from perfect. Daniel Stenberg, the author and lead of the widely popular open source project “curl”, took to LinkedIn to discuss how they’re basically being DDoSed by AI-generated findings and reports, rather than hearing from actual vulnerability researchers.

Most of the heartache is coming via their involvement with the bug bounty platform HackerOne. Daniel outright stated the reports they’re seeing are “AI-slop”.

The article goes on to discuss Daniel reaching out to HackerOne to try to address the issue, and HackerOne's perspective on the actions they’re taking to improve the fidelity of reports, including those that involve AI tooling.

It’s All Just Software

I have been discussing how cybersecurity tools are software, much like the very same software they are designed to protect. This means they have flaws and vulnerabilities and are ultimately part of your attack surface.

That is why I was pleased to see myself cited in the latest CISO Series blog alongside folks I respect, such as Ross Haleliuk, Christofer Hoff, and others. They spoke about how my article highlights that security tools add to our attack surface and can introduce more risk than they mitigate in some cases.