Quantifying AI's Impact on Data Risk

A look at Varonis' 2025 State of Data Security Report, including insights from over 1,000 real-world environments

If you’re like me, as a cybersecurity practitioner, you’ve likely been doing your best to keep up with the rapid pace of innovation and acceleration in AI and its impact on cybersecurity.

That means tracking everything from models and prompts to open- vs. closed-source models, supply chain concerns, data exposure, and more. You’re likely also curious about the actual impact of AI on data risk. As organizations rapidly adopt this technology, security teams and leaders continue to have valid concerns around data exposure, leaks, sensitive data disclosure, and other data-centric risks.

That’s why I’m excited to read Varonis's new report, “2025 State of Data Security Report: Quantifying AI’s Impact on Data Risk: Insights from 1,000 real-world IT environments.”

Key Findings

The report starts by looking at key findings, and unsurprisingly, it states that AI adoption is outpacing security measures. This is a tale as old as time, and it is why we have phrases about security often being bolted on rather than built in.

The reasons for this perpetual cycle are deep, including longstanding fractured relationships between security and the business due to our risk-averse nature, as well as the fact that AI security tooling is lagging behind AI business-specific functionality and integrations, as is often the case with any emerging technology.

That said, how this lag plays out is where the risks are manifesting, as the findings below show:

The concerns are wide-ranging and have a strong potential for real organizational risks. For example, 90% of organizations have sensitive data publicly exposed, which could be accessed by AI and even included in subsequent models via model pre-training.

We know credential compromise has been a problem for years. As the saying goes, hackers don’t hack in; they log in. Often, the credentials they use belong to stale user accounts that were never cleaned up. The report found that 88% of organizations have stale but still enabled “ghost users,” active accounts with application and data access that aren’t actively used.

This creates situations where the accounts can be used maliciously and go undetected because they are seen as legitimate users or used to move laterally throughout environments. Couple that with the rise of Non-Human Identities (NHI), which are poised to outnumber human users/credentials exponentially, and the problem is likely to get much worse, particularly as the popularity of agentic AI grows.

As I’ve written about in my article “Bringing Security Out of the Shadows,” insecurity lives in the shadows, including AI. Rampant shadow AI usage seems pervasive, with the report finding that 98% of organizations have shadow and unsanctioned AI usage.

This again is driven by longstanding risk-averse security cultures, where the business and developers work around rather than with us due to fears of security saying “no.” We also cannot keep pace with our business peers, who are rapid adopters and innovators of new technologies. At the same time, we remain late adopters and even laggards, driving the infinite cycle of bolted-on rather than built-in security cultures.

Rounding out the key findings, almost all organizations (99%) have sensitive data dangerously exposed to AI tools. We see organizations rapidly adopting everything from LLMs to chatbots, coding assistants, and more, all haphazardly granted access to sensitive organizational data, creating cybersecurity, compliance, and reputational risks.

Now that we’ve discussed the key findings, let’s go a bit deeper and see how they play out across enterprise organizations, drawing on the more than 1,000 environments Varonis examined as part of the report and research process.

Shadow AI

Among the key findings was the rampant presence of shadow AI, that is, AI usage that is out of the purview or involvement of cybersecurity, bypassing security controls, governance, and active advocacy for secure adoption and usage.

Varonis points out that millions of users downloaded DeepSeek in 2025 alone and points to incidents such as unsecured DeepSeek databases that exposed millions of lines of log streams, including chat histories, secret keys, and other sensitive data. Governance of model usage is a real problem, especially when economic factors such as cheaper models, which are appealing from the business perspective, help drive adoption over security considerations.

The findings of the Varonis report really highlight this concern beyond just a specific model.

Nearly all organizations have rampant unsanctioned AI app usage, as security can’t keep pace with the proliferation of AI applications and services that their business, development, and engineering peers are tapping into.

As the report shows, much of this is driven by SaaS governance risks, with over half of employees using high-risk OAuth apps and 25% of OAuth apps in the organizations being high-risk.

This presents not only governance and data exposure risks but also the ability for these credentials to be compromised. This could cause a chain reaction of supply chain risks across the various AI applications and services accessed with the credentials.

Microsoft 365 Copilot Data Risks

The next findings are risks associated with Microsoft 365 Copilot. For those unfamiliar, Microsoft 365 Copilot is embedded in core applications such as Word, Excel, PowerPoint, Outlook, Teams, and more, and can boost productivity and capability.

However, as Varonis points out, this potential force multiplier also carries security risks. The largest of these is the amount of organizational data, especially sensitive data, that Copilot interfaces with, given how much of their work organizations do in the core applications in which Microsoft 365 Copilot is natively embedded.

While some may be inclined to blow off these concerns, that wouldn’t be wise, even if it is Microsoft. It’s worth remembering that Microsoft is the vendor with the most entries in CISA’s Known Exploited Vulnerabilities (KEV) catalog, was the subject of a Cyber Safety Review Board (CSRB) review in 2024, and was even called a “national security threat” by lawmakers last year due to incidents involving its products.

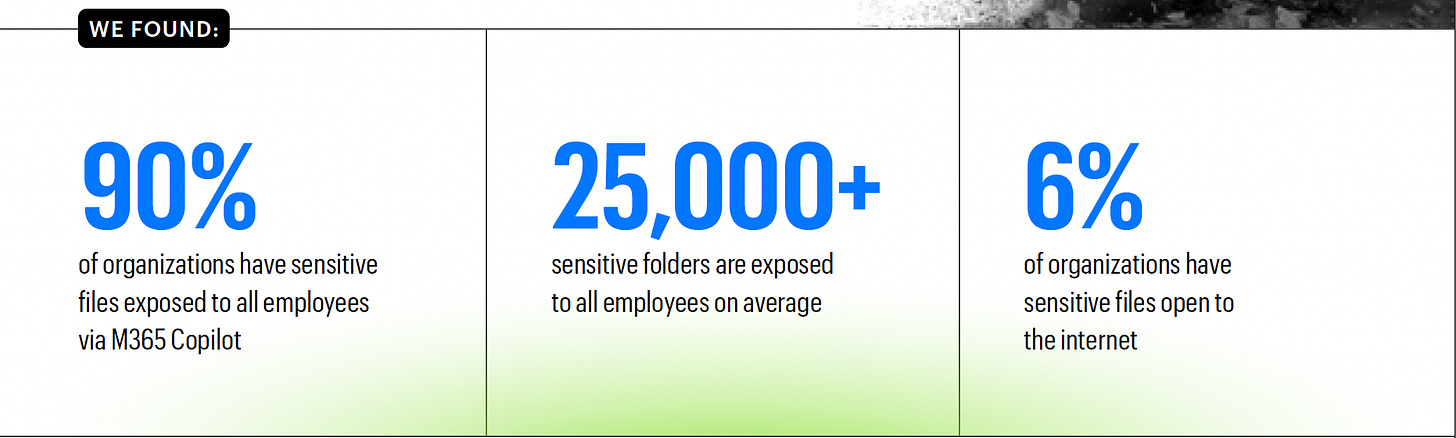

As you can see above, sensitive data exposure is a massive problem, impacting 90% of organizations. Tens of thousands of sensitive folders are exposed to all employees and even the open Internet.

The report points to a best practice, including one emphasized by Microsoft around Data Labeling, which helps ensure data is properly categorized, managed, and protected from AI misuse. The problem, however, is that only 1 out of 10 companies have labeled files, meaning the security recommendation is falling on deaf ears.

Salesforce Agentforce

Following Microsoft, another industry leader, Salesforce, is highlighted in the report. This time it focuses on Salesforce’s offering of “Agentforce”, which is framed as the “Digital Labor Platform”. In Salesforce’s own words “agents can connect to any data source and use it in real time to plan, reason, and evaluate”. From a business perspective, this sounds great, but from a security perspective?

Not quite.

As Varonis lays out in the report, these same agents can surface unprotected sensitive data, and lead to unauthorized data access and misuse, something that has been highlighted by groups such as OWASP in their “Agentic AI - Threats and Mitigations” publication which I had the opportunity to serve as a distinguished reviewer on.

Varonis’ report highlights just how complex and perilous our environments are getting with AI's rapid adoption and integration. For example, they found that for an SMB with 1,000 employees, over 10% of the workforce has permissions to create, grant permissions, and customize applications. Users can also create “public links”, which can be used to grant access to external AI applications, such as ChatGPT, which can then access your internal organizational data.

It is only a matter of time until we see these exact scenarios, a tangled web of poor access control and supply chain concerns manifesting into real-world organizational impacts and incidents.

Model Poisoning and Risks to AI Training Data

Exposure of internal data via agents and poor access control aren’t the only concerns. Varonis rightly points out risks such as model poisoning and the exposure of AI training data, which are also highlighted by industry leaders such as OWASP, as well as MITRE in its Adversarial Threat Landscape for AI Systems (ATLAS).

Varonis explains that with organizations curating their AI processes and products, and using data to train them, this data is at risk of breaches and attacks. This is especially true as much of the data sits across multiple cloud and IaaS environments, making managing, governing, and securing it difficult.

The findings highlight both how pervasive the risk is and how poorly many organizations are doing at fundamentals such as encryption and access control. See below:

Encrypting data can serve as a protection mechanism against model training and access by unauthorized entities. Attackers can also perform model poisoning, tampering with or corrupting training data to subsequently impact a model's performance or expose it to malicious prompts.

Ghost Credentials

Next among the key risks Varonis highlights is what they call “ghost users,” which are valid active accounts that belong to former employees and contractors. This demonstrates a fundamental failure of organizations to properly manage account lifecycles all the way through decommissioning.

As the report states, despite users no longer being in the role, with the organization, or having a need to know, the accounts retain access to applications and data and can be used maliciously, often without notice, because they seem to be valid accounts.

These compromised accounts, including those belonging to agents or non-human identities (NHI), can be used to move laterally through environments, access sensitive data, escalate privileges, and more.

The problem isn’t small either. Check out the findings from Varonis below:

In the age of “zero trust”, which is built on least permissive access control, we have thousands of ghost, vacant accounts that are overly permissive and just sitting around ripe for the taking by malicious actors.
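To make the ghost-user problem a bit more concrete, here is a minimal Python sketch of how a team might flag stale but still enabled accounts. It is not taken from the Varonis report: the `Account` record, the sample inventory, and the 90-day idle threshold are all assumptions of mine, standing in for whatever your identity provider actually exports.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Account:
    name: str
    enabled: bool
    last_login: Optional[datetime]  # None means the account has never logged in


def find_ghost_accounts(accounts, now, max_idle_days=90):
    """Return enabled accounts with no login inside the idle window.

    The 90-day default is an illustrative assumption, not a standard.
    """
    cutoff = now - timedelta(days=max_idle_days)
    return [
        a for a in accounts
        if a.enabled and (a.last_login is None or a.last_login < cutoff)
    ]


# Hypothetical inventory, as might be exported from an identity provider.
NOW = datetime(2025, 6, 1)
ACCOUNTS = [
    Account("alice", True, datetime(2025, 5, 28)),              # recently active
    Account("former-contractor", True, datetime(2024, 11, 2)),  # stale but enabled
    Account("svc-legacy-etl", True, None),                      # enabled, never used
    Account("bob", False, datetime(2023, 1, 15)),               # already disabled
]

ghosts = find_ghost_accounts(ACCOUNTS, NOW)
print([a.name for a in ghosts])
```

Note that the never-used service account is flagged alongside the former contractor, which matters given how quickly non-human identities are multiplying. In practice, the output would feed a review or automated disablement workflow rather than a print statement.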

Cloud Identities

Building on the bane of seemingly every organization’s existence, identity, comes cloud identities, which Varonis dubs “sprawling and complex”. Varonis highlights what is often called “permission creep”, where groups and memberships aggregate over time, much like a blob.

As demonstrated in the previous section, organizations not only fail to eliminate ghost/vacant accounts but also rarely clean up the permissions assigned to users via groups, roles, memberships, and more.

Varonis found the problem to be most acute among non-human identities (NHIs), such as APIs and service accounts. It is understandably difficult to manage, with the report highlighting that just one CSP, AWS, has over 18,000 possible identity and access management permissions.

What’s wild is that AWS even released a native service called “IAM Access Analyzer,” which can be used to right-size permissions based on assigned access and actual usage. However, it seems organizations are making little to no use of this easy-to-use service that can help tighten up their permissions.
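The core idea behind right-sizing is simple enough to sketch: compare what an identity has been granted against what it has actually used, and treat the difference as a candidate for removal. The Python below is only an illustration of that set difference. It does not call IAM Access Analyzer or any AWS API, and the identity names and the granted/used lists are hypothetical, although the action strings follow AWS's `service:Action` naming.

```python
def unused_permissions(granted, used):
    """Permissions that are granted but were never observed in use."""
    return sorted(set(granted) - set(used))


# Hypothetical granted permissions per identity (e.g. from attached policies).
GRANTED = {
    "svc-report-builder": [
        "s3:GetObject", "s3:PutObject", "s3:DeleteObject", "iam:PassRole",
    ],
    "svc-backup": ["s3:GetObject", "s3:ListBucket"],
}

# Hypothetical observed usage per identity (e.g. derived from access logs).
USED = {
    "svc-report-builder": ["s3:GetObject"],
    "svc-backup": ["s3:GetObject", "s3:ListBucket"],
}

report = {
    identity: unused_permissions(perms, USED.get(identity, []))
    for identity, perms in GRANTED.items()
}

for identity, extra in report.items():
    status = ", ".join(extra) if extra else "right-sized"
    print(f"{identity}: {status}")
```

Here the report-building service account is carrying three permissions it never exercises, including the ability to pass roles, while the backup account is already right-sized. The hard part in real environments is collecting trustworthy usage data over a long enough window, which is exactly what native tooling is meant to help with.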

Missing MFA

We all know Multi-Factor Authentication (MFA) is a critical security control that must be in place, right?

Right?

Well, maybe not so, as Varonis found troubling metrics demonstrating that MFA usage was far from commonplace or standard.

When we consider that the average organization uses hundreds of SaaS applications, the lack of MFA opens the door for credential compromise and malicious movements among SaaS applications and internal organization data, including potentially sensitive data.

It isn’t just enterprise organizations that are being targeted, either. The report highlights incidents such as Snowflake’s 2024 incident involving stolen credentials and missing MFA to access downstream customer environments in a textbook example of a software supply chain compromise.
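Finding MFA gaps doesn't have to be sophisticated. As a rough sketch, assuming you can export a user list with an MFA flag from your SaaS or identity provider, the Python below surfaces accounts without MFA and puts administrators at the top. The field names (`is_admin`, `mfa_enabled`) and the sample users are hypothetical and will differ by platform.

```python
def accounts_missing_mfa(users):
    """Return users without MFA, admins first, then alphabetical by name."""
    missing = [u for u in users if not u["mfa_enabled"]]
    # Sorting on (not is_admin) puts admins (False) ahead of non-admins (True).
    return sorted(missing, key=lambda u: (not u["is_admin"], u["name"]))


# Hypothetical export from an identity provider or SaaS admin console.
USERS = [
    {"name": "dana", "is_admin": False, "mfa_enabled": False},
    {"name": "root-admin", "is_admin": True, "mfa_enabled": False},
    {"name": "erin", "is_admin": False, "mfa_enabled": True},
    {"name": "carl", "is_admin": True, "mfa_enabled": True},
]

gaps = accounts_missing_mfa(USERS)
coverage = 1 - len(gaps) / len(USERS)

print([u["name"] for u in gaps])
print(f"MFA coverage: {coverage:.0%}")
```

Ordering by privilege is a design choice on my part: an administrator without MFA is a far bigger exposure than a standard user, so that gap should be the first one closed.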

Closing Thoughts

The Varonis 2025 State of Data Security report provides eye-opening insight into how problematic a lack of fundamental security controls and practices is for cloud and AI applications, services, and environments.

Amid the gold rush to rapidly adopt this promising emerging technology, we can’t forget the fundamental security controls and practices we’ve learned through painful past incidents.

Varonis’ report leaves us with a few key recommendations:

Reduce your blast radius: This includes managing identities throughout their entire lifecycle, and right-sizing permissions based on actual need-to-know and usage. This will be even more critical as we see the rise of Agents and Agentic AI architectures and use cases.

Data security is AI security: Varonis highlights that data powers AI, whether it's the data the model is training on, organizational data the models and agents are interfacing with to drive business outcomes, or other data. This means organizations must have a comprehensive approach to data security to enable secure AI adoption.

Use AI for good: Aligning with something I have been emphasizing to my security peers, Varonis states that AI is a powerful tool, not just for the business or attackers, but also for defenders, when it comes to enabling automation, labeling data, responding to incidents, addressing vulnerabilities, identifying malicious behavior, and more. This is a critical point that I tried to hammer home in my own article “Security’s AI-Driven Dilemma”, where I stated security must be an early adopter and innovator with AI, to avoid perpetuating the bolted-on, rather than built-in, security paradigm we all know so painfully well.

Be sure to check out the FULL REPORT from Varonis, as well as some additional resources below:

Snowflake Incident Investigation

Shadow AI seems to be everywhere! Feels like security teams are slow on the approval process for commercial Gen AI usage…