Resilient Cyber Newsletter #47

Layoffs & Workforce Woes, AI Congressional Testimony, Vulnerable MCP Project, The State of DevSecOps in the DoD & the Software Security Code of Practice

Welcome!

Welcome to issue #47 of the Resilient Cyber Newsletter.

Despite heading into summer, things are still quite hectic and exciting, with major congressional testimonies on AI, massive workforce disruption due to layoffs (and potentially AI), valuable AI security and AppSec insights, and more.

With that said, let’s dive in. I look forward to sharing this week’s resources!

Interested in sponsoring an issue of Resilient Cyber?

This includes reaching over 45,000 subscribers, ranging from Developers, Engineers, Architects, CISOs/Security Leaders and Business Executives.

Reach out below!

Ship Code, Not Privacy Risks

When PII leaks into logs—a clear violation of GDPR and CCPA—relying on DLP is reactive, unreliable, and slow. Teams often spend weeks scrubbing logs, assessing exposure across tools that ingested them, and patching code after the fact.

HoundDog.ai flips the model by analyzing code early to catch unintentional developer mistakes—like overlogging or oversharing sensitive data—before it reaches production. That’s why leading enterprises trust us to drive proactive data minimization, especially in AI applications, which introduce more risky mediums than traditional apps—like prompt logs, temp files, and cached inputs.

Privacy shouldn’t be an afterthought. HoundDog.ai’s privacy-by-design code scanner integrates across all stages of development, from IDE to CI, with in-PR fix suggestions developers actually use.
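To make the idea of catching overlogging in code (rather than in production logs) a bit more concrete, here is a toy static check in Python built on the standard library’s `ast` module. This is purely an illustrative heuristic and not how HoundDog.ai works: the `SENSITIVE_HINTS` list and the `find_overlogging` function are hypothetical, and the check only matches variable and attribute names passed to `logger.<level>(...)` style calls.

```python
import ast

# Hypothetical name fragments treated as sensitive; a real scanner would use far richer rules.
SENSITIVE_HINTS = ("email", "ssn", "password", "phone", "dob", "credit_card")
# Method names on Python's standard logging.Logger that emit log records
LOG_METHODS = {"debug", "info", "warning", "error", "exception", "critical"}


def find_overlogging(source: str) -> list[tuple[int, str]]:
    """Return (line, name) pairs where a logger call references a sensitive-looking name."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        # Only consider calls shaped like <obj>.<method>(...), e.g. logger.info(...)
        if not (isinstance(node, ast.Call) and isinstance(node.func, ast.Attribute)):
            continue
        if node.func.attr not in LOG_METHODS:
            continue
        # Walk every positional and keyword argument, including f-string internals
        for arg in [*node.args, *(kw.value for kw in node.keywords)]:
            for sub in ast.walk(arg):
                if isinstance(sub, ast.Name):
                    name = sub.id
                elif isinstance(sub, ast.Attribute):
                    name = sub.attr
                else:
                    continue
                if any(hint in name.lower() for hint in SENSITIVE_HINTS):
                    findings.append((node.lineno, name))
    return findings


sample = """
import logging
logger = logging.getLogger(__name__)

def signup(user):
    logger.info("new signup: %s", user.email)
    logger.debug(f"signup complete for id {user.id}")
"""
print(find_overlogging(sample))
```

Name matching like this is noisy: it flags harmless names such as `phone_count` and misses sensitive data flowing through generic names like `payload`, which is why more serious tools typically reason about data flow rather than names alone.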

Cyber Leadership & Market Dynamics

AI’s Impact on Software Engineering Hiring (or Not)

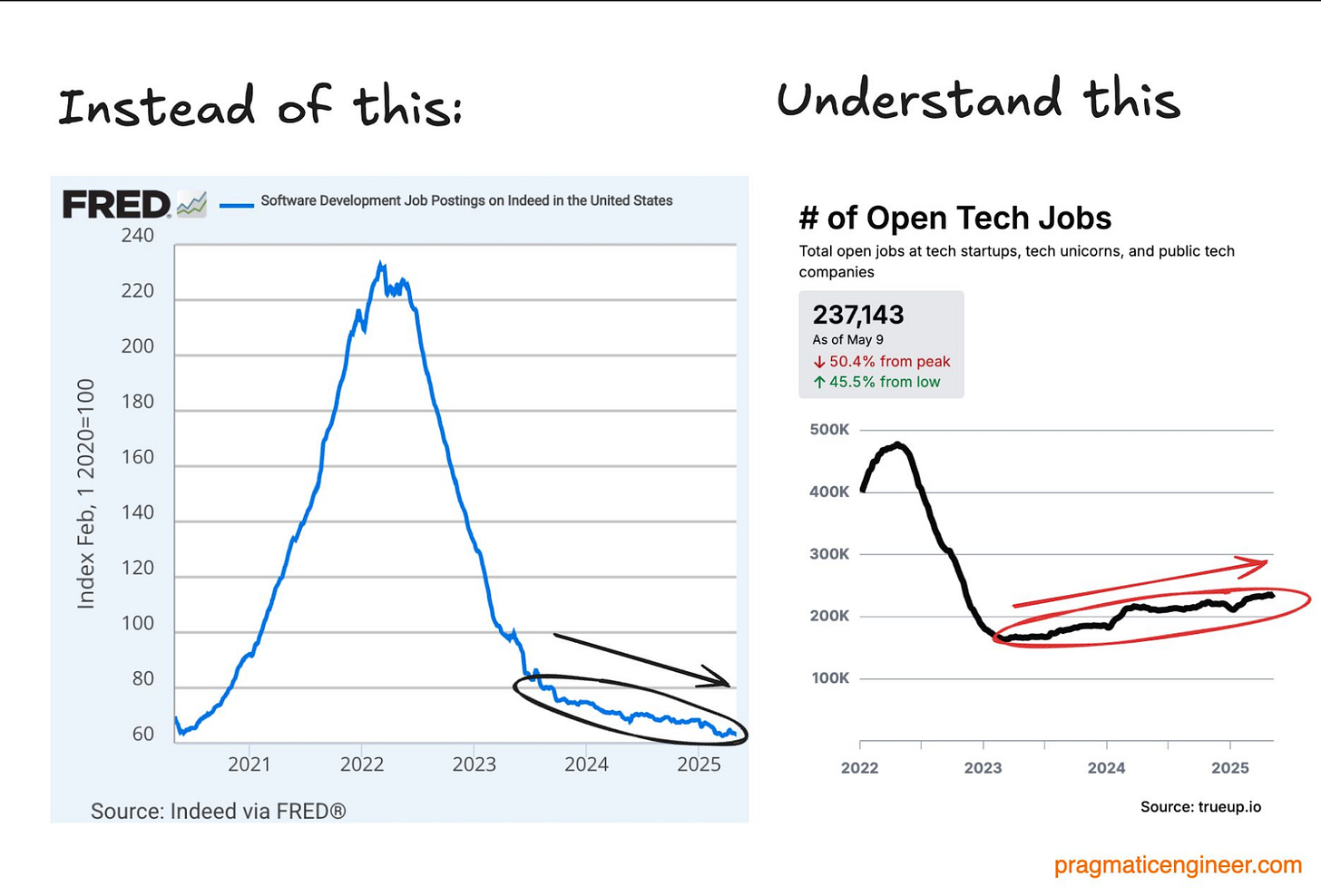

A chart recently made the rounds, showing what was claimed to be an incredibly sharp drop in software engineering hiring by top US AI companies (see above).

The chart initially seemed credible, but it soon drew valid criticism and analysis from folks such as Gergely Orosz. It appears to have originated in an article by “zekidata”. Gergely points out that the decline is tied much more to the end of zero interest rates than to the rise of AI and LLMs, and he even showed that hiring across tech, including at startups, unicorns, and public tech companies, has risen since a dip during 2022-2023.

While I agree with his analysis, I do think we are seeing some major tectonic shifts occurring in the software engineering space with the rise of AI-driven development. As I’ve written in articles such as “Security’s AI-Driven Dilemma,” I suspect we will see major shifts needed within the security industry to improve effectiveness and align with this new LLM-based model of development as well.

In my opinion, cybersecurity's future effectiveness will depend on whether it remains a laggard in adopting emerging technologies, as it has been in the past, or crosses the chasm to become an early adopter and innovator itself, much like our development and business peers when it comes to AI.

CrowdStrike Layoffs Rattle Cyber Industry

Following the above discussion about a sharp drop in software engineering hiring, CrowdStrike recently announced plans to lay off 500 employees, or 5% of its existing workforce.

The layoffs have, to some extent, been attributed to AI, with this CNBC article quoting CrowdStrike CEO George Kurtz, who said:

The article also discusses CrowdStrike’s growth ambitions, including a target of $10 billion in annualized revenue. And while CrowdStrike emphasized AI, the article also cites layoffs elsewhere, such as at Autodesk, HP, and others, potentially driven by economic and market uncertainty.

Ironically, news also broke that Klarna and other companies that pivoted heavily to AI and let go of teams across various functions are now walking that back and rehiring staff, after running into problems from leaning too heavily on AI in place of human expertise and labor.

Microsoft Lays Off 6,000 (3% of Workforce)

Building on the above workforce discussions, Microsoft recently announced that it is cutting 6,000 jobs, or 3% of its overall workforce. This came just a few weeks after Microsoft's earnings announcement, which beat first-quarter earnings expectations, driven by Azure cloud growth. It also follows Microsoft’s announcements of significant AI investments.

The article notes similar cuts at Amazon, Meta and Salesforce, including a 5% cut at Meta in February.

Ransomware Attack Wipes Out $1.7 Billion in Market Value

A ransomware attack on Marks & Spencer, a large British retailer, carried out by the DragonForce ransomware group and beginning around Easter, has had a massive financial impact. The company is estimated to be losing ~$19M per week in profit because it is unable to process online orders or track store inventory.

AI

AI Superiority is Economic and National Security

I often use my spare time on weekends to catch up on long-form educational, informative, and insightful content, and this Senate Committee on Commerce, Science & Transportation hearing has it all. It features Sam Altman of OpenAI, Dr. Lisa Su of AMD, Michael Intrator of CoreWeave, and Brad Smith of Microsoft.

It involves a wide-ranging conversation on:

The intersection of AI with economic and national prosperity and security, most notably our race against China

The need for robust, diverse energy sources to power not just AI but our future society

The need for rigorous testing and standards balanced with the need for speed and innovation, and notably, NOT following the example of the EU

Everything from protecting children to developing local and regional economies, data center developments, workforce education and stability, and much more.

It even includes collaborative, productive, bipartisan discussion, along with some subtle shots at differing political ideologies and legislative policies.

If you're passionate about technology, cybersecurity, economics, politics, and the future implications for the U.S. and the world, this discussion is well worth your time.

Microsoft Outlines a Taxonomy of Failure Modes in AI Agents

Microsoft recently released a comprehensive paper outlining the potential failure modes of AI agents.

It covers key topics such as:

Potential Failure Modes: their possible effects, mitigations, design considerations, and limitations

Case Studies, such as memory poisoning attacks on an agentic AI email assistant

Novel security and safety failure modes, as well as existing ones

Agentic Disruption: Innovator’s Dilemma As Applied to Agentic Adoption

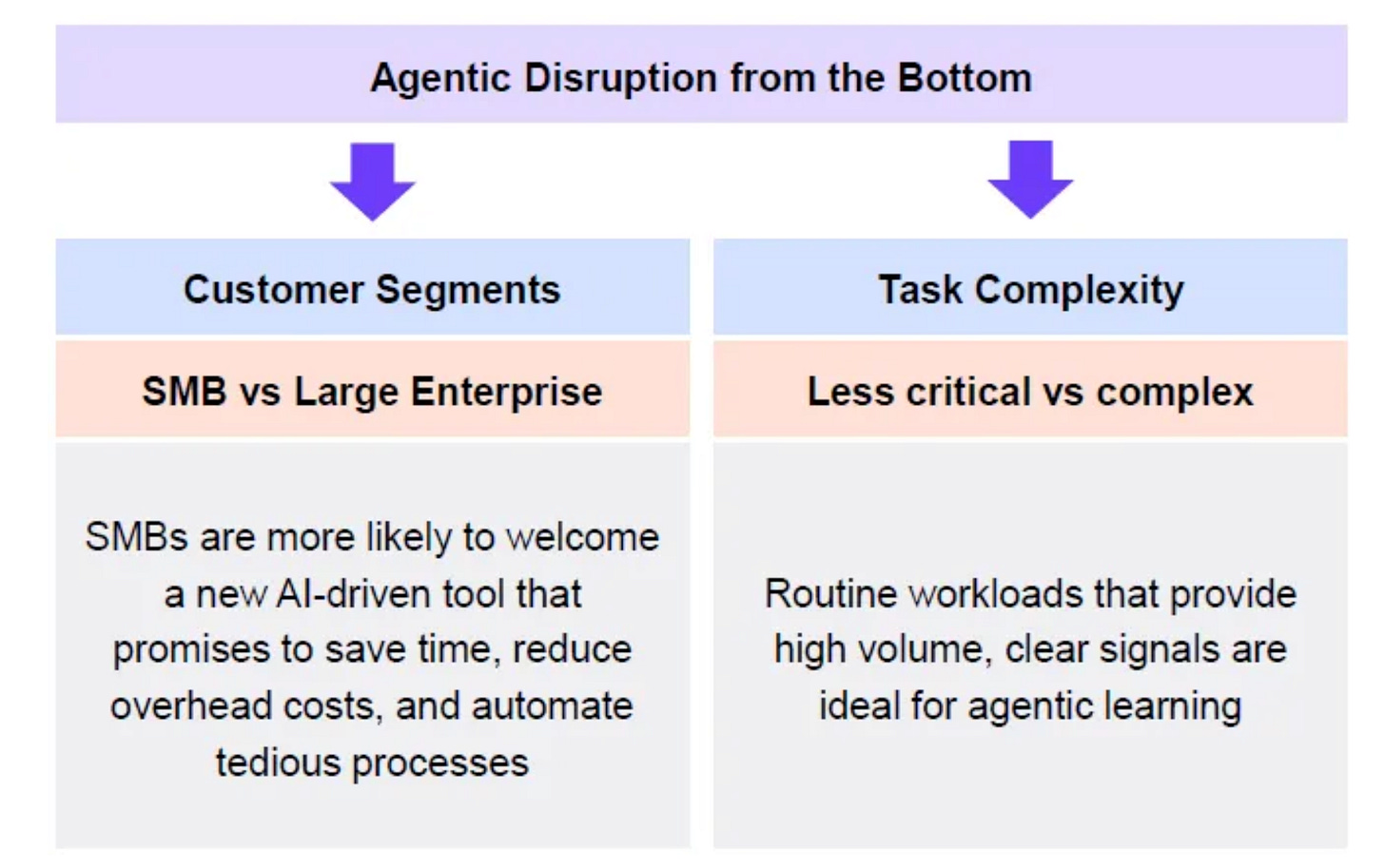

We continue to see a lot of excitement around Agentic AI, including in cybersecurity niches such as SecOps, AppSec and GRC, with SOC automation in particular emerging as a key use case. This piece from Margin of Safety examines agentic adoption through the lens of the innovator’s dilemma.

This includes the fact that SOC automation startups alone have raised $150M in the last 12 months, not counting the AI and automation investments of established SOC/SIEM players. The authors examine agentic disruption from a bottom-up perspective across two dimensions: customer segment (SMBs vs. large enterprises) and task complexity (less critical vs. more complex tasks).

The authors make the case that we’re likely to see earlier adoption in SMBs of agentic use cases, given their lack of expertise and workforce constraints, as well as earlier adoption of agentic implementation tied to less critical tasks, rather than complex ones.

They list the three characteristics of tasks ripe for agentic AI as:

Highly observable, often due to a digital substrate (i.e., the work is clear and can be tracked)

Well-defined, quantitative success metrics

High data volumes, especially for repeated tasks
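To make the three characteristics concrete, here is a hypothetical scoring sketch in Python. The `Task` fields, the `agentic_fit` function, the 1,000-per-month volume threshold, and the example tasks are all illustrative assumptions, not the Margin of Safety authors’ methodology; the sketch simply awards one point per characteristic a task satisfies.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    observable: bool           # runs on a digital substrate where the work can be tracked
    quantitative_metric: bool  # success is measured with a well-defined number
    monthly_volume: int        # how often the task repeats (hypothetical unit)


def agentic_fit(task: Task, volume_threshold: int = 1000) -> int:
    """Score 0-3: one point per characteristic the task satisfies.

    The volume threshold is an illustrative assumption, not a published cutoff.
    """
    high_volume = task.monthly_volume >= volume_threshold
    return sum([task.observable, task.quantitative_metric, high_volume])


tasks = [
    Task("alert triage", observable=True, quantitative_metric=True, monthly_volume=20_000),
    Task("breach notification decision", observable=True, quantitative_metric=False, monthly_volume=2),
]
# Rank candidate tasks from best to worst fit
for t in sorted(tasks, key=agentic_fit, reverse=True):
    print(t.name, agentic_fit(t))
```

A higher score only suggests a better early candidate; it says nothing about task criticality, which the authors treat as a separate dimension.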

While these are valid points, and I suspect they will prove true in some cases, I also suspect some large enterprises will be early adopters of agentic use cases due to their vast amounts of data, larger budgets, and being targeted more heavily by VC-backed AI startups looking to drive ROI and find early product-market fit (PMF), among other factors.

We’ve seen many times that cyber vendors are disproportionately focused on the haves, rather than the have-nots (e.g., enterprises rather than SMBs) when it comes to target customers, often due to budgets.

This is why my colleagues and I have written and discussed concepts such as the cybersecurity poverty line, which most SMBs live below.

A2A + MCP: A Blueprint for Next-Gen Critical Infrastructure Attacks?

We’ve heard quite a bit about Google’s Agent2Agent (A2A) protocol and MCP, and I’ve covered both in various articles. But could they be part of next-gen attacks on critical infrastructure? That’s the case SANS’ Rob T. Lee makes in an article.

Rob discusses the potential for A2A and MCP to facilitate ongoing persistent access, lateral movement, and other activities in critical infrastructure environments.

The Vulnerable MCP Project

In the past couple of months, we've heard much excitement and concern about the Model Context Protocol (MCP). MCP is poised to facilitate the rise of agentic AI architectures and autonomous workflows among agents.

It also comes with potential pitfalls, including an expanded attack surface, exploitation, lateral movement, and authorization gaps. This "Vulnerable MCP" project from Vineeth Sai Narajala is an awesome resource for learning more.

It includes:

📚 A comprehensive deep dive into MCP, with technical insights and education

✅ An MCP "Implementer's Checklist" for quick vulnerability and risk assessments

📗 Expanded documentation of known vulnerabilities and associated mitigation strategies.

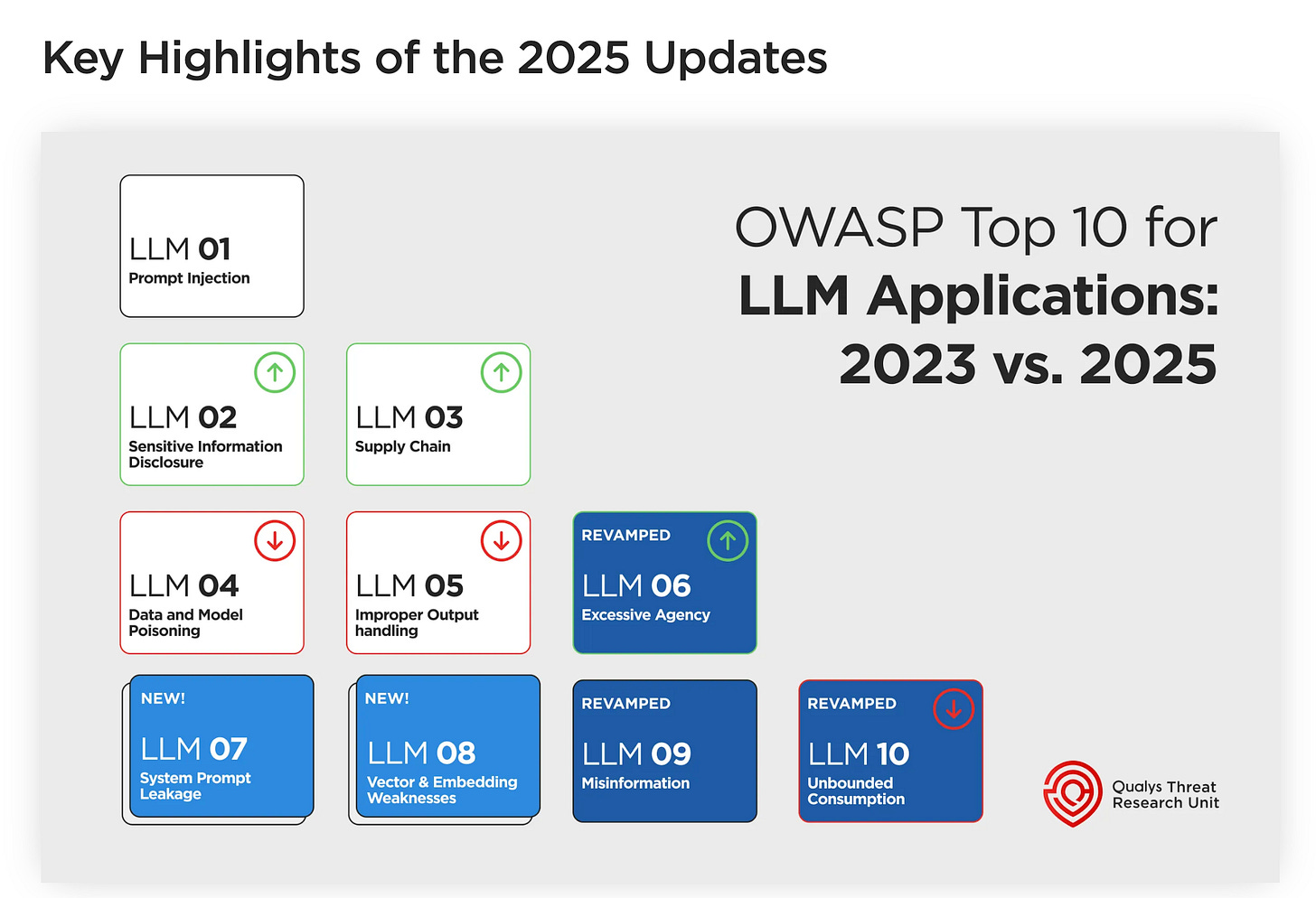
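One of the pitfalls called out above is authorization gaps. As a minimal sketch of the kind of control an implementer’s checklist might push toward, here is a deny-by-default, per-agent tool allowlist checked before a tool call is dispatched. `ToolPolicy`, `call_tool`, and the agent and tool names are hypothetical and are not part of the MCP specification or any MCP SDK.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class ToolPolicy:
    """Hypothetical per-agent tool allowlist (not part of the MCP spec or any SDK)."""
    allowed: dict[str, set[str]] = field(default_factory=dict)

    def authorize(self, agent_id: str, tool_name: str) -> bool:
        # Deny by default: unknown agents and unlisted tools are both rejected.
        return tool_name in self.allowed.get(agent_id, set())


def call_tool(policy: ToolPolicy, agent_id: str, tool_name: str,
              handler: Callable[..., Any], **kwargs: Any) -> Any:
    """Check the policy before dispatching a tool call to its handler."""
    if not policy.authorize(agent_id, tool_name):
        raise PermissionError(f"{agent_id!r} may not call {tool_name!r}")
    return handler(**kwargs)


policy = ToolPolicy({"email-assistant": {"read_inbox"}})
print(call_tool(policy, "email-assistant", "read_inbox",
                lambda folder: f"reading {folder}", folder="INBOX"))
# A call outside the allowlist raises PermissionError instead of executing:
# call_tool(policy, "email-assistant", "send_payment", lambda: None)
```

Denying by default means a newly registered tool stays unreachable until someone explicitly grants it, which limits how far a compromised or manipulated agent can move laterally.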

OWASP Top 10 for LLM Applications 2025: Key Changes in AI Security

I’ve previously shared the OWASP Top 10 for LLM Applications, and this blog from Saeed Abbasi of Qualys is a good dive into some of its key aspects.

This includes:

Key updates in 2025

Recent vulnerability entries

Revised and expanded AI Security Risks in OWASP 2025

How Qualys addresses AI security (it’s always helpful to hear how different vendors are approaching the problem).

Orchestrating Agentic AI Securely

We continue to see much excitement about Agents and Agentic AI. That said, this emerging technology requires sound security principles and threat modeling.

In my latest article, I examine the new threat modeling framework, Multi-Agent Environment, Security, Threat, Risk, and Outcome (MAESTRO). It was created by Ken Huang, CISSP, and covers seven key aspects of agentic AI systems:

🧱 Foundation models, including those consumed from model providers, as well as open source models from popular platforms such as Hugging Face, where I cite the AI Security Shared Responsibility Model, from my friend Mike Privette

0️⃣ 1️⃣ Data Operations, including threats such as data poisoning, exfiltration, tampering, and model inversion/extraction, the latter of which OpenAI has accused China's DeepSeek of

🕸️ Agent Frameworks, with examples such as Microsoft's AutoGen, LangChain, CrewAI, and LlamaIndex, where risks such as compromised model frameworks, backdoor attacks, framework evasion, and more can occur.

☁️ Deployment and Infrastructure, which should be very familiar to those with experience securing cloud environments, Kubernetes, and containers, along with fundamentals such as CSPM, IaC, and secure containers with runtime visibility.

🔎 Evaluation and Observability: With agents poised to exponentially outnumber their human counterparts, monitoring these agents, including the processes they're involved in, the data they interact with, and potential anomalous behaviors, will be crucial.

🏛️ Security and Compliance, which Ken argues cuts across the other six pillars of the framework, from models to hosting environments and application workloads.

🤖 The Agent Ecosystem itself, where we will likely see agent marketplaces, with all their implications, spanning business applications, customer service platforms, and enterprise automation solutions.

Overall, MAESTRO is a great tool to be added to the toolbox of security practitioners when it comes to agentic AI and securing the forthcoming wave of this new technology.
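For teams that want to start a MAESTRO-style threat model worksheet, the seven layers map naturally onto a small data structure. This is a minimal sketch under my own assumptions: the layers are numbered in the order listed above, the example threats come only from the summary above (not MAESTRO’s full catalog), and `worksheet` is a hypothetical helper that always adds the cross-cutting Security and Compliance layer.

```python
from enum import IntEnum


class MaestroLayer(IntEnum):
    # Numbered in the order the layers are listed above
    FOUNDATION_MODELS = 1
    DATA_OPERATIONS = 2
    AGENT_FRAMEWORKS = 3
    DEPLOYMENT_AND_INFRASTRUCTURE = 4
    EVALUATION_AND_OBSERVABILITY = 5
    SECURITY_AND_COMPLIANCE = 6
    AGENT_ECOSYSTEM = 7


# Example threats drawn from the summary above; illustrative, not MAESTRO's full catalog.
EXAMPLE_THREATS: dict[MaestroLayer, list[str]] = {
    MaestroLayer.DATA_OPERATIONS: [
        "data poisoning", "exfiltration", "tampering", "model inversion/extraction",
    ],
    MaestroLayer.AGENT_FRAMEWORKS: [
        "compromised model framework", "backdoor attack", "framework evasion",
    ],
    MaestroLayer.EVALUATION_AND_OBSERVABILITY: [
        "undetected anomalous agent behavior",
    ],
}


def worksheet(component_layers: list[MaestroLayer]) -> list[tuple[str, str]]:
    """Return (layer, threat) rows for a component, always adding the cross-cutting layer."""
    layers = set(component_layers) | {MaestroLayer.SECURITY_AND_COMPLIANCE}
    rows = []
    for layer in sorted(layers):
        # Layers without example threats get a placeholder row to fill in during review.
        for threat in EXAMPLE_THREATS.get(layer, ["(threats to be identified)"]):
            rows.append((layer.name, threat))
    return rows


for row in worksheet([MaestroLayer.DATA_OPERATIONS, MaestroLayer.AGENT_FRAMEWORKS]):
    print(row)
```

Forcing the Security and Compliance layer into every worksheet reflects the point above that it cuts across the other six layers rather than standing alone.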

AppSec, Vulnerability Management, and Supply Chain Security

The State of DevSecOps in the DoD

The Software Engineering Institute (SEI) at Carnegie Mellon University recently released a comprehensive study of DevSecOps across the U.S. Department of Defense (DoD).

It provides a lot of great insights on the state of both DevSecOps and Software Development across the Department, including:

The role of DevSecOps when it comes to the DoD's digital modernization efforts and future mission success

The continuously evolving Software Factory (SWF) ecosystem across the DoD and its role as a digital arsenal for modern warfare

Improvements in the Software Factory ecosystem, such as inventory, automation, and managing the overall SWF portfolio of the DoD

How DevSecOps supports the shift to Continuous ATO (cATO) and modern compliance processes and engineering to keep pace with the state of modern software development

And much more.

This is an informative read for someone like me, who has supported various DoD software factories and has been doing “DevSecOps” in the DoD and Federal government for quite some time.

What is Behind the Exponential Growth of CVEs?

You’ve likely seen many folks discussing the exponential growth of CVEs, but what drives this growth? My friend and Vulnerability Researcher, Patrick Garrity, sheds light on that in a recent post.

As Patrick points out, from 2023 to 2024, most growth can be attributed to just six CVE Numbering Authorities (CNAs). He states that five of the six are researchers or bug bounty CNAs making significant contributions to CVEs.

A fair amount is tied to WordPress, or specific open-source databases and ecosystems such as GitHub and VulnDB.
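Patrick’s observation is essentially an attribution calculation: how much of the year-over-year increase comes from a handful of CNAs. Here is a minimal sketch, assuming per-CNA CVE counts for two years are already in hand (for example, aggregated from CVE records). The `top_cna_growth` function and the toy counts are hypothetical and are not Patrick’s data.

```python
from collections import Counter


def top_cna_growth(prev: Counter, curr: Counter, n: int = 6) -> tuple[list[tuple[str, int]], float]:
    """Rank CNAs by year-over-year CVE increase; return the top n and their share of net growth."""
    net_growth = sum(curr.values()) - sum(prev.values())
    # Counter returns 0 for missing keys, so new or inactive CNAs are handled naturally
    deltas = {cna: curr[cna] - prev[cna] for cna in set(prev) | set(curr)}
    # Largest increase first, breaking ties by name so the output is deterministic
    top = sorted(deltas.items(), key=lambda kv: (-kv[1], kv[0]))[:n]
    top_increase = sum(delta for _, delta in top)
    share = top_increase / net_growth if net_growth > 0 else 0.0
    return top, share


# Toy counts for illustration only; these are NOT real CVE figures.
cves_2023 = Counter({"cna_a": 100, "cna_b": 50, "cna_c": 10})
cves_2024 = Counter({"cna_a": 300, "cna_b": 60, "cna_c": 10, "cna_d": 30})
top, share = top_cna_growth(cves_2023, cves_2024, n=2)
print(top, f"{share:.0%}")
```

Note that the share is measured against net growth, so it can exceed 100% when some CNAs publish fewer CVEs than the year before.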

ASPM ’verse Virtual Conference

You’ve likely heard of “Application Security Posture Management (ASPM)”. If not, you can check out my article with Francis Odum titled “The Rise of Application Security Posture Management (ASPM) Platforms”.

The AppSec space continues to become more complex, from vulnerability management and prioritization to tooling. Throw AI into the mix, and it is poised for some fundamental transformations in how we approach AppSec.

That is why I am excited to share that I am joining Cycode for their ASPM ’verse event, where I’ll be speaking in a fireside chat on:

“The Future of Application Security: 3 Ways Agentic AI is Changing Security in 2025” 🔐🤖

We’ll dig into:

✅ How AI is reshaping modern AppSec strategies

✅ Defending against evolving vulnerabilities and supply chain threats

✅ Transforming your security posture to meet the pace of innovation

🗓 June 4, 2025

🕚 11AM ET | 8AM PT

💻 Virtual & FREE to attend!

Join me and other AppSec leaders as we explore what’s next for security in the age of AI - with this LINK.

Software Security Code of Practice - Teeth or Just Talk?

The UK’s National Cyber Security Centre recently released a “Software Security Code of Practice”.

David Archer of Endor Labs breaks down the publication in an excellent article looking at what it gets right, where it can be improved, and whether or not it will have an impact.

David lays out the four themes of the Code of Practice:

Theme 1: Secure design and development

Theme 2: Build environment security

Theme 3: Secure deployment and maintenance

Theme 4: Communication with customers

He also walks through the principles within each theme, where they can make an impact, and where they could be improved. While I haven’t read the Code of Practice, it looks well thought out and well-intentioned.

However, I come to the same conclusion as David. Because the Code of Practice is voluntary, much like CISA’s Secure-by-Design efforts (which the Code recommends vendors embrace), it is unlikely to see widespread adoption, let alone actual implementation.

This is because the market failure of cybersecurity will not resolve itself voluntarily. It is much easier for vendors to pass the costs of insecurity onto downstream customers and consumers and continue to prioritize speed to market and revenue over security.

This won’t change until we see widespread changes in consumer spending patterns (unlikely) or massive regulatory changes requiring this sort of effort (also unlikely, at least in the U.S. under the current administration). Additionally, regulation can have unintended consequences, such as stifling innovation and hurting economic prosperity, so we have to pick our poison.