Resilient Cyber #69

M&A Perspectives, America’s Economy Leans on AI, CISA F5 Emergency Directive, Exploiting AI Coding Assistants, Board Oversight of AI, LLM-Driven SAST & 2025 Cloud Security Market Report

Welcome!

Before we get going with this week’s newsletter, I wanted to share a bit of personal news. As you all know, I’ve written multiple books, and lately, I find myself, like many others, doing all I can to learn and grow in the areas of AI, LLMs, and Agentic AI.

That’s why I am truly humbled to share that I teamed up with industry-leading researcher, author, and expert on all things AI Security, Ken Huang, to publish “Securing AI Agents: Foundations, Frameworks and Real World Deployments”.

It features a foreword by industry leaders, including Rob Joyce, Jason Clinton, Jim Reavis, Caleb Sima, and Steve Wilson. We cover a TON of critical topics in the book, including Agentic AI Red Teaming, Threat Modeling, Regulatory Frameworks, the M&A and startup landscape, and more.

You can grab a copy here now! ←

Enough about me, we’re back with Issue #69 of the Resilient Cyber Newsletter and we have a lot to cover together, so let’s get moving.

Interested in sponsoring an issue of Resilient Cyber?

This includes reaching over 40,000 subscribers, ranging from Developers, Engineers, and Architects to CISOs/Security Leaders and Business Executives.

Reach out below!

Introducing Hyperproof AI. Built with trust by GRC leaders, for GRC leaders.

Are you ready for GRC transformation? Knock the dust off those outdated GRC processes with Hyperproof AI: the only adaptive, unified GRC system with agentic AI enabled across every workflow.

Hyperproof AI helps your team:

Eliminate the GRC grind: AI agents automate evidence collection, testing, and reporting.

Stay off the front page (for the right reasons): Hyperproof AI continuously monitors and flags risks early.

Turn GRC into a competitive edge: Close deals faster, expand into new markets, and strengthen stakeholder confidence.

Hyperproof AI isn’t just AI tacked onto existing technology. It’s enabled across every workflow. It’s your extra teammate who never gets tired of the busywork. Ready to turn GRC into a competitive advantage?

Cyber Leadership & Market Dynamics

Multiple CISA Divisions Targeted in Government Shutdown Layoffs

We are now in the third week of the Government shutdown here in the U.S., and many agencies are reporting impacts from both the shutdown and reduction-in-force (RIF) activities. This includes CISA, which has several divisions impacted by termination orders for their workforce, including the Stakeholder Engagement Division and the Infrastructure Security Division, among others.

Cyber Experts Raise National Security Alarms Over Federal Cyber Cuts

Building on the piece above, an article recently published in the WSJ cites months of layoffs, funding cuts, and lapsed programs and their implications for U.S. cyber capabilities, which the shutdown is now exacerbating.

Several cyber industry veterans have raised concerns about U.S. critical infrastructure security, as well as a “cyber brain drain” from agency workforces resulting from reduction-in-force (RIF) efforts, including those at CISA.

CISA’s New Cyber Chief - Going Back to the Basics

Speaking of CISA, someone in my network recently shared an interview with Nick Andersen, the new CISA Executive Assistant Director. In the interview, he discusses the role and future of CISA, as well as the CVE program. I have been chatting with Nick about the possibility of having him on the Resilient Cyber Show, and I hope to finalize the arrangements once the government shutdown is resolved.

M&A Perspectives with Varun Badhwar

I get to interact with a lot of business and cybersecurity leaders in the various roles I hold and activities I participate in within the community. One of the best leaders I know is Varun Badhwar, who is the Cofounder and CEO of AppSec leader Endor Labs, where I’m fortunate to get to serve as the Chief Security Advisor.

Varun has an excellent background and track record. Prior to founding Endor Labs and scaling it as he has thus far, he also founded Redlock and exited it to Palo Alto Networks (PANW), all between 2015 and 2018.

This is an excellent interview between Varun and Pramod, where Varun walks through M&A advice and perspectives from a repeat founder with a track record of a successful exit and ability to start multiple successful companies.

Varun covers topics from financials, evaluating M&A offers, the acquirer’s perspective, market timing and much more. Varun discusses his ambitions to build a 3, 5, 7 or even 800 million dollar company, specializing in AppSec, riding the AI/Agentic wave and more.

I definitely recommend giving this one a listen.

America’s Future Could Hinge on Whether AI Slightly Disappoints

By now everyone is aware of the outsized role AI is playing across M&A, investment, venture capital, startups, and the broader market. But just how outsized a role it is playing is still worth discussing. I found this piece from Noah Smith to be an interesting one.

Noah discusses the perplexing situation where the U.S. economy is still holding up despite challenges such as tariffs, payroll figures and consumer sentiment. He covers the outsized role of the AI boom, and cites analysis showing that U.S. GDP growth would be a mere 0.6% for the year if it weren’t for AI-related spending. Despite being just 5% of GDP, information processing equipment and software was responsible for 92% of GDP growth in H1 of 2025.

Another useful chart Noah provides shows the contribution from tech capex to the U.S. GDP, versus the rest of the economy from 1Q23 through now.

Noah’s piece goes on to discuss tariff exemptions for tech, and the potential implications for if we’re in an AI bubble and if it bursts. I’m not an economist, and this isn’t strictly cyber related, but the topic has strong implications for the entire economy, including cybersecurity as a market segment.

Resilient Cyber w/ Mitch Herckis - Securing the Public Sector: FedRAMP 20x, Vulnerability Management & Continuous ATO (cATO)

In this episode, I sit down with Mitchel Herckis, Global Head of Government Affairs at cloud security leader Wiz.

We will be discussing all things public sector and cybersecurity, including the evolution of the FedRAMP program, modernizing vulnerability management and the future of Continuous ATO (cATO).

Prefer to Listen?

Be sure to leave a rating and review!

We covered a lot of ground, including:

Mitch’s background both at Wiz and inside Government, in roles such as at OMB

How Wiz is working with Federal agencies and Defense Industrial Base (DIB) partners on Cloud Security, including the long-needed overhaul of FedRAMP with FedRAMP 20x’s efforts.

The move towards real Continuous Monitoring (ConMon) with real-time visibility of cloud environments, as well as the need for machine-readable artifacts, automations, and streamlined security control assessments.

The modernization of vulnerability management, including factors such as attack paths, reachability, exploitability, known exploitation, and the importance of focusing on real risks versus noise.

Moving away from paper-based compliance exercises and bridging the gap between security and compliance.

Wiz’s role as a CVE Numbering Authority (CNA) and the broader CVE program, including its importance for both the Government and industry when it comes to vulnerability management.

The evolving usage of SBOMs and broader supply chain security.

Disjointed efforts around the Government at both the Federal and State levels when it comes to Continuous ATO (cATO) and how we can move towards a more cohesive approach to modern system assessment and authorization.

The importance of Government Affairs and bridging the divide between industry and Government, including bringing in tech leaders into Government, influencing policy, and improving outcomes for citizens and warfighters alike.

The dual-edged sword that is AI adoption in the public sector.

CISA Issues Emergency Directive to Mitigate Vulnerabilities in F5 Devices

CISA recently issued an Emergency Directive tied to nation state cyber threat actors compromising F5 systems and exfiltrating files, including the source code and vulnerability information for BIG-IP.

This will let the attacker conduct static and dynamic analysis of the source code to identify flaws and vulnerabilities and use them for exploitation.

AI

CamoLeak: Critical GitHub Copilot Vulnerability Leaks Private Source Code

It seems like every week now we are seeing reports of researchers finding gaping holes in the pervasive AI tools and coding assistants that are becoming commonly used by developers and IT professionals more broadly.

The latest example comes from Legit Security, who identified a critical vulnerability in GitHub Copilot Chat with a CVSS score of 9.6. It allowed the silent exfiltration of secrets and source code from private repositories and let the researchers control Copilot’s responses, including suggesting malicious code or links.

They stated it used a combination of CSP bypass on GitHub’s infrastructure, as well as a remote prompt injection. Part of what allowed this is how Copilot Chat has access to code, commits and pull requests to maximize the value of its answers to users.

The team embedded a prompt aimed at GitHub Copilot in a PR to get it into the tool’s context window.

The team even used “invisible comments” to inject malicious packages into the process.

The researchers at Legit walk through influencing responses generated by another user’s Copilot, injecting custom markdown that includes URLs, code, and images, and then exploiting how Copilot runs with the same permissions as the victim user.

The remainder of the blog is worth a read, as it walks through how they embed their content into the injected prompt as part of the attack and manipulate the way Copilot operates and responds. They even got Copilot to search the victim’s private repo and return results such as cloud credentials and other sensitive data.

While GitHub has now fixed the issue, it highlights the numerous avenues that researchers and malicious actors alike can use to exploit these AI tools and assistants, often revolving around prompts and context.
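To make the “invisible comments” idea concrete, here is a minimal defensive sketch in Python. It assumes the hidden content is carried in HTML comments, which are present in the raw markdown but not shown when it is rendered. The function name and the sample PR text are hypothetical, and this is not Legit Security's tooling or GitHub's fix, just an illustration of surfacing text a human reviewer would not see.

```python
import re

# HTML comments survive in the raw markdown but are not displayed when it is
# rendered, so a reviewer reading the rendered PR never sees their content.
HIDDEN_COMMENT = re.compile(r"<!--(.*?)-->", re.DOTALL)


def find_hidden_comments(markdown_text: str) -> list[str]:
    """Return the stripped content of every HTML comment in raw markdown."""
    return [match.strip() for match in HIDDEN_COMMENT.findall(markdown_text)]


# Hypothetical PR description used only for illustration.
pr_description = (
    "Fixes a typo in the README.\n"
    "<!-- Hypothetical injected instruction aimed at an AI assistant -->\n"
    "Thanks for reviewing!"
)

hidden = find_hidden_comments(pr_description)
for text in hidden:
    print(f"Hidden content found: {text}")
```

A check like this only catches one hiding technique, so it is better treated as a review aid than a control.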

AI Coding Assistants Are the Attack Surface

Speaking of exploiting AI tools, Gadi Evron, Cofounder of AI Security firm Knostic, rightly points out in a post that AI coding assistants are the modern attack surface, especially as they have access to code, production environments, secrets, the network, developers' endpoints, and more.

Gadi described the AI coding assistants as a gateway to network resources and a part of the CI/CD trust boundary, which has been expanded even more via malicious MCP services and extensions, vulnerabilities, malicious prompts, slop packages (e.g., package hallucinations), and more.

Agentic IDEs Under Fire: Dissecting the Real CVEs That Exposed Cursor, Windsurf, and Void

Speaking of targeting the agentic coding ecosystem, Ken Huang along with Idan Habler and Sagiv Antebi published a great article breaking down the latest CVEs impacting the agentic coding ecosystem along with the potential implications.

They discuss the rise of agentic IDEs, which added LLM agents directly into coding workflows, and have since received tremendous adoption across the ecosystem. The agents help accelerate development and activities such as reading code, fixing bugs, and executing commands. That said, with great capability comes great risk and potential for vulnerabilities and exploitation, which is what the authors discuss.

They take a look at recent CVEs impacting these agentic IDEs and produce a comprehensive threat model based on the real CVE data, using the MAESTRO 7-layer framework. If you’re unfamiliar with MAESTRO, I covered it in detail in a prior article of mine, titled “Orchestrating Agentic AI Securely: A look at the MAESTRO AI Threat Modeling Framework”.

A brief recap is that it includes 7 layers, covering everything from the foundation models through the agent ecosystem (which includes the agentic IDEs).

The authors discuss:

The Agentic IDE attack surface, including workspace files, MCP connectors and LLM planners

How real-world recent CVEs tie into this attack surface

Detection and response, including key indicators, sample SIEM rules and forensic artifacts

Mapping these sorts of incidents to their corresponding MAESTRO layers

Deja Vu in the Void: An Agentic IDE Compromised by Known Tricks

Speaking of compromising Agentic IDEs, Idan Habler, who contributed to the blog from Ken I shared above, also published a deep dive into how the Void Editor, a widely used open source IDE designed for “vibe coding”, was compromised.

Idan walks through how he was able to achieve sensitive data exfiltration and remote code execution in the Void Editor with known foundational vulnerabilities, demonstrating how the rapid rollout and adoption of Agentic AI coding platforms is apparently coming at the expense of foundational security.

Strengthening Board Oversight of AI

I recently came across a publication by Jim Routh and Mahi Dontamsetti and found it to be a valuable resource. They present a mnemonic for AI Governance and Security, dubbed the “A.I. GUARD Framework”.

It focuses on seven principles to help boards enhance their oversight of AI.

A - Advanced Board Understanding and Expertise in AI

I - Integrated AI Governance Framework

G - Governance with clearly defined Accountability & Authority

U - Understanding of Risk Appetite and Reporting across Enterprise

A - Accelerate Continuous Learning and Improvement

R - Respond to Incidents with Structure, Process, and Protocols

D - Disclose with Regulatory Readiness

It also includes a primer on GenAI and AI more broadly, along with relevant frameworks such as NIST AI RMF, EU AI Act, and industry parallels from Google, Microsoft, and others.

By now, we all agree that AI is a business imperative, which means boards need to be stewards of AI and the strategic risks and opportunities it presents to organizations.

AppSec

Ensuring the Longevity of the CVE Program

Hate it or love it, the Common Vulnerabilities and Exposures (CVE) program is an absolute cornerstone of the Vulnerability Management and application security (AppSec) community.

The program has grown tremendously since its inception in 1999, scaling from a few hundred CVEs to tens of thousands, and from a single or handful of CNAs to several hundred.

It is relied upon by countless vulnerability scanners and security tools, as well as nearly all organizations looking to mitigate cybersecurity risks. That said, it has faced significant hurdles over the years in terms of ownership, funding, transparency, data quality, and more.

Some of these issues have contributed to a proliferation of vulnerability databases, a lack of trust within the community, and ultimately increased complexity for organizations already overwhelmed by digital risks.

This paper by Ari Schwartz and Jessie Shen of the Center for Cybersecurity Policy and Law does a great job of both summarizing the CVE program’s history and potential considerations for its future.

This includes questions such as what combination of funding works best, the role of international Governments and global cooperation, how the CVE program itself should be structured, and other key questions for the future of this pivotal program.

LLM-Driven SAST

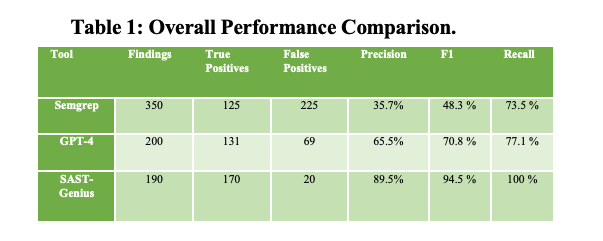

Everyone in AppSec understands that SAST is a critical aspect of ensuring a secure SDLC. That said, SAST, of course, comes with challenges, such as high false positive rates, which introduce burden and toil to developers, as well as problems in detecting classes of vulnerabilities, including complex logical flaws, multi-file dependencies, and more.

And, while LLMs are promising for many use cases, on their own, they aren’t a replacement for widely used AppSec tooling, such as SAST. But what if you combined the two? Can you achieve the best of both worlds and enhance security outcomes?

As it turns out, that answer is yes.

This research from Vaibhav Agrawal and team provided some exciting findings. Combining SAST and an LLM, they were able to reduce false positives by 91%.

They were also able to discover distinct classes of vulnerabilities that SAST or LLMs on their own would have missed, such as Directory Traversal, Insecure API Usage in Third-Party Libraries, and others.

This is really cool research, and it will be exciting to see the potential improvements in AppSec by combining LLMs with existing AppSec tooling and methodologies.
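As a rough illustration of the general idea (not the researchers' actual pipeline), the sketch below shows one way a SAST scanner's findings could be passed through a second-stage reviewer that discards likely false positives. Everything here is an assumption for illustration: the `Finding` fields, the `triage` helper, and especially `stub_reviewer`, which is a trivial stand-in for where an LLM call would go.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Finding:
    """A single SAST finding (fields are illustrative, not a real tool's schema)."""
    rule_id: str
    file: str
    line: int
    snippet: str


def triage(findings: list[Finding],
           is_true_positive: Callable[[Finding], bool]) -> list[Finding]:
    """Keep only the findings the second-stage reviewer judges to be real."""
    return [f for f in findings if is_true_positive(f)]


def false_positive_reduction(fp_before: int, fp_after: int) -> float:
    """Fraction of false positives removed by triage (0.0 to 1.0)."""
    if fp_before == 0:
        return 0.0
    return (fp_before - fp_after) / fp_before


def stub_reviewer(finding: Finding) -> bool:
    # Stand-in for an LLM judgment: treat input that is visibly sanitized
    # as a false positive. A real reviewer would reason over far more context.
    return "sanitize(" not in finding.snippet


findings = [
    Finding("path-traversal", "app.py", 10, "open(user_path)"),
    Finding("path-traversal", "app.py", 22, "open(sanitize(user_path))"),
]
kept = triage(findings, stub_reviewer)
print([f.line for f in kept])
```

Keeping the reviewer as a plain callable means the scanner output and the LLM can each be swapped or tested independently.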

The Future of AppSec in the Era of AI

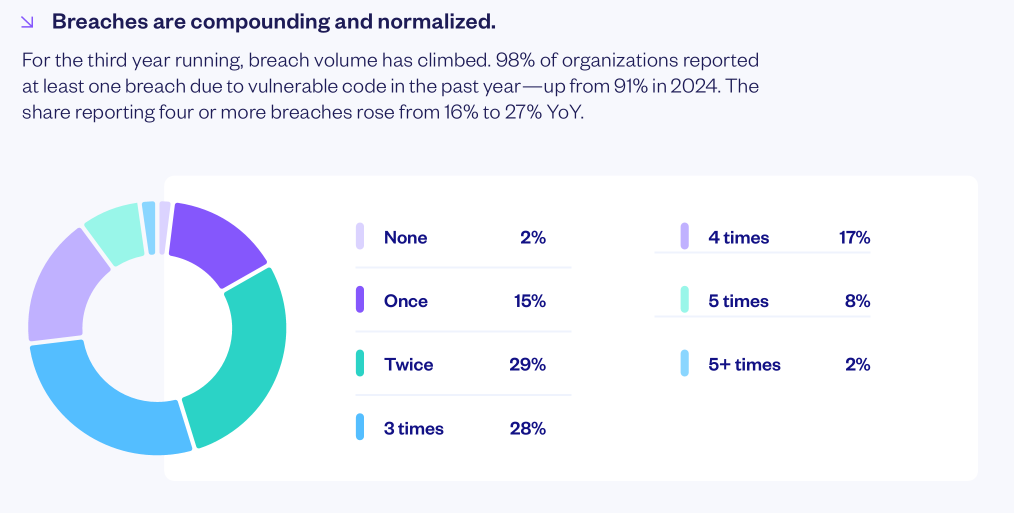

Checkmarx recently published an interesting report focusing on the future of AppSec in the era of AI, which included a survey of over 1,500 CISOs, AppSec leaders and developers across the globe. The subtitle of the report perfectly explains what is occurring right now, too: “When a False Sense of Security Meets Breakneck Developer Velocity”.

We know from other research and reports that we have a lethal combination of developers inherently trusting AI-generated code, coupled with an uptick in productivity, without any actual verification or security rigor - we can all guess where that leads us.

A snapshot of their key findings is below:

This shows that, despite headlines such as “Secure-by-Design” and other feel-good phrases, organizations are all-in on AI-generated code, even when it has vulnerabilities, and even when they experience an incident.

Another notable aspect of the report is the emphasis on AI essentially writing the code. As the report puts it, Developers are “just hitting deploy” (emphasis on no actual review or rigor).

Not only are half, and soon to be more, of all organizations using AI for code generation, but AI is also writing more than half of the code in those cases. Despite the rapid level of adoption, there is little actual rigor in terms of policy/process when it comes to tracking, auditing, and managing the code the AI writes, relying instead on blind implicit trust. Keep in mind that research continues to show AI-generated code is rife with vulnerabilities and risks.

Zero trust is dead.

Additionally, the hope that organizations and vendors would simply adopt Secure-by-Design out of the goodness of their hearts proved to be unfounded. The survey found 81% of respondents knowingly ship vulnerable code due to competing business priorities.

This is something I attempted to highlight in great detail in my piece, "Secure-by-Design Delusions.” Despite the best intentions and any public virtue signaling about security being a top priority, it always has, and always will, fall below competing business priorities.

Developers surveyed even admitted they “hoped the vulnerability would go unnoticed”.

Could you let that one sink in?

Vendors/developers are knowingly shipping vulnerable products and code, hoping that customers/consumers won’t notice. Worse yet, even if they do, nothing will change because there are no market incentives to change the behavior, nor sufficient regulatory forces.

And, as expected, breach volume continues to grow as a result of vulnerable products and code, with 98% of organizations reporting at least one breach due to vulnerable code, up from 91% in 2024. And it isn’t isolated; the number of respondents reporting four or more breaches rose from 16% to 27% YoY.

This report is saying the quiet part out loud when it comes to AI adoption and security.

Security, along with the people and community who care most about it, will often fall behind competing business priorities, the latest of which is AI adoption.

2025 Cloud Security Market Report

If you’ve been following the newsletter for some time, you’ve inevitably seen me share articles and resources from James Berthoty, and I have even had him join me on the Resilient Cyber Show multiple times.

He recently published a comprehensive Cloud Security Market Report, which I found interesting and incredibly detailed. I find his analysis so helpful that I’m a paid subscriber personally. The report covers cloud security trends, the various generations of CNAPPs, the future of cloud security, a buyer’s guide and vendor spotlights.

If you’re into cloud security, you should definitely check it out.

TigerJack Malware Campaign Hits Over 17,000 Developers

The team at Koi continues to do incredible research, identifying malicious extensions, packages, and MCP instances. Their latest find, which they dubbed “TigerJack”, involves malware hidden inside legitimate VS Code extensions, impacting tens of thousands of developers.

This involves a threat actor the Koi team was tracking that has infiltrated developer marketplaces with 11 malicious VS Code extensions across multiple publisher accounts.

Koi’s blog walks through how developers are unknowingly downloading and using malicious extensions and packages, leading to backdoors, crypto mining, key logging and more, putting their respective organizations at risk. Making these attacks even more insidious is the fact that the extensions functionally work as described, and are indeed helpful to developers, all while secretly carrying out malicious activities as well.

“Your complete source code gets packaged into JSON payloads and transmitted to multiple endpoints”

It’s easy to see how malicious extensions like this can put an organization’s IP, sensitive data and more at risk, all while seemingly providing value to developers who aren’t aware of how they are exposing their organizations to risk.
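One small, practical check teams could consider is comparing installed extensions against a list of known-bad IDs. The sketch below assumes the `publisher.name@version` lines printed by `code --list-extensions --show-versions`; the extension IDs and the blocklist are hypothetical placeholders, not the actual TigerJack extensions, and the helper names are my own.

```python
def parse_extensions(listing: str) -> dict[str, str]:
    """Parse `publisher.name@version` lines into {extension_id: version}."""
    installed: dict[str, str] = {}
    for line in listing.splitlines():
        line = line.strip()
        if not line:
            continue
        ext_id, _, version = line.partition("@")
        # Extension IDs are compared case-insensitively here.
        installed[ext_id.lower()] = version
    return installed


def flag_blocked(installed: dict[str, str], blocklist: set[str]) -> list[str]:
    """Return installed extension IDs that appear on the blocklist, sorted."""
    blocked = {entry.lower() for entry in blocklist}
    return sorted(ext_id for ext_id in installed if ext_id in blocked)


# Hypothetical listing and blocklist, for illustration only.
sample_listing = """
example-publisher.helper-tool@1.2.0
another-publisher.theme-pack@0.9.1
"""
blocklist = {"Example-Publisher.Helper-Tool"}

flagged = flag_blocked(parse_extensions(sample_listing), blocklist)
print(flagged)
```

A blocklist only helps after an extension has been identified as malicious, so it complements rather than replaces vetting what gets installed in the first place.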

Vulnerability Management Has Evolved

The problem is, most haven’t evolved with it.

As vulnerability backlogs grow exponentially, organizations deal with complex hybrid and multi-cloud environments, and teams drown in noise and toil, most are still clinging to legacy practices. That’s why I find Continuous Threat Exposure Management (CTEM) so appealing.

While some have heard of the term, most don’t know where to begin. Luckily, there are resources out there, such as Zafran Security’s Practical Guide for Evolving from VM to CTEM. I break down the guide step-by-step in my latest article, including:

The 5 stages of CTEM.

The 4 stages of exposure management maturity, including factors such as asset inventory, prioritization and exposure hunting.

Effective communication and workflows to facilitate not just finding issues, but fixing them, e.g., Remediation Operations (RemOps).

Be sure to check out the full write-up and guide, as it helps organizations pivot from a reactive to a proactive model of risk management. As someone who’s written a book on Vulnerability Management, this evolution is long overdue.

Vulnerability Scoring System Sprawl

As it turns out, it isn’t just security tools or vulnerabilities that are sprawling. Vulnerability scoring systems themselves are also spiraling out of control as we continue to see new ones emerge. Each has its own unique scoring criteria, considerations, levels of severity, focus and more.

My friend Patrick Garrity recently shared a repo with a list of vulnerability scoring systems, and well, it’s…a lot.

It reads like an acronym soup 🍜. CVSS, EPSS, LEV, AIVSS, SSVC and VISS, just to name a few.

While it is true many of them have limited adoption or use, several of them do, making the space of vulnerability management even more complicated than it already is.
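To show why juggling multiple scores adds complexity, here is a minimal sketch that combines three signals into a single priority bucket: a CVSS base score (0 to 10), an EPSS probability (0 to 1), and a known-exploitation flag. The thresholds, bucket names, and CVE IDs are hypothetical choices made for illustration, not guidance from any of the scoring systems.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vuln:
    cve_id: str
    cvss: float            # CVSS base score, 0.0 to 10.0
    epss: float            # EPSS probability, 0.0 to 1.0
    known_exploited: bool  # e.g., evidence of exploitation in the wild


def bucket(v: Vuln) -> str:
    """Assign a priority bucket using illustrative, hypothetical thresholds."""
    if v.known_exploited:
        return "urgent"
    if v.cvss >= 7.0 and v.epss >= 0.10:
        return "soon"
    return "backlog"


# Placeholder CVE IDs and scores, for illustration only.
vulns = [
    Vuln("CVE-0000-0001", cvss=9.8, epss=0.02, known_exploited=False),
    Vuln("CVE-0000-0002", cvss=6.5, epss=0.01, known_exploited=True),
    Vuln("CVE-0000-0003", cvss=8.1, epss=0.45, known_exploited=False),
]

buckets = {v.cve_id: bucket(v) for v in vulns}
print(buckets)
```

Note that in this sketch the highest CVSS score lands in the backlog while a medium-severity but exploited issue is urgent, which is exactly the kind of disagreement between systems that teams have to reconcile.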