FedRAMP Vulnerability Management Evolution

A look at FedRAMP's Long Overdue Vulnerability Management Evolution

If you’ve been paying attention to the vulnerability management landscape over the last several years, you’ve seen it undergo a painful but long-needed evolution. Organizations continue to drown in vulnerability backlogs of hundreds of thousands to millions of findings, and practitioners are unsure where to begin, largely because of legacy vulnerability management practices.

Slowly, we’ve seen the industry move towards context-rich vulnerability management methods, which account for known exploitation, exploitability, reachability, mitigations, and organizational context, such as business criticality and data sensitivity.

While several of these factors should always have been part of vulnerability management methodologies, and may have been for some outliers, most organizations have relied on legacy practices, such as prioritizing remediation by base CVSS scores, which is a flawed approach.

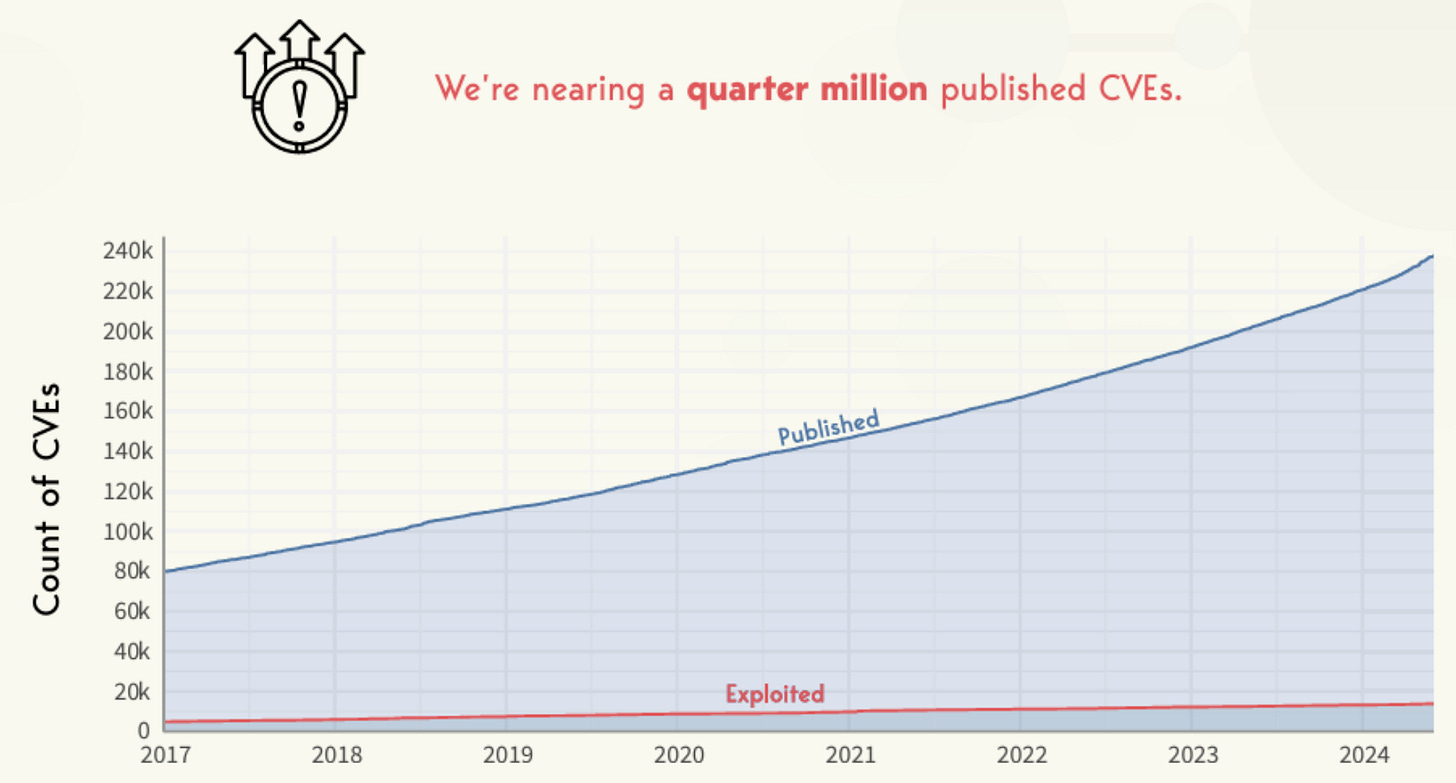

The problem with this, of course, is that it accounts for none of the factors I mentioned above. It also ignores realities such as the fact that fewer than 5% of CVEs in a given year are ever exploited, meaning the traditional base-score approach to remediation is incredibly inefficient and ineffective, and diverts resources from real risks.

So while CVEs continue to climb, prioritizing based on CVSS base scores alone has teams wasting tremendous amounts of time on findings that are never exploited.

Most large compliance frameworks have yet to evolve with this reality, which is why it is so refreshing to see FedRAMP release RFC-0012 Continuous Vulnerability Management Standard, which we will examine in this article.

For anyone who’s followed me for some time, you know that FedRAMP and Vulnerability Management are near and dear topics to me. Previously, I served as a federal employee at the General Services Administration (GSA), the agency that leads the FedRAMP program. I’ve also helped many agencies and CSPs navigate FedRAMP requirements, including vulnerability management.

Additionally, I’m the coauthor of “Effective Vulnerability Management: Managing Risk in the Vulnerable Digital Ecosystem”.

For those looking to learn more about FedRAMP, I’ve written about it, including its challenges, extensively in other articles, such as:

For those unfamiliar with it, the Federal Risk and Authorization Management Program (aka FedRAMP) is a program the federal government uses to adopt cloud services and offerings securely. It is oriented around NIST 800-53 and its associated security control baselines (Low, Moderate, and High).

With that out of the way, let’s examine this latest RFC and why the changes are both needed and valuable.

If you’re more of a visual/audio learner or fan, I also joined Ron Harnik to dive into this topic on a livestream this past Friday:

FedRAMP Continuous Vulnerability Management Standard

It’s worth noting that the new continuous vulnerability management standard isn’t finalized, but it is a strong indicator of where the program is headed. The FedRAMP PMO is currently accepting public comments and feedback.

FedRAMP provides a summary and motivation of the RFC as laid out below:

This standard’s intent is to ensure providers promptly detect and respond to critical vulnerabilities by considering the entire context over Common Vulnerability Scoring System (CVSS) risk scores alone, prioritizing realistically exploitable weaknesses, and encouraging automated vulnerability management. It also aims to facilitate the use of existing commercial tools for cloud service providers and reduce custom government-only reporting requirements.

The RFC provides a lot of helpful additional information, such as definitions and terminology, but I won’t be diving into that here, as it is primarily helpful for folks unfamiliar with some of the terms used.

The RFC also lays out various requirements for ALL CSPs authorized under FedRAMP, such as:

Establishing and maintaining programs to meet the requirements in the standard, including detecting, evaluating, reporting, mitigating, and remediating vulnerabilities

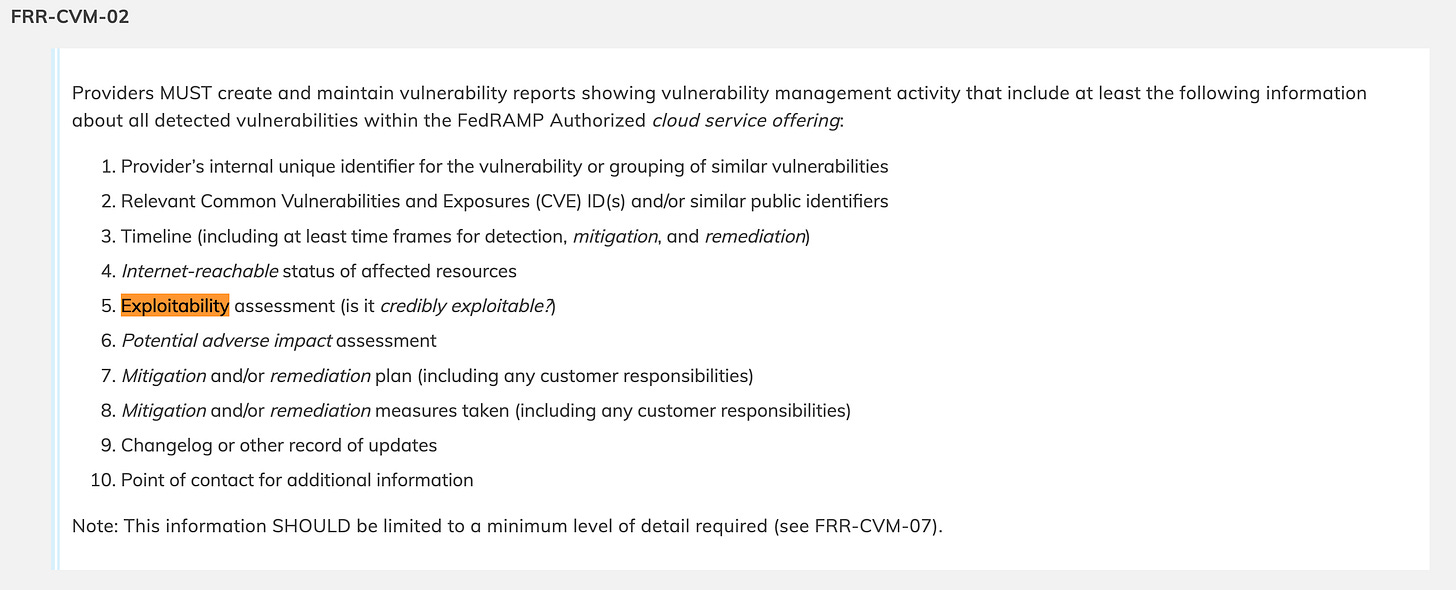

Creating and maintaining vulnerability reports that provide sufficient metadata (e.g., CVE IDs, unique identifiers, exploitability assessment, potential impacts, etc.)

Many other requirements, tied to KEVs, vulnerability discovery, reporting, mitigations, and more.
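To make the reporting requirement a bit more tangible, here is a minimal sketch of what a machine-readable vulnerability record could look like. The field names are my own illustration, loosely based on the metadata examples above; they are not taken from the RFC, which should be consulted for the actual required fields.

```python
import json
from dataclasses import dataclass, field, asdict
from typing import List, Optional


@dataclass
class VulnerabilityRecord:
    """One finding in a report. All field names here are hypothetical."""
    finding_id: str            # provider's own unique identifier
    cve_id: Optional[str]      # None when the finding has no CVE assigned
    affected_asset: str        # where the vulnerability was detected
    exploitability: str        # outcome of the exploitability assessment
    potential_impact: str      # short description of the potential impact
    in_kev: bool = False       # listed in CISA's KEV catalog?
    notes: List[str] = field(default_factory=list)


def to_report(records: List[VulnerabilityRecord]) -> str:
    """Serialize records to JSON so existing tooling can consume them."""
    return json.dumps([asdict(r) for r in records], indent=2)
```

Keeping the record flat and JSON-serializable is a deliberate choice in this sketch, since the RFC's stated aim is to lean on existing commercial tools rather than custom, government-only formats.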

I don’t want to belabor all of the new/proposed requirements. Instead, I want to focus on the new context FedRAMP is adding, which is long overdue both for the way FedRAMP approaches vulnerability management and for how the industry as a whole approaches the practice. I’ll be highlighting some of them below.

First is the aspect of “Exploitability Assessment” in FRR-CVM-02.

This is a loaded term because a lot can go into whether something is credibly exploitable. Still, some common factors include whether it is known to be exploited, for example by appearing in CISA’s Known Exploited Vulnerabilities (KEV) catalog. The increasingly popular Exploit Prediction Scoring System (EPSS) also provides a score between 0 and 1 estimating how likely a vulnerability is to be exploited in the next 30 days. Other factors include whether it is reachable (more to come on this later).

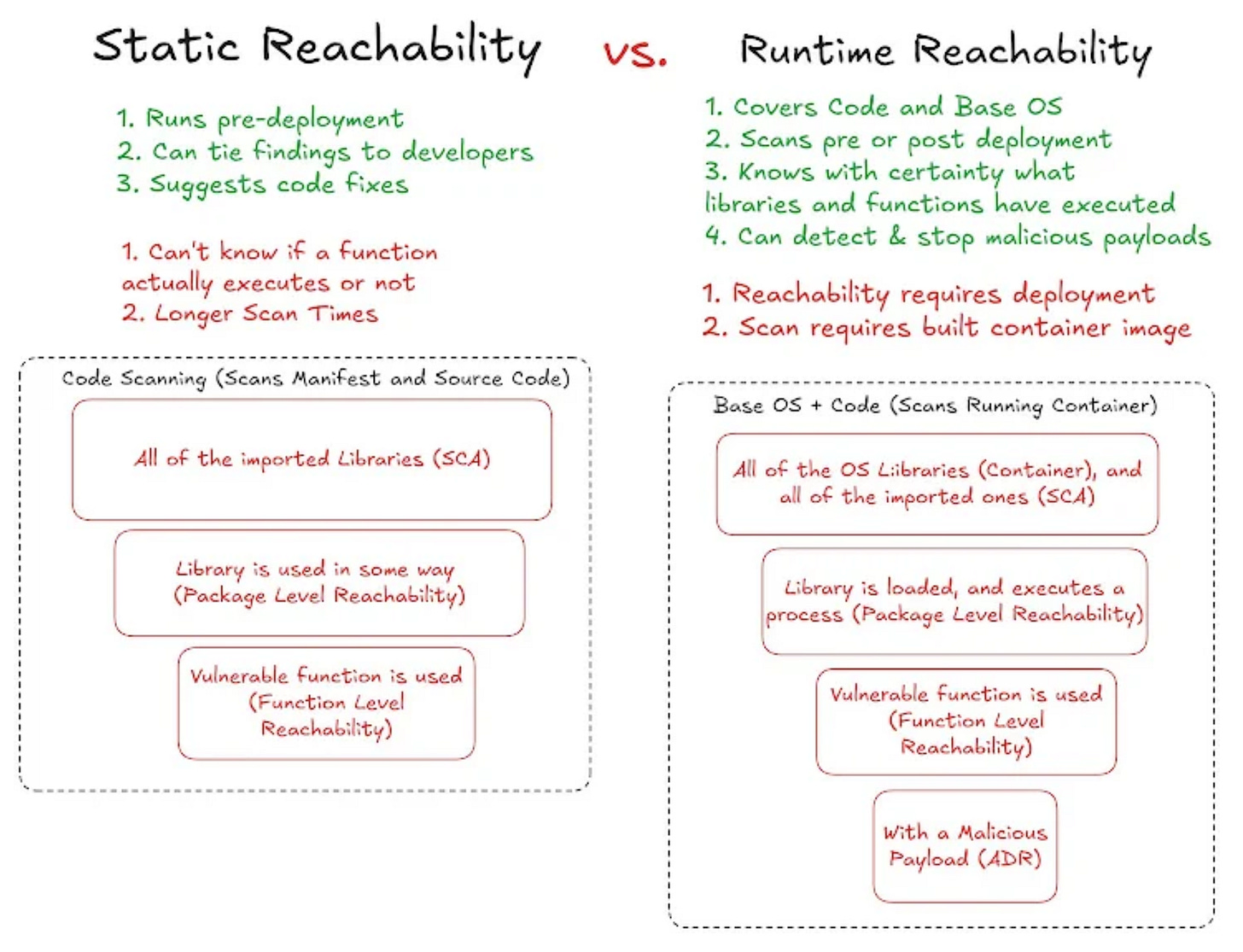

This may include whether it is Internet-accessible, whether a library is loaded, whether a function is called, or whether compensating controls in your architecture would make something unreachable and, therefore, unable to be exploited.
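As a rough illustration of how these signals might be combined, here is a small Python sketch. The `Signals` fields, the labels, the ordering of the checks, and the 0.1 EPSS threshold are all my own assumptions for illustration; neither the RFC nor EPSS prescribes them.

```python
from dataclasses import dataclass


@dataclass
class Signals:
    """Inputs to a hypothetical exploitability assessment."""
    in_kev: bool                # listed in CISA's KEV catalog
    epss: float                 # EPSS score, between 0 and 1
    internet_accessible: bool   # exposed to the Internet
    function_reachable: bool    # vulnerable function is actually called
    compensating_control: bool  # a control blocks the attack path


def assess_exploitability(s: Signals, epss_threshold: float = 0.1) -> str:
    """Return a coarse label; the threshold is an assumed, tunable value."""
    # Unreachable or blocked code cannot be exploited in this environment.
    if s.compensating_control or not s.function_reachable:
        return "not credibly exploitable"
    # Known exploitation in the wild outranks any prediction.
    if s.in_kev:
        return "known exploited"
    # Elevated predicted likelihood plus external exposure.
    if s.epss >= epss_threshold and s.internet_accessible:
        return "likely exploitable"
    return "possibly exploitable"
```

Checking reachability first is a design choice in this sketch: it reflects the idea that even a KEV-listed vulnerability cannot be exploited if the affected code can never be reached. Some teams may prefer to surface KEV entries regardless.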

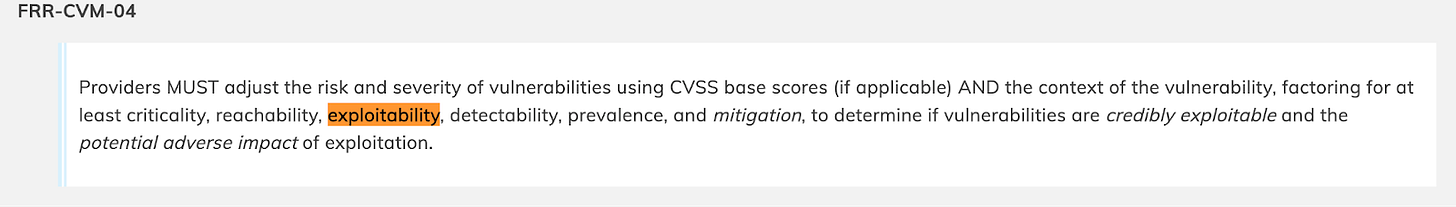

Second is FRR-CVM-04.

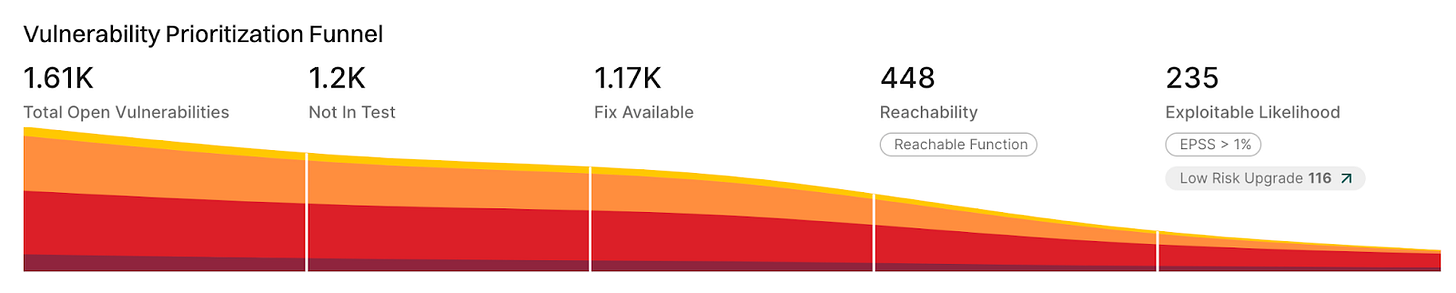

Here you see FedRAMP stressing that providers MUST adjust the risk and severity of vulnerabilities using not just CVSS but also context such as reachability and exploitability. Again, this is a long overdue change because fewer than 5% of CVEs annually ever see exploitation activity. When you adjust for factors such as reachability, that number drops even further. Accounting for these factors helps development and engineering teams focus on real risks to the organization, rather than blindly trying to remediate “all the highs and criticals” based on CVSS base scores.
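Here is a minimal sketch of what adjusting severity with context could look like. The rating bands follow the standard CVSS v3.x qualitative scale, but the adjustment rules (raise one level if known exploited, lower two levels if unreachable) are purely my own assumptions, not something the RFC specifies.

```python
LEVELS = ["none", "low", "medium", "high", "critical"]


def cvss_rating(score: float) -> str:
    """Map a CVSS v3.x base score to its qualitative rating."""
    if score == 0.0:
        return "none"
    if score < 4.0:
        return "low"
    if score < 7.0:
        return "medium"
    if score < 9.0:
        return "high"
    return "critical"


def adjusted_rating(score: float, reachable: bool, known_exploited: bool) -> str:
    """Shift the base rating using context (hypothetical adjustment rules)."""
    if score == 0.0:
        return "none"
    idx = LEVELS.index(cvss_rating(score))
    if known_exploited:
        idx += 1   # assumed: known exploitation raises severity one level
    if not reachable:
        idx -= 2   # assumed: unreachable code lowers severity two levels
    # Clamp between "low" and "critical" for any nonzero base score.
    idx = max(1, min(idx, len(LEVELS) - 1))
    return LEVELS[idx]
```

With these assumed rules, a 9.8 “critical” in unreachable code would be treated as “medium”, while a 7.5 “high” that is reachable and known to be exploited would be treated as “critical”.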

This is an industry-wide change that is desperately needed and something the Endor Labs team has been championing for years. Endor Labs offers robust reachability analysis in our Software Composition Analysis (SCA) platform, looking into factors related to vulnerabilities such as:

Is it in the production code?

Is there a fix available?

Is the affected function reachable?

Is there a high probability of an exploit (e.g., a high EPSS score)?

This is depicted well in what we dub the “Vulnerability Prioritization Funnel”, as seen below:
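To make the funnel idea concrete, here is a rough Python sketch that applies the four questions above as successive filters and records how many findings survive each stage. The `Finding` fields, the stage order, and the 0.1 EPSS cutoff are assumptions for illustration only; this is not how the Endor Labs platform is implemented.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Finding:
    cve_id: str
    in_production: bool
    fix_available: bool
    function_reachable: bool
    epss: float  # EPSS score, between 0 and 1


# Each stage is a (label, predicate) pair; the order is an assumption.
STAGES: List[Tuple[str, Callable[[Finding], bool]]] = [
    ("in production code", lambda f: f.in_production),
    ("fix available", lambda f: f.fix_available),
    ("function reachable", lambda f: f.function_reachable),
    ("high EPSS", lambda f: f.epss >= 0.1),  # assumed cutoff
]


def funnel(findings: List[Finding]):
    """Return the surviving findings and the count left after each stage."""
    remaining = list(findings)
    counts = [("all findings", len(remaining))]
    for label, keep in STAGES:
        remaining = [f for f in remaining if keep(f)]
        counts.append((label, len(remaining)))
    return remaining, counts
```

The per-stage counts are the useful part: they show how quickly the backlog shrinks as each piece of context is applied.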

For a deeper dive on Reachability Analysis, I strongly recommend checking out my friend James’s articles.

In these articles, James discusses fundamental topics such as why reachability is key for vulnerability prioritization, and the reality that if something isn’t reachable, then it isn’t exploitable and shouldn’t be prioritized above findings that are reachable, known to be exploitable, or likely to be exploitable, which is something I have emphasized in several articles of my own as well.

James also highlights key topics such as Static vs. Runtime Reachability, see below:

As well as different runtime reachability approaches, and how trustworthy they are when it comes to being prioritized.
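As a simplified illustration of the distinction, static reachability can be thought of as asking whether a path exists from an application entry point to the vulnerable function in the call graph, while runtime reachability asks whether that function was actually observed executing. The sketch below assumes a pre-built call graph and a set of observed calls; real tools derive these from program analysis and instrumentation, which is where most of the difficulty lies.

```python
from collections import deque
from typing import Dict, Iterable, List, Set


def statically_reachable(call_graph: Dict[str, List[str]],
                         entry_points: Iterable[str]) -> Set[str]:
    """Breadth-first search over a caller -> callees mapping."""
    seen = set(entry_points)
    queue = deque(seen)
    while queue:
        caller = queue.popleft()
        for callee in call_graph.get(caller, ()):
            if callee not in seen:
                seen.add(callee)
                queue.append(callee)
    return seen


def classify(function: str, static_set: Set[str], observed: Set[str]) -> str:
    """Combine static and runtime evidence for one vulnerable function."""
    if function in observed:
        return "reachable (observed at runtime)"
    if function in static_set:
        return "reachable (static path exists)"
    return "no evidence of reachability"
```

For example, with `call_graph = {"main": ["parse"], "parse": ["lib.decode"]}` and `entry_points = ["main"]`, the function `lib.decode` is statically reachable even if it has never been observed running.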

These latter points about runtime reachability speak to a broader industry trend, which is moving from a heavy emphasis on “shift left” security to also focusing on runtime, with the rise of categories such as Application Detection & Response (ADR). I’ve written about this in articles such as “How ADR Addresses Gaps in the Detection & Response Landscape: A look at the emerging category of Application Detection & Response (ADR)”.

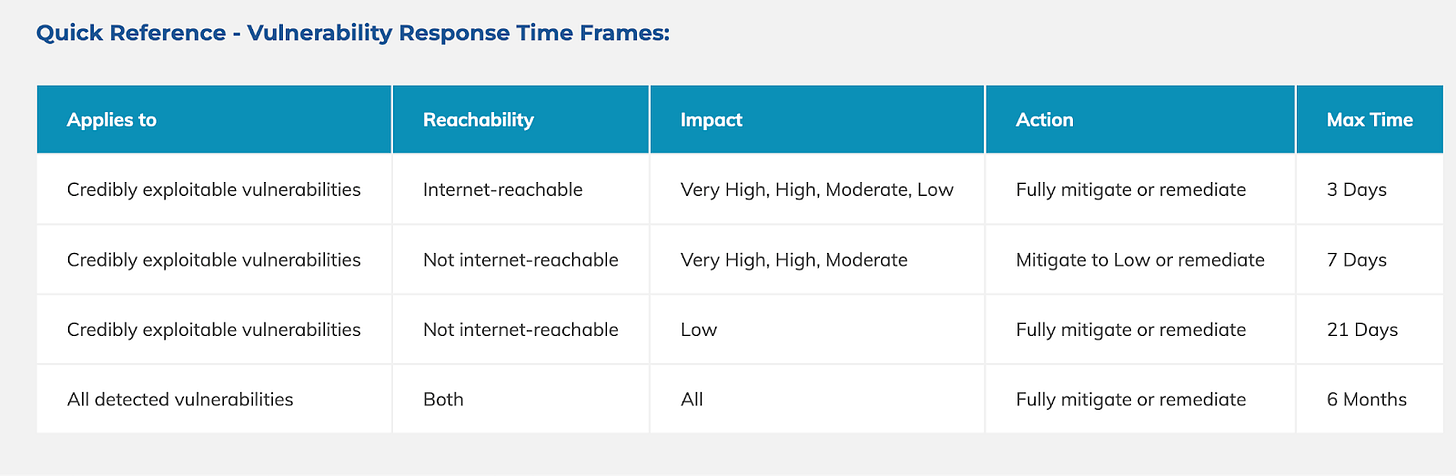

Vulnerability Response Times

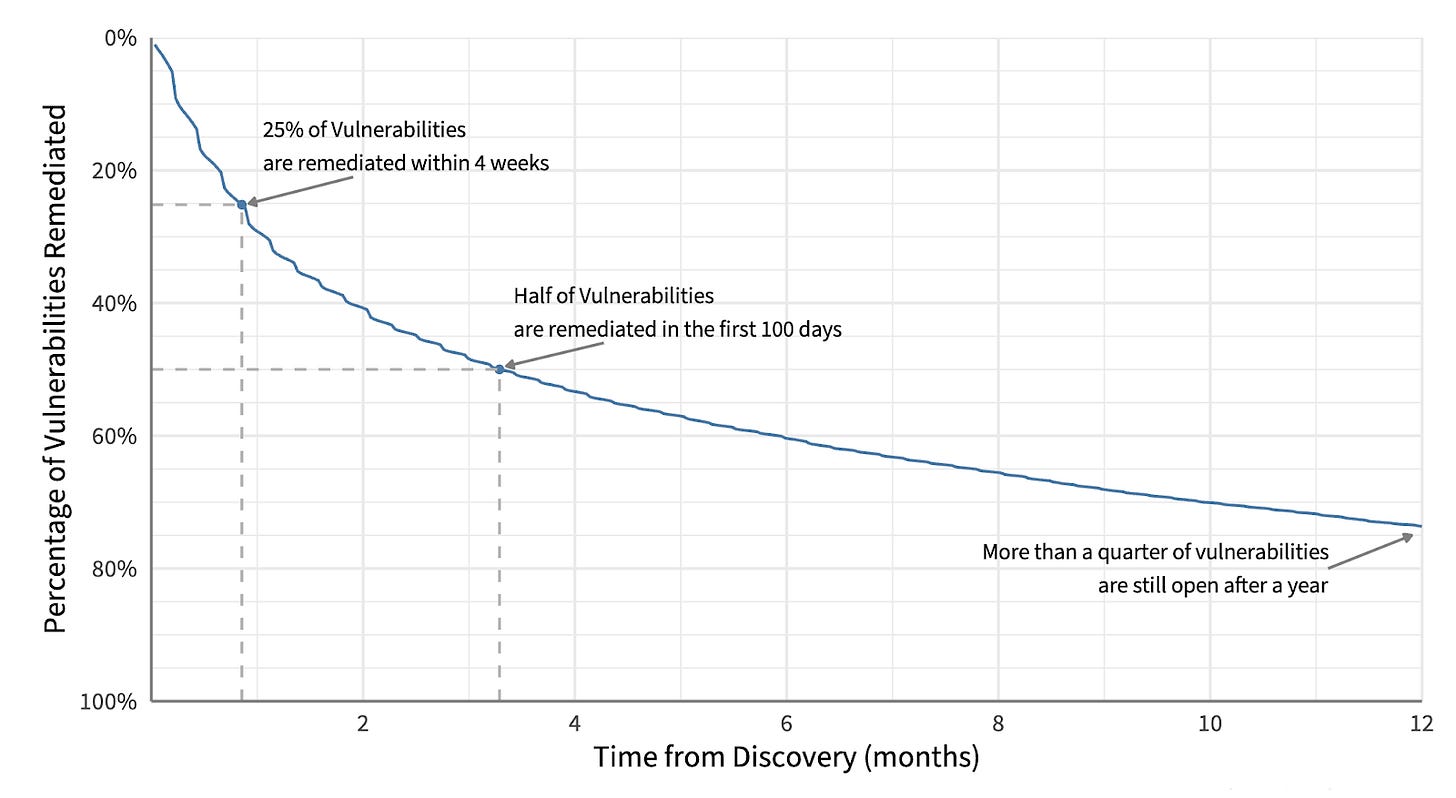

FedRAMP’s new continuous vulnerability management guidance then lays out tentative vulnerability response timeframes. Many may view these as aggressive, especially given the massive vulnerability backlog, competing priorities for developers, limited bandwidth, concerns about disrupting production, and more.
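Tracking response timeframes like these is mostly date arithmetic. Here is a small sketch of how a due date and overdue check could be computed. The day counts in `PLACEHOLDER_SLA_DAYS` are made-up placeholder values for illustration, not the timeframes proposed in the RFC; substitute the real values from the standard.

```python
from datetime import date, timedelta
from typing import Dict

# Placeholder values only. These are NOT the RFC's proposed timeframes.
PLACEHOLDER_SLA_DAYS: Dict[str, int] = {
    "critical": 3,
    "high": 7,
    "medium": 21,
    "low": 60,
}


def due_date(detected: date, severity: str,
             sla_days: Dict[str, int] = PLACEHOLDER_SLA_DAYS) -> date:
    """Date by which a finding of this severity must be addressed."""
    return detected + timedelta(days=sla_days[severity])


def is_overdue(detected: date, severity: str, today: date,
               sla_days: Dict[str, int] = PLACEHOLDER_SLA_DAYS) -> bool:
    """True once today is past the due date."""
    return today > due_date(detected, severity, sla_days)
```

The severity passed in here would be the context-adjusted severity rather than the raw CVSS rating, so the clock only runs fast on findings that actually matter.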

Some may say “just patch the findings,” but any practitioner knows it isn’t that simple, and the research bears that out.

For example, Cyentia Institute found that 25% of vulnerabilities are remediated within the first 4 weeks, while half are remediated within the first 100 days, and nearly 25% of all vulnerabilities remain open after a year.

To meet these sorts of SLAs from FedRAMP, organizations need to lean into innovative approaches. For example, we’ve seen widespread adoption of Chainguard Images (with several vendors now going to market with a similar offering), which are minimal, zero-CVE images with industry-leading SLAs that aim to minimize the cost of CVEs, something I discussed in depth in a recent article titled “CVE Cost Conundrums”.

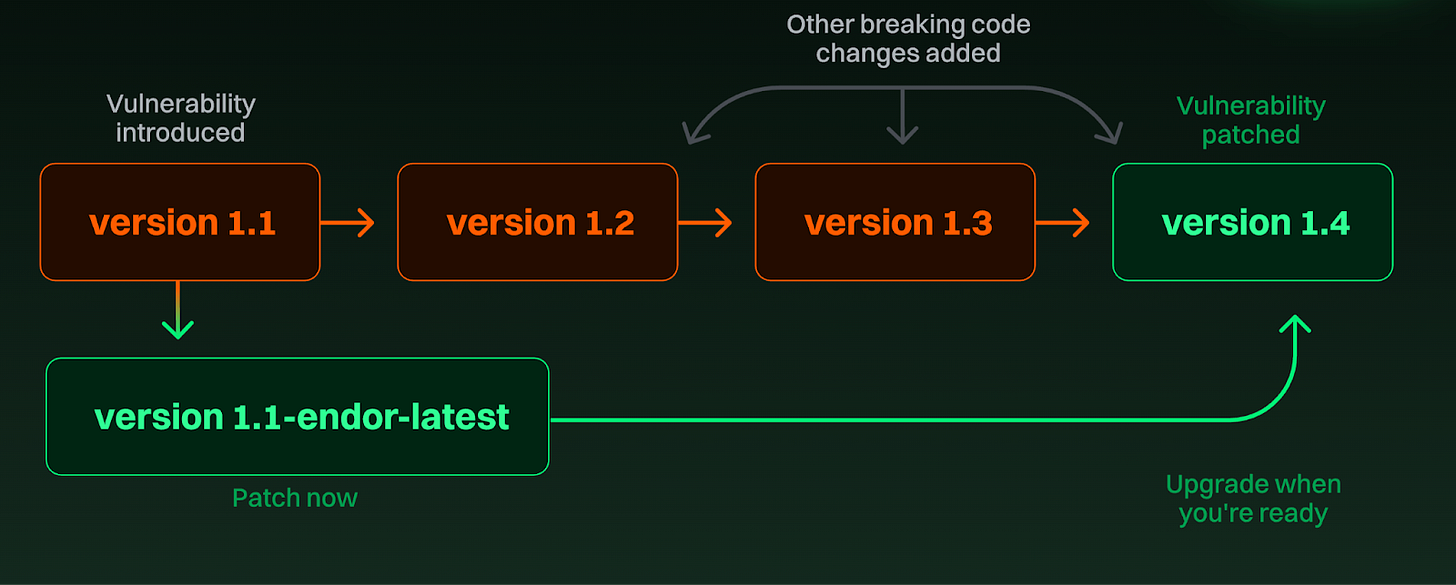

Endor Labs also provides an offering dubbed “Endor Patches”, aimed at helping organizations meet strict regulatory vulnerability remediation SLAs. It allows organizations to take the security fix from the latest version of an open source project and apply it to the older versions they’re already using. This is incredibly helpful because organizations are often running open source dependencies that are multiple versions behind the version with the security fix, and upgrading may introduce breaking changes that disrupt the business, or in other words, affect the “A” in the CIA triad.

As I discussed in my article “The 2025 Open Source Security Landscape”, 90% of codebases contain components that are more than 10 versions behind the most current version, so this problem is pervasive. It is due to factors such as organizations lacking the remediation capacity, or being reluctant due to the breaking changes I mentioned above. Endor Patches helps tackle this challenge directly.

These innovative offerings and others that are similar strive to provide organizations with an opportunity to offload the burden of strict vulnerability management remediation SLAs and requirements, often driven by regulatory frameworks and customer requirements.

Closing Thoughts

The FedRAMP Continuous Vulnerability Management RFC represents a systemic shift in how one of the industry’s leading compliance frameworks approaches AppSec and vulnerability management. It pivots away from legacy approaches and focuses instead on rich context tied to exploitation, reachability, and more.

However, to make this shift and meet the desired SLAs, organizations will need comprehensive AppSec tooling capable of providing this context. That will also be a welcome change for developers and engineering teams, who can instead focus on the ~8% of vulnerabilities that pose real organizational risks.

With the rise of AI-driven development, copilots, LLMs and developers utilizing AI to produce more software faster than ever, legacy approaches to AppSec won’t suffice, especially when it comes to vulnerability management.

As the federal government continues to adopt more innovation at speed, every company that cares about its impact in the AI-powered world needs to be able to participate, and that requires having the right tooling and capabilities to provide context-rich findings that are actionable.