AI's Intersection with Trust

A look at Vanta's 2025 State of Trust Report

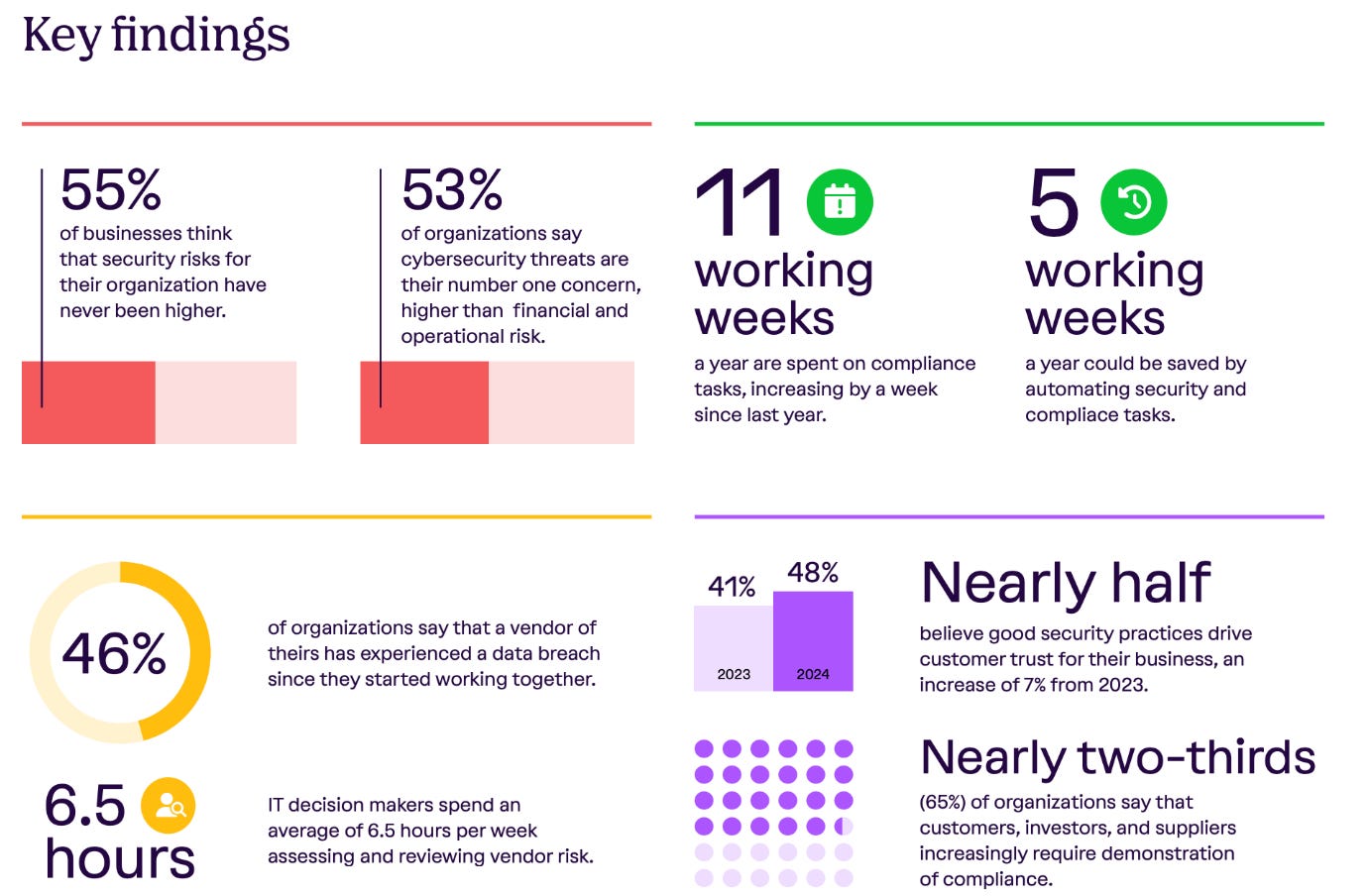

If you’ve been following me for some time, you know that in 2024, I covered Vanta’s State of Trust report. Last year’s report had a lot of interesting findings, summarized in the image below:

Key themes included heightened concern about cyber risk. At the same time, many organizations admitted to spending an inordinate amount of time on manual compliance tasks and activities, creating a pressing need for automation and innovation.

Another key theme was the acknowledgment that security helps drive customer trust, as well as the need to effectively demonstrate compliance to various types of third parties.

2025 - Where’s the Focus Now?

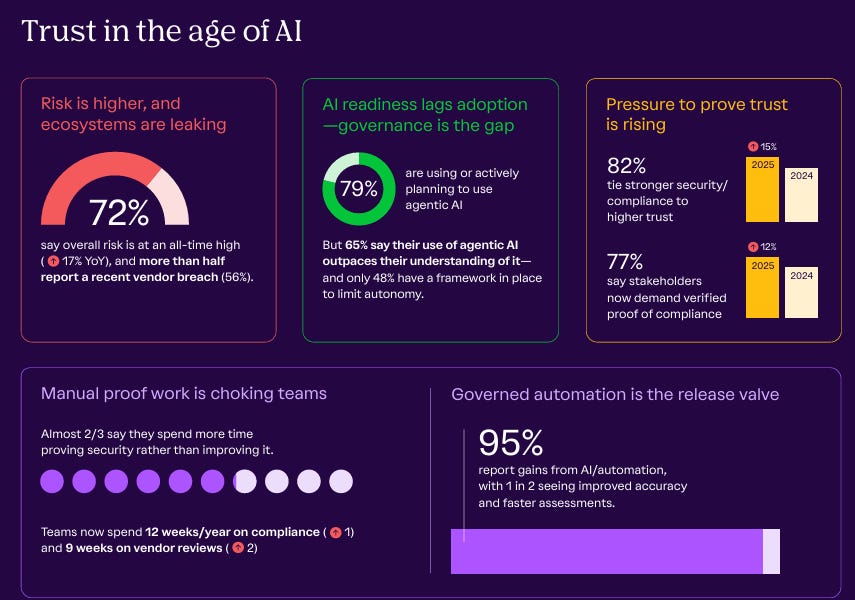

A lot can change in a year, for better or for worse. The key findings in Vanta’s 2025 State of Trust Report show that some organizations are still plagued by the problems of the past, which have only been exacerbated. However, we also see some forward-leaning innovators reaping the rewards of modernization.

Key Findings

Before diving into the specifics, let’s take a look at the key findings:

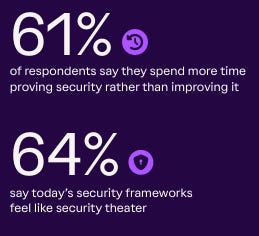

Several problematic themes emerge here. Compliance, often conflated with security, suffers from a reputation captured in phrases such as “security theater.” The report seems to support this, not only in perception but also in reality: teams spend nine working weeks a year on vendor security reviews and risk assessments. These often involve futile exercises, such as reviewing security questionnaires and completing paper-based, check-the-box activities.

More broadly, compliance continues to consume a growing share of organizations’ time, accounting for almost a quarter of their working weeks, up from last year. This isn’t to say that compliance is inherently bad, but the way it is conducted in practice is stuck in the dark ages. While the industry has embraced microservices, cloud, APIs, AI, and automation, many organizations still handle compliance through static documentation, snapshot-in-time assessments, and manual, cyclical activities that don’t reflect the real state of their systems.

AI is creating an uncomfortable dichotomy. On one hand, some organizations, driven partly by excitement and partly by fear of missing out (FOMO), are rapidly adopting AI and Agentic AI without the expertise to govern or secure it.

At the same time, others are incredibly optimistic about AI’s ability to improve security team effectiveness and accelerate activities such as risk assessments and incident response.

This reflects the double-edged nature of AI in security as a whole: it accelerates exploitation timelines and enhances attacker capabilities, while also showing great promise for automating traditional security activities and bolstering cyber defenses.

Digging Deeper

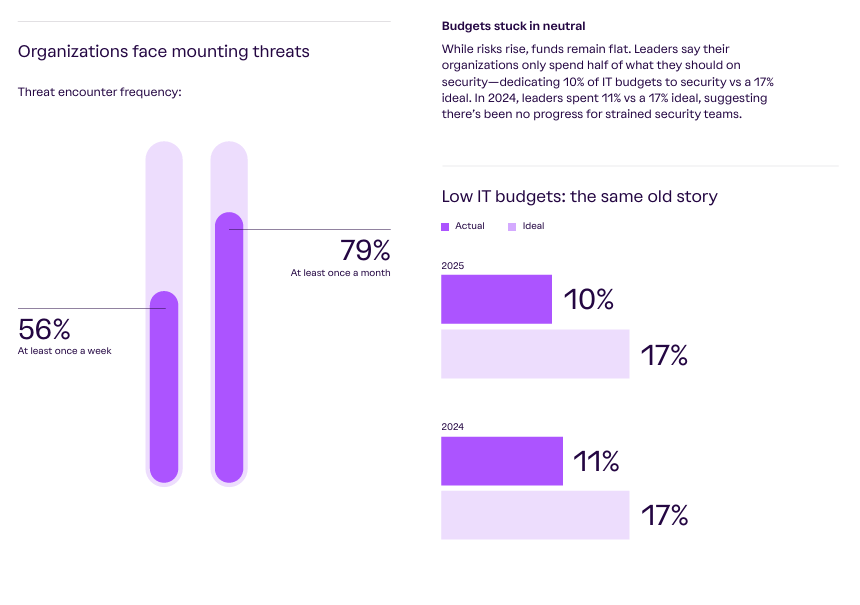

As we delve deeper into Vanta’s 2025 State of Trust Report, we see that the challenges aren’t just technical but also financial. Security leaders are facing an increasingly complex risk landscape and an expanding attack surface. While the risks may be increasing, budgets are not: many respondents say budgets have been flat or below what they consider adequate, given the threat landscape and their assessment of risk to the organization.

This aligns with other resources I’ve shared recently, such as the IANS 2025 Security Software and Services Benchmark Report.

More than half of organizations encounter threats weekly, and nearly all experience a threat monthly. Nearly 3 in 4 (72%) say the risks have never been higher for their organizations, all while budgets are actually declining year over year and security struggles to communicate ROI and cost efficiency.

Risks are up, budgets aren’t.

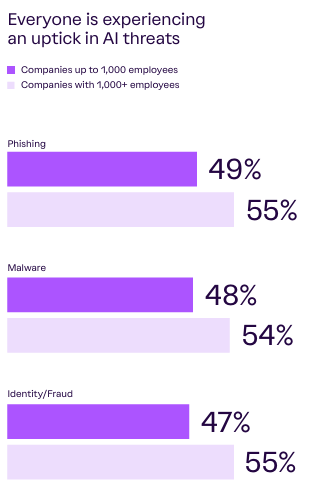

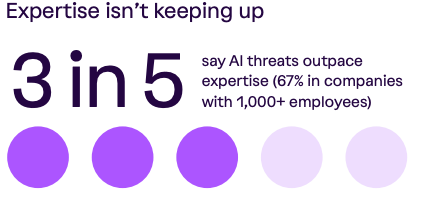

Another critical finding in this year’s report is a glaring AI readiness gap. Organizations are encountering not just more attacks, but more AI-driven attacks, ranging from phishing to fraud and malware.

This aligns with reports from leading frontier model providers such as Anthropic, whose August 2025 “Threat Intelligence Report” highlighted how attackers have attempted to use its platform across the full attack lifecycle and in complex attack campaigns.

Unsurprisingly, organizations don’t have the internal expertise to keep up with the AI threats:

We know there’s an AI talent war among the leading AI and tech companies, and the shortage is even more acute in broader enterprise environments, where only a small portion of the workforce has expertise in this emerging technology.

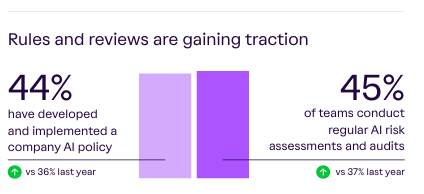

This leaves organizations struggling with AI-related threats as they continue to rely on traditional controls such as signatures, static boundary protection, and manual review. All hope isn’t lost, though: Vanta’s report found an increase both in teams conducting AI risk assessments and audits and in organizations formalizing company-wide AI policies.

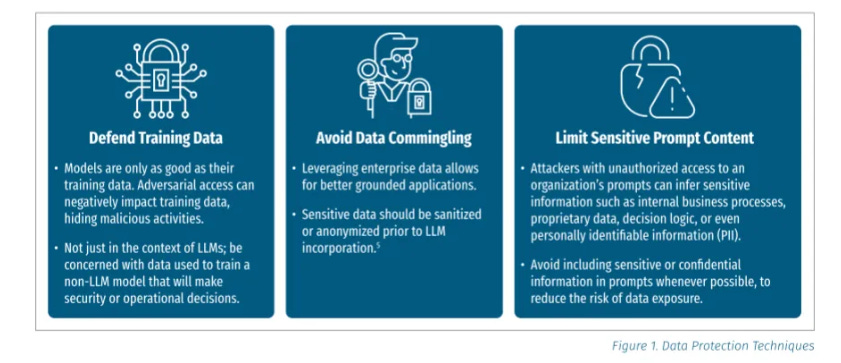

These policies include guidelines on data usage, which matters given the many headlines about employees inadvertently exposing sensitive data, such as IP, financial information, source code, and even customer data, to external LLMs and service providers. I have covered these topics in depth previously, including resources such as the SANS Critical AI Security Guidelines, which include a category focused on data protection and key activities such as defending training data, avoiding data commingling, and limiting sensitive prompt content.

Failing to follow these steps has led to LLM data leakage, where adversaries craft prompts that induce LLMs to leak sensitive data, as well as incidents where employees expose sensitive corporate data to public LLMs.

Diving further into AI-specific findings, the State of Trust Report highlights that “agentic AI adoption is high but control is low.” It states that 8 in 10 organizations are using or plan to use agentic AI; however, 65% admit that adoption outpaces their understanding of the technology, mirroring the expertise gap seen with AI more broadly.

Even in the absence of a clear understanding, organizations are buying into the hype surrounding Agentic AI, including in security, with those surveyed hoping it can be used for everything from forensic log analysis to automated vulnerability scanning and prioritization.

The excitement expressed by those surveyed aligns with the broader industry enthusiasm surrounding Agentic AI, including that of venture capitalists, startups, and industry leaders. I discussed this in detail at the start of 2025 in an article titled “Agentic AI’s Intersection with Cybersecurity”, where I showed how excited investors were about the potential total addressable market (TAM) of agents. In cybersecurity, we saw innovation and funding in areas such as AppSec, GRC, and SecOps, to name a few.

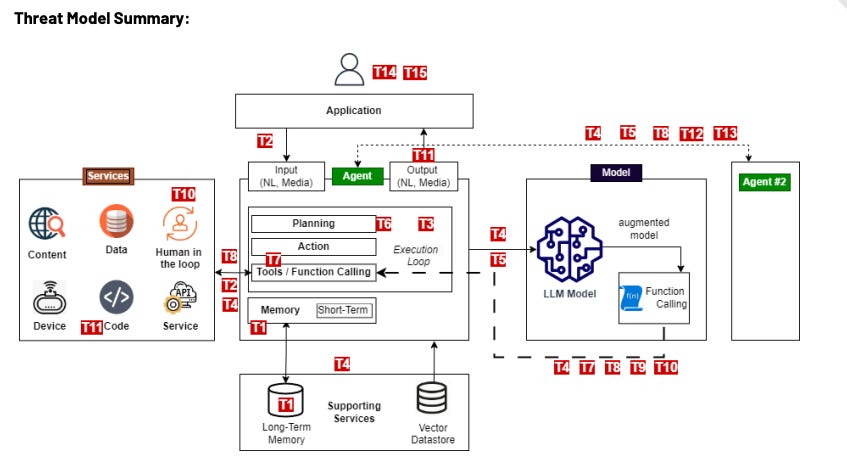

While Vanta’s State of Trust Report shows an uptick in those comfortable with agentic AI acting in an advisory or even autonomous capacity in some scenarios, this excitement needs to be weighed against the potential risks of Agentic AI, which are captured in resources such as OWASP’s Agentic AI Threats and Mitigations, with concerns in areas such as excessive autonomy, cascading risks, and goal manipulation.

Its reference threat models for multi-agent architectures demonstrate both the complexity and the potential risks of threats such as memory poisoning, tool misuse, untraceability, and identity-based attacks.

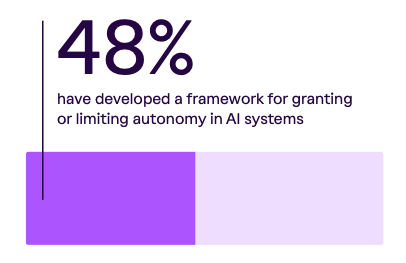

Governance is a key part of secure agentic adoption, and Vanta’s State of Trust Report highlighted an agentic governance gap: fewer than half of organizations have developed frameworks for granting or limiting autonomy in AI systems, despite Excessive Agency appearing in the OWASP Top 10 for LLM Applications.
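One way to picture such a framework is a runtime gate that maps each tool an agent can call to an autonomy tier. The following is a minimal sketch in Python, assuming three hypothetical tiers (autonomous, advisory, prohibited) and made-up tool names. It is not drawn from Vanta’s report or from OWASP guidance. It only illustrates one way to limit agency, with unknown tools denied by default.

```python
from enum import Enum


class Autonomy(Enum):
    """Hypothetical autonomy tiers an organization might grant an agent per tool."""
    AUTONOMOUS = "autonomous"   # agent may act without a human in the loop
    ADVISORY = "advisory"       # agent may propose; a human must approve
    PROHIBITED = "prohibited"   # agent may never invoke this tool


# Hypothetical policy: tool names and tiers are illustrative only.
POLICY = {
    "search_logs": Autonomy.AUTONOMOUS,
    "open_ticket": Autonomy.AUTONOMOUS,
    "disable_user": Autonomy.ADVISORY,
    "delete_data": Autonomy.PROHIBITED,
}


def authorize(tool: str, policy: dict = POLICY) -> str:
    """Return the action a runtime gate should take for a requested tool call.

    Tools missing from the policy default to PROHIBITED (deny by default),
    so gaps in the policy never silently become grants of autonomy.
    """
    level = policy.get(tool, Autonomy.PROHIBITED)
    if level is Autonomy.AUTONOMOUS:
        return "execute"
    if level is Autonomy.ADVISORY:
        return "require_human_approval"
    return "deny"


if __name__ == "__main__":
    for tool in ["search_logs", "disable_user", "delete_data", "wire_funds"]:
        print(f"{tool}: {authorize(tool)}")
```

Running it prints one decision per requested tool. `wire_funds` is denied because it does not appear in the policy at all. Defaulting to the most restrictive tier is the design choice that keeps an agent from gaining autonomy nobody explicitly granted.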

In last year’s State of Trust Report breakdown, I emphasized how trust, including cyber trust, is a business imperative that can either drive market expansion, partnerships, and growth or result in a tarnished reputation and shrinking opportunities for firms.

That theme remains strong in the 2025 report, with 82% of those surveyed stating they believe security and compliance enhance customer trust, a significant increase from last year. Moreover, more organizations are experiencing customers and stakeholders seeking evidence of security and compliance.

We often hear phrases such as “compliance doesn’t equal security” used in a derogatory manner, and admittedly, the criticism stems from how organizations approach compliance rather than from the concept itself.

Other phrases get thrown around as well, such as “security as a business enabler.” As this survey (and the one before it) shows, there are few better ways for security to enable the business, through growth and opportunity, than through effective and provable compliance and security postures.

Vanta’s report reiterates this point, highlighting how evidence of strong security and compliance can make or break deals and lead to revenue acceleration.

The problem?

Due to legacy Governance, Risk and Compliance (GRC) methodologies, most organizations spend more time proving security than actually becoming secure. This is particularly damning because it is an admission from our own community in the survey, and it aligns with the reputation that security and compliance have among our peers in development and across the business more broadly.

The discussion about GRC needing to evolve is slowly gaining traction through movements such as GRC Engineering, a topic I explored in detail with my friend AJ Yawn, author of GRC Engineering for AWS, and in an article of my own titled “GRC is Ripe for a Revolution: A look at why GRC lives in the dark ages and how it can be fixed”.

Vanta’s report puts security theater on full display, with organizations optimizing for optics over outcomes and teams now spending 12 weeks a year on manual compliance tasks. Much of this work could be modernized and automated if the GRC community leaned into concepts such as GRC Engineering and modern GRC platforms, including Vanta itself.
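To make the contrast with static documentation and snapshot-in-time assessments concrete, here is a minimal Python sketch of the compliance-as-code idea behind GRC Engineering. It assumes a hypothetical, hard-coded inventory standing in for data a cloud or SaaS API would return, plus two illustrative controls. It is not Vanta’s implementation or any platform’s actual API.

```python
from datetime import datetime, timezone

# Hypothetical inventory snapshot; in practice this would be pulled from an API.
INVENTORY = {
    "storage_buckets": [
        {"name": "customer-exports", "encrypted": True},
        {"name": "legacy-backups", "encrypted": False},
    ],
    "users": [
        {"name": "alice", "mfa_enabled": True},
        {"name": "bob", "mfa_enabled": False},
    ],
}

# Each control is (control id, resource type, predicate applied to each resource).
CONTROLS = [
    ("storage-encrypted-at-rest", "storage_buckets", lambda r: r["encrypted"]),
    ("user-mfa-enabled", "users", lambda r: r["mfa_enabled"]),
]


def evaluate(inventory, controls):
    """Evaluate every control against current state and return timestamped evidence."""
    checked_at = datetime.now(timezone.utc).isoformat()
    evidence = []
    for control_id, resource_type, predicate in controls:
        for resource in inventory.get(resource_type, []):
            evidence.append({
                "control": control_id,
                "resource": resource["name"],
                "passed": bool(predicate(resource)),
                "checked_at": checked_at,
            })
    return evidence


if __name__ == "__main__":
    for item in evaluate(INVENTORY, CONTROLS):
        status = "PASS" if item["passed"] else "FAIL"
        print(f"{status} {item['control']} {item['resource']}")
```

Because the checks run against current state and stamp each evaluation run with the time it was performed, they can be scheduled continuously. The result is evidence that reflects the real state of systems rather than a point-in-time attestation.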

We’re perpetuating the reputation of security theater ourselves, by refusing to modernize our tools and processes when it comes to GRC.

Another key theme last year was organizations grappling with third-party risk. That hasn’t changed, and its impact continues to grow: 67% of those surveyed say breaches tied to third parties were more common this year than ever before.

Much like with GRC, teams spend an absurd amount of time on manual vendor reviews: nine working weeks a year, an increase from the year prior.

Many are still clinging to antiquated processes such as security questionnaires and manual artifact review, even though Vanta and other industry innovators make it possible to automate up to 90% of the Vendor Risk Management (VRM) process.

The vendor breach numbers also conflict with, or perhaps reaffirm, organizations’ experience with these incidents. 80% of those surveyed were confident their vendors would disclose a breach, which is good, but disclosure doesn’t stop the damage. Most alarmingly, 56% of those surveyed experienced a vendor breach in the 6-12 months leading up to July 2025, an increase of almost 10% over the year prior.

I often rant that the state of cybersecurity won’t change until market forces, incentives, and/or regulatory requirements drive change. Luckily, it seems we may be headed in that direction in terms of market forces, as 57% of those surveyed report having terminated a vendor over security concerns.

This shows not only that security is indeed a business enabler and that trust is a proxy for revenue, but also that the market is starting to wake up to the risks of insecure vendors and is even taking steps to remove vendors it doesn’t trust from its supply chains.

AI

No discussion of cybersecurity in 2025 (or in the years to come) would be complete without touching on AI. Earlier in this article, we talked about AI’s dichotomy: its ability to enhance the capabilities of both attackers and defenders.

One theme that shone through in the State of Trust Report was the potential for automation and AI to relieve teams bogged down in traditional manual compliance processes and tools. Respondents cited saving hours in workflows such as incident response, access reviews, and log analysis with the help of AI and automation.

More broadly, we’re seeing organizations look to wield AI to bolster their security and resiliency against threats and attacks, with nearly 80% stating they are increasing AI usage in security programs.

These findings are genuinely promising. Year after year, we hear that security teams are understaffed, burnt out, and drowning in alerts, manual activities, and toil, much of which we have discussed throughout this article. IT and security budgets may also be stagnating, creating an even stronger need for automation and efficiency.

The survey highlights key areas where GRC practitioners and organizations are benefiting from AI in security and compliance.

This rapid adoption of an emerging technology also breaks an age-old paradigm in cybersecurity, where we tend to be laggards and late adopters of new technologies due to risk aversion and reluctance to change.

Thankfully, the cybersecurity community is waking up to the reality that the only way to be effective against modern threats and keep pace with the increasing demands in our field is to be early adopters and innovators, including when it comes to leveraging AI.

Closing Thoughts

The 2025 State of Trust Report from Vanta offers another great set of insights for our industry. Not only are security leaders and teams reporting that the risks are higher than ever, but the report also confirms what many in security have long been saying: trust is currency in our industry.

Customers and partners are waking up to this reality and even making changes to their supply chains, vendors, and partnerships based on any erosion of trust they perceive. This makes security and compliance, and the ability to demonstrate that trust to stakeholders, more important than ever.

Despite rapid adoption of AI across the ecosystem, AI governance is still lagging badly, and organizations need to catch up before AI-related incidents erode trust among key stakeholders. We continue to see teams toil away in manual security theater and performative box-checking. This is a clarion call for teams to adopt real GRC Engineering and modern GRC platforms that speak in APIs rather than static documentation, facilitate automation, and can help revolutionize long-stagnant GRC methodologies.

The convergence of GRC and engineering will usher in a new paradigm of trust for organizations willing to make the change.