Agentic AI's Intersection with Cybersecurity

Looking at one of the hottest trends in 2024-2025 and its potential implications for cybersecurity

As 2024 comes to a close, it is hard to deny that one of the year’s largest trends has been not just AI, GenAI, or LLMs, but “Agentic AI”, or AI Agents.

But what exactly is Agentic AI, why are so many focused on it, and what could its intersection and implications for Cybersecurity look like?

What is Agentic AI?

While there are varying definitions of Agentic AI, it commonly refers to using AI for iterative workflows where “Agents” can act autonomously to achieve specific goals for organizations with limited or even no human intervention.

This, of course, is a big shift from the most common current use of AI, which often involves LLMs and humans interacting with AI in the form of prompts and prompt engineering.

Prompt engineering is crafting instructions or “prompts” for AI and LLMs to generate specific outputs and insights based on the model’s capabilities and data. These are then often enhanced with methods such as Retrieval Augmented Generation (RAG), where LLMs are enhanced by accessing additional data sources, such as internal knowledge bases, external knowledge sources, and even the open Internet.
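To make the RAG idea concrete, here is a minimal sketch of the retrieve-then-augment step. Everything in it is an assumption for illustration: the knowledge base contents, the function names, and the word-overlap ranking, which stands in for the embedding similarity search a real system would more likely use. No LLM is called; the sketch stops at the augmented prompt.

```python
from collections import Counter

# Hypothetical in-memory knowledge base; a real system would query a document or vector store.
KNOWLEDGE_BASE = [
    "Password resets require manager approval and MFA verification.",
    "Production deployments are frozen during the last week of each quarter.",
    "Security incidents must be reported to the SOC within one hour.",
]


def tokenize(text: str) -> Counter:
    """Lowercase the text, strip basic punctuation, and count the words."""
    words = (w.strip(".,?!").lower() for w in text.split())
    return Counter(w for w in words if w)


def retrieve(question: str, documents: list[str], top_k: int = 1) -> list[str]:
    """Rank documents by word overlap with the question (a stand-in for embedding similarity)."""
    q = tokenize(question)
    scored = [(sum((q & tokenize(doc)).values()), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:top_k] if score > 0]


def build_prompt(question: str, documents: list[str]) -> str:
    """Augment the user's question with retrieved context before it is sent to a model."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


prompt = build_prompt("How fast must security incidents be reported?", KNOWLEDGE_BASE)
print(prompt)
```

The point of the sketch is the shape of the workflow: the model’s answer is grounded in data fetched at question time rather than only in what it learned during training.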

Agentic AI systems, on the other hand, will work with little to no human intervention, in a constant state of learning, analyzing, adapting, and responding to environmental context.

Gartner predicts that by 2028 nearly 1/3 of all interactions with GenAI services will “use action models and autonomous agents for task completion”.

For a deeper dive into Agentic AI, I recommend the blog “What is Agentic AI?” from NVIDIA.

Why is Agentic AI top of mind for so many?

So, what has Agentic AI top of mind for so many industry leaders, founders, startups, venture capitalists (VC), and more?

Let’s take a look at some of their perspectives and motivations.

When OpenAI released its o1 model, it introduced reasoning capabilities, where the model demonstrated the ability to stop and think before giving a response. This is referred to as “System 2 Thinking” in a blog from Sequoia, which we will discuss more in a moment. As they point out, System 2 Thinking shifts from quickly providing outputs based on model training data or enrichment to considering potential outcomes and making decisions based on reasoning.

This blog from Sequoia Capital is where I first heard a phrase that is very important for the context of this article: “Services-as-a-Software”. It describes the transition from traditional Software-as-a-Service (SaaS) models to services-based agentic models, where agentic reasoning can turn labor into software.

You may be initially thinking, so what? What does this have to do with me, or cybersecurity, or my job?

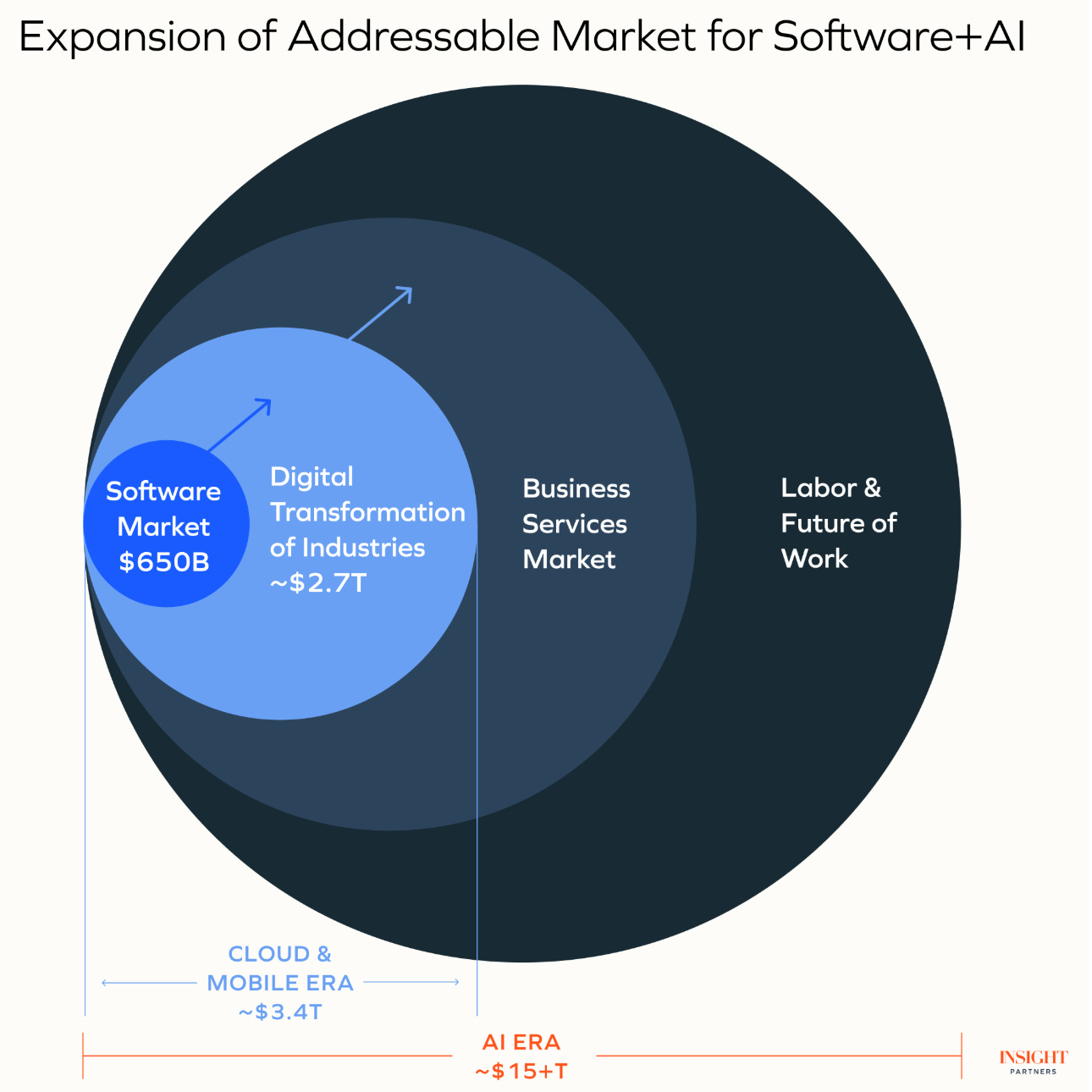

Well, the “So What”, as depicted by Sequoia, is that SaaS markets are measured in billions, while labor/services markets are measured in the trillions. This means the Total Addressable Market (TAM), as discussed by investors, is massive, far bigger than SaaS, which has already produced many unicorns, massive exits, tons of M&A, and disrupted legacy vendors and market dynamics.

A TAM of this size inevitably warrants a lot of attention, and that is exactly what it is getting.

In fact, Konstantine Buhler, Partner at Sequoia Capital, just spoke with Bloomberg Technology this week and discussed “Why 2025 Will Be the Year of AI Agents”.

It isn’t just Sequoia that is interested in Agentic AI, though. Private Equity firm Insight Partners, which is reported to have over $80 billion in regulatory assets under management, is also strongly eyeing this disruptive trend.

In a recent blog post titled “Reimagination of everything: How intelligence-first design and the Next Stack will unlock human+AI collaborative reasoning”, Insight makes a claim similar to Sequoia’s: that the intersection of Software, AI and Services/Labor represents a massive expansion in the TAM, to the tune of potentially tens of trillions.

Both Sequoia and Insight make heavy investments in Cybersecurity startups and innovators, and they’re just a couple among many VC and PE firms playing in the Cybersecurity space.

Others are taking a similar stance as well. Take Menlo Ventures, for example, whose portfolio boasts cyber companies such as Abnormal, Bitsight, Obsidian and others.

Menlo Ventures recently published their “2024 State of Generative AI in the Enterprise” report, and it makes claims similar to those of Sequoia and Insight.

The report stated that 2024’s biggest breakthrough was agentic architecture, which went from 0% of primary architectural approaches in 2023 to 12% in 2024.

In the same report, Menlo predicts “Agents will drive the next wave of transformation”, tackling complex multi-step tasks and moving beyond our current landscape of information generation and human interaction with models and LLMs.

And, much like Sequoia, Insight, and others, they state “advanced agents could disrupt the $400 billion software market and eat into the $10 trillion U.S. services economy”.

Earlier this year I shared a talk from Chenxi Wang, who is not only a Managing Partner at Rain Capital, an investment firm, but also a board director, security practitioner, leader and PhD in CompSci.

It was her talk from BSidesSF 2024, titled “Navigating the AI Frontier: Investing in AI in the Evolving Cyber Landscape”, in which she discusses AI for security and the massive opportunity it presents. She demonstrated that nearly half of global cybersecurity spend (>$100B) goes to professional services, and that there is a massive opportunity to automate traditionally manual cyber services activity and labor through AI, specifically agentic AI.

While you may be thinking, well that’s just the investors, I would recommend you take a step back as a cybersecurity practitioner and leader and realize that these investors play a significant role in the cybersecurity ecosystem, more than most realize.

Those products, tools, platforms, and services we all use to facilitate cybersecurity programs and the People, Process and Technology paradigm?

They fund that.

They fund the founders that start the companies, the startups that iterate and innovate on new security ideas, technologies and more.

This capital is what takes us from legacy on-premises mainframes to Cloud-native services, automation, microservices, DevSecOps, security tooling throughout the SDLC (from source to pipelines to runtime), vulnerability management, and the list goes on and on. The categories (and acronyms) of security tooling are extensive and only growing more complex with tool sprawl, but that’s a discussion for another day.

It also isn’t just investors who are excited about Agentic AI, but countless other firms and organizations as well. PwC, one of the world’s largest consulting firms, just published a paper titled “Agentic AI - the new frontier in Gen AI: An executive playbook” (which is worth the read). Similar headlines and predictions around agentic AI can be found from Forbes, NVIDIA, and many others.

Agentic AI has big implications not just for products but for services too, meaning the actual work that cybersecurity practitioners do. It has implications for the products we will use to be more effective at securing organizations, and it brings its own unique security considerations that must be accounted for as our organizations adopt these new technologies, products and workflows.

We will discuss each of those a bit next.

Potential Intersection with and Implications for Cybersecurity

What makes Cybersecurity such a compelling space for AI?

Well, there are many reasons. They include a never-ending dialogue around cybersecurity workforce shortages and skills gaps, an innovative and relentless collection of malicious actors, the monetization of cybercrime to the tune of billions, and the manual, resource-intensive nature of cybersecurity, to name a few.

Now that we’ve covered at a high level what Agentic AI is, and why it matters to so many, let’s discuss how its evolution may intersect with the Cybersecurity industry.

First, I find it helpful to think of AI from two perspectives:

Using AI to improve security outcomes

Securing the use of AI itself

Let’s discuss each a bit.

While the potential for Agentic AI, and AI more broadly, to aid in cybersecurity is massive, a few areas are getting quite a bit of attention early, including AppSec, GRC and SecOps.

Agentic AI and AppSec

Agentic AI’s intersection with AppSec can be thought of in the context of agents that are able to take actions, make decisions and proactively go about detecting and responding to threats related to applications, the world where AppSec practitioners live.

Apps are the primary customer/consumer-facing mechanism through which the digital economy operates. However, the world of AppSec has grown incredibly complex in the past decade.

This is due to various trends, such as Cloud, Mobile, SaaS, APIs/Microservices and DevSecOps, as well as a push for “shifting security left”: moving security earlier in the SDLC to mitigate risks before they reach production, and integrating security into developer workflows such as CI/CD pipelines.

The challenge, of course, is twofold. The first is that legacy AppSec tools lack context. They rely on legacy methods such as Common Vulnerability Scoring System (CVSS) scores and fail to account for known exploitation, exploitation probability, reachability, compensating controls, the business criticality of assets and data sensitivity. This buries teams in noise and toil, leading to resentment and frustration from Development teams and impacting developer and feature velocity.
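As a rough illustration of what context-aware prioritization could look like, here is a minimal sketch that blends a CVSS score with the contextual factors listed above. The field names, the weights, and the placeholder identifiers (`CVE-A`, `CVE-B`, `CVE-C`) are all assumptions made up for this example, not a standard scoring formula or any vendor’s method.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    cve_id: str                  # placeholder identifiers, not real CVEs
    cvss: float                  # base severity, 0.0 to 10.0
    exploit_probability: float   # an EPSS-style likelihood, 0.0 to 1.0
    known_exploited: bool        # e.g. appears in a known-exploited catalog
    reachable: bool              # is the vulnerable code path actually reachable?
    compensating_control: bool   # e.g. a WAF rule or network segmentation
    asset_criticality: float     # business weight, 0.0 (low) to 1.0 (critical)


def risk_score(f: Finding) -> float:
    """Blend severity with context. The weights here are illustrative assumptions."""
    if not f.reachable:
        return 0.0  # unreachable code is deprioritized entirely in this sketch
    likelihood = 1.0 if f.known_exploited else f.exploit_probability
    score = (f.cvss / 10.0) * likelihood * (0.5 + 0.5 * f.asset_criticality)
    if f.compensating_control:
        score *= 0.5  # halve the score when a mitigating control is in place
    return round(score, 3)


findings = [
    Finding("CVE-A", 9.8, 0.02, False, False, False, 0.9),
    Finding("CVE-B", 7.5, 0.10, True, True, False, 1.0),
    Finding("CVE-C", 8.8, 0.40, False, True, True, 0.5),
]

ranked = sorted(findings, key=risk_score, reverse=True)
for f in ranked:
    print(f.cve_id, f.cvss, risk_score(f))
```

In this made-up data set, the finding with the highest CVSS score lands at the bottom of the list because it is unreachable, while a lower-scored but actively exploited finding on a critical asset rises to the top. That reordering is the context legacy tooling tends to miss.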

The second is the nature of vulnerability management itself. Through factors such as malicious actors targeting applications, increased vulnerability research, discovery and reporting, and an overall expansion of the digital attack surface, we now have runaway vulnerability rates that outpace organizations’ ability to mitigate them.

As discussed recently by vulnerability researcher Jerry Gamblin, 2024 is approaching 37,000 Common Vulnerabilities and Exposures (CVEs), which represents over 100 a day and nearly 40% YoY growth from 2023. Just keeping pace, let alone getting ahead, would require organizations to have gotten 40% more efficient at vulnerability management in the last 12 months, which we all know isn’t happening. That is why vulnerability backlogs have ballooned into the hundreds of thousands, or even millions, for large complex enterprises.
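The arithmetic behind those figures is easy to check. The sketch below uses only the rounded numbers cited above, so the implied 2023 total is a back-of-the-envelope estimate rather than a reported count.

```python
cves_2024 = 37_000   # approximate 2024 total cited above
yoy_growth = 0.40    # "nearly 40%" year-over-year growth cited above

per_day = cves_2024 / 365
implied_2023 = cves_2024 / (1 + yoy_growth)

print(f"{per_day:.0f} CVEs per day")                   # 101 CVEs per day
print(f"~{implied_2023:,.0f} CVEs implied for 2023")   # ~26,429 CVEs implied for 2023
```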

Organizations such as Cyentia have demonstrated that teams can typically remediate only 1 out of 10 new vulnerabilities per month, leading to this exponential pileup of vulnerabilities across the attack surface.

Not only are teams drowning in vulnerabilities, leading to mounting vulnerability backlogs, but we’re also losing the race between our ability to remediate vulnerabilities, measured as mean time to remediate (MTTR), and malicious actors’ ability to weaponize exploits, as shown by vendor Qualys.

Agentic AI introduces the opportunity for activities such as proactively identifying risks in your applications and code bases, conducting dynamic testing to emulate malicious activities and attack patterns, and even potentially remediating the identified risks and vulnerabilities autonomously.

We’re also seeing a rise in automated Pen Testing, which takes traditional Pen Testing activities that are manual, time-intensive and dependent on specialized labor and automates them, allowing them to recur at much shorter intervals. It even opens the window to chaining agents together, with some testing and discovering vulnerabilities and others remediating the findings or triaging and prioritizing them for human review.

These of course aren’t all of the potential use cases for Agentic AI in AppSec, but you can see the potential pretty quickly, given the abysmal state of AppSec for most organizations right now.

Agentic AI and GRC

Another area ripe for disruption by Agentic AI within Cybersecurity is Governance, Risk and Compliance (GRC). I have written and spoken extensively about the pains of legacy GRC and how it is still living in the dark ages. While software development has moved on to DevOps/DevSecOps, Cloud, APIs and Automation, GRC still lives in static documents, cumbersome processes, worthless questionnaires and generally manual toil.

It isn’t that GRC isn’t valuable, but the way it is conducted in a tactical sense just hasn’t kept pace with the technological landscape and waves of innovation we’ve seen in the past 10+ years. In fact, in a recent piece, I argue that Compliance is Security, and often serves as a business enabler. That doesn’t mean the practice of GRC can’t evolve, though, and Agentic AI can be a key enabler of that evolution.

In a recent episode of the GRC Engineering Podcast, Shruti Gupta discusses this potential, including automating months-long manual processes, streamlining typically cumbersome, labor-heavy activities and improving security outcomes as a result of GRC modernization via AI and AI Agents.

We continue to see the evolution of the GRC space, including increased regulation, CISO/organizational liability, new compliance frameworks (especially in the EU markets), and compliance opening (or keeping closed) doors to new customers and market growth for startups and incumbents alike.

Automating repetitive tasks, streamlining lengthy compliance documentation and reviews, rationalizing duplicative frameworks, and automating control validation, attestations and continuous monitoring and reporting are but a few of the ways Agentic AI can and likely will have an impact on GRC.
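To give a feel for what automated control validation might involve, here is a minimal sketch that evaluates collected evidence against machine-checkable controls instead of a static questionnaire. The control IDs, the evidence keys and the thresholds are hypothetical and do not come from any real compliance framework. In an agentic workflow, the evidence collection itself is the step an agent would take over.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical configuration snapshot an agent might gather from cloud and identity APIs.
evidence = {
    "mfa_enforced": True,
    "storage_encrypted": True,
    "log_retention_days": 30,
}


@dataclass
class Control:
    control_id: str                 # illustrative IDs, not from a real framework
    description: str
    check: Callable[[dict], bool]   # returns True when the evidence satisfies the control


CONTROLS = [
    Control("CTRL-MFA", "MFA is enforced for all users",
            lambda e: e.get("mfa_enforced") is True),
    Control("CTRL-ENC", "Data at rest is encrypted",
            lambda e: e.get("storage_encrypted") is True),
    Control("CTRL-LOG", "Logs are retained for at least 90 days",
            lambda e: e.get("log_retention_days", 0) >= 90),
]


def validate(controls: list[Control], collected: dict) -> dict[str, str]:
    """Evaluate every control against the collected evidence."""
    return {c.control_id: ("pass" if c.check(collected) else "fail") for c in controls}


report = validate(CONTROLS, evidence)
for control_id, status in report.items():
    print(control_id, status)
```

Run on a schedule, a check like this turns a point-in-time attestation into something closer to continuous monitoring, with failures (here, the log retention control) surfaced as soon as the evidence changes.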

Agentic AI and SecOps

Arguably one of the areas that has gotten the most attention related to AI and Agentic AI is Security Operations (SecOps). This is due to various factors, such as the complex and never-ending nature of SecOps, with its various tiers of analysts, alerts, reporting, incident response, false positives and the 24/7/365 nature of this specialty within the broader Cybersecurity career field.

It involves a TON of data, alerts, notifications, manual analysis, complex workflows and interactions across enterprise security systems, log sources, teams and more. This field generally suffers from severe burnout, often functioning as a stepping stone for practitioners before moving into other areas of Cybersecurity.

Scale Ventures has a great article titled “AI SOC Analysts: LLMs find a home in the security org” where they cover aspects that contribute to SecOps challenges and the potential for AI to make an impact.

The potential for AI agents in SecOps is tremendous, spanning phishing, malware, credential compromise, lateral movement, incident response, and much more. AI Agents could create, or even act on, previously curated incident response playbooks and plans to identify and gather relevant artifacts, enrich incoming alerts, utilize threat intelligence, identify impacted systems, isolate/triage those systems, remediate relevant vulnerabilities and ultimately report on the full timeline and details of the incident as well as the remedial actions taken.
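A heavily simplified sketch of a few of those playbook steps (enrich, identify impacted systems, isolate, report a timeline) might look like the following. The function names, the threat-intel set and the approval callback are all hypothetical. The IP addresses come from ranges reserved for documentation, and a real agent would call SIEM, EDR and threat-intelligence APIs rather than in-memory stubs.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Incident:
    alert: dict
    impacted_hosts: list[str] = field(default_factory=list)
    isolated_hosts: list[str] = field(default_factory=list)
    timeline: list[str] = field(default_factory=list)


# Hypothetical threat-intel data; the address is from a documentation-only range.
KNOWN_BAD_IPS = {"203.0.113.7"}


def enrich(incident: Incident) -> None:
    """Enrich the alert with a threat-intelligence verdict."""
    ip = incident.alert["source_ip"]
    incident.alert["malicious"] = ip in KNOWN_BAD_IPS
    incident.timeline.append(f"enriched alert: source {ip} malicious={incident.alert['malicious']}")


def identify_impacted(incident: Incident) -> None:
    """Record which systems the alert implicates."""
    if incident.alert["malicious"]:
        incident.impacted_hosts.append(incident.alert["host"])
    incident.timeline.append(f"impacted hosts: {incident.impacted_hosts}")


def isolate(incident: Incident, approve: Callable[[str], bool]) -> None:
    """Isolate impacted hosts, but only when the approval callback allows it."""
    for host in incident.impacted_hosts:
        if approve(host):
            incident.isolated_hosts.append(host)
            incident.timeline.append(f"isolated {host}")
        else:
            incident.timeline.append(f"isolation of {host} awaiting human review")


def run_playbook(alert: dict, approve: Callable[[str], bool]) -> Incident:
    """Run the steps in order and return the incident with its full timeline."""
    incident = Incident(alert=dict(alert))  # copy so the caller's alert is not mutated
    enrich(incident)
    identify_impacted(incident)
    isolate(incident, approve)
    return incident


incident = run_playbook(
    {"host": "web-01", "source_ip": "203.0.113.7"},
    approve=lambda host: host != "domain-controller",
)
print("\n".join(incident.timeline))
```

The `approve` callback is a deliberate design choice in this sketch: disruptive actions such as isolation pass through a gate where a human, or a stricter policy, can say no.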

Filip Stojkovski and Dylan Williams articulated what this may look like in an excellent article titled “Blueprint for AI Agents in Cybersecurity: Leveraging AI Agents to Evolve Cybersecurity Practices”.

As their visualization shows, a model could be constructed that involves multiple AI agents, each functioning in a different capacity, such as triage, threat hunting, and response. These agents would leverage insights and data from SOC/SIEMs and perform activities from alert grouping and enrichment all the way through remediation and security control implementation, while continuously feeding into activities such as threat intelligence and detection engineering.

They also lay out potential agentic design patterns, such as reflection, planning, tool use and multi-agent, the latter of which would be the most likely for workflows involving multiple steps, activities and outcomes in complex enterprise environments to enable superior SecOps outcomes.

Security Concerns for Agentic AI

While the potential for Agentic AI in various areas of cybersecurity is impressive and promising, it isn’t without its concerns either.

Hopefully it goes without saying that all of these use cases discussed for defenders are equally available to malicious actors as well, to improve, optimize and maximize their nefarious activities and impact on organizations in countless ways.

These new agentic, multi-agentic and autonomous workflows will usher in their own new risks, vulnerabilities, threats and considerations for organizations that implement them, the data they are exposed to, and the systems they interact with.

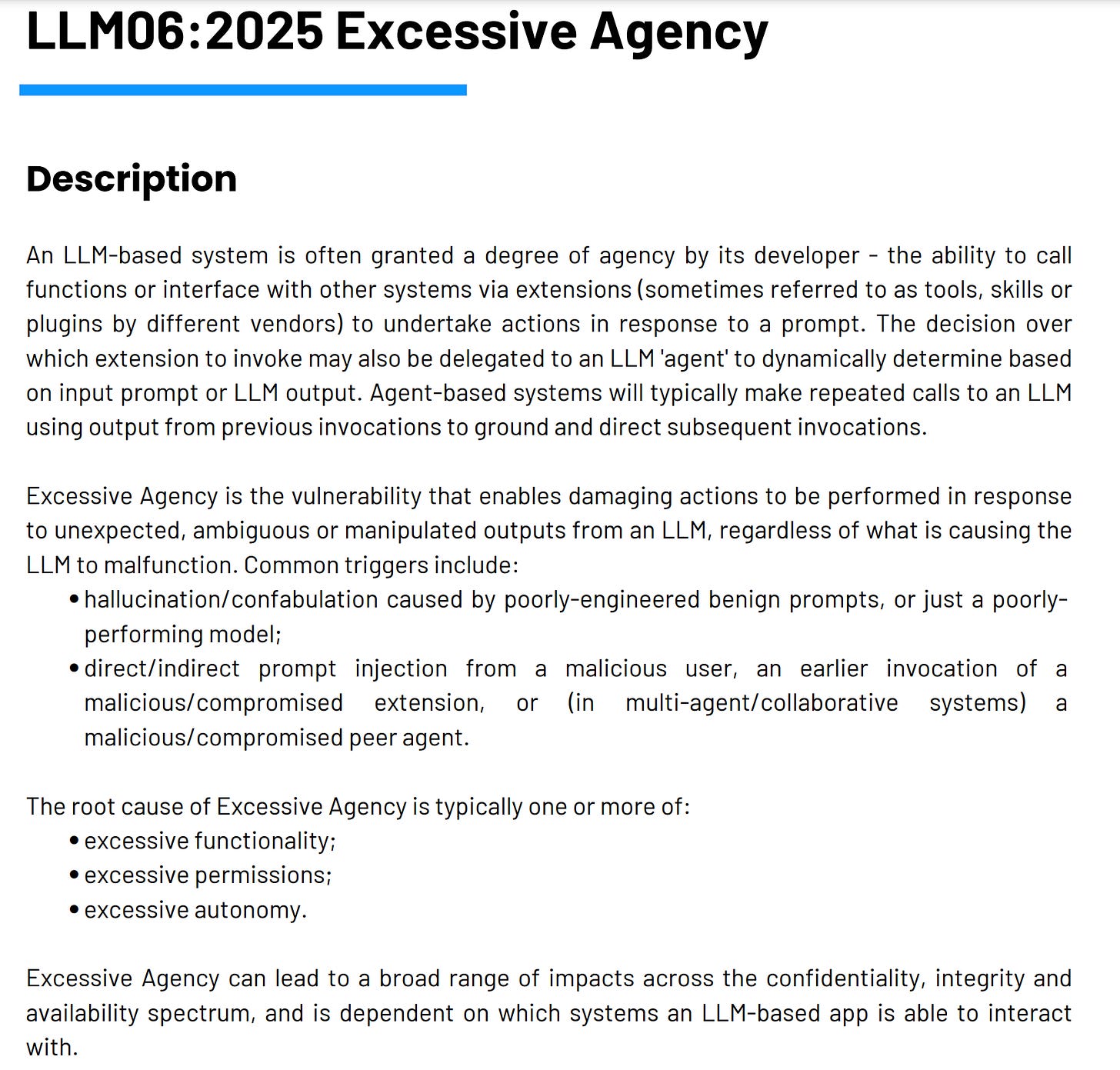

Sources such as the OWASP LLM Top 10 call this out specifically with LLM06:2025 “Excessive Agency”.

As we start to fantasize about the potential for autonomous agents and workflows, we ultimately need to take a step back and ask what can go wrong and what risks warrant consideration as well.

For example, each agent may need credentials and may need to participate in authentication and authorization to carry out its activities in digital environments. In a previous article I published titled “What Are Non-Human Identities and Why Do They Matter”, I discussed how organizations have found that for every 1,000 human users they have 10,000 non-human connections/credentials, or even 10-50x the number of human identities in the organization. Now imagine this scenario underpinned by AI Agents and Agentic architectures, with credentials tied to these various agents and the potential for credential compromise, which still reigns supreme as the leading cause of data breaches according to sources such as the Verizon Data Breach Investigations Report (DBIR).

In a follow-up article I published titled “The State of Non-Human Identity (NHI) Security”, I discussed a report from the Cloud Security Alliance (CSA) that found most organizations surveyed are already struggling with fundamental non-human identity security practices, such as handling service accounts, API keys, access tokens and secrets.

OWASP’s LLM Top 10 discusses various risks associated with excessive agency, such as excessive functionality, permissions and overall autonomy. Historically we’ve done a terrible job managing permissions, credentials and overall access control, and if we plan to unleash countless autonomous agents on enterprises, it warrants us asking, do we think we will suddenly get better at these fundamentals?
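One way to picture guardrails against excessive functionality and autonomy is a per-agent policy that allowlists tools and requires human sign-off for sensitive ones. The sketch below is a toy illustration under assumed names (`AgentPolicy`, `call_tool`, the three stub tools). It is not taken from OWASP guidance or any agent framework, and a real deployment would also need scoped credentials and audit logging behind each tool.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class AgentPolicy:
    name: str
    allowed_tools: set[str]                                  # limits functionality
    needs_approval: set[str] = field(default_factory=set)    # limits autonomy


class ToolDenied(Exception):
    """Raised when an agent attempts a call outside its policy."""


# Hypothetical stub tools; real ones would wrap APIs using narrowly scoped credentials.
TOOLS = {
    "read_ticket": lambda ticket_id: f"contents of {ticket_id}",
    "close_ticket": lambda ticket_id: f"{ticket_id} closed",
    "delete_repo": lambda name: f"{name} deleted",
}


def call_tool(policy: AgentPolicy, tool: str, arg: str, approved_by: Optional[str] = None) -> str:
    """Execute a tool only if the agent's policy permits it."""
    if tool not in policy.allowed_tools:
        raise ToolDenied(f"{policy.name} may not call {tool}")
    if tool in policy.needs_approval and approved_by is None:
        raise ToolDenied(f"{tool} requires human approval")
    return TOOLS[tool](arg)


triage_agent = AgentPolicy(
    name="triage-agent",
    allowed_tools={"read_ticket", "close_ticket"},
    needs_approval={"close_ticket"},
)

print(call_tool(triage_agent, "read_ticket", "T-1"))
print(call_tool(triage_agent, "close_ticket", "T-1", approved_by="analyst"))
```

The default here is deny: the triage agent can never reach `delete_repo`, no matter what a prompt or upstream agent asks of it, because the tool is simply not in its policy.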

Perhaps.

Perhaps Agentic AI enables us to address these longstanding issues, or perhaps Agentic AI exacerbates already longstanding challenges in cybersecurity while concurrently opening promising new opportunities as we discussed in areas such as AppSec, GRC and SecOps.

Time will tell, but one thing is for sure: everyone seems excited about the potential for Agentic AI, and 2025 is poised to continue this trend.

There are exciting times ahead and I am eager to continue to learn and stay on top of these trends and see how we embrace and govern these incredible technologies moving forward to capture the value they can provide while also mitigating their risks.
