AI - Incentives, Economics, Technology and National Security

A look at the recently unveiled U.S. AI Action Plan and its implications for the race for AI dominance

There’s absolutely no denying that AI dominates the discussion these days, not just in technology but in venture capital, along with broader discussions around economics, the workforce, and even national security.

The current presidential administration has put positioning the U.S. as a dominant force in AI at the forefront of its policy objectives and recently unveiled the U.S. AI Action Plan. I will dive into the plan throughout this article, looking at it from the angles of economics, national security and, of course, cybersecurity.

High Level

At a high level, the AI Action Plan covers three core pillars:

Pillar I - Accelerate AI Innovation

Pillar II - Build American AI Infrastructure

Pillar III - Lead in International AI Diplomacy and Security

Each pillar covers different aspects of the U.S.’s overall AI aspirations and key pathways to enabling U.S. dominance in AI through various means.

Chamath Palihapitiya and the crew at All-In recently helped facilitate a “Winning the AI Race” event, featuring speakers across Government and industry, including comments from President Trump himself on the AI Action Plan.

I recommend listening to not just President Trump’s comments but the other parts of the series too, as they provide insights from founders, investors, senior Government leadership, policy folks and more. Speakers include leadership from NVIDIA and AMD, Vice President J.D. Vance and the Treasury Secretary, among others.

The introduction of the AI Action plan discusses the U.S.’s ambitions around achieving and sustaining AI dominance. This is primarily of course a race between the US and China, as the nations pursue global AI leadership.

I recently shared an excellent deep dive from Contrary Research on this topic, titled “AI Progress: The Battlefield of Cold War 2.0”. It discusses the distinct approaches between the two nations, with the US approach being more capital markets-driven and compute rich, whereas China’s approach is more state-led ambition, along with its accompanying hurdles.

The White House previously issued EO 14179 “Removing Barriers to American Leadership in AI”, which laid the groundwork for the action plan we will discuss.

Many of these topics and more were covered during the All-In AI Race event from the various speakers and perspectives.

Pillars and specifics aside, it is clear not just in the action plan but from the current presidential administration in general that they believe AI is absolutely critical to US geopolitical interests, economic prosperity and national security.

Before going deeper, the action plan does call out specific principles that it says cut across all three pillars, which I think are worth highlighting:

The importance of American workers and the need to ensure American workers and their families benefit from the opportunities of the AI revolution

The need to ensure American AI systems are free from ideological bias and designed to pursue objective truth over social engineering

Preventing advanced technologies from being misused or stolen by malicious actors

Pillar I: Accelerate AI Innovation

As the name implies, this pillar is all about acceleration and calls out specific phrases such as “removing red tape and onerous regulation”. In my mind, this draws a stark contrast between the drastically different approaches the US and EU are taking when it comes to AI regulation.

In the US, we see a clear callout and emphasis on removing regulation to accelerate innovation, whereas in the EU we see a push to become a regulatory superpower and an emphasis on safety and security (even if misperceived) over enabling commercial innovation and speed.

In fact, the EU Commission recently stated there will be “no pause” when it comes to the rollout and timelines of the EU AI Act, despite pleas from 46 of the EU’s largest companies to pause its implementation.

Nowhere was this distinction between the US and EU on AI more evident than in Vice President JD Vance’s comments at the Paris AI Summit, where he railed against topics such as excessive AI regulation:

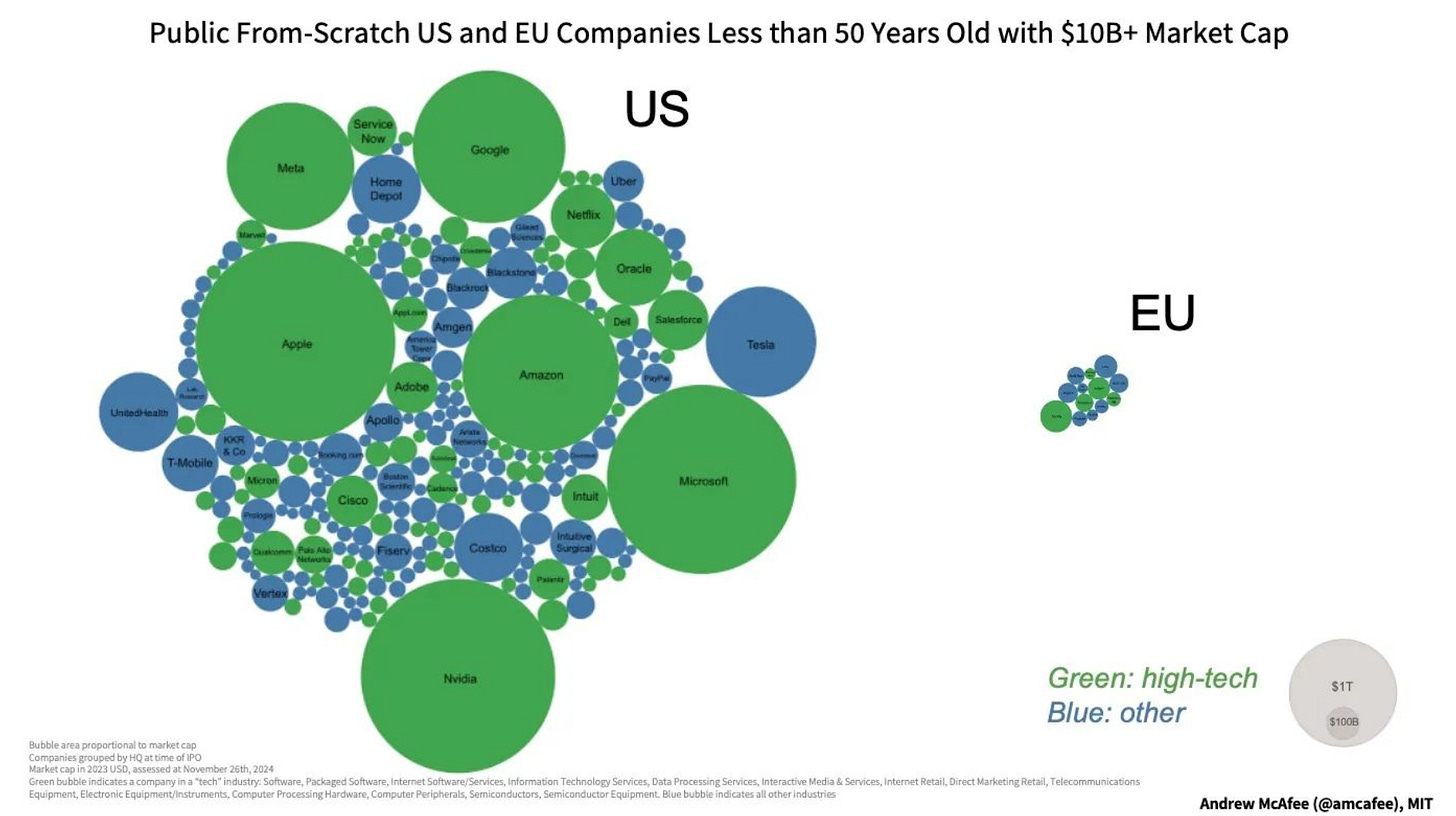

While not directly related to AI, diagrams such as the ones below highlight just how massive the difference is between the US and EU when it comes to markets, capital and technology (note the outsized presence of technology-centric companies on the US side of the first diagram).

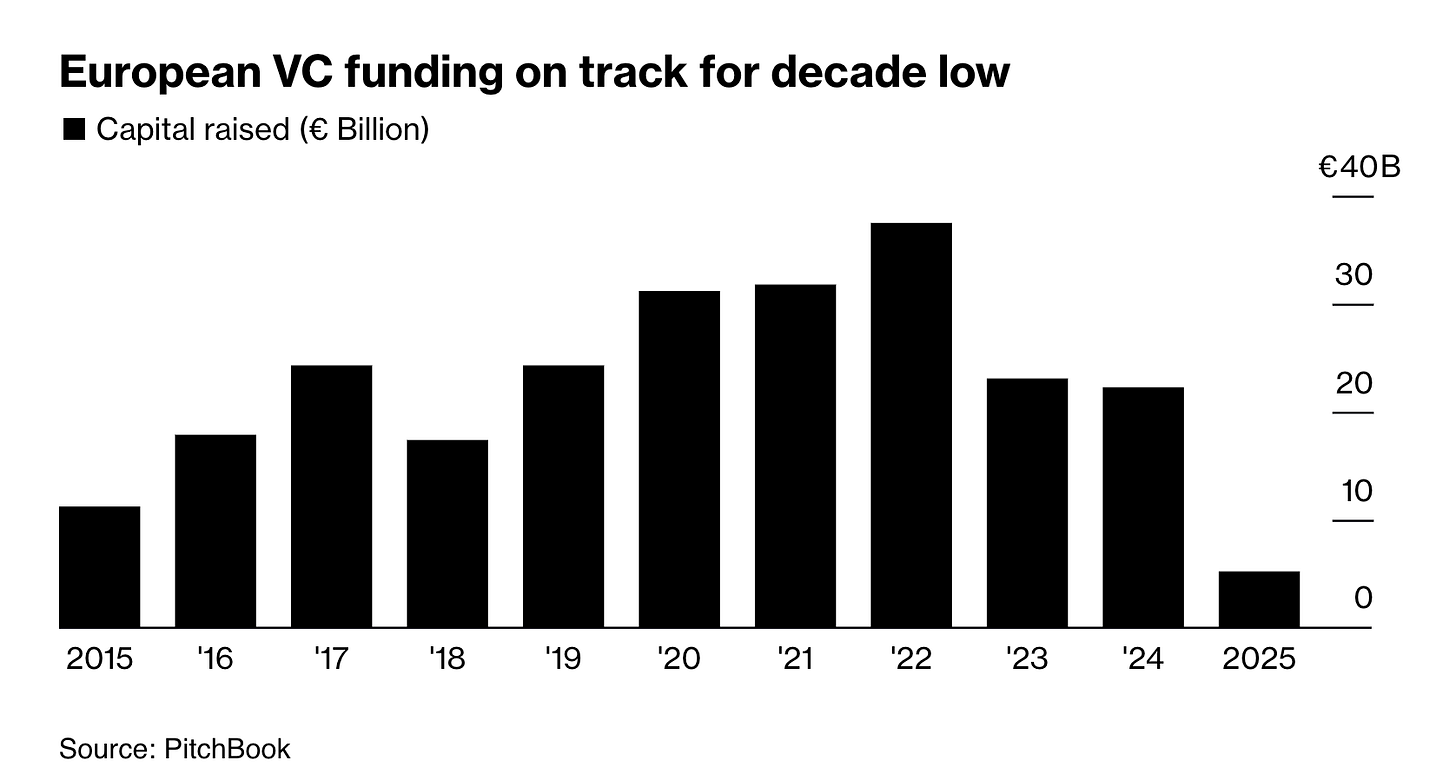

Furthering this trajectory is news that Europe’s VCs are on pace for the lowest fundraising year in a decade:

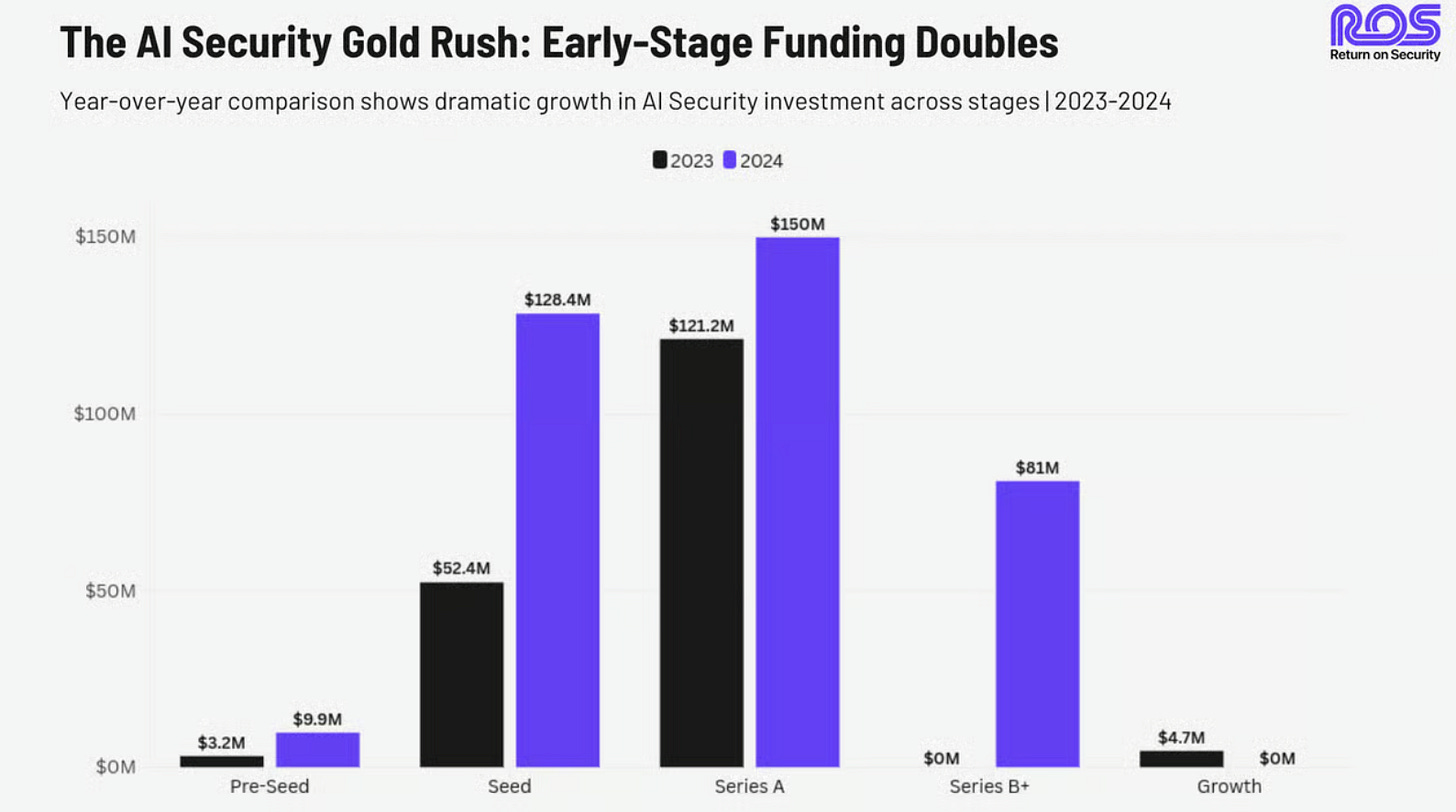

This is much different than the U.S., which accounted for 57% of total worldwide deal value in 2024, at an estimated $215.4 billion. Bringing it to AI and Cyber in particular, AI dominated security investments across stages in 2024, as laid out by my friend Mike Privette of Return on Security.

Going back to the US AI Action plan, it lays out key policy actions to reduce red tape, such as:

Remove current Federal regulations that hinder AI innovation and adoption

Work with Federal agencies to identify, revise and repeal regulations that hinder AI development or deployment

Consider a state’s AI regulatory climate when making Federal funding decisions (more on this topic later)

There’s also an emphasis on ensuring Frontier AI protects free speech and American values, which includes revising the NIST AI RMF to eliminate references to DEI, Misinformation and Climate Change.

Encouraging Open-Source and Open-Weight AI

This section of the first pillar I found particularly interesting, for reasons I will discuss below. The section emphasizes the importance of open source/weight models when it comes to not being dependent on a provider, and also allowing organizations with sensitive data to use models without needing to send their data to the provider for example.

This speaks to platforms such as HuggingFace and the rise of open source/weight AI models, allowing transparency and visibility that isn’t freely available with commercial AI models and providers. That said, there are risks with open source/weight models too, much like open source software itself, given how expansive and complex the ecosystem is.

This is a nuanced topic, because similar arguments were made about Cloud in the early days, yet the US Department of Defense and Intelligence Community now routinely run sensitive workloads in hyperscale cloud environments. They do so with proper measures, such as encryption, to protect data from being disclosed to the providers without authorization, building on compliance programs such as FedRAMP and the DoD Cloud Security Requirements Guide (SRG) and its Impact Levels for different classifications and data sensitivities.

What makes this section interesting too is that the DoD recently awarded contracts to leading proprietary commercial model providers, such as OpenAI, Google, Anthropic and xAI, up to $200M each, to scale up adoption of advanced AI capabilities in the DoD. These of course are commercial model providers that don’t necessarily have the same level of transparency as open-source/weight models that the AI Action Plan emphasizes.

I recently sat down with Daniel Bardenstein to dive into the complexities of the AI supply chain, including discussing the differences between commercial and open source models when it comes to code and weights.

All this said, this section of the AI Action plan does emphasize the importance of open source/weight models, and this includes the rise of platforms such as HuggingFace.

From a policy perspective, the AI Action Plan includes actions such as improving access to compute for startups and academics, driving adoption of open source/weight models by SMBs and more.

Enabling AI Adoption

An interesting aspect of the AI Action plan is that it doesn’t just take aim at the Federal landscape, but commercial industry too, with a section focused on accelerating adoption of AI among established large enterprise organizations.

On one hand, this can be seen as the Federal government looking to accelerate US adoption of a transformative technology, given how pivotal the administration views it to economic and national security. On the other, I could see some making the point that this is looking to drive spending on and consumption of technologies from companies, some of which have close relationships with the current administration or are financially backed by venture capitalists who do.

To help accelerate adoption, the AI Action Plan has policy actions such as establishing AI Centers of Excellence (AI CoEs), launching domain-specific efforts to measure and demonstrate the productivity boost of AI, and using the DoD, IC and ODNI to measure how US adoption compares with that of competitor nations.

Improving the Understanding, Control and Robustness of AI and LLMs

The Action Plan also specifically calls out the current challenges in understanding how frontier AI models work. It is well known that we currently do not thoroughly understand why a model produces a specific output, for example. This is problematic for many use cases, including cybersecurity, especially when it comes to adoption in industries such as Defense, National Security and Intelligence.

The Action Plan calls on DARPA in collaboration with others to spearhead research to understand the interpretability and robustness of AI and frontier models as well as building out an AI evaluation ecosystem, to help measure AI reliability and performance, which is also critical as we look to weave these technologies into everything from consumer goods to critical infrastructure and defense systems.

Increased Adoption Throughout Federal and Defense Agencies

This administration has already made it clear how critical they view AI, not just for commercial purposes but also Federal agencies, civilian services and national security. I mentioned earlier the multi-million dollar contracts being awarded to the frontier model providers such as OpenAI, Anthropic, Google and others and the Action Plan highlights the need to accelerate adoption of AI in Government and the DoD.

This includes taking aim at manual internal processes and improving citizen and national security outcomes. Anyone who has been around Federal/DoD cybersecurity knows it is rife with opportunities to streamline and accelerate, whether in currently manual and cumbersome compliance processes, enterprise SOC environments and threat hunting, vulnerability management or more.

The DoD’s IT and Cyber executives already have efforts underway to leverage AI to transform existing manual cumbersome compliance processes, such as the DoD Software Fast Track (SWFT) initiative, which I covered in the article “Buckle Up for the DoD’s Software Fast Track ATO (SWFT).”

These use cases have commercial equivalents and this is why we’ve seen a wave of AI security startups looking to tackle systemic cybersecurity issues through AI-native capabilities and products.

I’ve covered the intersection of AI and Cyber extensively in previous pieces.

Pillar II: Build American AI Infrastructure

While topics such as the potential value and ramifications of AI adoption across society are exciting, one truth is fundamental: none of it can run without sufficient underlying infrastructure.

While estimates vary, studies project that U.S. data centers already consume more than 4% of the nation’s total electricity, and that share is set to rise to roughly 10% or more by 2030. Industry leaders such as Sam Altman of OpenAI and Andy Jassy of Amazon have each made public remarks about the energy constraints and the need for an “energy breakthrough” to power the future demands driven by AI growth and adoption.

Tying back to the “Winning the AI Race” event hosted by All-In, you can listen to this clip below which includes commentary from the Secretary of the Interior as well as the Energy Secretary each discussing the current and future energy demands of the U.S. tied to AI, and what steps need to be taken and how the Action Plan addresses those.

Not to make everything about China, but various speakers, including President Trump, commented on the pace at which China is expanding its energy capacity (often through coal) and how the U.S. is far behind China’s growth in terms of energy capabilities. That must change if we want to keep pace with AI, which has such strong energy demands.

This second pillar of the AI Action Plan covers key activities to facilitate the energy demands, such as:

Creating streamlined permitting for Data Centers, Semiconductor Manufacturing Facilities, and Energy Infrastructure

Developing a Grid to Match the Pace of AI Innovation

Restoring American Semiconductor Manufacturing

Building High-Security Data Centers for Military and Intelligence Community Usage

Training a skilled workforce for AI Infrastructure

While I won’t comment as much about the above areas, both due to being outside of my area of expertise and also not as relevant to cybersecurity, I was pleased to see a specific carve out focusing on my wheelhouse:

Bolster Critical Infrastructure Cybersecurity

Before diving into the specifics of the AI Action Plan related to Critical Infrastructure and Cyber, let’s recap what the last year or more has involved when it comes to the intersection of these two topics.

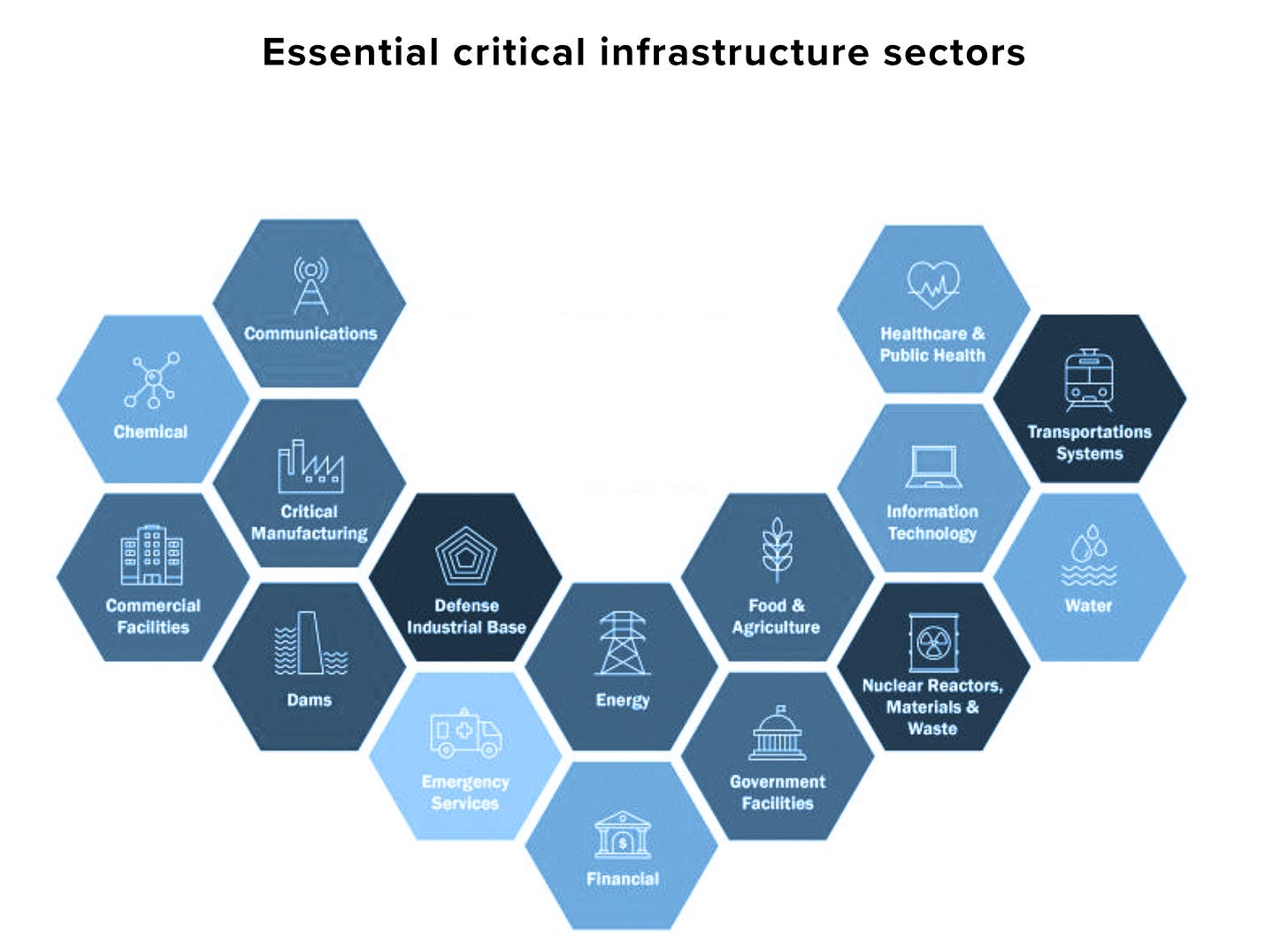

The Critical Infrastructure Sectors, as identified by the Department of Homeland Security (DHS) can be seen below.

All of these sectors actively deal with cyber threats of some sort and many have been impacted by security incidents. This includes:

Massive IP and sensitive data theft across the Defense Industrial Base (DIB), as nation states and hackers target the DoD’s “Soft Underbelly”

The largest U.S. Telecommunications hacks in history as recently as 2024

Foreign nations burrowing and embedding themselves in U.S. energy systems

The Action Plan acknowledges the capability and value of AI systems for coding and software engineering, and the potential for AI to enable cyber defensive tools and bolster organizational defenses in the face of advanced threats. This matters especially for organizations lacking financial resources and deep cyber expertise, which includes many organizations in the critical infrastructure sectors.

That said, as is the nature of AI and any technology, it is a double-edged sword, offering both opportunities and obstacles. Adversaries are actively using AI to improve their malicious activities, and the AI Action Plan acknowledges this. It calls for the use of AI in Critical Infrastructure to rely on Secure-by-Design, Robust and Resilient AI systems that can also detect performance issues, malicious activities, data poisoning and other AI-specific attack vectors.

Some of the recommended policy actions here include establishing AI-ISACs, having DHS issue and maintain guidance to sector entities on addressing AI-specific vulnerabilities and threats, and enabling information sharing and collaboration between public and private sector organizations about known AI vulnerabilities, leaning into existing cyber vulnerability sharing pathways.

Promote Secure-by-Design AI Technologies and Applications

The AI Action Plan also specifically calls out the promotion and use of Secure-by-Design AI Technologies and Applications. For those unfamiliar with the concept of Secure-by-Design, it has most recently been championed by CISA, and I have covered it extensively in previous pieces.

While I’m a big proponent of Secure-by-Design and the value it brings the ecosystem, I also openly acknowledge the challenges, most notably the competing prioritization it presents to businesses against other goals, such as speed to market and revenue, which, much to the dismay of security practitioners, will always take the driver’s seat over any security aspirations a business has.

AI will inevitably face this same dilemma. That said, to help promote Secure-by-Design AI Technologies and Applications, the recommended policy actions include refining the DoD’s Responsible AI and GenAI Frameworks, Roadmaps and Toolkits, and also publishing an AI Assurance IC Standard.

Promote Mature Federal Capacity for AI Incident Response

Like any other technology, no matter how great your defenses, incidents will inevitably occur, making incident response absolutely critical. The Federal government realizes this, and CISA, in collaboration with other agencies and private sector partners, held an inaugural tabletop exercise focused on AI security incidents. This was led by amazing security leaders such as Jen Easterly and others.

CISA, through its Joint Cyber Defense Collaborative (JCDC), even published the AI Tabletop Exercise scenario document, outlining the exercise purpose, objectives, scenarios and more. Private sector organizations can use all of this to inform their own AI incident response exercises and activities, and agencies can use it to inform future maturity around AI incident response.

The tabletop resource asks a great series of questions that can help organizations reflect on their AI incident response processes and capabilities, or lack thereof.

Policy actions to mature the Federal capacity for AI IR include ensuring NIST and others include AI in the establishment of standards, response frameworks and best practices. The plan also calls for CISA to update IR and vulnerability response playbooks to include considerations for AI systems, deeper collaboration between CISOs and Chief AI Officers, as well as responsible sharing of AI vulnerability information through DoD, DHS and the Office of the National Cyber Director (ONCD), among others.

Pillar III: Lead in International AI Diplomacy and Security

A big emphasis, and rightfully so, throughout commentary by President Trump and others as part of the Winning the AI Race event, was the importance of adoption of American AI systems, computing hardware and standards.

The most poignant example of this is China’s Digital Silk Road (DSR) and larger Belt and Road Initiative (BRI), which involves embedding Chinese technologies and infrastructure around the world, expanding China’s influence while also raising various concerns around cybersecurity and privacy.

The U.S. itself is currently going through major efforts to remove Chinese tech, such as Huawei and others, which is pervasive across many areas.

Efforts to exert this influence through digital technologies are well documented by organizations such as The Atlantic Council. These influences and the embedding of technology have not just cybersecurity and privacy implications, but geopolitical and economic ones as well.

Some of the key themes in the third pillar include:

Exporting American AI to Allies and Partners

Countering Chinese Influence in International Governance Bodies

Strengthening AI Compute Export Control Enforcement

Plugging Loopholes in Existing Semiconductor Manufacturing Export Controls

Align Protection Measures Globally

Ensuring that the U.S. Government is at the Forefront of Evaluating National Security Risks in Frontier Models

There’s a lot to unpack in this pillar due to the expansive focus areas, but the key theme is exerting American influence to ensure American dominance in AI. That shouldn’t be surprising given it is a theme of the AI Action Plan itself, and is also echoed in previous Trump EOs focused on AI, such as “Removing Barriers to American Leadership in AI”.

The U.S. is looking to establish a consortium from industry to create full-stack AI export packages. This indicates the U.S. is tapping into its commercial tech sectors to help ensure U.S. technologies are pervasive around the globe among partners. The U.S. is also striving to align international AI governance approaches with American values and counter authoritarian influence. This may be challenging given how far apart the U.S. and EU are growing when it comes to their regulatory and AI governance approaches, as I discussed above, although both oppose authoritarian influence, at least historically.

Strengthen AI Compute Export Control Enforcement & Plugging Loopholes in Semiconductor Manufacturing Export Controls

The U.S. has taken a stance of pushing for export controls focused on limiting China’s access to advanced semiconductors and associated manufacturing equipment. The success of these efforts has been far from perfect though.

Just two days ago, news broke that at least $1 billion of NVIDIA AI chips illegally entered China, alongside commentary from NVIDIA’s CEO Jensen Huang that the company would resume selling H20 chips to China. It is also widely reported that China has worked around these restriction mechanisms through pathways such as smuggling networks, transit points in nearby nations such as Vietnam, Taiwan and Singapore, front companies, and offshore AI services and data centers, all in attempts to succeed in its AI ambitions despite these export controls from the U.S.

Additionally, they often say necessity is the mother of invention, and one stark example of that is the Chinese AI company DeepSeek. DeepSeek-R1’s launch rattled U.S. markets, leading to a tech sector sell-off, including the Nasdaq dropping roughly 3.6% and the S&P 500 falling 1.8%. DeepSeek focuses on efficiency with its LLMs, including lower resource requirements and innovative architectural approaches, which some speculate is a direction taken because of the difficulty in acquiring leading AI chips under the export controls mentioned above.

The AI Action Plan seeks to strengthen these export controls, as well as plug loopholes in existing controls, such as targeting component sub-systems rather than just major systems necessary for semiconductor manufacturing.

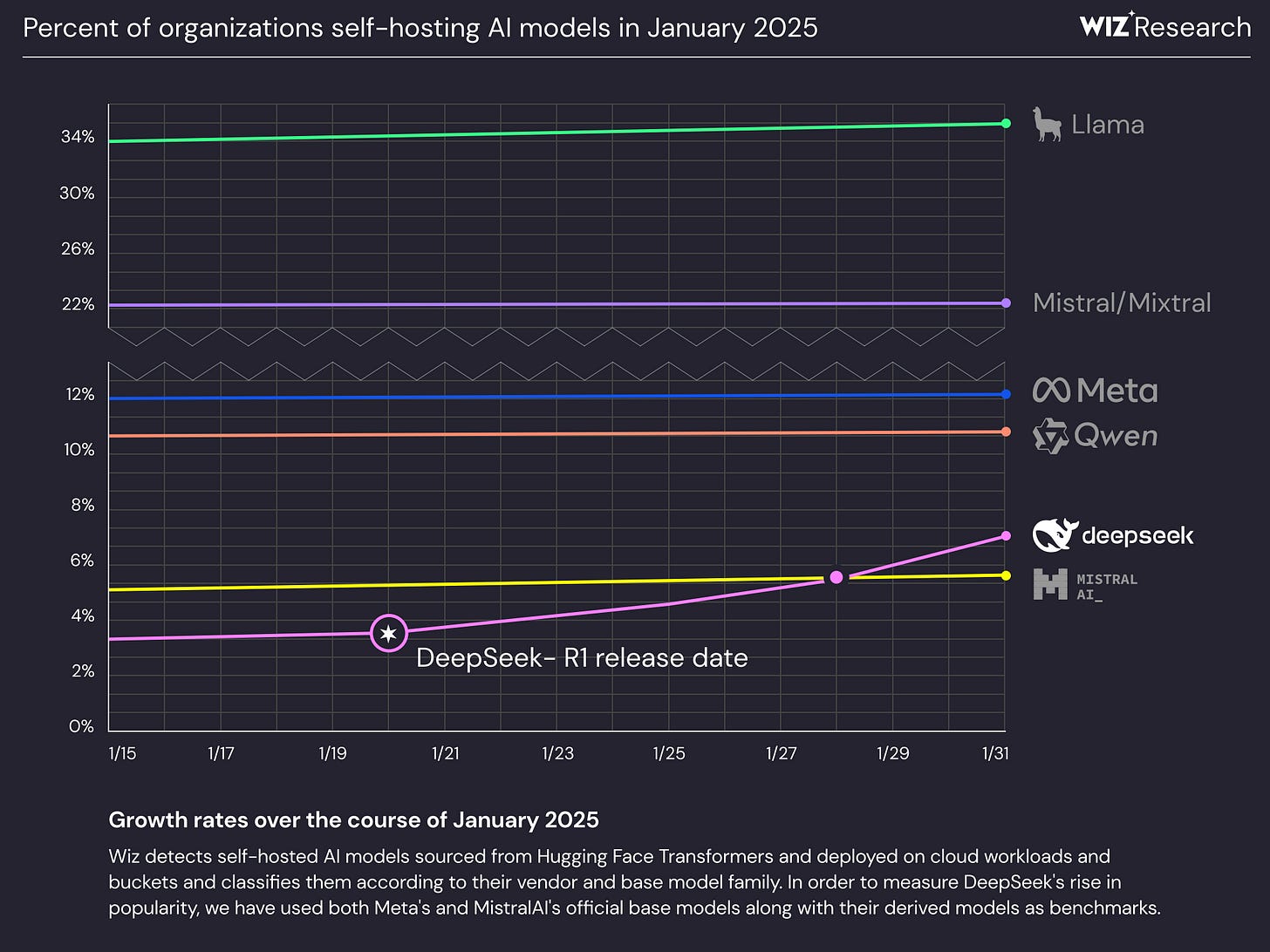

Many have raised cybersecurity concerns around the DeepSeek models, as discussed in this Center for Strategic and International Studies (CSIS) article, with some nations outright banning their use and some U.S. Federal agencies instructing employees not to use them due to national security concerns. Despite those concerns, research from industry leaders such as Wiz earlier this year showed rapid adoption of DeepSeek-R1, with it quickly approaching 10% among self-hosted AI models. Wiz also found exposed DeepSeek databases leaking sensitive information, including chat histories.

The AI Action Plan also aims to align international partners with the U.S. approach, and even calls for the U.S. to use methods such as secondary tariffs to achieve greater international alignment. Tariffs of course have been a hot topic in 2025, and this indicates the U.S. is willing to use economic incentives and policies to exert its influence globally in efforts to stymie China’s access to AI-related technologies and equipment.

Another area of the AI Action Plan that highlights cybersecurity risks discusses evaluating national security risks in frontier models. This includes assessing potential security vulnerabilities as well as foreign influence from the use of adversaries’ AI systems in critical infrastructure and in the broader U.S. economy.

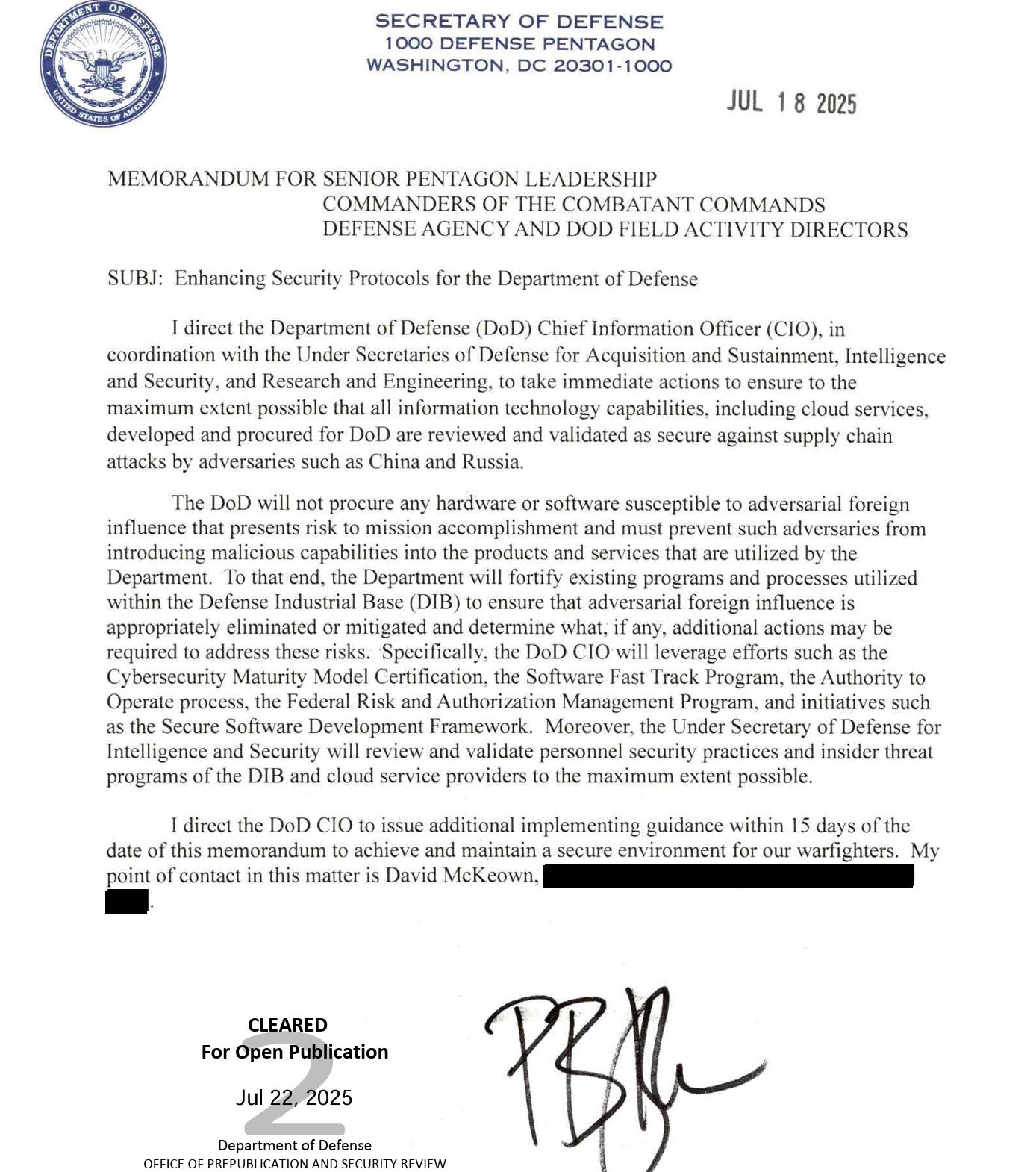

While not specific to AI, this aligns with recent headlines where Defense Secretary Pete Hegseth issued a directive ordering the DoD CIO to take additional measures to ensure DoD technology is protected from the influence of top adversaries. Ironically, this came after news broke that one of the DoD’s largest tech vendors, Microsoft, was relying on China-based engineers to support U.S. DoD cloud environments.

This is where things get problematic, given some of the DoD’s largest IT vendors are also global technology giants with footprints around the world and complex supply chains, relationships and competing priorities, often pitting themes of economics and profits against the backdrop of national security interests.

Closing Thoughts

The U.S. AI Action Plan is ambitious and brings together the various elements that are critical to ensuring U.S. dominance when it comes to AI. These include economics, capital, incentives, energy demands, model development and adoption, and cybersecurity.

All of the topics are underpinned by geopolitics, especially as the world’s two leading nations on the AI front continue to race for supremacy, allocating investments, aligning their national policies accordingly and looking to exert influence globally to ensure their interests thrive.

It remains to be seen how much of the action plan materializes, especially given it will face some challenges due to recent Federal workforce restructuring, RIFs and budgetary cuts. That said, some speculate the very technology itself will help address some of these challenges.

For those in the software and cybersecurity communities, while the AI Action Plan is produced by the government, its ambitions and intentions expand well beyond the U.S. Federal government into the entire nation’s economic prosperity and national security.

We need to be producing the most innovative and capable AI software, products and models, and leveraging this transformative technology to address longstanding systemic cybersecurity challenges such as vulnerability management, application security, workforce shortfalls and more. We also need to lean into best practices and guidance from sources such as NIST, OWASP and others to ensure we secure AI itself and don’t make the U.S. critical infrastructure and commercial attack surface more porous than it already is.

We’re in this together, for better or worse.