Vulnerability Velocity & Exploitation Enigmas

Vulnerability exploitation is on the rise, right as the attack surface is poised to explode with AI-driven development

As I shared recently, some of the industry’s most cited and referenced reports of the year dropped. These include Verizon’s DBIR, Mandiant’s M-Trends, and Datadog’s State of DevSecOps.

While each report is comprehensive and covers multiple problem spaces and topics, they all touch on AppSec trends. One trend they all share is that vulnerability exploitation is on the rise. In the DBIR's case, it has overtaken phishing, which has historically enjoyed a leading spot alongside credential compromise.

This comes after the DBIR’s 2024 report dubbed the period the “vulnerability era”, meaning the problem got worse in the year after it earned that title.

Mandiant’s M-Trends report had a similar finding, with vulnerability exploitation being the most common initial infection vector in 2024.

So we see that attackers are not only having more success with vulnerability exploitation as a primary attack vector, but are also focusing on it as one of their primary exploitation methods.

When you flip it around and look at defenders, we see the opposite outcome when it comes to vulnerability remediation. We’re struggling more than ever to keep up with the pace of vulnerabilities, drowning in massive vulnerability backlogs, and trying to use context to separate the signal from the noise.

As Jerry Gamblin, a vulnerability researcher I often cite, has shown, as of today we’re seeing 30% year-over-year (YoY) CVE growth. That doesn't even account for the NVD's historical backlog of 39,000 unenriched vulnerabilities, as pointed out by Francesco Cipollone on my post this morning.

Despite this massive growth in vulnerabilities, organizations are struggling to know what to remediate, as we know less than 5% of CVEs in a given year are ever actually exploited. Organizations often waste insane amounts of time and labor forcing development and engineering peers to remediate vulnerabilities that pose little actual risk, and yet we wonder why they hate us and dread engaging with us.

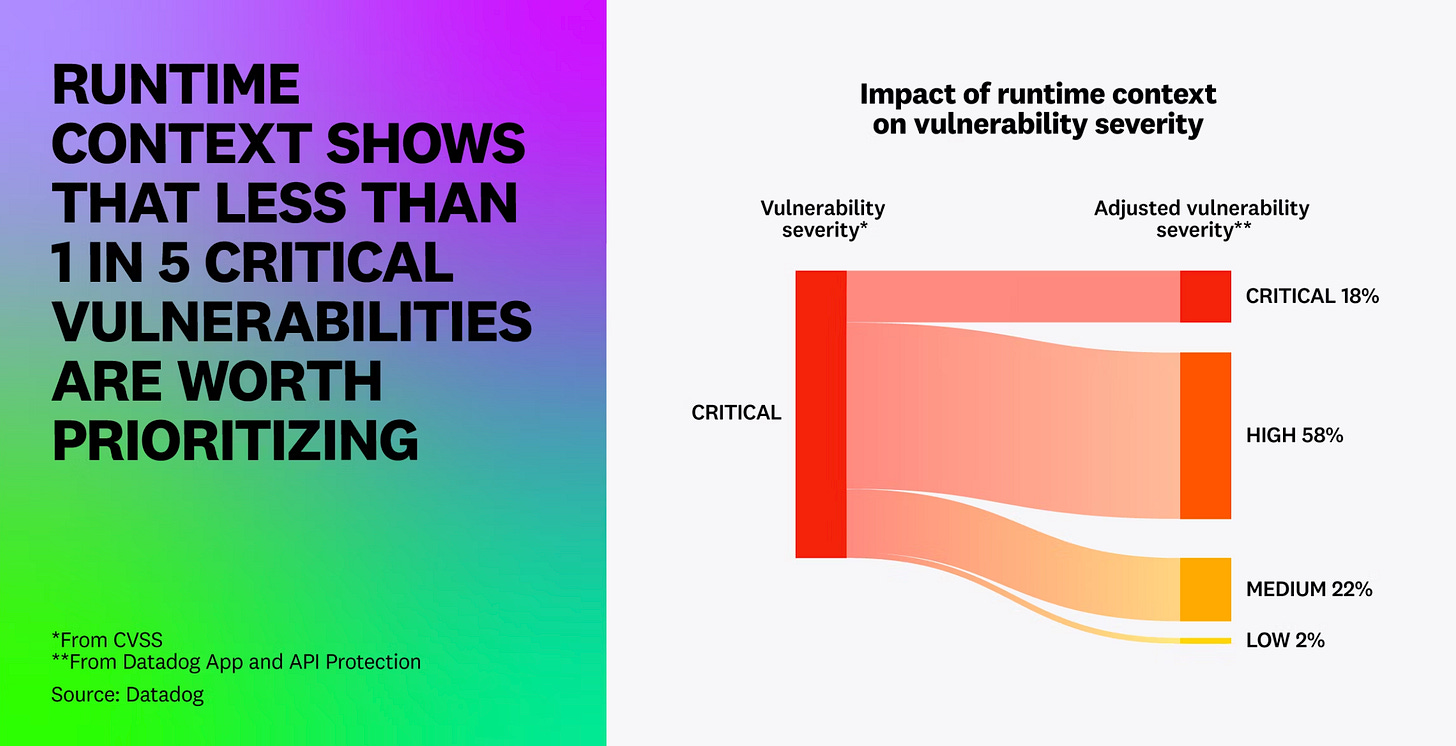

Datadog’s report helps substantiate this, showing only 20% of “critical” vulnerabilities (don’t get me started on the nonsense of CVSS base scores) are exploitable at runtime.

Other firms, such as Endor Labs, have shown similar findings via reachability analysis, showing that 92% of vulnerabilities are purely noise once you apply context: whether they are known to be exploited, likely to be exploited (e.g. EPSS), reachable, have existing exploits/PoCs, have existing fixes, and so on.
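To make the idea of context-based filtering concrete, here is a minimal sketch of how those signals could be combined to cut a backlog down to what deserves a developer's time. This is an illustration, not how Endor Labs or any other vendor does it. The `Finding` fields, the placeholder CVE identifiers, the sample values, and the 0.10 EPSS cutoff are all assumptions made up for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    """One scanner finding plus context. Field names are hypothetical."""
    cve_id: str           # placeholder identifiers, not real CVEs
    cvss_base: float      # kept for reference; deliberately not used to rank
    epss: float           # estimated probability of exploitation, 0.0 to 1.0
    in_kev: bool          # listed as known exploited (e.g. CISA KEV)
    reachable: bool       # vulnerable code is actually reachable in the app
    exploit_public: bool  # a public exploit or PoC exists
    fix_available: bool   # a patched version exists


EPSS_THRESHOLD = 0.10  # assumed cutoff; tune to your own risk tolerance


def is_actionable(f: Finding) -> bool:
    """Keep a finding only if it is reachable AND has some threat signal."""
    threat_signal = f.in_kev or f.exploit_public or f.epss >= EPSS_THRESHOLD
    return f.reachable and threat_signal


def prioritize(findings: list[Finding]) -> list[Finding]:
    """Drop the noise, then rank: known exploited first, fixable next, then EPSS."""
    actionable = [f for f in findings if is_actionable(f)]
    return sorted(
        actionable,
        key=lambda f: (not f.in_kev, not f.fix_available, -f.epss),
    )


# Made-up sample backlog for illustration only.
findings = [
    Finding("CVE-EXAMPLE-0001", 9.8, 0.02, False, False, False, True),
    Finding("CVE-EXAMPLE-0002", 7.5, 0.45, False, True, True, True),
    Finding("CVE-EXAMPLE-0003", 6.1, 0.03, True, True, False, False),
    Finding("CVE-EXAMPLE-0004", 9.1, 0.01, False, True, False, True),
]

queue = prioritize(findings)
for f in queue:
    print(f.cve_id, f"epss={f.epss:.2f}", f"kev={f.in_kev}")
```

Note that the two "critical" findings by CVSS base score fall out entirely in this sketch: one isn't reachable, and the other has no sign of exploitation activity. What's left is a much shorter list to hand to a development team.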

Traditional vulnerability management approaches burden our development peers with this toil. And this doesn’t account for developers now leaning into AI-driven development tools and copilots, experiencing productivity boosts of roughly 40%. Many are now claiming AI is writing outsized portions of code and soon may write nearly all of it.

I covered this point in a recent article titled “Security’s AI-Driven Dilemma: A discussion on the rise of AI-driven development and security’s challenge and opportunity to cross the chasm”.

As I tried to lay out in that article, it is outright foolish to think the methods of the past will succeed in the age of AI-driven development and where software development is headed. That includes eras such as “shift left” and DevSecOps, where we threw a bunch of noisy, low-fidelity tooling into CI/CD pipelines and then dumped massive lists of findings without context onto developers.

As I laid out above, what got us “here”, which isn’t a good place, certainly won’t get us “there”: a more secure and resilient digital ecosystem, which is what we’re all ultimately aiming for.

The truth is we need to be early adopters and innovators with AI, or we risk not only continuing to fall behind, but also perpetuating the problems of the past: drowning in vulnerabilities, having broken relationships with our development peers, and not resembling anything close to the “business enabler” that security likes to LARP as.

We know our development peers are using this technology, which is fundamentally changing software development. We also know that attackers are experimenting with AI.

We need to do the same. If we don’t, it will be not only to our detriment, but to that of our increasingly digital society.

Way too much time is spent on scanning tools to find vulnerabilities in software, and not nearly enough time is spent on actually fixing those vulnerabilities. I can't tell you how many times an app makes it to continuous monitoring and Ops will spend millions on tools to ring-fence the vulnerability (XDR, microsegmentation, air gaps, etc.) when they could just patch the f@#$ing code. It's the walled garden of security all over again. Would love to hear your thoughts on the subject.