Resilient Cyber's Collection of AI Security Resources

A collection of AI Security Interviews, Publications and Frameworks

Some folks in my network have asked whether I have a collection of AI security resources. While I have plenty of bookmarks, articles, and books on my bookshelf, I’ve dived headfirst into GenAI/LLMs and AI Security over the past ~24 months, so I wanted to use this article to highlight some resources for the community.

Below is a collection of articles, interviews, and resources I’ve created as I have been intentionally upskilling myself around all things AI. I’ll go chronologically, in order of when I created the resources or had the discussions, but the order is in no way a representation of the value or importance of the resources.

Below is a snapshot of the amazing leaders and folks I’ve had conversations with and who you will get to hear from:

So, let’s check out what I’ve gathered so far!

Interested in sponsoring an issue of Resilient Cyber?

This includes reaching over 45,000 subscribers, ranging from Developers, Engineers, and Architects to CISOs/Security Leaders and Business Executives

Reach out below!

CISA and NCSC’s Take on Secure AI Development

In 2023, I began researching secure AI development, and one of the initial resources I came across was a joint publication between CISA and the UK’s NCSC.

It lays out comprehensive guidelines for secure AI system development, covering unique aspects of AI such as models, machine learning, data poisoning, prompt injection, and more. It also covers some of the nuances between SaaS-based AI model usage and self-hosted deployment models.

It addresses the full tech stack, from the underlying infrastructure to securing models, updating organizational policies and processes such as incident response (IR), and the cultural element of properly training and equipping the workforce to leverage this emerging technology.

Resilient Cyber w/ Rob van der Veer - Navigating the AI Security Landscape

One of the first “AI Security” leaders I came across in my network was Rob van der Veer, who has a long history of securing AI systems and environments and a deep, rich understanding of AI.

Rob also leads OWASP’s “AI Exchange,” which we will discuss soon.

Rob and I covered key topics such as:

Some of the most pressing risks around the rapid growth and adoption of AI in production environments

Various governments’ efforts to regulate AI, most notably the EU with the EU AI Act and the U.S. with the AI Executive Order (EO), which has since been revoked under President Trump.

How to secure and govern AI without hindering innovation and opportunity

Navigating compliance burdens with the countless frameworks and requirements out there

Digging into the OWASP AI Exchange

As I mentioned above, Rob leads OWASP’s “AI Exchange,” an open source collaborative effort to advance the development and sharing of global AI security standards, regulations, and knowledge.

It covers AI threats, vulnerabilities, and controls, and offers the “AI Exchange Navigator,” which covers key topics such as:

General controls against all threats

Controls against threats through runtime use

Controls against development-time threats

Runtime application security threats

If you haven’t checked out the OWASP AI Exchange yet, I strongly recommend doing so. I have found myself referencing it many times over the last two years as I work to ensure I am competent in the various areas of AI security and the key considerations for secure organizational adoption of AI.

OWASP LLM AI Cybersecurity & Governance Checklist

In March 2024, I came across OWASP’s newly published LLM AI Cybersecurity & Governance Checklist. You know we folks in Cyber love our checklists, so naturally this caught my attention.

It can often be overwhelming to determine where to start with LLM and AI security, and this resource helps hone your focus on key considerations and risks.

This publication does a great job delineating between broader AI and GenAI/LLMs, and it provides good mental models, such as an AI Threat Map.

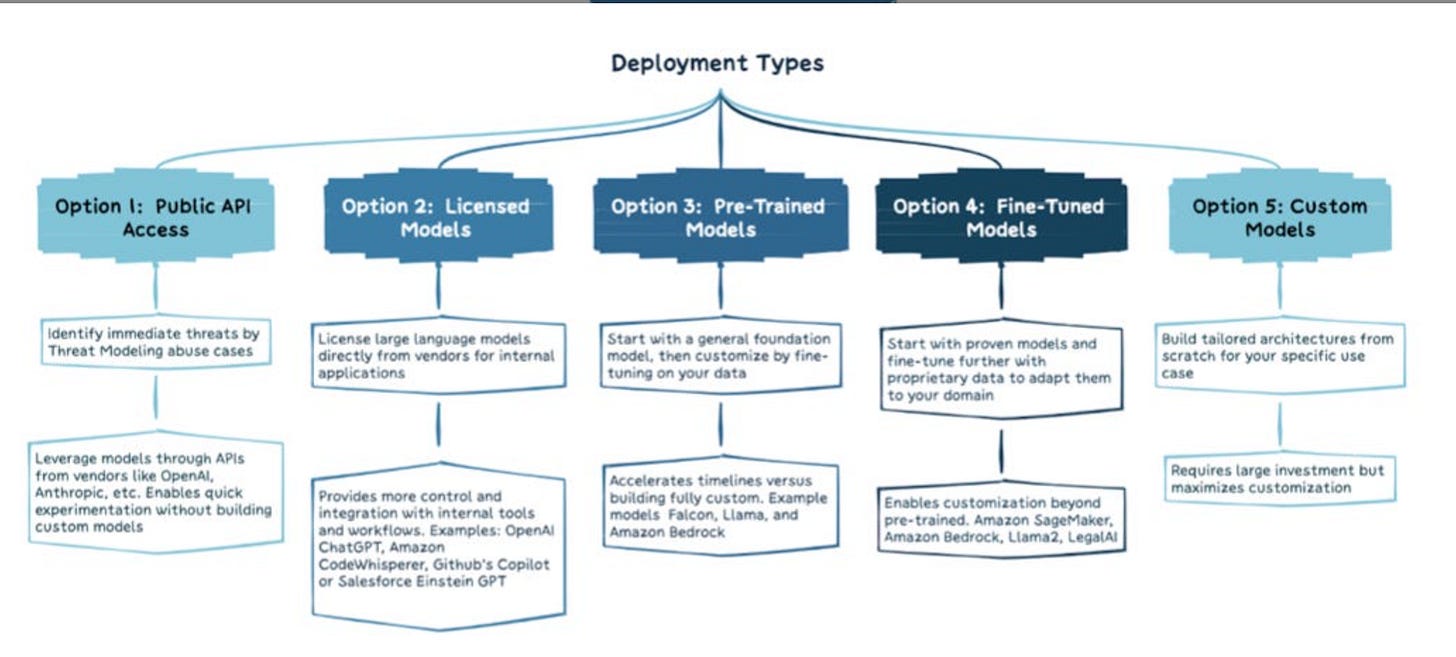

It also provides a good model for organizations to determine their LLM strategy and specific actionable steps to take.

We know there are various LLM deployment types, ranging from public API usage and licensing a model to pre-training, fine-tuning, and even custom models, which they depict below:

I found the LLM AI Cybersecurity & Governance Checklist to be an excellent resource that isn’t too technical and could serve business and security leaders alike as they look to implement high-level strategies around the secure use of LLMs and AI.

Leveraging an AI Security Framework: An overview of Databricks AI Security Framework

In April 2024, I came across the Databricks AI Security Framework (DASF) from industry leader Databricks. Given it came from a commercial vendor, I was initially skeptical, but I was pleased to find a comprehensive 80+ page framework grounded in their real-world expertise and experience helping customers implement secure AI architectures and adoption.

Their DASF covers key topics such as:

Various model types, from open source to external and third-party models, and key considerations for each

AI system components and their risks

55 different technical security risks across the 12 AI system components in their framework

Different AI lifecycle phases and considerations such as data, models, deployments and operations

Bringing (AI) Security Out of the Shadows

While not strictly focused on AI, this is a piece I wrote discussing the cybersecurity behaviors that foster shadow technology usage, whether cloud, mobile, SaaS, or now AI.

I discuss the various habits and anti-patterns in security that drive shadow usage, including of AI:

Cyber’s reactionary rather than proactive nature

Risk aversion and skepticism

Cumbersome processes and compliance toil

Longstanding relationship fractures with peers in Engineering, Development and the Business

I also discuss what we can do to fix these challenges and keep AI from seeing the rampant shadow usage and lack of security oversight that accompanied prior technological waves.

Resilient Cyber w/ Steve Wilson - Securing the Adoption of GenAI & LLMs

Another key industry leader I came across regarding AI security is Steve Wilson. Steve leads OWASP’s Top 10 for LLMs and is the author of The Developer’s Playbook for LLM Security: Building Secure AI Applications.

Steve and I chatted about:

The OWASP LLM Top 10, how it came about and the value it provides the community

Some key similarities and differences when it comes to securing AI systems and applications compared to broader AppSec

Where organizations should look to get started to try and keep pace with the rapid business adoption of LLMs and GenAI

His book, The Developer’s Playbook for LLM Security

Key nuances for security between open and closed source models, services and platforms

Supply chain security concerns related to LLMs

Navigating AI Risks with MITRE’s Adversarial Threat Landscape for AI Systems (ATLAS)

As I continued to look into AI threats, not just risks and frameworks, I came across MITRE’s ATLAS in September 2024. It is an excellent resource for exactly that.

MITRE ATLAS is a living knowledge base of adversary tactics and techniques based on real-world attack observations and realistic demonstrations from AI Red Teams and Security Groups.

ATLAS is modeled after MITRE’s widely popular ATT&CK framework and can be used for activities such as security analysis, AI development/implementation, threat assessments, red teaming, and reporting attacks on AI-enabled systems.

MITRE produced the ATLAS Matrix, which lays out threats, risks, and vulnerabilities, as well as tactics and techniques organizations should be familiar with as they adopt AI-enabled systems or embed AI technologies into their products.

In my article, I walk through the various tactics and techniques, as well as mitigating measures that can be taken to reduce risks to AI systems using the insights from ATLAS. MITRE also maintains an ATLAS Mitigations page.

I strongly suspect MITRE ATLAS will be a key resource for the community into the future much like MITRE ATT&CK.

Resilient Cyber w/ Christina Liaghati - Navigating Threats to AI Systems

On the heels of digging into MITRE’s ATLAS, I sat down for a chat with Dr. Christina Liaghati, who is MITRE’s Project Lead for ATLAS.

She and I chatted about:

What ATLAS is and how it originated and the value it provides the community

The evolving AI threat landscape and the importance of having a framework to categorize the various emerging threats, tactics and techniques

The importance of being data-driven and actionable rather than letting FUD drive the AI security conversation

Christina’s experience participating in the first major AI security incident-focused tabletop exercise (TTX) with CISA and other Government and industry partners, and the value of exercises like this

Thoughts on both securing AI and using AI for security

Opportunities for the community to get involved in ATLAS

Resilient Cyber w/ Helen Oakley - Exploring the AI Supply Chain

Given my personal passion for and focus on software supply chain security, having previously published a book on it titled “Software Transparency”, the concept of AI supply chain security had my attention as well.

So naturally, I reached out to Helen Oakley, who is heavily involved with OWASP’s AI Security groups and is the Founding Partner of the AI Integrity & Safe Use Foundation (AISUF), to discuss AIBOMs and the broader AI supply chain.

Helen and I covered:

Where folks can get started with AI security

The intersections between AI and Supply Chain Security

The nuances of open source models and platforms such as Hugging Face and some risks to consider

What AIBOMs are and how they differ from SBOMs

Resilient Cyber w/ Walter Haydock - Implementing AI Governance

We know governing AI’s usage is among the key challenges, so I reached out to chat with my friend Walter Haydock, who is doing a lot of great work on this front. Walter is the Founder of StackAware and an AI Governance Expert, specializing in helping companies navigate AI governance and security certifications, frameworks, and risks.

We covered quite a bit of ground in our discussion:

We discussed Walter’s pivot with his company StackAware from AppSec and Supply Chain to a focus on AI Governance and from a product-based approach to a services-oriented offering and what that entails.

Walter has been actively helping organizations with AI Governance, including helping them meet emerging and newly formed standards such as ISO 42001. Walter provides field notes, lessons learned and some of the most commonly encountered pain points organizations have around AI Governance.

Organizations have a ton of AI Governance and Security resources to rally around, from OWASP, Cloud Security Alliance, NIST, and more. Walter discusses how and where he recommends organizations get started.

The U.S. and EU have taken drastically different approaches to AI and Cybersecurity, from the EU AI Act, U.S. Cyber EO, Product Liability, and more. We discuss some of the pros and cons of each and why the U.S.’s more relaxed approach may contribute to economic growth, while the EU’s approach of being a regulatory superpower may impede its economic growth.

Walter lays out key credentials practitioners can explore to demonstrate expertise in AI security, including the IAPP AI Governance credential, which he recently earned himself.

Agentic AI’s Intersection with Cybersecurity

As 2024 came to a close, it would be hard to deny that one of the largest trends had been not just AI, GenAI, or LLMs, but “Agentic AI” or AI Agents.

But what exactly is Agentic AI, why are so many focused on it, and what could its intersection and implications for Cybersecurity look like?

In this article, I took a deep dive into what Agentic AI is and its implications for startups, existing product vendors, investors, and cybersecurity. I covered key areas such as GRC, AppSec, and SecOps, as well as the broader market implications of Agentic AI.

Below is a BSidesSF 2024 talk from Chenxi Wang, whom I interviewed recently, that also dives into some of these implications.

Resilient Cyber w/ Filip Stojkovski & Dylan Williams - Agentic AI & SecOps

Among the areas in Cyber getting the most attention and excitement for AI is Security Operations (SecOps). In this episode, I was able to sit down with two industry leaders advocating for the adoption of AI across SecOps to address longstanding industry challenges.

I had been following Filip and Dylan for a while on LinkedIn and was really impressed with their perspective on AI and its intersection with Cyber, especially SecOps. We dove into that in this episode, including:

What exactly Agentic AI and AI Agents are, and how they work

What a Blueprint for AI Agents in Cybersecurity may look like, using the example from their blog post of the same title

The role of multi-agent architectures, potential patterns, and examples such as Triage Agents, Threat Hunting Agents, and Response Agents, and how they may work in unison

The potential threats to AI Agents and Agentic AI architectures, including longstanding challenges such as Identity and Access Management (IAM), Least-Permissive Access Control, Exploitation, and Lateral Movement

The current state of adoption across enterprises and the startup landscape, and key considerations for CISOs and security leaders looking to potentially leverage Agentic SecOps products and offerings

Resilient Cyber w/ Greg Martin - Agentic AI and AppSec

Another area tied to AI that has gotten tremendous excitement is AppSec. That is why I was excited to sit down with Ghost Security Co-Founder/CEO Greg Martin.

In this episode, we chat about Agentic AI and AppSec. Agentic AI is one of the hottest trends going into 2025, and we discuss what it is, the role it plays in AppSec, and which systemic industry challenges it may help tackle.

Greg and I chatted about a lot of great topics, including:

The hype around Agentic AI and what makes AppSec in particular such a promising area and use case for AI to tackle longstanding AppSec challenges such as vulnerabilities, insecure code, backlogs, and workforce constraints.

Greg’s experience as a multi-time founder, including going through acquisitions, and what continues to draw him back to being a builder and operational founder.

The challenges of historical AppSec tooling and why the time for innovation, new ways of thinking, and leveraging AI is due.

Whether we think AI will end up helping defenders or attackers more, given their mutual use of this promising technology.

And much more, so be sure to tune in and check it out, and check out his team at Ghost Security and what they’re up to!

Resilient Cyber w/ Grant Oviatt - Transforming SecOps with AI SOC Analysts

Following the conversation with Filip and Dylan, I remained incredibly interested in the art of the possible when it comes to AI and SecOps. So I sat down with Grant Oviatt of Prophet Security, who has a deep background in SecOps and IR with industry-leading firms and is now helping Prophet pioneer the SOC and SecOps in the age of AI.

SecOps continues to be one of the most challenging areas of cybersecurity. It involves addressing alert fatigue, minimizing dwell time and mean time to respond (MTTR), automating repetitive tasks, integrating with existing tools, and demonstrating ROI.

Grant and I dug into a lot of great topics, such as:

Systemic issues impacting the SecOps space, such as alert fatigue, triage, burnout, staffing shortages and inability to keep up with threats.

What makes SecOps such a compelling niche for Agentic AI and key ways AI can help with these systemic challenges.

How Agentic AI and platforms such as Prophet Security can aid with key metrics such as SLOs or mean time to remediate (MTTR) to drive down organizational risks.

Addressing the skepticism around AI, including its use in production operational environments and how the human-in-the-loop still plays a critical role for many organizations.

Many organizations also use Managed Detection and Response (MDR) providers, and how Agentic AI may augment or replace these existing offerings depending on organizational maturity, complexity, and risk tolerance.

How Prophet Security differs from vendor-native offerings such as Microsoft Copilot, and the role of cloud-agnostic offerings for Agentic AI.

Resilient Cyber w/ Sounil Yu - The Intersection of AI and Need-to-Know

In what has ended up being one of my most watched interviews/episodes ever, I had a chance to sit down with industry leader and friend Sounil Yu to discuss the intersection of AI and Need-to-Know.

While several of the interviews I shared above tend to focus on AI for Security, Sounil’s interview was more focused on Securing AI, or at least organizational use of it, especially when it comes to Copilots such as Microsoft Copilot.

Sounil is particularly focused on that at his startup Knostic, where he serves as Co-Founder and CTO alongside fellow industry leader Gadi. They specialize in need-to-know based access controls for LLM-based enterprise AI.

Sounil and I dug into a lot of interesting topics, such as:

The latest news with DeepSeek and some of its implications regarding broader AI, cybersecurity, and the AI arms race, most notably between China and the U.S.

The different approaches to AI security and safety we’re seeing unfold between the U.S. and EU, with the former being more best-practice and guidance-driven and the latter being more rigorous and including hard requirements.

The age-old concept of need-to-know access control, the role it plays, and potentially new challenges in implementing it when it comes to LLMs

Organizations rolling out and adopting LLMs and how they can go about implementing least-permissive access control and need-to-know

Some of the different security considerations between

Some of the work Knostic is doing around LLM enterprise readiness assessments, focusing on visibility, policy enforcement, and remediation of data exposure risks
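To make the need-to-know idea a bit more concrete, here is a minimal Python sketch, assuming a retrieval-augmented (RAG) setup in which each retrieved document carries a hypothetical `allowed_groups` label. It is not how Knostic or any specific product works; it only illustrates the principle of checking content against the requesting user’s entitlements, denying by default, before anything reaches the LLM’s context window. `Document`, `User`, `need_to_know_filter`, and `build_context` are all illustrative names.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    # Hypothetical label: the groups permitted to see this document.
    allowed_groups: frozenset = field(default_factory=frozenset)


@dataclass(frozen=True)
class User:
    name: str
    groups: frozenset


def need_to_know_filter(user: User, retrieved: list) -> list:
    """Keep only documents sharing at least one group with the user.

    Documents with no allowed_groups are dropped (deny by default).
    """
    return [d for d in retrieved if user.groups & d.allowed_groups]


def build_context(user: User, retrieved: list) -> str:
    """Assemble prompt context from authorized documents only."""
    permitted = need_to_know_filter(user, retrieved)
    return "\n\n".join(f"[{d.doc_id}] {d.text}" for d in permitted)


if __name__ == "__main__":
    docs = [
        Document("hr-001", "Salary bands for 2025", frozenset({"hr"})),
        Document("eng-042", "Deployment runbook", frozenset({"engineering"})),
        Document("all-007", "Holiday schedule", frozenset({"all-staff"})),
    ]
    alice = User("alice", frozenset({"engineering", "all-staff"}))
    print([d.doc_id for d in need_to_know_filter(alice, docs)])
```

In the example, Alice belongs to `engineering` and `all-staff`, so only `eng-042` and `all-007` survive the filter and the HR document never reaches the model. Filtering before retrieval results are handed to the LLM matters because, once sensitive text is in the prompt, the model may surface it in its answer regardless of who asked.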

Resilient Cyber w/ Mike Privette - 2024 Cyber Market Analysis Retrospective

While this episode wasn’t singularly focused on AI, I sat down with my friend Mike Privette of Return on Security to discuss his 2024 Cyber Market Analysis. We looked at trends related to M&A, funding rounds, round sizes, geographic metrics and more.

One thing we specifically called out during the discussion is the outsized role AI played in 2024 when it came to security investments, acquisitions, and valuations.

Resilient Cyber w/ Ed Merrett - AI Vendor Transparency: Understanding Models, Data and Customer Impact

A lot of the conversations I’ve shared focus on the enterprise risks tied to AI usage. This episode with Ed Merrett, the Director of Security & TechOps at Harmonic Security, focused on just that, especially data leakage.

Ed and I dove into a lot of interesting GenAI Security topics, including:

Harmonic’s recent report on GenAI data leakage shows that nearly 10% of all organizational user prompts include sensitive data such as customer information, intellectual property, source code, and access keys.

Guardrails and measures to prevent data leakage to external GenAI services and platforms

The intersection of SaaS Governance and Security and GenAI and how GenAI is exacerbating longstanding SaaS security challenges

Supply chain risk management considerations with GenAI vendors and services, and key questions and risks organizations should be considering

Some of the nuances between self-hosted GenAI/LLMs and external GenAI SaaS providers

The role of compliance around GenAI and the different approaches we see between the EU, with the EU AI Act, NIS2, DORA, and more, and the U.S.-based approach
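As a rough illustration of the kind of guardrail discussed above, here is a minimal Python sketch that screens a prompt for a few obviously sensitive patterns before it is sent to an external GenAI service. The pattern names, regexes, and function names are assumptions for illustration only, not Harmonic’s method; real data leakage controls are far more sophisticated than a handful of regular expressions, and this sketch will produce both false positives and misses.

```python
import re

# Illustrative patterns only (assumptions, not a complete ruleset).
SENSITIVE_PATTERNS = {
    # AWS access key IDs start with "AKIA" followed by 16 uppercase letters/digits.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # PEM-style private key headers.
    "private_key": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    # Very loose email matcher (will over-match; fine for a sketch).
    "email_address": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    # U.S. Social Security number format.
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def find_sensitive(prompt: str) -> list:
    """Return the names of all patterns that match the prompt."""
    return [name for name, pat in SENSITIVE_PATTERNS.items() if pat.search(prompt)]


def guard_prompt(prompt: str) -> str:
    """Block the prompt before it leaves the organization if anything matches."""
    hits = find_sensitive(prompt)
    if hits:
        raise ValueError(f"Prompt blocked, possible sensitive data: {', '.join(hits)}")
    return prompt
```

For example, `guard_prompt("my key is AKIAIOSFODNN7EXAMPLE")` raises a `ValueError` naming `aws_access_key_id`, while an ordinary prompt is returned unchanged. Blocking outright is the simplest choice; a gentler design might redact the match or prompt the user to confirm before sending.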

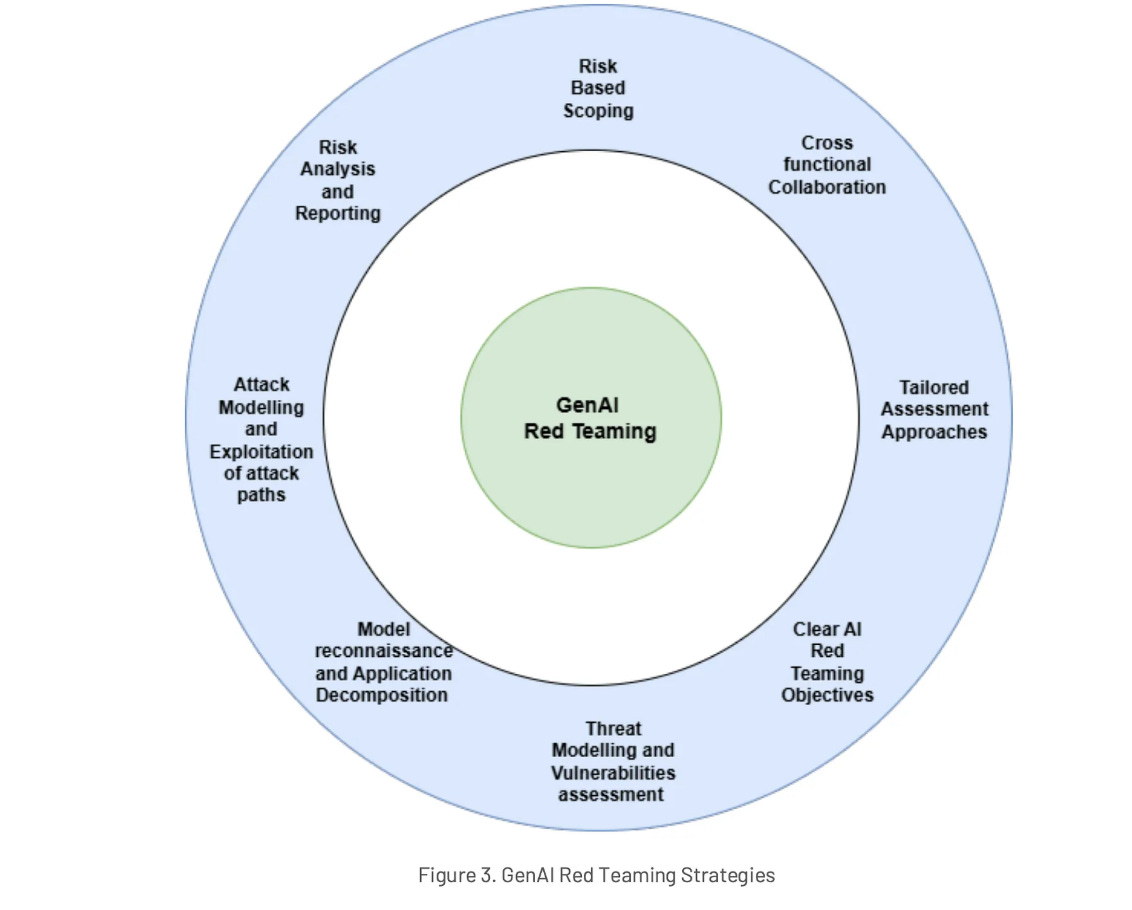

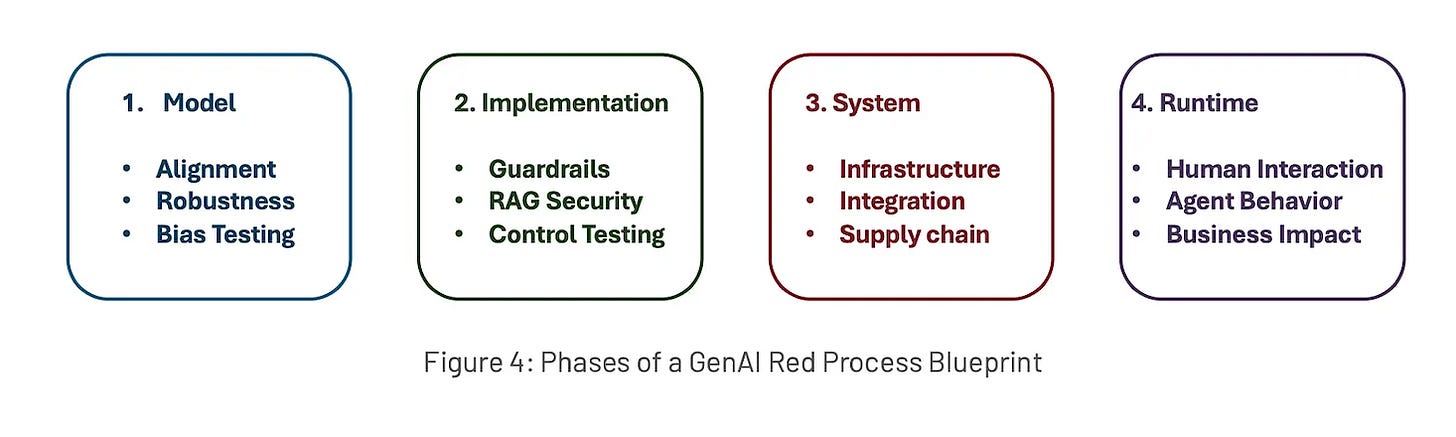

Implementing GenAI Red Teaming - The OWASP Way

Red Teaming has been a hot topic when it comes to GenAI and LLMs, being featured in key industry guidance, frameworks, and requirements, such as the EU’s AI Act and NIST’s AI Risk Management Framework.

I took some time to dive deep into OWASP’s publication “GenAI Red Teaming Guide: A Practical Approach to Evaluating AI Vulnerabilities”.

I covered:

What Red Teaming is, in the context of GenAI/LLMs and novel aspects of this tried and trusted security practice

Defining objectives and scope

Assembling the team

Threat Modeling

Addressing the entire application state

Debriefing, Post-Engagement Analysis and Continuous Improvement

Resilient Cyber w/ Lior Div & Nate Burke - Agentic AI & the Future of Cyber

As I have mentioned, Agentic AI and Service-as-a-Software are among the hottest areas when it comes to AI and its implications, including for Cyber. In this episode, I sat down with the Founder/CEO and the CMO of 7AI, a company looking to innovate in the SecOps space with Agentic AI.

Lior is the CEO/Co-Founder of 7AI and a former CEO/Co-Founder of Cybereason, while Nate brings a background as a CMO with firms such as Axonius, Nagomi, and now 7AI.

Lior and Nate bring a wealth of experience and expertise from various startups and industry-leading firms, which made for an excellent conversation.

We discussed:

The rise of AI and Agentic AI and its implications for cybersecurity.

Why the 7AI team chose to focus on SecOps in particular and the importance of tackling toil work to reduce cognitive overload, address workforce challenges, and improve security outcomes.

The importance of distinguishing between Human and Non-Human work, and why the idea of eliminating analysts is the wrong approach.

Moving beyond being reactive by leveraging Agentic AI for threat hunting and proactive security activities.

The unique culture that comes from having the 7AI team in-person on-site together, allowing them to go from idea to production in a single day while responding quickly to design partners and customer requests.

Challenges of building with Agentic AI and how the space is quickly evolving and growing.

Key perspectives from Nate as a CMO regarding messaging around AI and getting security to be an early adopter rather than a laggard when it comes to this emerging technology.

Insights from Lior on building 7AI compared to his previous role, founding Cybereason, which went on to become an industry giant and leader in the EDR space.

Resilient Cyber w/ Chenxi Wang - The Intersection of AI & Cybersecurity

One of the folks I make sure to follow and whose insights I always enjoy is Chenxi Wang, who has a wealth of experience as an Investor, Advisor, Board Member and Security Leader.

I had a chance to chat with Chenxi about the intersection of AI and Cybersecurity, what Agentic AI means for Cybersecurity Services and implications for the boardroom.

Chenxi and I covered a lot of ground, including:

When we discuss AI and Cybersecurity, the topic is usually divided into two categories: AI for Cybersecurity and Securing AI. Chenxi and I walk through the potential of each and which one she finds more interesting at the moment.

Chenxi believes LLMs are fundamentally changing the nature of software development, and the industry's current state seems to support that. We discussed what this means for Developers and the cybersecurity implications when LLMs and Copilots create the majority of code and applications.

LLMs and GenAI are currently being applied to various cybersecurity areas, such as SecOps, GRC, and AppSec. Chenxi and I unpack which areas AI may have the greatest impact on and the areas we see the most investment and innovation in currently.

As mentioned above, there is also the need to secure AI itself, which introduces new attack vectors, such as supply chain attacks, model poisoning, prompt injection, and more. We cover how organizations are currently dealing with these new attack vectors and the potential risks.

The biggest buzz of 2025 (and beyond) is Agentic AI or AI Agents, and their potential to disrupt traditional services work, which represents an outsized portion of cybersecurity spending and revenue. Chenxi envisions a future where Agentic AI and Services-as-a-Software may change what cyber services look like and how cyber activities are conducted within an organization.

Closing Thoughts

While this list is far from exhaustive when it comes to industry leaders and innovators looking to define cybersecurity in the age of AI, it does include some amazing folks I know are making a huge impact.

I am also far from an “expert” in AI, but I am intentionally learning all I can: consuming guidance, frameworks, and resources from those who are, having conversations with a diverse range of industry leaders focused on AI, and looking to learn and share all I can on my own journey as a security practitioner with this emerging technology.

I hope you enjoy these resources, find them helpful, and learn from the conversations as much as I have. I look forward to continuing to share more with the community as we go!