Resilient Cyber Newsletter #54

GRC Engineering, State of AI Report, Predicting AI’s Impact on Cyber, SAIL Framework & AI, and Secure Code Generation

Welcome!

Welcome to issue #54 of the Resilient Cyber Newsletter.

We’re heading into the 4th of July weekend, and I’m heading up to Northern VA where my son will be participating in the Virginia State Little League Baseball championships, which should be a lot of fun for our family.

I hope everyone has a great holiday weekend and takes time to reflect on the significance of the holiday for those of us in the U.S.

All that said, we have a lot of great resources this week from conversations on GRC Engineering and its impact on compliance, a comprehensive state of AI report, the introduction of a new AI secure lifecycle framework and discussions about AI’s impact on AppSec, so here we go!

Interested in sponsoring an issue of Resilient Cyber?

This includes reaching over 45,000 subscribers, ranging from Developers, Engineers and Architects to CISOs/Security Leaders and Business Executives.

Reach out below!

Navigating M&A: What every security leader needs to know

M&A is exciting – new products, new colleagues, new possibilities. Often overlooked, cybersecurity can make or break the success of a deal. Acquirers often face fragmented systems, different security policies, and new vulnerabilities. These issues introduce real security risks.

On July 17th, CISOs Dave Lewis, Wendy Nather, and Kane Narraway draw on the collective experience of 30+ M&As to examine the security implications of M&A and outline strategies for mitigating risk.

Join the webinar for practical advice on:

What to evaluate during due diligence, and how to prioritize risks.

How to approach access control across fragmented systems.

How to respond to growing risks like social engineering and insider threats.

How compliance adherence becomes more complex, and the first steps you should take.

Cyber Leadership & Market Dynamics

Resilient Cyber w/ AJ Yawn - Transforming Compliance Through GRC Engineering

In this episode, we sat down with AJ Yawn, Author of the upcoming book GRC Engineering for AWS and Director of GRC Engineering at Aquia, to discuss how GRC engineering can transform compliance.

We discussed the current pain points and challenges in Governance, Risk, and Compliance (GRC), how GRC has failed to keep up with software development and the threat landscape, and how to leverage cloud-native services, AI, and automation to bring GRC into the digital era.

We dove into:

What the phrase “GRC Engineering” means and how it differs from traditional Governance, Risk and Compliance

What some of the major issues are with traditional compliance in the age of DevSecOps, Cloud, APIs, Automation and now AI

Specific examples of GRC Engineering, including the use of automation, APIs and cloud-native services to streamline security control implementation, assessment and reporting

The promise and potential of AI in GRC, and how AJ is using various models for control assessments, artifact creation and more, and how GRC practitioners should be leveraging AI as a force multiplier

AJ’s new book “GRC Engineering For AWS: A Hands-On Guide to Governance, Risk and Compliance Engineering”

The State of AI Report: The Builder’s Playbook

While this is an AI focused resource, I feel it makes more sense in this section of the newsletter due to the emphasis on the broader market dynamics, startups, incumbents, investors and more.

This “State of AI Report” from Iconiq is one of the best resources I have seen so far in 2025 on this front. It claims to lay out a practical roadmap for AI innovation and, in my opinion, it does so, while also providing deep insights on the evolution, adoption and future implementation of AI, much of which is very relevant to security as well.

In fact, Security ranks among the top 5 challenges for those deploying models, alongside issues such as Hallucinations, Explainability and Trust, all of which I would argue are directly related to security and have security implications, such as the package hallucinations I discussed last week.

Agents of course are a hot topic, with 80%+ of organizations reporting they are either actively deploying agents, experimenting with doing so, or in the early research phases.

The report shares a TON of other insights related to spending, hiring, costs related to AI initiatives, budget allocations and more. Another key insight shared was that coding assistants were by far the leading use case in terms of impact on productivity, which has major ramifications for AppSec, as we know from the rise of vibe coding and the implicit trust developers place in code generated by LLMs and coding assistants.

For a deep dive on the implications of coding assistants, I recommend a recent article from Dave Aitel and Dan Geer titled “AI and Secure Code Generation” or my own article titled “Security’s AI-Driven Dilemma”.

The 10 Hottest Cyber Startups of 2025

If you’re like me, you often don’t give a ton of credibility to the “Top” lists that come out; however, some are worth paying attention to. One is from CRN, which recently named the leading up-and-coming companies that are offering new approaches to securing cloud, data, AI and identities.

Those cited include Endor Labs and Oligo. I currently serve as the Chief Security Advisor at the former, and I’ve had a chance to interact quite a bit with the latter, which is leading the charge around runtime security and Application Detection and Response (ADR), as well as doing some amazing research, including breaking news of an Apple-related vulnerability around RSA.

Scattered Spider Hacking Group Now Targeting Airlines and Transportation

News broke last week that the notorious hacking group “Scattered Spider” is targeting the aviation and transportation sectors, with some organizations, such as Canadian airline “WestJet”, already seeing outages to their systems and mobile applications.

The news and guidance have come from credible sources such as Charles Carmakal, who serves as the CTO for Google’s Mandiant Consulting.

AI

Predicting AI’s Impact on Security

If you’re like me, you likely read, listen to and consume as much as you can related to the intersection of AI and cybersecurity. That said, it can be a lot to keep up with, from startups and VCs to the need to secure AI as well as use AI for security use cases.

One of the top leaders I follow is Caleb Sima, who produces a lot of excellent content on the topic. He recently put out a Medium article titled “Predicting AI’s Impact on Security”, which I found informative and which resonated with a lot of my thinking on the topic as well.

In the article (and associated talk), Caleb makes the case that AI has the potential to “revolutionize” cyber by addressing key challenges in coverage, context and communication. He sees AI being able to help CISOs in areas where systemic challenges exist, such as vulnerability management, third-party incidents, IAM and more, while also helping with more mundane activities such as internal documentation and meeting transcription.

As organizations’ engineering practices change to adapt to AI, the security landscape will need to change alongside them, in terms of tools and technologies as well as practices and methodologies.

AI for Security: It’s time to get over our trust issues

Anyone who’s been paying attention to the AI discussion in cybersecurity knows it involves two angles:

Securing the use of AI

Using AI for Security

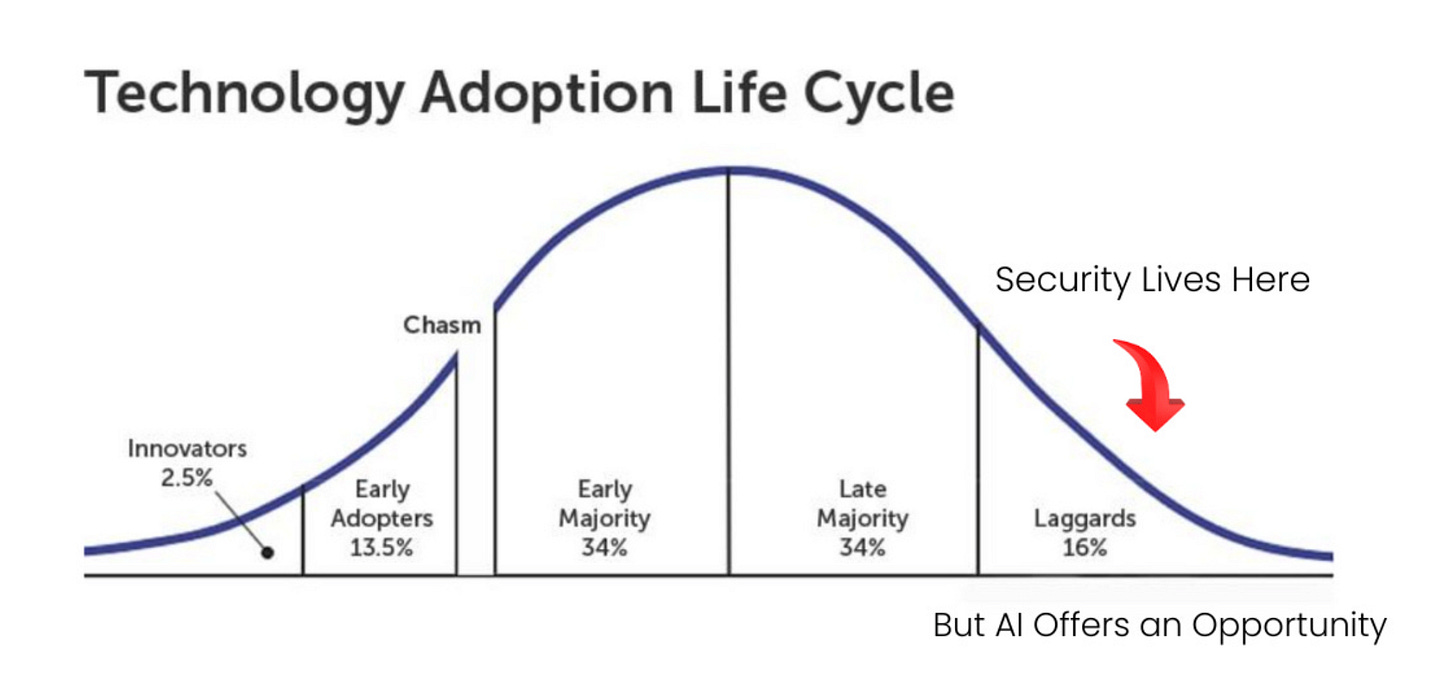

The latter of course has been a much harder conversation for many security practitioners, as they’re rightfully concerned with the security implications of AI adoption. That said, many should equally be concerned with the prospects of what AI can do for security, a point I tried to make in my own article titled “Security’s AI-Driven Dilemma: A discussion on the rise of AI-driven development and security’s challenge and opportunity to cross the chasm”.

In that article I argued that security is historically a late adopter and laggard of technological waves, but AI offers an opportunity for cyber to be an early adopter and innovator, and to use the technology to address longstanding cyber issues.

VC firm Chemistry makes a similar point in a recent article discussing how security needs to get over its trust issues and lean into adopting AI to aid security use cases. They point out examples from VulnMgt, AppSec, Email Security and more, along with vendors operating in those spaces (albeit likely with some bias for their portfolio companies).

This piece lays out the various areas where AI is having and will have an impact, tackling challenges around vulnerability backlogs, GRC artifacts and processes, SecOps alert fatigue, identity security and more. I agree with the authors and I’m excited about the prospect of AI in cyber.

Secure AI Lifecycle (SAIL) Framework

With the rapid adoption and evolution of AI, teams are often looking for a framework to help turn principles and guidance into action.

I’m excited to have collaborated with the Pillar Security team on this publication, which I think is a helpful tool for security and software practitioners building with and on AI systems. It covers everything from executable data in the form of prompts, to agency via Agentic AI, to nuanced angles to consider such as model poisoning and more.

The paper:

🔷 Lays out the AI development lifecycle and AI security landscape

🔷 Maps more than 70 risks across various AI development and deployment phases

🔷 Provides mitigations, mapped to leading frameworks such as ISO and NIST AI RMF

🔷 Captures a comprehensive definition of AI system components

Asana AI Incident: Comprehensive Lessons Learned for Enterprise Security and CISOs

We recently saw an incident involving Asana AI, which helps enable organizations with AI assistants. The incident impacted the data of over 1,000 organizations through a single line of code. This equates to roughly 0.8% of Asana’s 130,000 enterprise customers.

This piece from Adversa AI breaks down the incident, which is tied to MCP, a protocol that has seen rapid adoption and implementation despite many security practitioners and researchers raising security concerns.

The blog does a great job summarizing key impact metrics, what was at risk and why this matters to CISOs.

As the blog points out, the crux of the issue is that, via the Asana MCP server, Organization A was able to query cached results from Organization B. This was due to a “confused deputy” bug in the MCP server, which doesn’t re-verify tenant context for cached responses, as well as a missing identity management piece for AI agents (a hot topic I have been talking quite a bit about).

The blog dives much deeper in terms of who was involved, the specifics of the incident, and the technical details. It is worth a read.
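To make the cached-response problem more concrete, here is a minimal Python sketch of the general pattern. It is not Asana’s code and not part of the MCP spec; the class names, the `fetch_projects` helper and the tenant IDs are all hypothetical and exist only for illustration. The first cache keys results by query alone, so a later tenant can be served an earlier tenant’s data. The second includes the tenant in the cache key, so a hit is only ever returned to the tenant that produced it.

```python
from typing import Callable, Dict, Tuple

# Hypothetical fetch function type: (tenant_id, query) -> result
Fetch = Callable[[str, str], str]


class UnsafeCache:
    """Keys cached results by query only (illustrates the bug class)."""

    def __init__(self) -> None:
        self._store: Dict[str, str] = {}

    def get(self, tenant_id: str, query: str, fetch: Fetch) -> str:
        # Bug: tenant_id plays no part in the cache key, so whoever asks
        # second receives whatever was cached for the first tenant.
        if query not in self._store:
            self._store[query] = fetch(tenant_id, query)
        return self._store[query]


class TenantScopedCache:
    """Keys cached results by (tenant, query) so hits stay within a tenant."""

    def __init__(self) -> None:
        self._store: Dict[Tuple[str, str], str] = {}

    def get(self, tenant_id: str, query: str, fetch: Fetch) -> str:
        key = (tenant_id, query)
        if key not in self._store:
            self._store[key] = fetch(tenant_id, query)
        return self._store[key]


def fetch_projects(tenant_id: str, query: str) -> str:
    # Stand-in for a real backend call (hypothetical).
    return f"{query} results for {tenant_id}"


if __name__ == "__main__":
    unsafe = UnsafeCache()
    unsafe.get("org-b", "list projects", fetch_projects)
    print(unsafe.get("org-a", "list projects", fetch_projects))

    safe = TenantScopedCache()
    safe.get("org-b", "list projects", fetch_projects)
    print(safe.get("org-a", "list projects", fetch_projects))
```

Scoping the cache key is only one possible mitigation. A real service would likely also re-check authorization on every read, which this sketch does not attempt to show.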

Intro to OAuth for MCP Servers with Aaron Parecki

There’s been a lot of buzz about Authentication/Authorization when it comes to MCP amid its rapid adoption. One of the key folks leading the thinking on that front is Aaron Parecki at Okta, who has authored several crucial blogs on the topic and is leading improvements to the MCP spec itself.

I stumbled across this session Aaron delivered at the MCP Developers Summit, and I recommend giving it a listen if you’re looking to better understand the intersection of MCP and OAuth.

AI Supply Chain Constraints

While not a specific security topic, supply chain constraints can impact things such as availability and internal business AI initiatives. This piece from VentureBeat discusses an uncomfortable open dialogue that is happening more and more.

This includes situations where leaders such as Anthropic, Cursor and OpenAI are seeing significant ARR growth, but users are being constrained by API limitations and token shortages due to limits in power supply capacity, building permits, data centers, and high-end chip manufacturing.

It dubs this “the capacity crisis no one talks about” and provides direct quotes from industry leaders about the token constraints they’re facing from leading providers, as well as varying quality among the model providers. The quality variance is partially being driven by model providers using techniques to reduce inference costs, only to suffer downstream quality impacts on model outputs.

“Each optimization degrades model performance in ways enterprises may not detect until production fails”

With my security hat on, I could see the implications for everything from AI-driven development and vulnerable code to effectiveness and accuracy related to SecOps use cases of AI.

The article draws comparisons to the early oil industry, with leaders such as Standard Oil and others, where some competitors diluted quality, suspecting customers wouldn’t notice, only for those measures to have real ramifications for society and the consumers of the products.

They cite immediate imperatives for enterprise buyers as follows:

Establish quality benchmarks before selecting providers.

Audit existing inference partners for undisclosed optimizations.

Accept that premium pricing for full model fidelity is now a permanent market feature.

Quality variance, the difference between 95% and 100% accuracy, determines whether your AI applications succeed or catastrophically fail.

AppSec, Vulnerability Management, and Software Supply Chain

AI and Secure Code Generation

This piece on Lawfare from longtime industry leaders Dave Aitel and Dan Geer is a great read about the intersection of AI and AppSec. As they say:

“AI is reshaping code security - shifting metrics, unknown bugs, and autonomous decisions humans may never understand”.

In the article, they discuss the massive impact AI is having on software development, from both finding and fixing bugs, to vulnerabilities becoming immediately and transparently visible (e.g. From N-Day to Every-Day Vulnerabilities), to the impact that Autonomy will have on the ecosystem.

The authors make the case for provenance standards for AI-generated code, similar to SBOMs but tracking models, prompts and datasets, which can be helpful in auditing the security of largely AI-written codebases. I will point out that some of this is underway by groups such as OWASP’s CycloneDX and folks such as Helen Oakley. I actually explored the topic with her in an episode titled “Exploring the AI Supply Chain”.
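As a rough illustration of what such a provenance record might capture, here is a minimal Python sketch. It is my own assumption of a possible shape, not the format proposed by the authors and not the CycloneDX schema. The `CodeProvenance` class, its field names, and the example file path and model name are all hypothetical. It stores a SHA-256 hash of the prompt rather than the prompt itself, on the assumption that prompts may contain sensitive context.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from typing import List


@dataclass
class CodeProvenance:
    """Hypothetical provenance record for one AI-generated file."""

    file_path: str       # file the generated code was written to
    model: str           # identifier of the model that produced it
    prompt_sha256: str   # hash of the prompt, not the prompt text
    datasets: List[str]  # datasets the model is known to be built on


def record_provenance(
    file_path: str, model: str, prompt: str, datasets: List[str]
) -> CodeProvenance:
    # Hash the prompt so the record can be matched against a stored prompt
    # later without embedding the prompt text in the record itself.
    digest = hashlib.sha256(prompt.encode("utf-8")).hexdigest()
    return CodeProvenance(file_path, model, digest, sorted(datasets))


def to_json(record: CodeProvenance) -> str:
    # Sorted keys keep the serialized output stable for diffing and auditing.
    return json.dumps(asdict(record), sort_keys=True)


if __name__ == "__main__":
    rec = record_provenance(
        "app/auth.py",            # hypothetical path
        "example-model-v1",       # hypothetical model name
        "write a login handler",
        ["dataset-b", "dataset-a"],
    )
    print(to_json(rec))
```

A record like this could be emitted alongside each generated file and collected at build time, much as SBOM entries are collected for dependencies.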

Can we fix all the vulnerabilities?

Much of the discussion in AppSec revolves around fixing “all the things”, scanner findings, vulnerabilities, misconfigurations, weaknesses and so on. This piece from Josh Bressers discusses the practical implications of trying to fix all of the vulnerabilities.

He starts by discussing how zero-CVE base images are all the rage right now, and rightfully so, as we should build from secure base images. That said, keeping the dependencies in those images in a constant state of zero findings is another story, and many organizations instead optimize for their assessment or audit schedule as opposed to truly maintaining zero findings perpetually.

There are also operational challenges to navigate such as breaking things due to dependency changes or only updating dependencies that have associated vulnerabilities.

The question at its core is misguided because what you’re really asking is whether we can eliminate all risk. The answer is sure, if you want to prioritize it above everything else and redirect resources that could be better spent elsewhere in the organization.

That’s why we have what’s called risk tolerance, and it looks different for each organization.

Just Because There’s No CVE Doesn’t Mean You’re Safe - And Just Because There Is One Doesn’t Mean It’s Bad

Kavita, the VP of Engineering at Edera, recently published a good piece diving into the nuances of CVEs: their mere presence doesn’t mean things are bad, and conversely, their absence doesn’t mean you’re safe. This is a point known by many with deep AppSec expertise and experience, but it is worth discussing nonetheless.

As Kavita discusses, fixing CVEs alone doesn’t mean you’re safe or secure, and conversely a lack of them doesn’t mean all risks have been eliminated. As she notes, many vulnerabilities are never assigned CVE IDs, and the same goes for software bugs, insecure default configurations and more.

Lately, many incidents have started with hardcoded credentials in container images or source code, for example. On the flip side, we know roughly 95% of CVEs each year are never exploited and pose little to no operational risk to organizations.

Like everything in cyber, and life - context is key.