Resilient Cyber Newsletter #51

Trump Cyber EO, AI Security Report, Small LLMs for Secrets Detection, Congress Calls Out NVD/CVE, CISA’s “North Star” for CVE & AI Vulnerability Scoring System (AIVSS)

Welcome!

Welcome to issue #51 of the Resilient Cyber Newsletter.

It is almost unbelievable that we’re one issue away from one year of issues, and a year since I kicked this initiative off. I’m genuinely thankful for the thousands of folks who have joined me on this journey and I look forward to speaking more about that soon!

This week there is a lot to discuss, including a new Cyber Executive Order (EO) out of The White House, utilizing LLMs for secrets detection, Congress calling out NIST’s NVD/CVE program and the introduction of an AI Vulnerability Scoring System (AIVSS).

So, let’s get into it.

Interested in sponsoring an issue of Resilient Cyber?

This includes reaching over 45,000 subscribers, ranging from Developers, Engineers, Architects, CISO’s/Security Leaders and Business Executives

Reach out below!

Cyber Leadership & Market Dynamics

Trump Admin Publishes New Cyber EO

Cybersecurity Executive Orders (EO) have played a significant role in cybersecurity discussions over the last several years, most notably due to President Biden’s 14028. However, the Trump administration recently released a Cyber EO, which revoked some aspects of prior Biden Cyber EOs.

The two primary areas it target include:

Rescinding Biden EO’s digital identity development section

Rolling back secure software attestations, including SSDF and CISA’s self-attestation repository

The EO also emphasizes using AI to tackle vulnerabilities and setting definitive post-quantum deadlines.

Horizon3.ai Raises $100M to Cement Leadership in Autonomous Security

Horizon3.ai, an offensive and autonomous security leader, has recently announced its $100M Series D funding round. In the announcement, they touted their NodeZero capability and over 3,000 customer organizations using it, leading to a sustained 100% YoY ARR growth and being Rule of 40 positive.

I interviewed the founder of Horizon3.ai, Snehal Antani, in an episode titled “Building and Scaling a Security Startup” a while back, which you can catch below:

Horizon3.ai states they’re targeting an $80B TAM in autonomous security, due to the generational shift that AI is driving where human labor can be replaced with AI and agentic capabilities.

Their plans for the use of funding include scaling through partners, product innovation and pursuing the Federal market, building on their success in the DIB, NSA and other pockets of the Federal market already.

a16z Targets Elite IDF Alumni for Startup Accelerator

You’ve inevitably seem me speak about the outsized role Israel plays in the startup and cybersecurity ecosystem at large. This is a trend many others are deeply familiar with as well, including the silicon valley venture giant Andreessen Horowitz, who its been reported is targeting Israel’s IDF alumni for startup acceleration.

a16z, which manages roughly $45 billion is looking to expand their Israe li market and have been hosting events and what they have called a 16z “speedrun program” where they try and lure Israeli talent to their organization rather than competing funds such as Sequoia and Greylock. It’s aimed at Seed and pre-Seed stage startups and provides up to $1 million per company along with mentorship and network access.

Security is not the department of “No”; it’s the department that gets told “No”

We often hear the tired quote that “security is the office of no”, this is perpetuated due to the fact that security tends to be risk adverse and shut everything down. Most practitioners know the folly of this approach and that this just leads to rampant shadow risks and a lack of engagement from peers as they do things anyways and just avoid us, or telling us about whatever it is.

In this hard hitting piece from Ross Haleliuk at Venture in Security, he points out how security isn’t the office of no, but instead is the department that gets told no, which is true, even if it bruises some egos.

As Ross points out, sure, security gets to say no to things, but it is generally on the outskirts and obscure technical decisions related to SSH access, browser extensions, MFA and so on. When it comes to actual business impacting decisions such as product launches, feature releases or partner integrations, security often has little say, and if they do, they generally get overridden by the business, because security typically doesn’t get to shut down revenue related activities.

Ross’ take in the article reminds me of my own article “Cybersecurity’s Delusion Problem: A discussion about cybersecurity’s inability to accept the world (business) doesn’t revolve around them” where I made similar points.

MSFT Copilot Flaw “EchoLeak” Signals Broader Risk of AI Agents Being Hacked

AI security startup AIM Security identified a “zero-click” attack on an AI agent. The attack can be triggered by simply sending an email to a user and a combination of clever techniques that turns the AI assistant against itself.

It preys on the fundamental way Copilot works, and involves the attacker sending an innocent-seeming email with hidden instructions for Copilot, and since Copilot scans user’s emails, it inadvertently follows an embedded prompt to dig into files and pull out sensitive data.

Aim points out similar agent-based capabilities from other vendors may have a similar vulnerability and given it is a fundamental design flaw with the technologies, is very likely to be happening in other places.

They call it an “LLM scope violation vulnerability”, where a model is tricked into accessing or exposing data beyond what it’s authorized or intended for. Challenges involve right-sizing permissions and the unpredictable nature of AI.

This is something many have raised before, being tied to content and prompts and AI struggling to distinguish between instructions and data.

AI

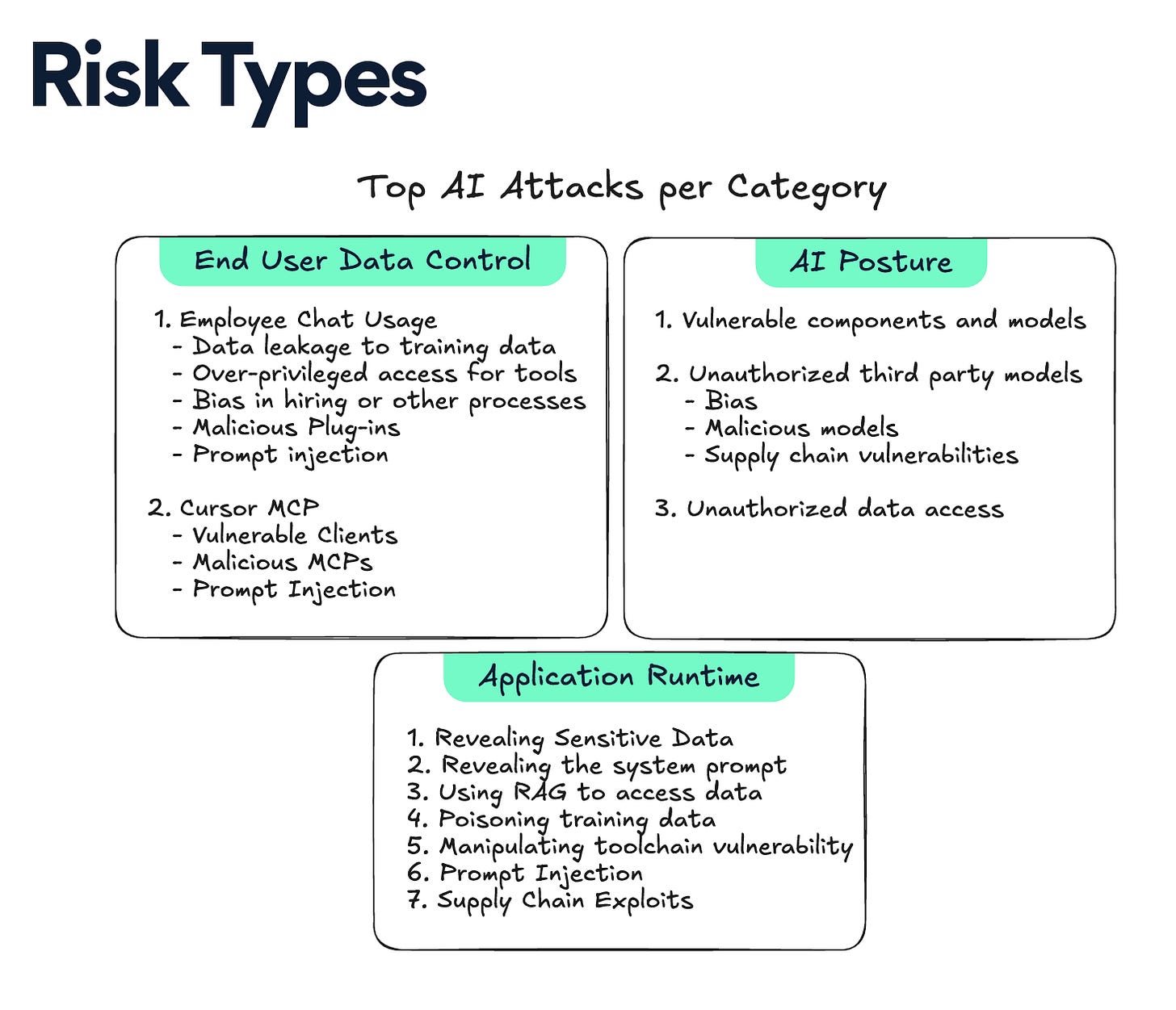

2025 Latio AI Security Report

If you’re like me, you are likely often looking for quality reports that aren’t filled with vendor fluff and hype and truly cut through noise to help you understand the various vendors, technical considerations and problems within security.

This latest AI Security Report from my friend James Berthoty of Latio Tech does just that, covering the top AI risk types:

It also provides a market map of leaders across various product categories such as End User Data Control, AI Posture, AI for Security and AI Application Protection. It discusses the key problems related to AI security and the innovators making an impact. This was a highly informative and well put together report, as someone who shares a lot of James Berthoty’s content, I can say this is some of the best stuff he has produced so far.

Model Context Protocol (MCP): The Good, the Bad, and the Ugly of AI’s interface to the real world

The Model Context Protocol (MCP) continues to dominate discussions around AI, specifically for its potential to enable agentic workflows and extend the capability of many existing tools and services.

This post on Stackaware from Daniel Kalinowski discusses MCP, including the good AND the bad. It frames MCP as a natural language for DevOps, facilitating improved security efficiency. It also discusses challenges such as a lack of fine-grained permissions and access control, opaque auditing, and supply chain risks.

Given that MCP is in its infancy, we will continue to see organizations encounter the good, the bad, and the ugly as they explore how MCP can power the modern agentic enterprise.

The last six months in LLM’s, illustrated by pelicans on bicycles

It can be incredibly tough to keep up with the rate of developments in the AI and LLM space, even for the best of us. Industry AI leader Simon Willison recently gave a keynote at the AI Engineer World Fair and covered the last six months in LLMs in a great talk.

He covers key developments, capabilities across models and much more.

Security Takeaways from 2025 AI Engineer World’s Fair

Speaking of the AI Engineer World Fair, Matt Maisel put together a great article discussing the security-specific takeaways from the event.

It shouldn’t be a surprise that one of the primary takeaways is 2025 is being positioned as the year of agents. This is something I discussed extensively in an article titled “Agentic AI’s Intersection with Cybersecurity: Looking at one of the hottest trends in 2024-2025 and its potential implications for cybersecurity”.

The key takeaways Matt highlights include:

Anatomy of an AI breach

The system-level control imperative

The promise and limits of private compute

When standard AI evaluations fail

Solving the agent identity crisis

Containing the software-building agent

A new framework for agent reasoning

As you can tell, and Matt points out, the key themes involve moving beyond the LLMs and into the broader architecture and workflows agents will be involved in.

Lean and Mean: How We Fine-Tuned a Small LLM for Secret Detection in Code

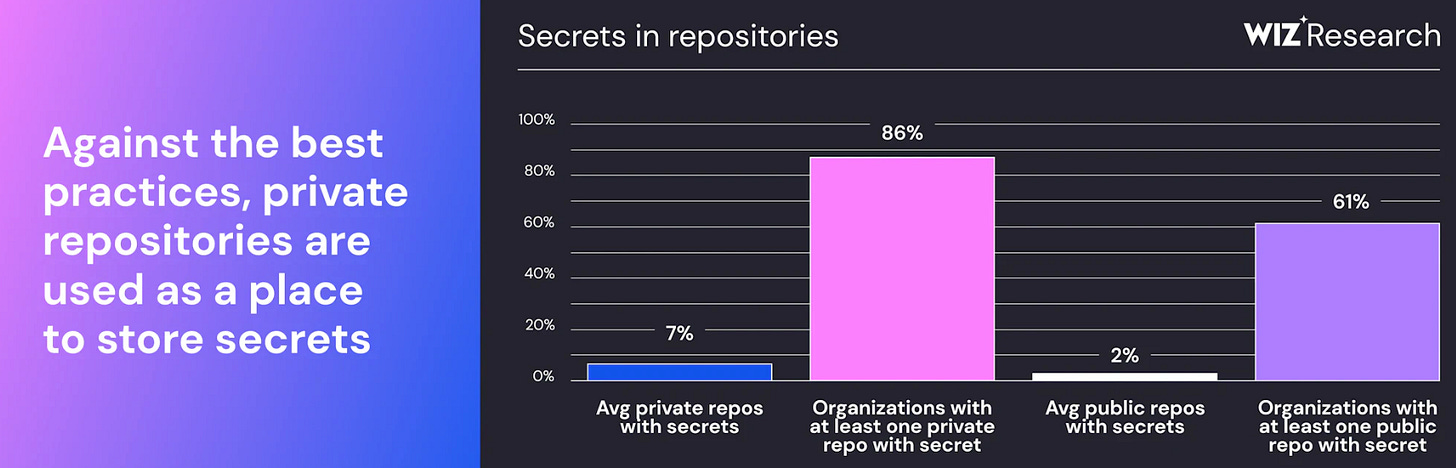

There’s a lot of focus on frontier models and the industry leading mega models, but many are experimenting with smaller models as well. This piece from Wiz looks at how they fine-tuned a small LLM (Llama 3.2 1B) to be used for detecting secrets in code, reaching an 86% precision and 82% recall, which outperformed traditional regex-based methods for secrets detection, a painful false positive problem many are familiar with when it comes to existing tools.

The Wiz research also highlights challenges such as secrets being stored in repositories (and potentially exposed).

The Wiz blog goes on to discuss how they handled training the model, testing results, moving into production and more. It is really promising to see LLMs applied to cybersecurity use cases in the real-world and the potential use cases expand well beyond secrets detection.

AppSec, Vulnerability Management and Software Supply Chain

Congress Sends Letter to NIST about NVD/CVE Performance and Effectiveness

I shared recently how the Government Accountability Office (GAO) had sent an inquiry to NIST about the efficiency and effectiveness of NVD and CVE. Now, a letter from Congress themselves has been sent to the GAO regarding NIST, NVD and CVE.

The letter lays out the initial importance of NVD/CVE’s, as well as recounting some of the programs struggles starting in 2024 with funding issues, backlogs of unenriched CVE’s and more.

It asks that GAO assess the efficiency and effectiveness of:

NIST programs that support the creation and publication of standards-based vulnerability management data, including the NVD

The CVE program, including DHS’s role in supporting CVE

The degree to which the government and non-government entities rely on the NVD and CVE

CISA’s North Star Vision for the CVE Program

Speaking of the CVE program and DHS (CISA)’s involvement in the program, as part of VulnCon 2025, CISA spoke at the event, laying out their “North Star Vision” for the CVE program. The talk stresses the importance of the CVE database, the uptick in CVE records and the challenges that brings as well as CISA’s goals for CVE such as:

Intentional Scaling and Federation

Raising the Data Enrichment Bar

Improving Quality

This is a really informative talk, not only discussing the CVE program but broader industry trends around vulnerability management.

AI Vulnerability Scoring System (AIVSS)

Incredibly excited to be among the Founding Members for the OWASP® Foundation AIVSS. It's clear that AI introduces challenges, such as prompt injection attacks, its non-deterministic nature, lifecycle vulnerabilities, and even ethical impacts.

Agentic AI further challenges this in terms of autonomy, dynamic identity, multi-agent systems, tool use, and adaptability. Traditional vulnerability systems and scoring methodologies aren't well-suited for AI, particularly Agentic AI.

Existing scoring systems can be leveraged with AI-specific metrics and environmental factors to help prioritize AI vulnerabilities, but work must be done to get there.

I'll be collaborating with a powerhouse group of folks, such as Ken Huang, CISSP, Michael Bargury, Vineeth Sai Narajala, Rob Joyce, Jason Clinton, Apostol Vassilev, and a great group of founding members to build out AIVSS for the community.

Salesforce Industry Clouds: 0-days, Insecure Defaults and Exploitable Misconfigurations

Most know the industry leader Salesforce and the fact that they have a massive customer base and presence in enterprise environments. That’s why this recent report and research from AppOmni, a SaaS Security vendor was interesting. They identified over 20 security issues in OmniStudio along with five critical CVE’s in Salesforce.

The paper covers key topics such as default sharing settings, access control, caching and data leaks and more.

Insuring Catastrophic Cyber Risk

I’ve spoken many times about how were living in a software-driven society. Software powers everything from consumer goods to critical infrastructure and touches nearly every aspect of society from in our homes, to national security and geopolitics.

This recent paper out of RAND looks at an investigation being done by the Department of Treasury about the potential need for a federal response to manage harms from a catastrophic cyber event. They discuss the implications of the nature of cyber risk for the functioning of insurance markets, review trends, and potential gaps in the insurance market and also potential policy options about a public-private risk shartng scheme such as a Federal “Cyber Risk Insurance Program (CRIP).