Resilient Cyber Newsletter #40

The “Wiz-Ardry” of Google, Next $100B Cyber Opportunity, FedRAMP Overhaul, Vibe Coding Conundrums, & Weaponizing AI Coding Assistants

Welcome!

Welcome to the 40th issue of the Resilient Cyber Newsletter.

It’s wild how quickly time flies with these issues, but I am having a ton of fun collecting and sharing the content and learning alongside the community. We have a lot of great resources to share this week.

Interested in sponsoring an issue of Resilient Cyber?

This includes reaching over 45,000 subscribers, ranging from Developers, Engineers, and Architects to CISOs/Security Leaders and Business Executives.

Reach out below!

Here's how to automate and streamline vulnerability management

Did you know that 22% of cybersecurity professionals have admitted to ignoring a critical security alert completely? When it comes to vulnerabilities, the longer a vulnerability goes unaddressed, the greater the risk.

Many organizations are turning to automation to streamline vulnerability management and improve response times. Register for this upcoming webinar on April 9 to learn:

Challenges security teams face in vulnerability management today

How LivePerson optimizes their workflows to stay ahead of evolving threats

Best practices for integrating automation with your existing tools

Cyber Leadership & Market Dynamics

The “Wiz-Ardry of Google” Panel - Cloud Security Podcast

This past week, I had an awesome chance to hang out with my friends Ashish, Mike Privette, Francis Odum, and James Berthoty and dive into the recent news of Google’s acquisition of Wiz.

We spoke about:

The deal itself and what it may mean for both current Google and Wiz customers

The implications for the broader Cloud Security market

The signal it sends to both startup founders and venture capitalists

How other vendors in both Cloud and AppSec are now looking to position themselves given this move by Wiz

W is for Wiz: Alphabet’s Audacious Acquisition

While there are a lot of angles to the Wiz acquisition in terms of Cloud Security, product, and more, there is also the financial angle. Cole of Strategy of Security breaks it down very well in this detailed piece looking at the financial side of the acquisition.

Cole points out the acquisition works out to a revenue multiple of roughly 45-65x based on current estimates, with Wiz’s ARR likely around $700 million. The chart below from the article really puts in perspective just how massive the Wiz acquisition was, both in terms of deal size and revenue multiple within the Cyber industry.

If you’re interested in the financial details and comparisons of historical M&A within Cyber, this article is a great read.
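As a rough sanity check on the multiples quoted above, here is a minimal sketch of the arithmetic. It assumes the publicly reported purchase price of $32 billion, which is not stated in this issue, and the function names are purely illustrative.

```python
# Assumption: $32B is the publicly reported deal price (not stated in this issue).
DEAL_PRICE_USD = 32_000_000_000
# ARR estimate quoted above.
ARR_ESTIMATE_USD = 700_000_000


def revenue_multiple(price: float, arr: float) -> float:
    """Deal price divided by annual recurring revenue."""
    return price / arr


def implied_arr(price: float, multiple: float) -> float:
    """ARR that a given revenue multiple would imply for this price."""
    return price / multiple


multiple_at_700m = revenue_multiple(DEAL_PRICE_USD, ARR_ESTIMATE_USD)
arr_at_65x = implied_arr(DEAL_PRICE_USD, 65)

print(f"Multiple at $700M ARR: {multiple_at_700m:.1f}x")
print(f"ARR implied by a 65x multiple: ${arr_at_65x / 1e6:.0f}M")
```

Under these assumptions, the low end of the range lines up with the $700 million ARR estimate, while the high end would imply a noticeably lower ARR figure.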

The Next $100B Cybersecurity Opportunity💰

In the wake of Wiz's record-breaking acquisition by Google, many are now asking what the industry's future holds.

Menlo Ventures attempts to answer that question in this article, which looks at:

🔷 Major platform shifts, from Internet, Mobile, Cloud, and now AI

🔷 Breaking $10B: The Security Markets Poised to Explode with AI

🔷 $100B and Beyond: How AI Will Reshape Security's Biggest Markets

🔷 The AI-Driven Future of Cyber

The article covers key use cases and areas in Cyber that explore AI, including AI Guardrails, DLP, Pen Testing, Agentic Identity, AI-driven code security, and more.

This article also covers where Cyber has been, where it is headed, and the role that AI will play along the way.

Cyberattack Readiness Shifts to State and Local Governments

Changes are underway in the Federal IT and Cyber landscape, most notably through a recent White House Executive Order (EO) titled “Achieving Efficiency Through State and Local Preparedness”. This preparedness includes cyberattacks, and it has led some to raise concerns about the readiness and capabilities of state and local governments to handle such responses and incidents.

The EO lays out five actions intended to facilitate state and local government critical infrastructure resilience and readiness:

Create a national resilience strategy

Create a national critical infrastructure policy

Create a national continuity policy

Develop new preparedness and response policies

Create a national risk register

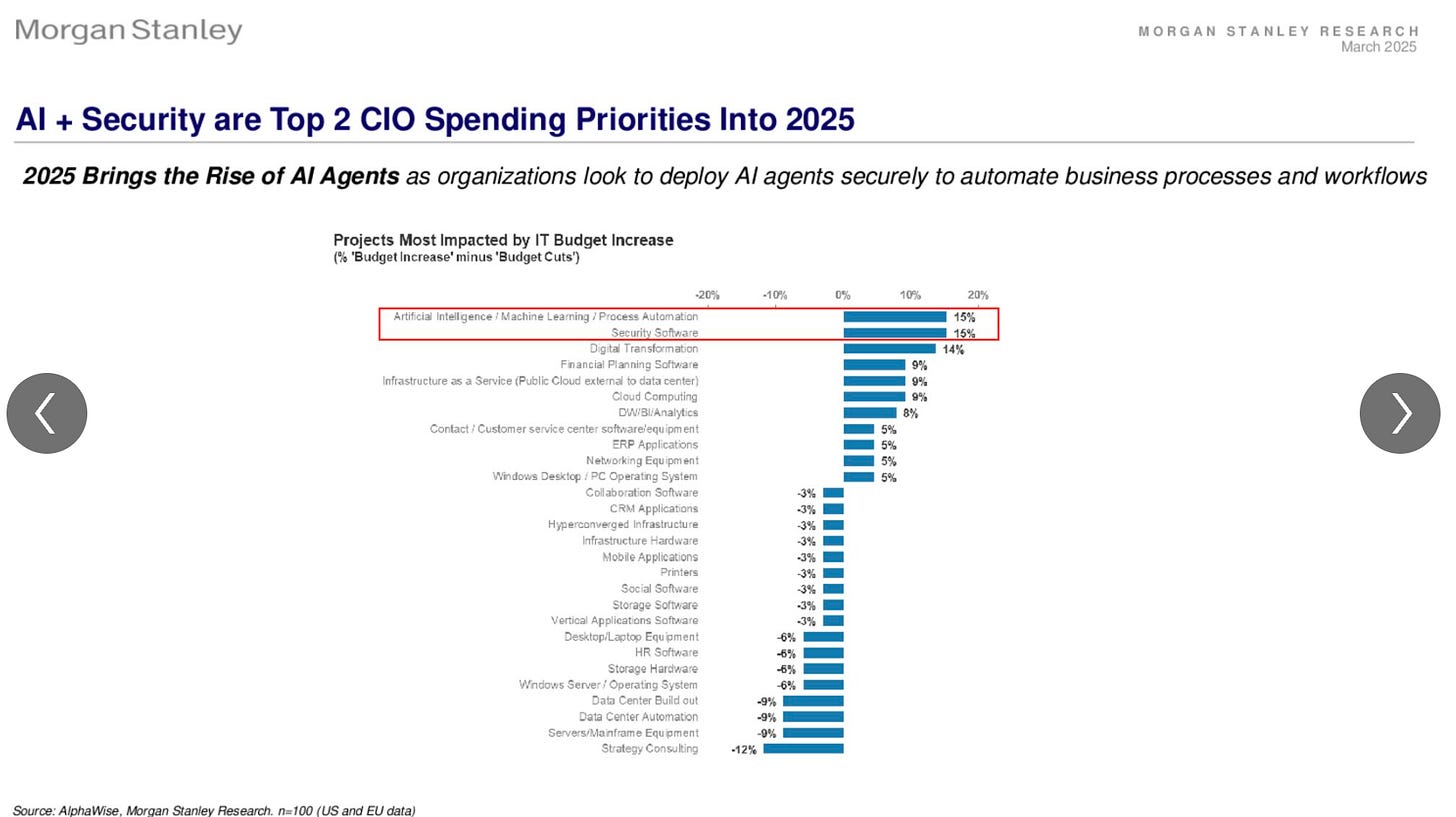

AI + Security are Top 2 CIO Spending Priorities into 2025

Ed Sim of Boldstart Ventures recently shared that AI and Cyber are the top 2 CIO spending priorities for 2025, based on a recent Morgan Stanley Research publication. Digital transformation was a close third, but I’d argue this is a catch-all phrase that includes both AI and Cyber and many other associated activities.

As per the story below about the cyber job market, I would recommend focusing on AI Governance and Security if you want to position yourself as a sought-after resource in the cybersecurity workforce.

There are also some market indicators that security is still a best-of-breed market, but with a growing appetite for consolidation among CIOs.

The same Morgan Stanley report cites securing machine identities (e.g., Non-Human Identities, or NHIs) as the next frontier for AI and Agents.

I currently serve as an advisor to Astrix Security and have written extensively about NHIs.

CISOs are taking on ever more responsibilities and functional roles - has it gone too far?

From compliance, AppSec, VulnMgt, Third Party Risk, Privacy, and Disaster Recovery/Continuity to AI (and the list goes on), CISOs find themselves taking on an ever-growing list of responsibilities under their role.

But when does it become too much, leaving CISOs stretched thin and leading to insufficient oversight, burnout, and unmanaged risks? That is a question every CISO and organization must be asking themselves.

This piece discusses how the CISO role continues to evolve, taking on more and more responsibilities with no end in sight.

Cybersecurity Job Market Faces Disruptions - 2025 U.S. Cyber Job Posting Data Report 🔎

This insightful report from CyberSN shows the state of the U.S. cyber job market.

📈 Governance, Risk, and Compliance (GRC) roles are rising due to factors such as regulatory pressures (e.g., SEC, FTC, EU's slew of requirements, etc.)

📉 Security Engineering and Cloud Security are actually seeing a dip, which is being attributed to factors such as AI-driven security automation, managed security services, and SecEng and CloudSec roles being absorbed into broader IT

🫰 Heavy emphasis on outsourcing and automation, which is impacting hiring, while organizations cut costs and look to maintain operational resilience

This jumped out at me, and it should jump out at you as well:

"the key to staying relevant is up-skilling in governance, compliance, and automation-driven SecOps."

We have an immense amount of learning materials and outlets. But no one can do the work for you.

FedRAMP Announces Major Overhaul

The U.S. Federal Government’s cloud authorization program, FedRAMP, is announcing a major overhaul focused on clearing a backlog of cloud service offerings pending authorization and on increasing assessment and authorization velocity.

It will shift from a point-in-time manual assessment model to one based on automation and streamlined assessments and authorizations.

This aims to help clear out the backlog of cloud service offerings awaiting authorization and also open up what has been a 10+ year bottleneck of access to innovative SaaS offerings for the U.S. Government.

In my opinion, this is LONG overdue and is part of a broader push for GRC engineering to bring GRC along on the path of DevSecOps, automation, and real-time continuous monitoring.

I have written about FedRAMP quite a bit.

In a past life, I served as a Technical Representative (TR) on the FedRAMP Joint Authorization Board (JAB) while a Federal employee at GSA. I have strong opinions about how FedRAMP and Federal compliance work in general, and I am curious to see how FedRAMP will achieve the desired goals while also cutting the FedRAMP PMO’s contract support staff significantly, especially if modern technologies and automation aren’t introduced.

I hope it isn’t a matter of rubber stamping assessments and authorizations and calling it “progress”.

FedRAMP’s Director held an event with industry yesterday and shared a lot of details about the projected overhaul of the program, which are summarized in this recent NextGov article. FedRAMP also stood up a “FedRAMP 20X” page with details of the path forward, projected industry working groups around automation, reciprocity with existing commercial frameworks, and more. You can find details on the FedRAMP 20X page and other sub-pages.

AI

Vibe Coding Conundrums

So-called “Vibe Coding” has taken the industry by storm the past several weeks. It is loosely defined as giving in to LLMs and forgetting the code exists. This generally means not validating anything, not reviewing outputs, and shipping things without review.

It is a hot topic among investors, startups, and others. It has been reported that 25% of Y Combinator’s latest cohort have codebases almost entirely written by LLMs.

Of course, this has led to a massive acceleration in productivity and velocity, but many in security are concerned about potential ramifications in years to come.

In my latest article, I look at Vibe Coding and its potential pros and cons.

BaxBench: Can LLMs Generate Secure and Correct Backends?

Speaking of vibe coding and AI-driven development, a team of researchers recently developed a novel benchmark to look at whether or not LLMs can generate secure and correct code.

What is cool is that they have published a comprehensive research paper, made their code and datasets publicly available, and even maintain a running leaderboard showing how each of the LLMs, including flagship models, performs.

The key findings are summarized below:

These findings don’t inherently mean AI-driven development is bad, but they do emphasize the need for longstanding security best practices such as tooling, automation, assessment, and verification.

The problem, as expressed in vibe coding and in other reports I have shared, is that Developers are inherently trusting the output of LLMs and Copilots without any sort of validation or review. This is what will help risks proliferate and rapidly expand the digital attack surface as Developers and organizations become more “productive”.

We know speed to market and revenue are prioritized over security, and that certainly won’t change now. This will exacerbate existing AppSec challenges.

AI Cyber Magazine

I recently came across this awesome resource that has a lot of great insights from industry leaders on AI Security, such as Steve Wilson, Anshuman Bhartiya, Betta Lyon Delsordo, Chenxi Wang, Ph.D., Dylan Williams, and many others!

Some of the timely topics and discussions include:

✅ Will AI Agents Upend GRC Workflows

🤖 Mitigating Non-Human Identity Threats in the Age of GenAI by Lalit Choda

🪲 The most dangerous AI vulnerability by Steve Wilson

🛠️ Secure-by-Design in Agentic AI Systems by Chenxi Wang

🔥 The dual nature of AI for cyber defense, as well as AI as a weapon

💪 AI as a Force Multiplier: Practical Tips for Security Teams by Dylan Williams

And many other excellent topics and discussions around the intersection of AI and Cyber.

Adversarial Machine Learning - A Taxonomy and Terminology of Attacks and Mitigations

NIST’s Trustworthy and Responsible AI group recently published a comprehensive taxonomy and terminology of attacks and mitigations for ML systems.

It covers:

Predictive AI Taxonomy

GenAI Taxonomy

Key Challenges and Discussion

This is a great document that formally defines key adversarial methodologies and attack types against ML systems, including poisoning, privacy, supply chain, prompting, and more. It also has a brief section dedicated to the security of agents.

AppSec, Vulnerability Management, and Supply Chain Security

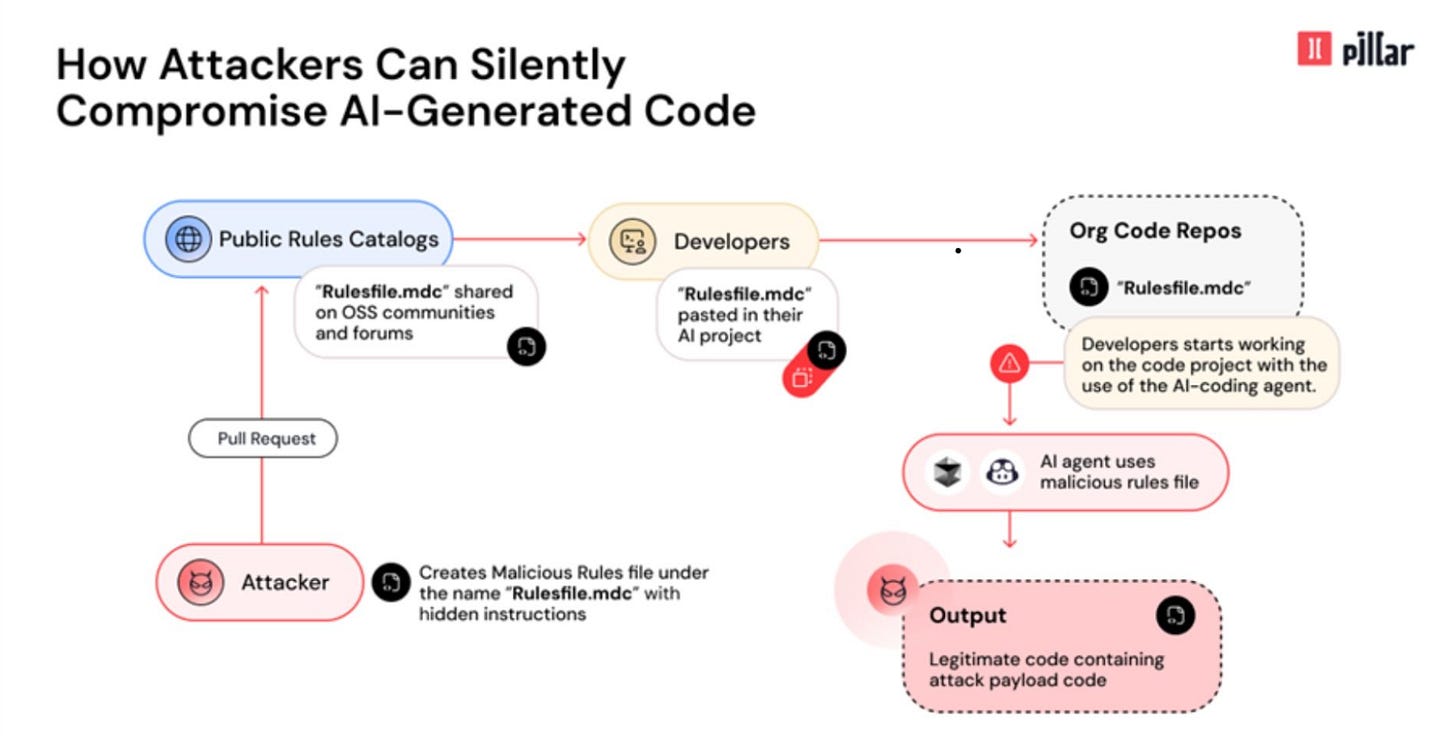

Weaponizing AI Coding Assistants: A New Era of Supply Chain Attacks

AI coding assistants like GitHub Copilot and Cursor have become critical infrastructure in software development—widely adopted and deeply trusted.

With the rise of “vibe coding,” not only is much of modern software written by Copilots and AI, but Developers inherently trust the outputs without validating them.

But what happens when that trust is exploited?

Pillar Security has uncovered a Rules File Backdoor attack, demonstrating how attackers can manipulate AI-generated code through poisoned rule files—malicious configuration files that guide AI behavior.

This isn't just another injection attack; it's a paradigm shift in how AI itself becomes an attack vector.

Key takeaways:

🔹 Invisible Infiltration – Malicious rule files blend seamlessly into AI-generated code, evading manual review and security scans.

🔹 Automation Bias – Developers inherently trust AI suggestions without verifying them, increasing the risk of undetected vulnerabilities.

🔹 Long-Term Persistence – Once embedded, these poisoned rules can survive project forking and propagate supply chain attacks downstream.

🔹 Data Exfiltration – AI can be manipulated to "helpfully" insert backdoors that leak environment variables, credentials, and sensitive user data.

This research highlights the growing risks in Vibe Coding—where AI-generated code dominates development yet often lacks thorough validation or controls.

As AI continues shaping the future of software engineering, we must rethink our security models to account for AI as both an asset and a potential liability.
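To make the “Invisible Infiltration” point a bit more concrete, here is a minimal sketch of how a team might scan rule files for characters a human reviewer cannot see. It assumes the hidden instructions rely on invisible Unicode characters (zero-width and bidirectional control characters), which the summary above does not spell out, and the function name and sample text are hypothetical.

```python
import unicodedata


def find_hidden_characters(text: str) -> list[tuple[int, int, str, str]]:
    """Return (line, column, codepoint, name) for invisible format characters.

    Assumption: any Unicode "format" character (category Cf), such as
    zero-width spaces or bidirectional overrides, is worth flagging in a
    rules file, since it renders as nothing in most editors and diffs.
    """
    findings = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for col, ch in enumerate(line, start=1):
            if unicodedata.category(ch) == "Cf":
                name = unicodedata.name(ch, "UNKNOWN")
                findings.append((line_no, col, f"U+{ord(ch):04X}", name))
    return findings


# Hypothetical rule text with two invisible characters after the first sentence.
sample_rule = "Always follow HTML best practices.\u200b\u202e hidden instruction"
findings = find_hidden_characters(sample_rule)

for line_no, col, codepoint, name in findings:
    print(f"line {line_no}, col {col}: {codepoint} {name}")
```

A check like this could run in CI or a pre-commit hook over AI assistant configuration files. It is a heuristic, not a complete defense, since a poisoned rule file can also contain perfectly visible malicious instructions.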

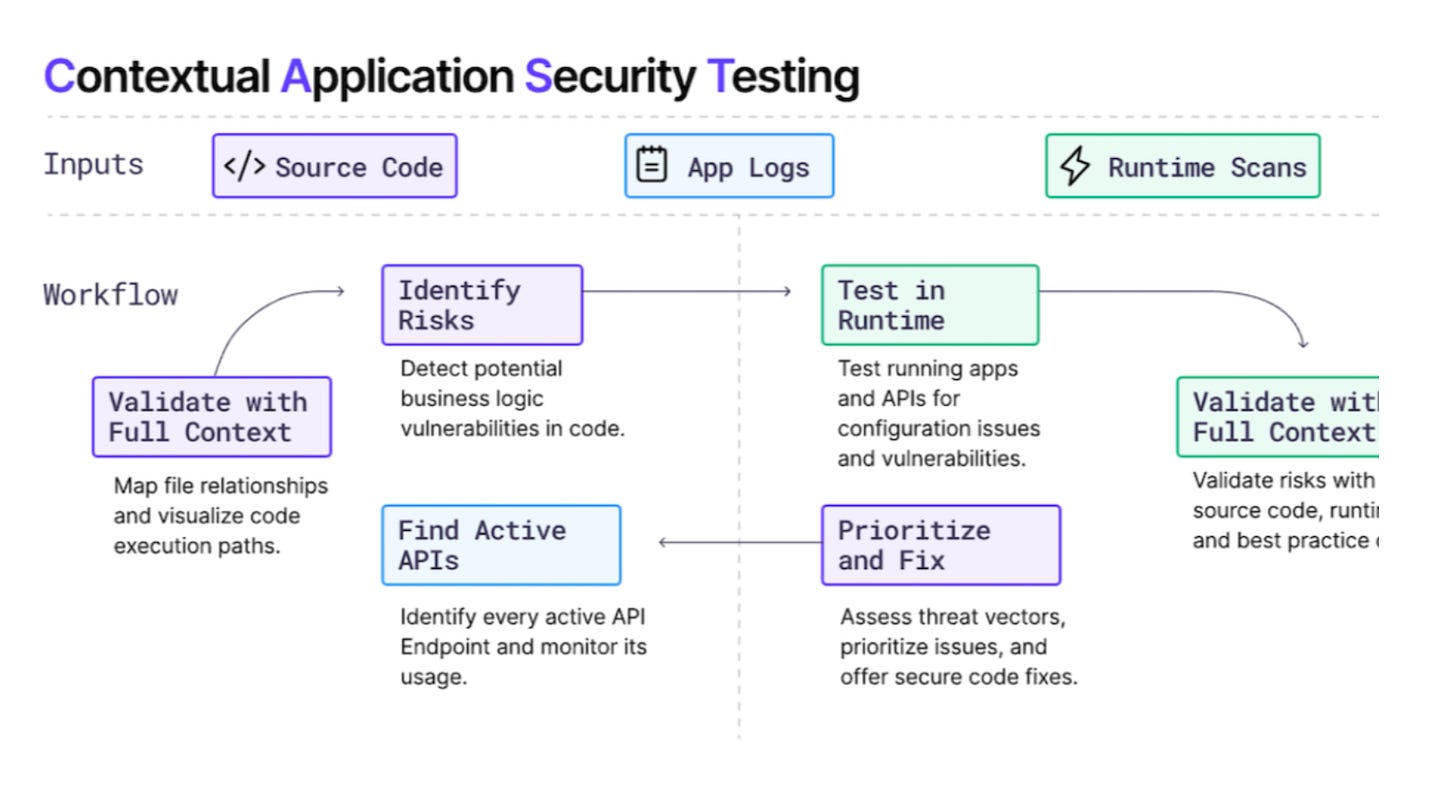

A New Category? Contextual Application Security Testing (CAST)

I know, I know, we’re all sick of acronyms and security product categories, but this is the nature of our industry. This article from Ghost Security CEO and Co-Founder Greg Martin posits that we need a new AppSec category: CAST.

Greg discusses concepts I have covered in my books and many articles, such as the fact that Developers outnumber AppSec teams 100:1 or even 1000:1. Due to this disparity, many have leaned into automated security scanning tools, but these have inevitably produced massive backlogs of “findings” that lack any deep context around known exploitation, exploitability, reachability, and more.

Organizations continue to drown in these massive vulnerability backlogs, and the security technical debt is running away from organizations, as competing incentives such as speed to market and revenue are placed ahead of security.

Ghost is advocating for converging SAST and DAST to get both code-level (shift left) and runtime (shift right) visibility, pairing code-level insights with production runtime context. This involves the creation of knowledge graphs to inform risk-based decision making. To test it out, they ran leading LLMs against open source web apps and repos and discovered many unknown risks and vulnerabilities.

I would argue this has promise not just for organizations but also for the broader open source ecosystem: if maintainers can act on these findings, downstream consumers of the code will benefit.

NVD Collapse (Again)?

Vulnerability Researcher Andrey Lukashenkov took to LinkedIn to raise some concern about the NIST National Vulnerability Database (NVD) experiencing another degradation in service. This comes on the heels of significant fallout in 2024, when the NVD outright collapsed, quit enriching Common Vulnerabilities and Exposures (CVEs), and left the industry scrambling.

Andrey points out that no CVEs have been analyzed since March 15th, and new ones are sitting with a status of Received, Awaiting Analysis, or Undergoing Analysis. Given that hundreds a day require analysis, it is easy for a backlog to grow quickly. The NVD still has a significant backlog of CVEs from 2024 to this day. It accumulated when the NVD experienced a disruption and did not enrich CVEs for several months. The NVD then hired a new contractor to help burn down the backlog, but that effort was never completed, and the backlog lives on.

I previously wrote an in-depth piece discussing the NVD’s challenges, titled “Death Knell of the NVD?”
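For readers who want to track this kind of backlog themselves, here is a minimal sketch of the tally. It assumes you already have CVE records as dictionaries with a `vulnStatus` field (modeled on the status field NVD exposes). The records and placeholder IDs below are hypothetical and are not real NVD data.

```python
from collections import Counter

# The three statuses mentioned above that indicate a CVE has not been analyzed.
BACKLOG_STATUSES = {"Received", "Awaiting Analysis", "Undergoing Analysis"}


def summarize_backlog(records: list[dict]) -> tuple[Counter, int]:
    """Count records per status and total those still waiting on analysis."""
    counts = Counter(record["vulnStatus"] for record in records)
    backlog = sum(counts[status] for status in BACKLOG_STATUSES)
    return counts, backlog


# Hypothetical sample records; IDs are placeholders, not real CVEs.
sample_records = [
    {"id": "CVE-0000-0001", "vulnStatus": "Analyzed"},
    {"id": "CVE-0000-0002", "vulnStatus": "Received"},
    {"id": "CVE-0000-0003", "vulnStatus": "Awaiting Analysis"},
    {"id": "CVE-0000-0004", "vulnStatus": "Undergoing Analysis"},
]

counts, backlog = summarize_backlog(sample_records)
print(f"Backlog: {backlog} of {len(sample_records)} records")
```

Run daily against freshly published records, a tally like this would show whether the unanalyzed share is growing or shrinking over time.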

Everything to know about the tj-actions attacks

Last week the industry was shaken by news of a novel supply chain attack targeting “tj-actions” and taking advantage of some of the native functionality of platforms like GitHub. James Berthoty recently recorded a discussion breaking down everything we know, what was impacted, how the attack unfolded, and more, which is really informative for those interested in the nuance here.
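One commonly recommended mitigation for this class of attack, which is not covered in the summary above, is pinning third-party GitHub Actions to a full commit SHA rather than a mutable tag. Here is a minimal sketch of a check for unpinned references in a workflow file. The function name, the regular expressions, and the sample workflow (including the placeholder SHA and the tag shown) are illustrative assumptions, not details from the discussion.

```python
import re

# Matches "uses: owner/repo@ref" lines, with or without a leading list dash.
USES_PATTERN = re.compile(r"^\s*(?:-\s*)?uses:\s*([^\s#]+)", re.MULTILINE)
# A full-length commit SHA is 40 lowercase hex characters.
FULL_SHA = re.compile(r"^[0-9a-f]{40}$")


def find_unpinned_actions(workflow_text: str) -> list[str]:
    """Return action references that are not pinned to a full commit SHA."""
    unpinned = []
    for match in USES_PATTERN.finditer(workflow_text):
        ref = match.group(1).strip("'\"")
        # Local actions and Docker images are out of scope for this check.
        if ref.startswith("./") or ref.startswith("docker://"):
            continue
        _, _, version = ref.partition("@")
        if not FULL_SHA.match(version):
            unpinned.append(ref)
    return unpinned


# Hypothetical workflow snippet; the SHA below is a placeholder, not a real commit.
sample_workflow = """
steps:
  - uses: actions/checkout@0123456789abcdef0123456789abcdef01234567
  - uses: tj-actions/changed-files@v45
  - name: Local build
    uses: ./.github/actions/build
"""

unpinned = find_unpinned_actions(sample_workflow)
print(unpinned)
```

A text-based check like this is deliberately simple. A more robust version would parse the YAML properly and would also consider reusable workflows.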