Resilient Cyber Newsletter #27

CISO Paradox, Cyber’s IPO Pipeline and Market Index, AI Agents Under Threat, Beyond the Tiered SOC, & the Future of AppSec, Integrating LLMs and AI Agents

Welcome!

Welcome to another issue of the Resilient Cyber Newsletter.

Christmas is upon us, and I don’t know about you all, but I’m looking forward to a bit of downtime and connecting with family and friends. That said, there is still a lot of interesting activity happening across the ecosystem heading into the holidays.

Let’s take a look at some of it below!

Interested in sponsoring an issue of Resilient Cyber?

This includes reaching over 7,000 subscribers, ranging from Developers, Engineers, Architects, CISOs/Security Leaders and Business Executives.

Reach out below!

As the front door of an enterprise and the gateway upon which employees rely to do their jobs, the inbox represents an ideal access point for attackers. And it seems that, unfortunately, cybercriminals aren’t lacking when it comes to identifying new ways to sneak in.

Abnormal Security’s white paper, Inbox Under Siege: 5 Email Attacks You Need to Know for 2025, explores some of the sophisticated threats we anticipate escalating in the coming year and examines real-world examples of attacks Abnormal customers received in 2024.

Cyber Leadership & Market Dynamics

Open Letter to CEOs from CISOs

In an opinion piece recently published on CSO Online, Tyler Farrar echoes the sentiment of many current and former CISOs: they are being held accountable, and even liable, for security incidents despite lacking the organizational authority to actually make a difference.

The piece builds on an article titled “The CISO Paradox” from Tyler Farrar, where he discusses how CISOs have great responsibility but little actual power. This is also why we often hear CISOs called “Chief in Name Only”: their CxO title doesn’t carry the same power to effect change as the titles of their C-Suite peers.

I have experienced this myself in previous CISO and security leadership roles I’ve held, both in organizations and on large programs. This is because CISOs are there to help the business make risk-informed decisions, but the business owns the risk.

CISOs, of course, may have autonomy and control over things such as the security tech stack, tooling, building the security team, and more (assuming they can get sufficient budget to do so, which is another battle), but they don’t make the actual decisions around what the business does or doesn’t do in terms of technology and projects.

As Tyler mentions, CISOs often get stuck “selling” the importance of security to their superiors, who are focused on many other priorities, with security being just one of them. As I wrote in “Cybersecurity’s Delusion Problem”, the issue is that security is the most important risk to those of us in security, but to the business it is one of many. It is often not at the top, beaten out by competing risks and concerns such as revenue, return on investment, growth, financial runway, market share, customer attraction/retention, and much more.

Another uncomfortable truth for security practitioners is that the risks mentioned above often have a more tangible impact on the business than security does. We see incident after incident have little to no long-term impact on share price or market share for large enterprise organizations. Even CISA pointed this out in a recent publication, stating that as an industry we lack sufficient empirical data to use financial consequences as a driver for business investment in security.

The overall complaint from CISOs and security leaders is that they are being held liable despite lacking the empowerment, authority, resources, and support to mitigate organizational risks. This is understandably an incredibly frustrating situation to be in. While CISOs deserve a seat at the table, there is also a lot of room for improvement on the security side of the debate in how we engage with, communicate with, and support the business. There’s no shortage of examples of security practitioners engaging in security theater, relying on legacy approaches to GRC, functioning as the office of “no”, and failing to understand how the business delivers value.

There’s a lot of blame to go around, including for us.

Human-Centered Security

"Weakest Link" ⛓️💥

How often in your cyber career have you heard (or even said) this about humans?

We often hear the trope that "humans are the weakest link" when discussing cybersecurity. Yet, how often do we take a step back and think about User Experience and Human-Centered Design in Cybersecurity?

The answer, of course, is not often.

We hyper-focus on technical controls, tech stacks, "best"-practices, and more. All the while, we rarely, if ever, think about how humans/users interact with our systems and how our design and control implementation decisions may impact their behavior, or vice versa. This often ends up creating more risk, as users work around security controls with abysmal user experiences. The result is shadow usage, silos, resentment, and more from the very users within organizations we're supposed to protect.

This concept is very applicable to security products as well, which is why you see industry leaders getting to where they are by emphasizing the user experience. That's why I was excited to chat with Heidi Trost this week and to dive into her new book "Human-Centered Security: How to Design Systems That Are Both Safe and Usable".

Heidi brings a wealth of expertise at the intersection of cybersecurity and human-centered design, something our industry sorely lacks among practitioners and in implementation.

There are four ways to compete as a security platform

One of the most notable trends in the cybersecurity product/vendor space throughout 2024 has been a renewed debate around platform vs. point products/best-of-breed.

Folks have taken up each side of the argument and everything in between. Big industry leaders have leaned into platforms, advocating for cost savings, more cohesive solutions, minimizing security tool sprawl, and so on, while others have stated that you need the best tool for the job and no platform does it all, as well as the harsh truth that some “platforms” are just a bunch of tools slammed together poorly via M&A and bolt-on solutions with poor CX and capability.

This piece from Ross Haleliuk of Venture in Security dives into what he calls the four ways to compete as a security platform. It builds on a previous article of his where he argued that point product vs. platform is the wrong way of looking at things and instead proposed four buckets:

Best-in-class point products

Best-in-class platforms

Good enough point products

Good enough platforms

Building on that previous piece, Ross lays out four ways he thinks security platforms compete, which are:

Security platforms that consolidate existing point solutions

Security platforms that compete based on a specific use case

Security platforms that serve a specific type of buyer

Security platforms that compete with other platforms head-to-head

Ross lays out examples of various vendors and platforms doing exactly this. This is an insightful read for those following the point product vs. platform debate, and good for both practitioners and product companies alike to think about as we see the cybersecurity product market continue to evolve.

Cyber’s IPO Pipeline: 2026 and Beyond

The stagnation of IPOs in cyber (and tech more broadly) has been a frequently discussed topic during 2024. With the upcoming change in administration, some suspect we may see a shift, but time will tell.

Cole Grolmus of Strategy of Security took a look at the Cyber landscape and some of the leading companies and when they may make their IPO appearance.

This builds on a previous piece of Cole’s where he looked at 2025 candidates for IPO among the Cyber landscape.

Cole makes the case that several of our best companies in cyber are likely a couple of years out from potential IPOs. He also argues that even some of the 2025 candidates from his earlier piece may be pushed back to 2026 or beyond.

He cites 16 companies that are IPO candidates in 2025 and potentially 38 or more for 2026 and beyond. Cole also draws some unique insights when it comes to considerations for IPO’s among companies that are private equity (PE) backed vs. those that are venture capital (VC) backed.

To make his case, Cole draws upon public commentary from leadership among the candidate companies along with other financial and market metrics.

As always, Cole provides excellent, thought-provoking analysis. If you haven’t already subscribed to his site Strategy of Security and you’re interested in market analysis of cyber, I strongly recommend it!

Cybersecurity Titans Index

Speaking of large cyber industry leaders, Canalys recently published their “Cybersecurity Titans Index” which showed some interesting insights.

Double-digit market growth, with software and hardware sales expected to reach $97 billion next year

Partners continue to play a critical role, contributing $2 for every $1 in vendor sales

The overall cyber industry is projected to grow to just shy of $300 billion in 2025
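A quick back-of-the-envelope check shows how these three figures might fit together. This is my own rough reconstruction, not Canalys's stated methodology. It assumes that the $97 billion and the roughly $300 billion figures refer to the same year (2025), and that the $2-per-$1 partner ratio sits on top of vendor sales:

```latex
\begin{aligned}
\text{vendor sales} &\approx \$97\,\text{B} \\
\text{partner contribution} &\approx 2 \times \$97\,\text{B} = \$194\,\text{B} \\
\text{combined} &\approx \$97\,\text{B} + \$194\,\text{B} = \$291\,\text{B}
\end{aligned}
```

Under those assumptions the total lands just shy of $300 billion, although Canalys may well compute its headline number differently.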

We often hear about the importance of partners and channels, and Jay McBain of Canalys mentions that “91.5% of this market flows through, to, or with partners and we expect that to continue”.

They also projected more market consolidation, a trend we saw in 2024. They expect it to be driven by M&A as well as companies going private (a trend Cole Grolmus pointed out in a piece titled “Cybersecurity is Going Private”).

Power Law at Play

Within Venture Capital, there is a well-known principle called the “power law”. This essentially means that a small number of investments produce the majority of returns, while the rest break even or fail.

This can also be thought of, at least loosely, as the Pareto principle or the 80/20 rule. The same pattern shows up in the total capital raised by U.S. VC firms this year: 74% of the capital raised went to 30 firms, and 9 of those firms raised 50% of all capital committed.

This can be seen below in data recently shared by PitchBook.

Andreessen Horowitz alone brought in over 11% of all capital raised.
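The power law and the 80/20 rule can be made concrete with a textbook model. Suppose capital (or returns) followed a Pareto distribution with shape parameter α > 1. In that case, the top fraction p of firms would capture p^(1 − 1/α) of the total. The sketch below is purely illustrative and is not fit to the PitchBook figures.

```python
import math


def top_share(p: float, alpha: float) -> float:
    """Share of the total captured by the top fraction p of a Pareto(alpha) population.

    For a Pareto distribution with shape alpha > 1, the Lorenz curve gives
    the top p (0 < p <= 1) of holders a share of p ** (1 - 1 / alpha).
    """
    if not 0 < p <= 1:
        raise ValueError("p must be in (0, 1]")
    if alpha <= 1:
        raise ValueError("alpha must be > 1 for the total to be finite")
    return p ** (1 - 1 / alpha)


# Shape parameter that reproduces the classic 80/20 split (about 1.16).
ALPHA_80_20 = math.log(5) / math.log(4)

if __name__ == "__main__":
    for p in (0.01, 0.05, 0.20, 0.50):
        share = top_share(p, ALPHA_80_20)
        print(f"top {p:.0%} of holders -> {share:.1%} of the total")
```

With α ≈ 1.16, the top 20% capture exactly 80%. A smaller α (a heavier tail) pushes even more of the total toward the top few.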

The PitchBook article discusses the evolution of VC, as we see firms not just providing capital but also aiding startups in areas such as in-house marketing, recruiting, and more - all of which are oriented around increasing the startup’s success, but most importantly, returning value to the fund.

They highlight the struggles of emerging firms, showing that outside of the top 30 firms, emerging firms closed only 14% of all US VC commitments in 2024.

There’s also discussion of the IPO window being shut and its impact on GPs, which has driven activity in secondary fund stakes. This was also discussed in a recent CTECH article I read titled “With IPO's paused, Israel Secondary Fund scales third fund to $350 million”, which shows that IPO window stagnation is impacting funds outside the U.S. as well.

It explains that secondary transactions are made by “purchasing holdings from existing shareholders in startups, including investors, entrepreneurs, managers and employees, and purchasing holdings from limited investors (LP’s) in VC funds”. This secondary transaction activity opens up liquidity options for earlier investors, as tech has seen a drawdown in the number of companies pursuing and achieving IPOs.

AI

AI Agents Under Threat

By now, we all know the hype of 2024-2025 is Agentic AI, or AI agents. Their promising potential applies to use cases spanning countless industries and verticals, and we’re seeing a lot of focus on Agentic AI from VCs, startups, and industry leaders.

That said, like any other technology, AI agents are potentially vulnerable and add to the attack surface. However, much of the discussion around AI agents so far hasn’t focused on this. That's why I was excited to see the new research publication “AI Agents Under Threat: A Survey of Key Security Challenges and Future Pathways”.

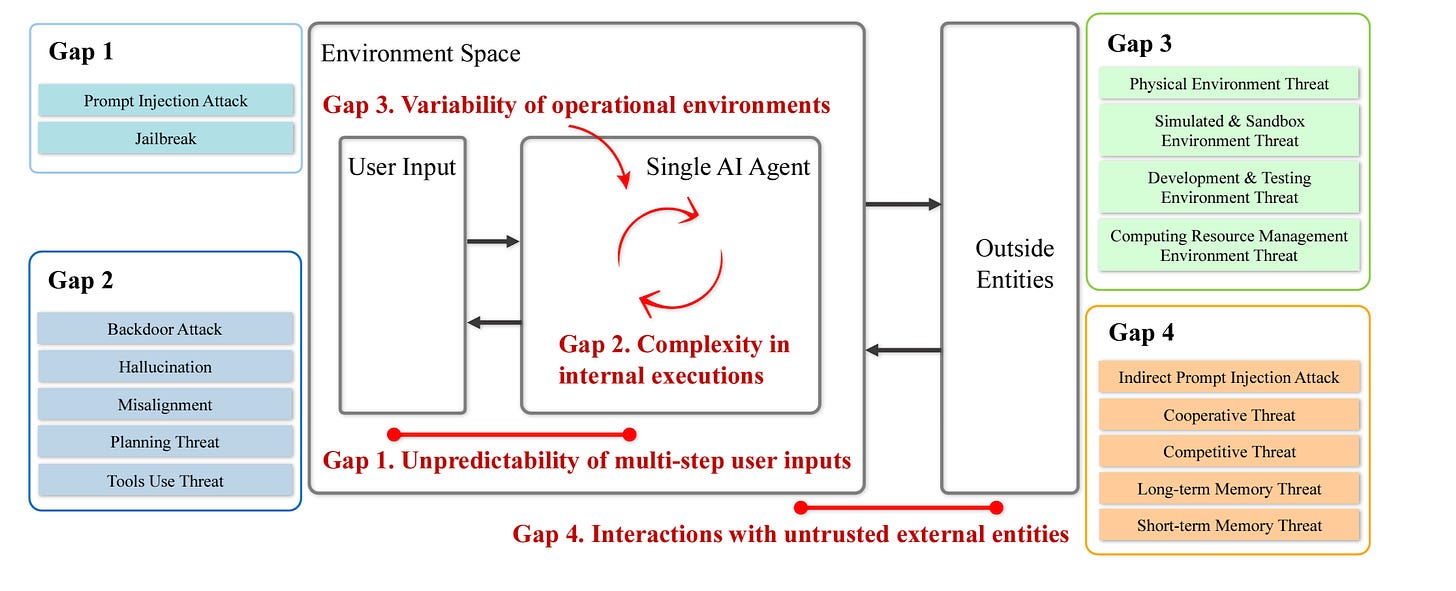

The paper covers gaps in the current landscape around AI agents and their security as well as key risks that they call out which include:

Unpredictability of multi-step user inputs

Complexity in internal executions

Variability of operational environments

Interactions with untrusted external entities

They also discuss the core components of AI agents (Perception, the “Brain”, and Action), each of which plays a critical role. They then cover the agents' external interactions with environments, other agents, and memory, as seen below.
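To make the Perception / "Brain" / Action split a bit more concrete, here is a deliberately tiny Python sketch. It is not from the paper. A rule-based planner stands in for the LLM "brain", and `ToyAgent`, `search_docs`, and `send_email` are hypothetical names. The comments mark where the survey's risk areas (untrusted multi-step input, untrusted external entities, memory) would show up in a real agent.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable


# Hypothetical tools. In a real agent these would reach external systems,
# which is where "interactions with untrusted external entities" come in.
def search_docs(query: str) -> str:
    return f"results for {query!r}"


def send_email(body: str) -> str:
    return f"email sent: {body!r}"


@dataclass
class ToyAgent:
    tools: dict[str, Callable[[str], str]]
    memory: list[str] = field(default_factory=list)

    def perceive(self, user_input: str) -> str:
        # Perception: take in raw input. Multi-step user input is untrusted,
        # so it should be treated as data, not as instructions to obey.
        return user_input.strip()

    def think(self, observation: str) -> tuple[str, str]:
        # "Brain": a trivial rule-based planner standing in for an LLM.
        # It picks a tool name and an argument for that tool.
        if observation.lower().startswith("email:"):
            return "send_email", observation[len("email:"):].strip()
        return "search_docs", observation

    def act(self, tool_name: str, arg: str) -> str:
        # Action: only tools explicitly registered with this agent may run.
        if tool_name not in self.tools:
            raise PermissionError(f"tool {tool_name!r} is not allowed")
        result = self.tools[tool_name](arg)
        # Memory: this toy only appends results. A real agent would read them
        # back during reasoning, another path for poisoned content to re-enter.
        self.memory.append(result)
        return result

    def step(self, user_input: str) -> str:
        tool_name, arg = self.think(self.perceive(user_input))
        return self.act(tool_name, arg)
```

Consider a read-only agent built as `ToyAgent(tools={"search_docs": search_docs})`. It can handle `step("agent security")`, but `step("email: hi")` raises `PermissionError`, because the planner picked a tool the agent was never given.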

This is a great read for those of us focusing on not just the potential and promise of Agentic AI, but also the potential unique risks and considerations for implementing them securely.

Agentic AI and SecOps

Building on the theme of Agentic AI and its potential, I recently sat down with Filip Stojkovski and Dylan Williams to dive into this exact topic in an episode of Resilient Cyber titled “Agentic AI & SecOps”, which you can listen to below!

What is Agentic AI, and How Will it Change Work?

As I’ve been discussing, Agentic AI has been the key theme of 2024, and now 2025, when it comes to the most exciting and talked-about AI trends. This piece from Harvard Business Review (HBR) does a great job explaining what exactly Agentic AI is and how it will impact work across a wide variety of industries and verticals.

This goes beyond the typical focus of software development and technology and looks at the implications of agentic AI in many other non-traditional tech industries.

For a great discussion on the topic, I was also recently listening to the a16z podcast from VC firm Andreessen Horowitz. They had an episode titled “How AI is Transforming Labor Markets” that unpacked the idea of Services-as-a-Software, including pricing and the role of AI in markets from nursing to legal and more.

AI Engineering Resources

I’ve previously shared how I’ve been reading Chip Huyen’s book AI Engineering. It’s easily one of the best books I’ve read on AI yet. It has really helped me understand the technical concepts of how GenAI and LLMs work under the hood, their hosting environments, their pitfalls, risks, and much more.

Chip recently shared her repository for the book where she captured a TON of amazing links and resources she gathered while writing the book. This is one to bookmark if you want to dig deeper into the domain of AI and have hundreds of papers and resources on hand.

AppSec, Vulnerability Management, and Software Supply Chain

Prevention is Ideal but Detection and Response is a Must

Cloud Security leader Wiz recently introduced their Wiz “Defend” capability, adding to Wiz Cloud and Wiz Code. This enhances their CNAPP offering, specifically around runtime coverage and defense, for use cases such as SecOps, incident response, digital forensics and more.

I took a look at that in a recent article titled “Prevention is Ideal but Detection and Response is a Must: Introducing Wiz Defend”.

Beyond the Tiered SOC

I recently shared a piece from Filip Stojkovski and Dylan Williams on what a SecOps AI Blueprint may look like. Filip actually just dropped a bit of a follow-up article focusing on “Rethinking the SOC with Autonomous SecOps Orchestration”.

Anyone who has worked with SOCs knows the tiered concept (e.g. Tier 1, Tier 2 and Tier 3), but is it time to rethink this model that dates back literally decades? Not only are the tiers potentially dated, but they often mean different things to different organizations and teams.

Filip looks at the SOC not strictly through tiers, but by visualizing activities across the incident response (IR) lifecycle phases of Preparation, Identification, Containment, Eradication, Recovery, and Lessons Learned, as seen below. He maps these activities at varying levels of autonomy, since SecOps is emerging as a key target for Agentic AI use cases.

His article looks at which activities within these various phases have the potential to be partially or fully automated/autonomous. While there are many potential scenarios, environments, tech stacks, and more, it is clear that the opportunity for automation and for leveraging AI agents in SOC use cases is very strong.
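Filip's phase-by-autonomy view can be expressed as a simple data model. The sketch below is a hypothetical illustration. The six phases come from the article, but the example activities and the autonomy level assigned to each are my own placeholders, not Filip's mapping.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class Phase(Enum):
    PREPARATION = "Preparation"
    IDENTIFICATION = "Identification"
    CONTAINMENT = "Containment"
    ERADICATION = "Eradication"
    RECOVERY = "Recovery"
    LESSONS_LEARNED = "Lessons Learned"


class Autonomy(Enum):
    MANUAL = 0   # human does the work
    PARTIAL = 1  # agent drafts or recommends, human approves
    FULL = 2     # agent acts on its own within policy


@dataclass(frozen=True)
class Activity:
    name: str
    phase: Phase
    autonomy: Autonomy


# Hypothetical example activities, not taken from the article.
ACTIVITIES = [
    Activity("Maintain detection playbooks", Phase.PREPARATION, Autonomy.PARTIAL),
    Activity("Enrich alert with threat intel", Phase.IDENTIFICATION, Autonomy.FULL),
    Activity("Triage and classify alert", Phase.IDENTIFICATION, Autonomy.PARTIAL),
    Activity("Isolate compromised host", Phase.CONTAINMENT, Autonomy.PARTIAL),
    Activity("Remove persistence mechanisms", Phase.ERADICATION, Autonomy.PARTIAL),
    Activity("Restore from known-good backup", Phase.RECOVERY, Autonomy.MANUAL),
    Activity("Draft post-incident report", Phase.LESSONS_LEARNED, Autonomy.FULL),
]


def by_phase(activities: list[Activity], level: Autonomy) -> dict[Phase, list[str]]:
    """Group activity names by IR phase, keeping only those at the given autonomy level."""
    grouped: dict[Phase, list[str]] = {phase: [] for phase in Phase}
    for activity in activities:
        if activity.autonomy == level:
            grouped[activity.phase].append(activity.name)
    return grouped


if __name__ == "__main__":
    for phase, names in by_phase(ACTIVITIES, Autonomy.FULL).items():
        print(f"{phase.value}: {names or '-'}")
```

Modeling autonomy per activity, rather than per analyst tier, makes it easy to ask which parts of each phase are candidates for agents today.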

The future of AppSec, Integrating LLMs, and AI Agents

We continue to see a ton of promise and hope for both LLMs and AI agents (Agentic AI), and for their implications for various fields in cyber, including AppSec. This is a great series of articles on the topic.

The series of articles takes a look at the implications for the future of AppSec and the role GenAI and LLMs may play, including in activities such as risk classification, risk assessment, threat modeling, and much more. These technologies also have the opportunity to aid teams who are often understaffed, overwhelmed and struggling to keep up.

Anshuman Bhartiya even put together a YouTube video discussing “Scaling AppSec Activities Using LLM’s”.

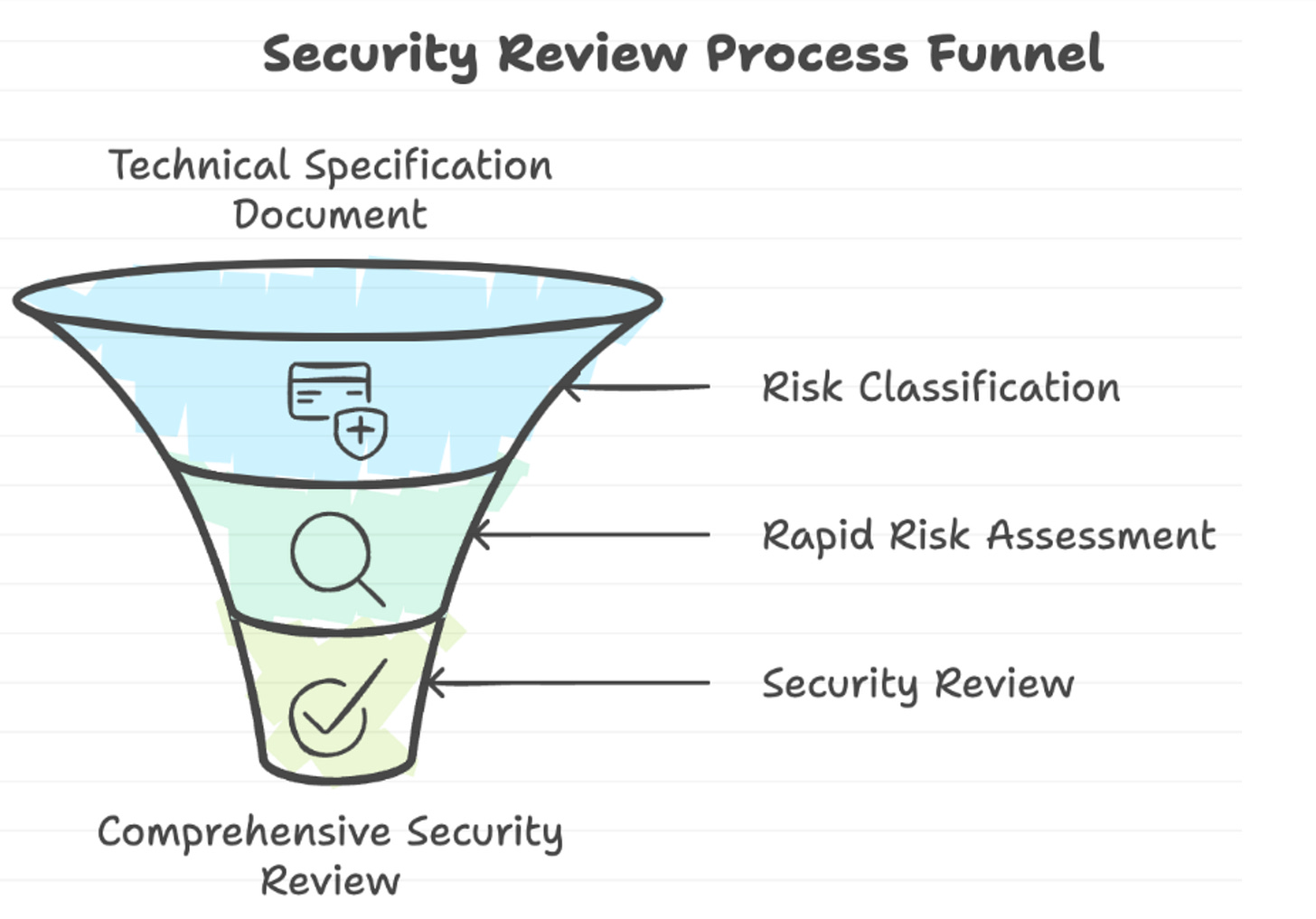

He also put together a great visualization of what he frames as the “Security Review Process Funnel”. It shows the various activities AppSec practitioners perform to contribute to a comprehensive security review. It also shows that these activities are largely manual, cumbersome, and struggle to scale, which, as we know, often makes security a blocker for Developers and causes friction with the business.

The article goes on to walk through uploading technical specification documents and highlighting key risks and considerations in key areas such as authentication and authorization among others.

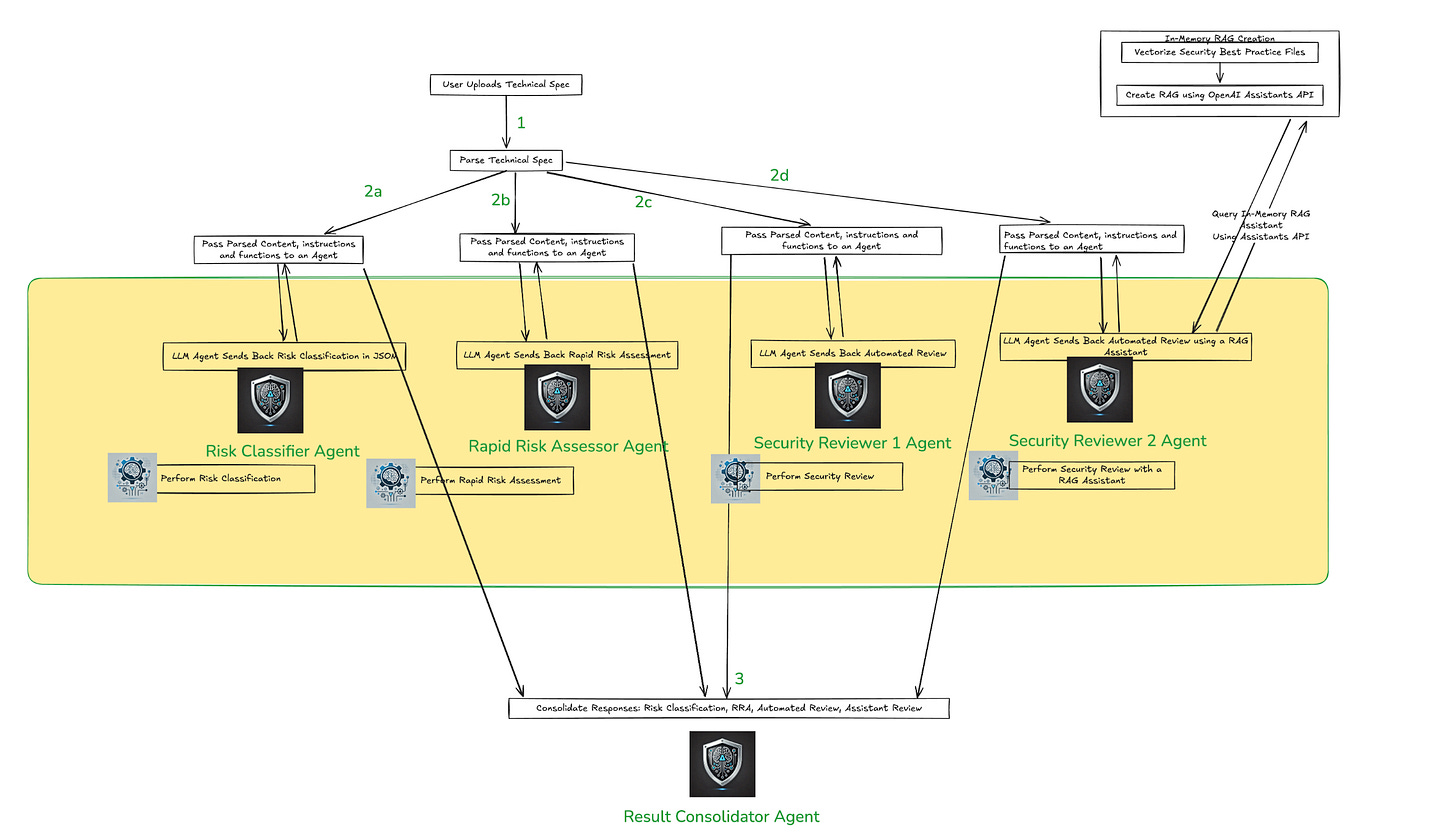
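The front of that review funnel can also be sketched cheaply, before any LLM reads the spec. The Python below is a hedged illustration, not Anshuman's implementation. It splits a Markdown-style spec on `## ` headings and routes each section to review areas such as authentication and authorization using a naive keyword map. It then builds a focused prompt per area. `REVIEW_AREAS`, `triage`, and `build_prompt` are hypothetical, and no model is actually called.

```python
from __future__ import annotations

import re

# Hypothetical keyword map. Plain substring matching is naive and will
# produce both misses and false hits; it only decides where to look first.
REVIEW_AREAS = {
    "authentication": ["login", "password", "oauth", "sso", "mfa", "token"],
    "authorization": ["role", "permission", "admin", "access control", "scope"],
    "data protection": ["pii", "encrypt", "secret", "credit card"],
}


def split_sections(spec: str) -> dict[str, str]:
    """Split a Markdown-style spec on '## ' headings into {title: body}."""
    sections: dict[str, str] = {}
    for chunk in re.split(r"^## ", spec, flags=re.MULTILINE):
        if chunk.strip():
            title, _, body = chunk.partition("\n")
            sections[title.strip()] = body
    return sections


def triage(spec: str) -> dict[str, list[str]]:
    """Return {review_area: [titles of sections that mention it]}."""
    hits: dict[str, list[str]] = {area: [] for area in REVIEW_AREAS}
    for title, body in split_sections(spec).items():
        text = f"{title}\n{body}".lower()
        for area, keywords in REVIEW_AREAS.items():
            if any(keyword in text for keyword in keywords):
                hits[area].append(title)
    return hits


def build_prompt(area: str, section_title: str, section_body: str) -> str:
    """Assemble a focused review prompt to hand to whichever LLM you use."""
    return (
        f"You are an application security reviewer. Focus only on {area}.\n"
        f"List concrete risks and open questions for the section '{section_title}':\n\n"
        f"{section_body.strip()}"
    )
```

Keeping the routing step deterministic and cheap means the expensive model calls only see the sections, and the questions, that are likely to matter.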

Building on the theme of Agentic AI and multi-agent architectures, with agents performing specific tasks and activities, he even shows what this may look like in action. He uses open-source options such as OpenAI’s Swarm agentic framework.
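Swarm's core idea is the handoff: an agent's function can return another agent, passing control to a more specialized one. The sketch below imitates that pattern in plain Python, with no Swarm dependency and no LLM, so it is not Swarm's API. The agent names and their checks are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Agent:
    name: str
    # Returns (finding, next_agent). Returning another Agent is the handoff;
    # returning None ends the run. Plain functions stand in for LLM calls.
    run: Callable[[str], tuple[str, Optional[Agent]]]


def review_authz(spec: str) -> tuple[str, Optional[Agent]]:
    if "admin" in spec:
        return "check role enforcement on admin endpoints", None
    return "nothing flagged", None


def review_authn(spec: str) -> tuple[str, Optional[Agent]]:
    finding = "verify token expiry and rotation" if "token" in spec else "nothing flagged"
    return finding, AUTHZ_REVIEWER  # hand off to the authorization specialist


def route_spec(spec: str) -> tuple[str, Optional[Agent]]:
    # A real triage agent would let the model decide whom to hand off to.
    return "spec received", AUTHN_REVIEWER


AUTHZ_REVIEWER = Agent("authz-reviewer", review_authz)
AUTHN_REVIEWER = Agent("authn-reviewer", review_authn)
TRIAGE = Agent("triage", route_spec)


def run_pipeline(start: Agent, spec: str, max_hops: int = 5) -> list[str]:
    """Follow handoffs from `start`, collecting one finding per agent visited."""
    findings: list[str] = []
    agent: Optional[Agent] = start
    hops = 0
    while agent is not None and hops < max_hops:
        finding, next_agent = agent.run(spec)
        findings.append(f"{agent.name}: {finding}")
        agent = next_agent
        hops += 1
    return findings
```

The `max_hops` cap is a small design choice worth keeping in any handoff loop, so that a misbehaving agent cannot bounce control around forever.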

He ultimately summarizes his lessons learned and observations, including the potential of multi-agent architectures to perform complex workflows and activities and to accelerate AppSec practitioners through augmentation.

That said, I was happy to see he also raised concerns about the autonomy of agents, something the OWASP LLM Top 10 calls out as a key risk. He noted that guardrails are needed to account for unintended consequences and errors in agentic activities, as well as to safeguard the agents themselves and how they are used.
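One minimal way to express the kind of guardrails described above is a policy check that runs before every agent action. The OWASP Top 10 for LLM Applications calls the underlying risk Excessive Agency. This is a sketch under stated assumptions: the action names, the split into read-only and high-impact actions, and the `approve` callback standing in for a human reviewer are all hypothetical.

```python
from __future__ import annotations

from typing import Callable

# Hypothetical action policy for an AppSec agent; tune to your own tools.
READ_ONLY_ACTIONS = {"read_file", "list_endpoints", "search_code"}
HIGH_IMPACT_ACTIONS = {"open_pull_request", "send_http_request", "delete_branch"}


class GuardrailViolation(Exception):
    """Raised when an agent action is blocked."""


class Guardrail:
    def __init__(self, approve: Callable[[str, str], bool], max_actions: int = 20) -> None:
        self.approve = approve          # human-in-the-loop approval callback
        self.max_actions = max_actions  # hard cap to stop runaway loops
        self.count = 0
        self.audit_log: list[str] = []

    def check(self, action: str, arg: str) -> None:
        """Allow the action or raise GuardrailViolation; every decision is logged."""
        self.count += 1
        if self.count > self.max_actions:
            self.audit_log.append(f"DENIED {action}({arg}): budget exhausted")
            raise GuardrailViolation("action budget exhausted")
        if action in READ_ONLY_ACTIONS:
            decision = "ALLOWED"
        elif action in HIGH_IMPACT_ACTIONS and self.approve(action, arg):
            decision = "APPROVED"
        else:
            # Unknown actions and rejected high-impact actions are both denied.
            self.audit_log.append(f"DENIED {action}({arg})")
            raise GuardrailViolation(f"{action} was not allowed")
        self.audit_log.append(f"{decision} {action}({arg})")
```

Denying by default (anything not on an allowlist is rejected) and keeping an audit log are the two choices doing most of the work here. The action budget is a cheap backstop against an agent stuck in a loop.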

Following up on this, he has a second article where he explores building an agent for OffSec purposes, in this case analyzing JavaScript API endpoints and looking for vulnerabilities.

The workflow covers the entire process, including identifying and reporting on vulnerabilities. The non-deterministic nature of LLMs allowed them to get creative in exploring and identifying potential vulnerabilities. The author emphasizes his belief that agentic AI is the future, including for AppSec and OffSec use cases, due to massive time savings, productivity gains, and the ability to scale individual impact like nothing before.
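A common deterministic first step in this kind of OffSec workflow is pulling candidate API endpoints out of JavaScript. An agent could call this as a tool before reasoning about each endpoint. The sketch below is my own illustration, not the author's code. The regexes are assumptions and will miss or mis-match cases that a real JavaScript parser would handle.

```python
import re

# Naive patterns for string literals that look like API paths or full URLs.
# Quotes, single quotes, and backticks (template literals) are all accepted.
ENDPOINT_RE = re.compile(r"""["'`](/(?:api|v\d+)/[A-Za-z0-9_\-/{}.:$]*)["'`]""")
URL_RE = re.compile(r"""["'`](https?://[^"'`\s]+)["'`]""")


def extract_endpoints(js_source: str) -> list:
    """Return unique candidate endpoints: relative API paths first, then absolute URLs."""
    seen = {}  # dict preserves insertion order, giving de-duplication for free
    for pattern in (ENDPOINT_RE, URL_RE):
        for match in pattern.finditer(js_source):
            seen.setdefault(match.group(1), None)
    return list(seen)


if __name__ == "__main__":
    bundle = 'fetch("/api/users/" + id); axios.post(\'/api/v2/orders\', body);'
    print(extract_endpoints(bundle))
```

Handing the model a short, de-duplicated list of endpoints, rather than an entire minified bundle, keeps the creative part of the workflow focused on where vulnerabilities are likely to be.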

Open Source Threat Feed - Powered by LLMs

Organizations continually look for new ways to leverage GenAI and LLMs for cybersecurity use cases. Another recent example is from AppSec company Aikido, which published a blog and project they’ve dubbed “Intel”, an open source threat feed powered by LLMs.

They used a custom-trained LLM to review changes in packages and identify when a security issue has been fixed. This includes public changelogs and release notes indicating when security issues have been fixed. Many of these fixes occur before the vulnerabilities are even disclosed in vulnerability databases.

Through their testing and early implementation, they were able to identify 511 vulnerabilities that were patched but not disclosed publicly. This of course poses risks for downstream consumers, who wouldn’t be aware of the vulnerabilities until they reach widely used vulnerability databases.

They go on to report that 67% of the vulnerabilities they identified never get publicly disclosed, or are “silently patched” as it is often called. They showed that this was occurring not only in smaller open source projects but also in ones with tens of millions of downloads.

For those that did get disclosed, they said it took an average of 27 days from the patch release to a CVE being assigned. The gap ranged from as little as a day to as long as 9 months.
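These disclosure-lag figures come down to simple date arithmetic over (patch release, CVE assignment) pairs. The sketch below shows that calculation. Any dates you feed it in testing are your own; nothing here reproduces Aikido's data.

```python
from __future__ import annotations

from datetime import date
from statistics import mean


def disclosure_lags(pairs: list[tuple[date, date]]) -> dict[str, float]:
    """Days from patch release to CVE assignment: mean, min, and max."""
    lags = [(cve_assigned - patched).days for patched, cve_assigned in pairs]
    if not lags:
        raise ValueError("need at least one (patch, CVE) pair")
    if any(lag < 0 for lag in lags):
        raise ValueError("CVE assigned before the patch release; check the data")
    return {"mean": mean(lags), "min": min(lags), "max": max(lags)}
```

As a hypothetical example, three pairs with lags of 1, 30, and 274 days give a minimum of 1, a maximum of 274, and a mean of about 101.7 days. This is why a single average hides such a wide range.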

The LLM looks for keywords and contextual cues in the changelogs maintained by open source projects that indicate a change is relevant to a vulnerability. They also speculate on why vulnerabilities may not be getting disclosed, with reasons ranging from concerns about a project’s reputation to simply a lack of resources.
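Aikido's approach relies on a custom-trained LLM. The underlying idea of scanning changelog entries for security cues can, however, be illustrated with a much cruder keyword heuristic. The patterns, weights, and `threshold` below are hypothetical assumptions. Anything flagged would still need a human to verify it.

```python
import re

# Hypothetical cue lists: strong cues are weighted 3, weak cues 1.
STRONG_CUES = [
    r"\bsecurity fix\b", r"\bvulnerab", r"\bCVE-\d{4}-\d{4,}\b", r"\bXSS\b",
    r"\bSQL injection\b", r"\bpath traversal\b", r"\bprototype pollution\b", r"\bReDoS\b",
]
WEAK_CUES = [r"\bsanitiz", r"\bescape", r"\bvalidat", r"\bDoS\b", r"\bbypass\b"]


def score_entry(entry: str) -> int:
    """Weighted count of distinct cue patterns found in one changelog entry."""
    strong = sum(bool(re.search(p, entry, re.IGNORECASE)) for p in STRONG_CUES)
    weak = sum(bool(re.search(p, entry, re.IGNORECASE)) for p in WEAK_CUES)
    return 3 * strong + weak


def flag_possible_silent_fixes(changelog: str, threshold: int = 2) -> list:
    """Return changelog bullet lines whose cue score meets the threshold."""
    flagged = []
    for line in changelog.splitlines():
        entry = line.strip().lstrip("-* ").strip()  # drop bullet markers
        if entry and score_entry(entry) >= threshold:
            flagged.append(entry)
    return flagged
```

A keyword pass like this is cheap but brittle, because terse or vague changelogs simply won't match. That gap is presumably where a model that reads context has the edge.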

The problem with silent patching is that downstream users may never learn that the projects and components they use have vulnerabilities. If so, they are unlikely to patch or upgrade the versions they’re running, since known vulnerabilities are often a primary driver for upgrades. This means there could be many vulnerable components running in production environments unbeknownst to their users.

While this is really neat and useful, there are of course some challenges here. One is the lack of high-quality changelogs, comments, and artifacts, which means this method isn’t foolproof and requires some level of manual verification (as they mention, with a human in the loop). Still, it is yet another novel application of LLMs to provide value in cybersecurity.

Vulnerability Scoring and Prioritization Woes Continue

Several articles have come out recently continuing to bemoan both the Common Vulnerability Scoring System (CVSS) and Common Vulnerabilities and Exposures (CVE), along with its ecosystem of CVE Numbering Authorities (CNAs), which can assign CVEs. Promising alternatives and/or high-fidelity signals continue to emerge, such as CISA KEV and EPSS, along with reachability analysis. The reality, however, is that there are no shortcuts, and organizations are, and will continue to be, outright overwhelmed by the number of vulnerabilities they need to deal with.
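Combining these signals doesn't make the volume go away, but it can change the order in which teams work through it. The sketch below ranks findings by CISA KEV membership first, then by reachability and EPSS, with CVSS used only as a tie-breaker. The tier order and the 0.1 EPSS threshold are illustrative assumptions, not a standard, and the CVE IDs used in testing are placeholders.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Finding:
    cve_id: str
    cvss: float       # CVSS base score, 0.0-10.0
    epss: float       # EPSS exploitation probability, 0.0-1.0
    in_kev: bool      # listed in CISA's Known Exploited Vulnerabilities catalog
    reachable: bool   # vulnerable code path reachable per reachability analysis


def priority_tier(f: Finding, epss_threshold: float = 0.1) -> int:
    """Lower is more urgent. Tier order and threshold are illustrative assumptions."""
    if f.in_kev:
        return 1  # known to be exploited in the wild
    if f.reachable and f.epss >= epss_threshold:
        return 2  # reachable and relatively likely to be exploited
    if f.reachable:
        return 3  # reachable, lower exploitation likelihood
    return 4      # not shown to be reachable: backlog


def prioritize(findings: list[Finding]) -> list[Finding]:
    """Sort by tier, then by EPSS and CVSS (both descending) as tie-breakers."""
    return sorted(findings, key=lambda f: (priority_tier(f), -f.epss, -f.cvss))
```

With this ordering, a CVSS 9.8 finding that is neither known-exploited nor reachable lands behind a reachable CVSS 5.3 finding with a high EPSS score.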

There is a lot of positive talk about Secure-by-Design, which in theory would stem the flow of vulnerabilities downstream to customers and consumers. However, the movement is far from all-encompassing and is ultimately voluntary at the end of the day.

This was similarly echoed in a recent piece on CSO Online titled “Security researchers find deep flaws in CVSS vulnerability scoring system”. In this article, the complaints included the severity of vulnerabilities being underrated, a lack of consideration for APT, exploitability and so on.

I laid out many of these concerns myself in a 2023 article titled “Will CVSS 4.0 be a vulnerability-scoring breakthrough or is it broken”, as well as in my book Effective Vulnerability Management.