Resilient Cyber Newsletter #26

Secure Design vs. Default, Y-Combinator “Request for Startups”, Agentic Security Marketmap, LLMs in Production & Revolutionizing SecOps with AI

Welcome

Welcome to another issue of the Resilient Cyber Newsletter!

You would think things would slow down a bit as the year comes to a close, but it has been quite the opposite, with new research, fundraising, legal action, and continued innovation around AI and its intersection with AppSec and Vulnerability Management.

That said, let’s get to it.

Interested in sponsoring an issue of Resilient Cyber?

This includes reaching over 7,000 subscribers, ranging from Developers, Engineers, and Architects to CISOs/Security Leaders and Business Executives.

Reach out below!

Cyber Leadership & Market Dynamics

Design vs Default: Analyzing Shifts in Cybersecurity

CISA has spent more than a year advocating for an initiative they have called Secure-by-Design (and Default). CISA’s Director, Jen Easterly, has been among the most outspoken advocates of Secure-by-Design, giving many great talks on the topic and bringing industry leaders together to rally around this shift.

She recently shared a Congressional hearing titled “Design vs. Default: Analyzing Shifts in Cybersecurity”, which featured testimonies from Heather Adkins (Google), Jim Richberg (Fortinet), Shane Fry (RunSafe Security), and Srinivas Mukkamala (New Mexico Institute of Mining and Technology).

The speakers highlighted systemic challenges across the software ecosystem, including insecure design, a lack of memory safety, and failure to establish a secure SDLC, as well as the impact these have across society now that software powers everything from consumer goods to critical infrastructure.

They also discussed the challenges of trying to change the market incentives and dynamics at play that contribute to these vulnerabilities and risks.

Speaking of Jen Easterly, she unfortunately announced she will resign from CISA at the time of the upcoming U.S. Presidential administration change. Jen has been an incredible advocate for a more secure digital society and she recently had a great conversation on a wide range of topics with Jason Healey.

Jen has been a force in the industry, driving CISA efforts such as Secure-by-Design forward, and I truly hope and suspect we will see her continue making an impact post-CISA.

Incidents DO Cause Impact - Sometimes

I’ve often cited studies and research, including from CISA and others, that point out that cybersecurity incidents often don’t have damning financial and business impacts for organizations. This is why I wanted to be fair and share this story, showing how a ransomware attack contributed to the U.S. arm of spirits maker Stoli Group filing for bankruptcy. In its filing, the organization states that the ransomware incident and its impact played a part in the need to file.

The organization suffered a ransomware incident in August that disabled their primary system for tracking resources and operations, and shut down essential functions, including accounting.

FCC Calls for Urgent Cyber Overhaul Amid Salt Typhoon

I recently shared that several leading U.S. telecom providers were impacted by a cyber espionage incident, which some have dubbed “the worst telecom hack in U.S. history.” Now, the FCC is calling for urgent cybersecurity changes in light of this incident.

Considerations include a draft “Declaratory Ruling” that would mandate that telecom carriers secure their networks against unauthorized access (something you would expect leading telecom providers to already do).

The incident involved stolen data and intercepted phone communications, including those of notable political leaders, presidential candidates, and nominees. Telecom providers would have to submit annual certifications to the FCC stating they “created, updated, and implemented a cybersecurity risk management plan, which would strengthen communications from future cyberattacks.”

It is also reported that the Cyber Safety Review Board (CSRB) will be focusing on the incident and investigating and reporting on it, so we will inevitably learn much more as their report is released.

New York State (NYS) Fines Geico and Travelers $11.3 Million for Data Breaches

NYS recently shared that they fined auto insurers Geico and Travelers a combined $11.3 million for lapses in their respective cyber programs that led to data breaches impacting over 100,000 people during COVID-19.

The incidents involved a breach of Geico’s online quoting tool, in which attackers stole driver’s license numbers and dates of birth, and the use of stolen credentials to pivot into Travelers’ insurance quoting tool, which displayed license numbers in plain text and lacked MFA. The incidents went undetected for seven months.

The companies were cited as violating 2017 cyber regulations under DFS in NYS, which are some of the toughest in the nation when it comes to governing data protection.

This is a great example of organizations not only failing at fundamental security controls but also being impacted by the patchwork state-level regulatory landscape we have in place due to a lack of nationwide cybersecurity regulations.

That said, critics have pointed out that these fines are a “slap on the wrist” and not financially material to the businesses, and it remains to be seen what changes they drive.

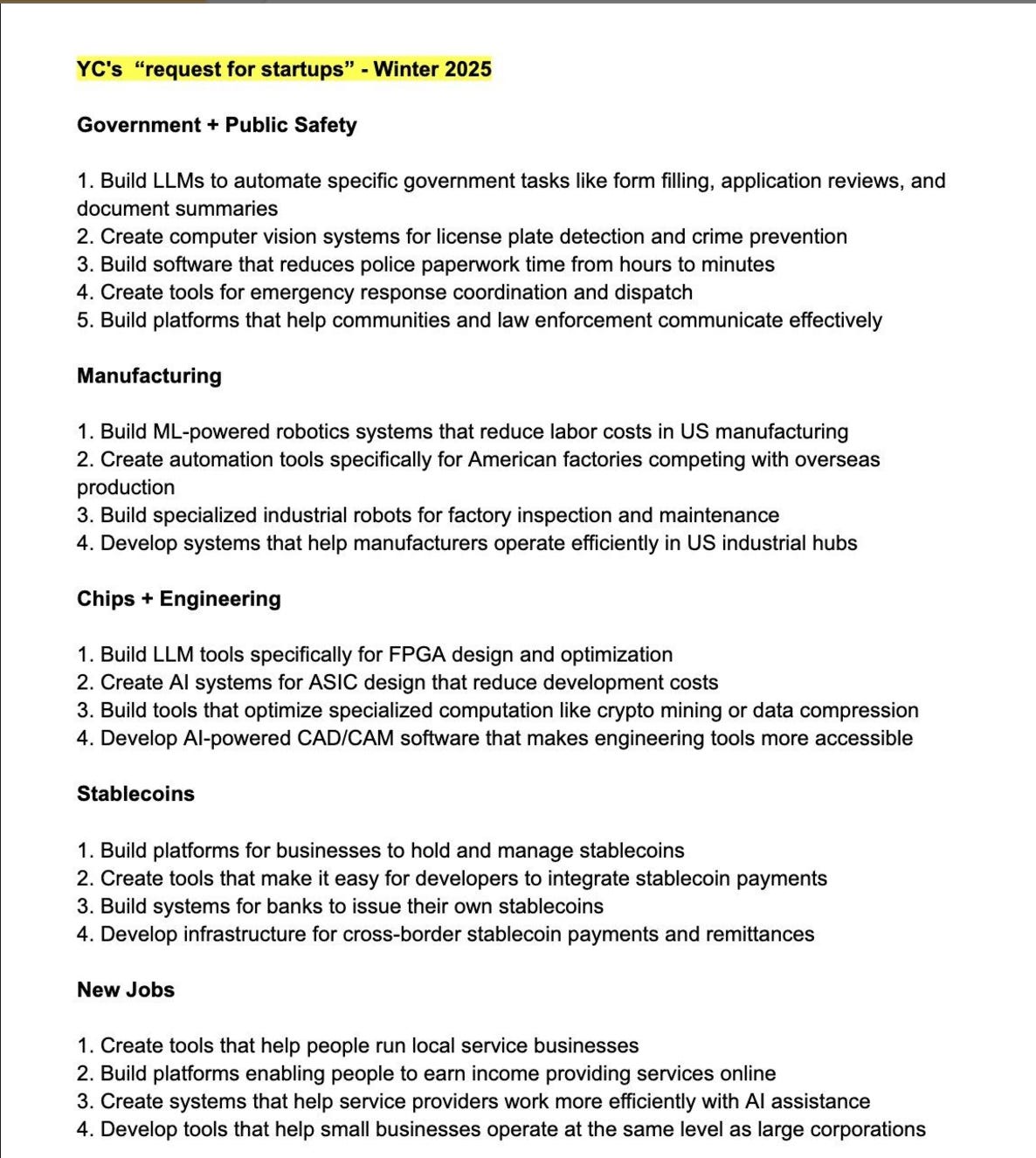

Y-Combinator “Request for Startups”

VC Y-Combinator released what they dub their “request for startups” for 2025, laying out the key areas where they are seeking startups and founders and where they project innovation and opportunities reside, see below:

Government being listed at the top speaks to several things. For a long time, Government and Public Sector were considered a dead end by many VCs and founders.

We now of course see major changes across the VC landscape on both the Federal Civilian and Defense side, with breakout companies like Anduril, Palantir, and others (although they are definitely unique in their capital resources) and efforts by the Defense Innovation Unit (DIU) and countless others to address longstanding gaps between Silicon Valley and the public sector. This comes at a time when we’re hearing a lot about the need for Government efficiency and modernization as well.

Israel’s First Defense-Exclusive VC Fund

Speaking of defense-focused investments, news broke that a VC named “Protego” out of Israel raised $70M in just two weeks, aiming to raise a $200M round. This will be Israel’s first defense-focused VC firm. This of course comes after a tumultuous period in Israel and further emphasizes the international intersection of capital, technology and national security.

Tuskira Emerges from Stealth - $28.5M Seed Round, AI-Powered Unified Threat Defense Platform

News broke this week that Tuskira emerged from stealth mode with $28.5M in funding, including investments from Intel Capital, SYN Ventures, Rain Capital and others. It is looking to unify security tools with automated security control assessments and in-depth exploit analysis of vulnerabilities across code, cloud environments, apps and infra.

The founding team includes Piyush Sharrma (CEO), Om Moolchandani (CISO) and Vipul Parmar (CTO), who bring decades of startup and large cyber vendor experience. I was fortunate enough to collaborate with Piyush and Om at their previous startup, Accurics, which focused on Infrastructure-as-Code (IaC) Security and was acquired by Tenable.

I’m excited to see what their team does this time around and think the area they are focusing on has a ton of potential given the noise of shift left, the need for runtime context and the ability of AI and LLMs to make sense of the cybersecurity tool sprawl most organizations suffer from.

Astrix Security Announces $45M Series B

Non-Human Identities (NHIs) continue to be a hot topic in the security industry, as organizations grapple with credential compromise as a leading cause of security incidents and the explosion of NHIs due to cloud, microservices and automation.

NHI startup Astrix Security (where I’m an advisor) announced their $45M Series B, which was led by Menlo and Anthropic to “enhance AI era identity protection”. The company helps identify and inventory NHIs and automatically detect and remediate over-privileged, unnecessary and malicious access that leads to incidents and data breaches.
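To make the idea of "over-privileged" and "unnecessary" access a bit more concrete, here is a minimal sketch of one way such a check could work. It is not how Astrix or any specific product does it; the `NonHumanIdentity` class, the `review` function, the permission strings and the 90-day threshold are all hypothetical and exist only for illustration.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class NonHumanIdentity:
    """Hypothetical record for a service account, API key, or bot."""
    name: str
    granted: set      # permissions the identity currently holds
    used: set         # permissions actually observed in use
    last_used: date   # last day any activity was seen


def review(identity, today, stale_after_days=90):
    """Flag permissions that were granted but never used, and identities
    with no recent activity. The 90-day default is an arbitrary assumption."""
    unused = sorted(identity.granted - identity.used)
    stale = (today - identity.last_used).days > stale_after_days
    return {"over_privileged": unused, "stale": stale}
```

For example, a CI bot that holds `repo:write` and `secrets:read` but has only ever used `repo:read` would be reported with those two unused permissions, and would also be marked stale if it had been idle longer than the threshold.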

I’m pleased to see the involvement of Anthropic in the announcement and funding, because as I wrote in my recent piece titled “Agentic AI’s Intersection with Cybersecurity”, we’re seeing a rapid push for Agentic AI and AI Agents. Each of these agents will need identity, authentication and authorization, and they will far outnumber human identities in enterprise environments, which poses a significant amount of risk that organizations will need to address. It looks like AI leaders such as Anthropic see the same potential!

AI

Agentic AI’s Intersection with Cybersecurity

As you’ll notice in this issue of Resilient Cyber, and more broadly in the industry, Agentic AI, or AI Agents, are causing a ton of buzz. 2024 and now 2025 are even being dubbed “the year of AI agents”.

That’s why I recently published a standalone article looking at this trend titled “Agentic AI’s Intersection with Cybersecurity: Looking at one of the hottest trends in 2024-2025 and its potential implications for cybersecurity”.

In the article, I discuss:

What is Agentic AI

Why Agentic AI is top of mind for so many, including Venture Capitalists (VCs), Private Equity Firms, Investors, Startups, Founders, and Practitioners

Potential intersection with and implications for cybersecurity, including areas such as:

GRC

AppSec

SecOps

Agentic AI is easily one of the hottest trends in the industry right now, including in Cyber, and it is very likely to shape the landscape of many industries and niches for years to come, due to the autonomous power and potential of these technologies.

Agentic Security Market Map

Brandon Dixon of Microsoft published a really excellent article discussing the Agentic Security Marketmap. It lays out the various vendors and products along several dimensions, such as domain/task focus, job, and level of autonomy, ranging from assistive to partial and high autonomy.

Brandon discusses several promising startups in key areas such as Incident Triage in the SecOps use case, or Code Vulnerability Analysis on the AppSec front, as well as security copilots/agents that augment security practitioners’ daily activities with enriched context and insights.

The use of GenAI and LLMs will contribute to boosts in developer productivity and the overall volume of code produced. It is inevitable that much of this code will not be safe, especially given that LLMs are trained on existing code bases, which of course include vulnerable code, a point made by AI security leader Caleb Sima in discussions I’ve previously shared. This leads to the need for agentic code vulnerability analysis and remediation, or a situation where AI is both helping develop and then secure code.

Agents and Security - Friend or Foe?

Continuing the focus on AI Agents, or Agentic AI, Shreya Shekhar’s blog looks at Agents and Security and whether they may end up being a friend or foe. Her piece lays out the context around the excitement with Agentic AI, and the difference between LLMs (e.g. System 1 Thinking), hybrids like Copilots, and AI Agents (System 2 Thinking).

Much like many others, her piece primarily focuses on Agentic AI and its implications for SecOps in particular, something I discussed in my recent article “x”. SOC automation via agents holds potential in various capacities, such as tier 1 and potentially 2 SOC analysts, triaging and investigating alerts and remediating simple vulnerabilities.

Agents can help in predictive cases, analyzing data to predict trends and future events, or in generative cases, producing solutions based on the evidence gathered and insights drawn. The image above shows the intersection of Predictive, Generative, and the hybrid scenario as well.

She also discusses the value of using agentic AI to provide insight into various use cases such as vulnerabilities and the assets they involve, credentials and the assets they have access to and much more. We can quickly see how AI when coupled with agents and the ability to leverage multiple tools in conjunction could be a force multiplier for cybersecurity.

The use cases expand beyond the above to cover autonomous pen-testing (using Horizon3.ai as an example), as well as threat analysis & detection, among others. The challenge here of course is that anything we as defenders can use this technology for, so can attackers. Time will tell which side adopts it more effectively to achieve its desired outcomes.

Lastly, she lays out a decision tree to use when trying to determine what problems may be a good fit for AI Agents vs. a single LLM API call or query.

LLMs in Production Database - Real World Case Studies

There is of course a ton of hype about AI, GenAI and LLMs but still not a lot of great information about real-world implementations. That is why this recently launched LLMs-in-production database, with over 300 real-world LLM implementation case studies, is so awesome.

The cool thing is that they also used AI (specifically Claude) to help summarize the case studies and highlight the valuable technical details.

It includes:

Production architectures from startups to enterprises

Detailed technique implementations across industries

Hard-learned lessons about what works

Practical solutions to common challenges like cost optimization, monitoring, and scaling

AppSec, Vulnerability Management and Software Supply Chain

Revolutionizing Security Operations: The Path Toward AI-Augmented SOCs

We’ve been discussing the potential for AI to assist in various areas of Cybersecurity a lot recently, but no area gets more attention for this potential than Security Operations (SecOps).

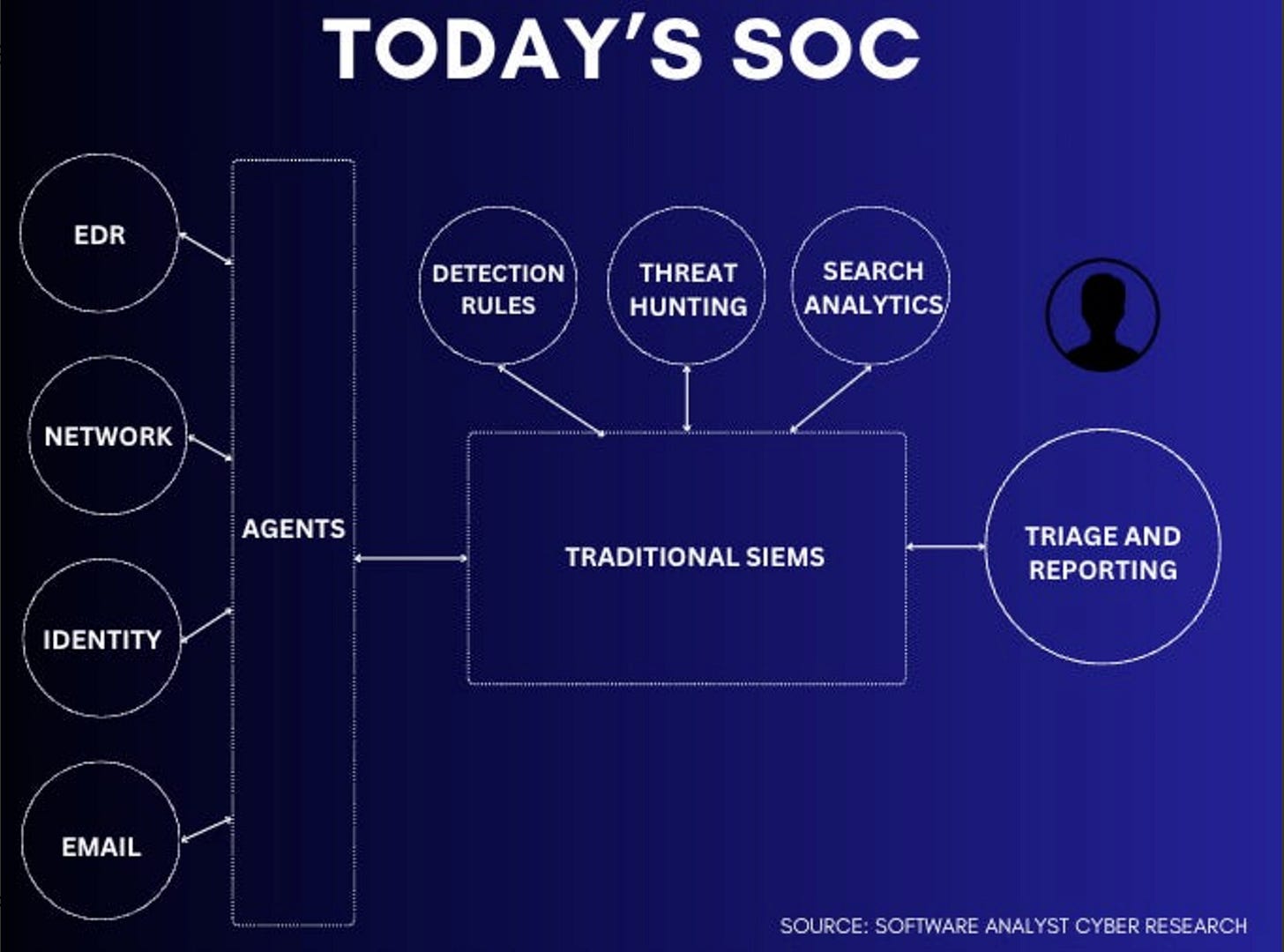

That’s why this comprehensive piece from Filip Stojkovski and Francis Odum, published on the Software Analyst Substack, is so awesome. It goes incredibly deep on the discussion around SecOps/SOCs and AI’s potential.

It starts off covering the existing AI-SOC Assistant Ecosystem, looking at vendors and the areas they focus on from AI-SOC Analyst at different tiers, hyper-automation, AI-powered XDR Security Co-Pilots, and more.

One thing they clarify right out of the gate is that while there is a lot of excitement about the potential for AI in the SOC, nearly all the leaders they spoke to agreed that they do not envision the future of SOCs to be entirely “AI-driven”, meaning there is and will likely always be a place for humans in the SOC.

The piece looks at how most SOCs operate, key pain points and areas where AI holds the most potential for impact. They lay out a comparison (see below) of how existing SOCs primarily operate and what the future SOC architecture may look like:

The article goes on to discuss the key layers of the future SOC architecture, including data fabric, storage and detection, and AI response and automation. All of the components they discuss come together to demonstrate a modern SOC “platform”. I put this in quotation marks because, as you can see, it is a collection of products, vendors and tools, not one - a problem shared by every other area of cyber, such as AppSec.

This is one of the most comprehensive and thought-provoking pieces I’ve seen around AI’s intersection with SecOps, and I definitely recommend giving it a read from both the practitioner and vendor/startup perspective.

Datadog Introduces Supply-Chain Firewall

Malicious open-source packages have created havoc across the software supply chain. As I have discussed in previous articles such as “x” and “y”, compromised open-source packages have become one of the leading causes of software supply chain attacks.

Datadog’s open-source tool, Supply-Chain Firewall, allows blocking known malicious packages and aborting installations of vulnerable packages, and it provides observability into package installations.

It currently supports Python and npm and leverages OSV as well as Datadog’s internal dataset to identify malicious packages and help organizations avoid them.
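The general idea of checking a package against OSV before installing it can be sketched in a few lines of Python. This is a simplified illustration, not Datadog’s implementation: the function names are hypothetical, it relies on the public OSV query endpoint (`https://api.osv.dev/v1/query`), and it assumes that advisories whose ID starts with `MAL-` describe malicious packages while any other advisory is an ordinary vulnerability.

```python
import json
import urllib.request

# Public OSV query endpoint (assumed here; check the OSV API docs before relying on it).
OSV_QUERY_URL = "https://api.osv.dev/v1/query"


def build_osv_query(name, version, ecosystem):
    """Build the JSON payload for an OSV query about one package version."""
    return {"package": {"name": name, "ecosystem": ecosystem}, "version": version}


def fetch_advisories(name, version, ecosystem):
    """Ask OSV which advisories affect this package version (network call)."""
    payload = json.dumps(build_osv_query(name, version, ecosystem)).encode("utf-8")
    request = urllib.request.Request(
        OSV_QUERY_URL, data=payload, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response).get("vulns", [])


def decide(advisories, block_vulnerable=False):
    """Return 'block' or 'allow' for a list of OSV-style advisory dicts.

    Assumption: IDs starting with 'MAL-' mark malicious packages. Ordinary
    vulnerabilities only block when block_vulnerable is set.
    """
    ids = [advisory.get("id", "") for advisory in advisories]
    if any(advisory_id.startswith("MAL-") for advisory_id in ids):
        return "block"
    if block_vulnerable and ids:
        return "block"
    return "allow"
```

A wrapper around `pip install` or `npm install` could call `decide(fetch_advisories(name, version, "PyPI"))` for each resolved package and abort when the answer is `block`. Keeping the decision logic separate from the network call makes the policy easy to test offline.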

Modernizing AppSec

There is a lot of talk about the need to modernize AppSec, as organizations struggle with noisy, low-fidelity tools, silos and frustrations continue to grow between Security and Developers, and vulnerability backlogs continue to balloon out of control.

This was a good conversation on the topic with Security Weekly and Melinda Marks.

AWS GuardDuty Adds AI/ML Threat Detection

One of the biggest events of the year happened this past week: AWS re:Invent. Among the many announcements coming out of the event was the addition of AI/ML threat detection capabilities to Amazon GuardDuty.

AWS added the AI/ML capabilities to help identify threats and correlate security signals that identify active attack sequences in AWS environments.

Supply Chain Attacks Continue

News recently broke that a version of Ultralytics was compromised on PyPI, the official Python Package Index. This supply chain attack involved compromising the build environment of the popular library, which is used for creating custom machine learning models.

This demonstrates the continued evolution of how attackers try to compromise widely used OSS libraries and projects. I suspect 2025 will bring more similar attacks as well as novel techniques.