Resilient Cyber #57

Stuxnet - 15 Years Later, Looming AI Cyber Exits, The U.S. AI Action Plan, Betting Against AI Agents, FedRAMP VulnMgt Evolution, & MSFT Security Woes (Again)

Welcome

Welcome to issue #57 of the Resilient Cyber Newsletter.

It’s been quite the week, with reflections on Stuxnet and the state of IT/OT security, discussions and rumors around Israeli AI cyber exits, the White House unveiling the U.S. AI Action Plan, FedRAMP dropping modernized continuous vulnerability management publications, and Microsoft incidents impacting the industry (again).

So, let’s get going, because we have a lot of ground to cover!

Interested in sponsoring an issue of Resilient Cyber?

This includes reaching over 45,000 subscribers, ranging from Developers, Engineers, and Architects to CISOs/Security Leaders and Business Executives.

Reach out below!

Security Leaders Trust Absolute Security for Endpoint Resilience

Between cyberattacks, outages, and hybrid work, it’s a struggle to keep devices secure, compliant, and operational. Blind spots create risk, and manual remediation slows things down.

Absolute Secure Endpoint 10 introduces AI-powered Assistant for automated workflows and Customizable Dashboards for deeper insights to improve productivity and reduce risk. Required Applications (coming soon) ensures critical tools are in place and configured to optimize compliance.

With self-healing capabilities rooted in firmware, Secure Endpoint 10 revolutionizes endpoint control, security, and resilience from the core.

Take control of your cyber resilience strategy today.

Cyber Leadership & Market Dynamics

One Year Later: Reflecting on Building Resilience by Design

We recently passed the one-year mark (July 19th) of the infamous CrowdStrike incident, which impacted organizations worldwide, from IT firms to airlines. It was one of the most significant IT incidents in recent memory, and the vendor at the center of it, CrowdStrike, recently shared a blog covering some of the key efforts and changes they’ve made in the past year.

This includes a three-pillared approach to Resilience-by-Design, spanning Foundational, Adaptive, and Continuous improvement. They go on to discuss their sensor and content safety, standards for customer control, and infrastructure and operational excellence.

How AI and Outsourcing Are Killing Junior Jobs

We continue to hear about AI's impact on the workforce, predominantly white-collar jobs. So far, that impact has fallen disproportionately on junior roles. This article from CTech appears to emphasize that point, stating:

“Only 360 junior employees entered Israel’s high-tech industry last year, out of approximately 6,500 university graduates who complete relevant degrees each year.”

The article cites findings from Israel’s Employment Service, which revealed that the number of job seekers in high-tech professions has more than doubled, from 7,000 in January 2019 to 15,000 in April 2025. It says these figures don’t typically include entry-level candidates, indicating the real picture may be even worse.

The article goes on to discuss a lack of programs for employers to hire and train entry-level talent, as well as increased use of AI (or aspirations to use it), which is causing companies to slow down hiring while they evaluate whether AI could do the work of some of these junior roles. That said, it also makes the point that junior talent are often “AI natives” who are not only introducing AI to teams but also teaching senior staff how to use it.

It’s worth noting that this article refers to Israel, not the U.S. Still, it does represent a microcosm of a larger discussion about the intersection of AI, economics, and the workforce.

It does remind me of comments from Dirty Jobs host Mike Rowe, who discusses the unintended workforce impacts of steering kids away from blue-collar work and into tech, and the fact that AI isn’t quickly replacing Electricians, Plumbers, Linemen, and more, the way it is with the white-collar workforce. He cites quotes from folks like Larry Fink, who claim we need hundreds of thousands of Electricians and other similar blue-collar workers.

Stuxnet - 15 Years Later & the Evolution of Cyber Threats to Critical Infrastructure

This week the Committee on Homeland Security hosted a session reflecting on the 15 years since Stuxnet and the evolution of threats facing IT/OT environments. It featured witness testimony from industry leaders:

Tatyana Bolton

Kim Zetter

Robert Lee

Nate Gleason

I had a chance to listen to most of the session and there were a lot of great points made, ranging from the evolution of cyber threats and malware, the nuances of OT environments compared to IT, and risks facing critical infrastructure, to the need to sustain funding and support for CISA, and much more.

This was very well done by Tatyana, Kim, Rob and Nate, attempting to explain complex cyber topics to a non-technical audience while not just raising awareness but garnering support for deeper commitments for cybersecurity.

Build vs. Buy in the Age of AI: A New Equation for Security Teams

Does AI change the Build vs. Buy paradigm for security teams?

That's the intriguing question posed by Rob Fry in this piece. Historically, organizations have faced the Build vs. Buy dilemma, which often comes down to factors such as cost, internal expertise and competencies, total cost of ownership (TCO), and more.

With the rise of AI and LLM-driven development, some are revisiting this dilemma with fresh eyes and the ability to replicate vendor capabilities much more easily than in the past. Rob raises a lot of thought-provoking questions and topics in the post.

That said, I still strongly think most organizations will rightfully opt to Buy rather than Build. Building is not a core competency for them, and it is often a distraction from delivering value to stakeholders and customers, so they are better served leaning into innovative commercial offerings and capabilities.

In fact, I think AI will (and likely already does for some) lead to faster, shorter iterations among product versions, along with the ability to field customer requests into product backlogs, innovate, and turn new capabilities around to customers faster than we have seen historically.

Securing the Budget: Demonstrating Cybersecurity’s Return

One of the most real challenges for CISOs and security leaders is demonstrating an ROI for cyber spend. This article from Kara Sprague, CEO of HackerOne, dives into tying security investments to measurable business outcomes, including reduced breach likelihood and minimized financial impact, to help align internal stakeholders when it comes to investing in cybersecurity.

Kara discusses why traditional ROI doesn’t cut it for cyber, due to its distinct characteristics. Whereas other areas such as product and sales focus on revenue generation, new customers and more, cyber is often measured based on risk/cost reduction and avoidance. It is akin to trying to prove a negative, and that is why it is easy to get lulled into a false sense of security. “We haven’t had an incident yet, so we must be spending enough” is a common refrain.

Wave of Israeli AI-Cyber Exits Looming?

As the dust settles from Palo Alto’s massive $700 Million Protect AI acquisition around RSA, something Ed Sim and I discussed in an interview last week, there are now rumors that other AI cyber exits may be on the horizon.

An article from CTech calls out other players such as Lasso, Aim, Pillar and Noma as the next potential M&A targets in the hot Israeli AI cyber landscape.

The article cites Zscaler and F5 as potential acquirers looking to follow PANW’s lead with large AI acquisitions, to accelerate their path to becoming key players in the AI security space. This isn’t a surprise, given that large incumbents often face the Build vs. Buy paradigm when expanding into new categories, and it is often faster to acquire an innovative startup in the space than to try to build the capability organically.

These industry leaders, atop the cyber market through tremendous performance, have “earned” the right to become a platform, as discussed by my friend Ross Haleliuk recently in a piece titled “You don’t start a platform, you earn the right to become one”.

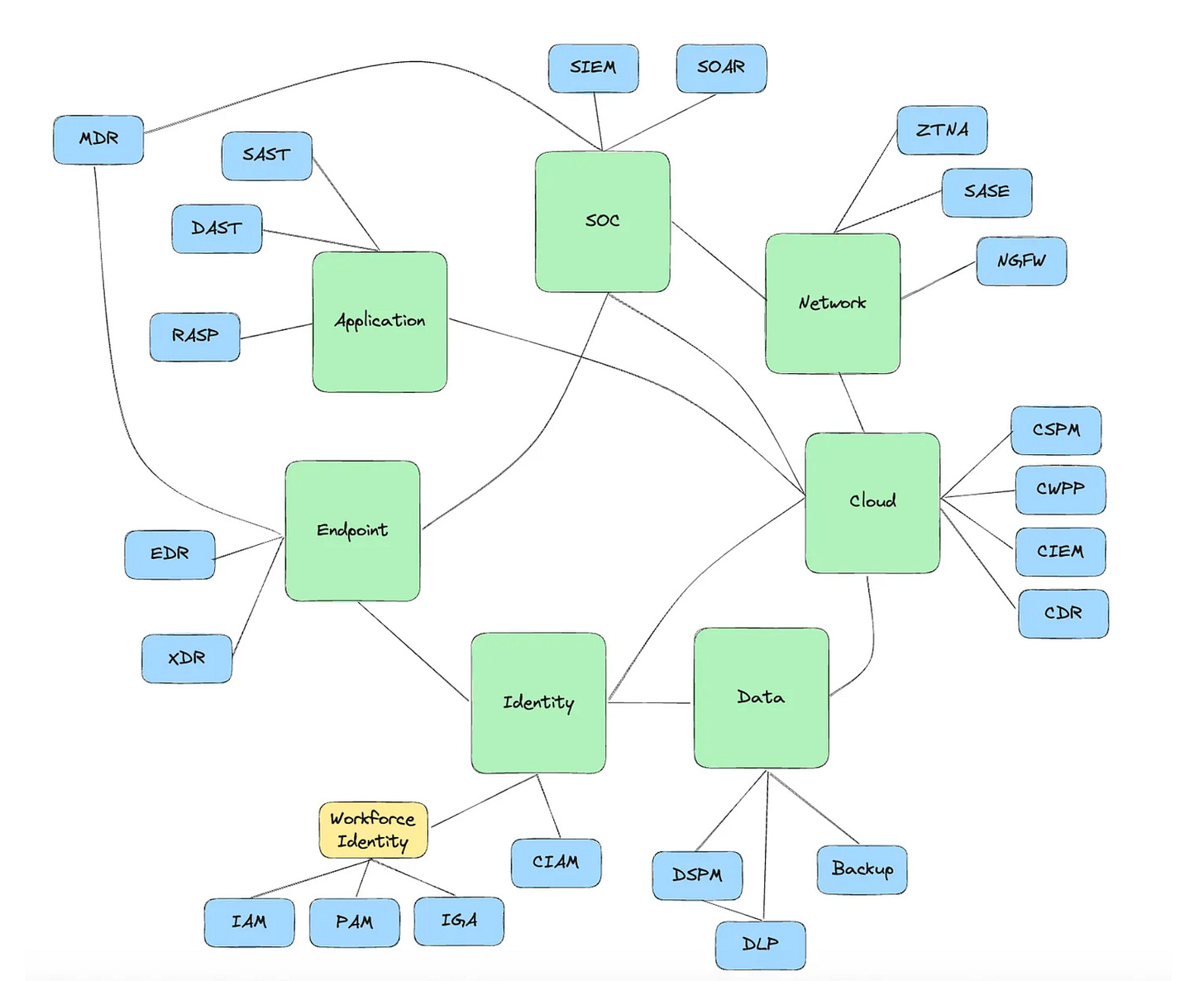

Industry leaders such as PANW, Zscaler and F5 have all earned their stripes, starting with a core competency, and are now rightfully expanding into AI security. That category does and will include various subsets of capabilities itself, something broken down exceptionally well by James Berthoty in his “2025 Latio AI Security Report”, as shown below:

James and I dove deep into this topic in an episode of Resilient Cyber titled “Analyzing the AI Security Market”.

AI

Winning the AI Race

The White House recently unveiled "America's AI Action Plan." It's built around 3 pillars:

1️⃣ Accelerating AI Innovation

2️⃣ Building American AI Infrastructure

3️⃣ Leading in International AI Diplomacy and Security

The plan contains a TON of interesting intersections around cybersecurity and national security, which are all underpinned by economic prosperity.

This plan also emphasizes the further bifurcation of approaches between the U.S. and the EU, with one emphasizing removing barriers to innovation and unleashing economic opportunity, and the other pushing to be a regulatory superpower and saying there will be "no pauses" on regulatory efforts, despite pleas from industry.

I'll be writing a much more detailed article on this, but whether you're an investor, founder, or practitioner in technology and cybersecurity, there's something here for you.

Chamath Palihapitiya and the crew at All-In recently helped facilitate a “Winning the AI Race” event, featuring speakers across Government and industry, including comments from President Trump himself on the AI Action Plan.

Why I’m Betting Against AI Agents in 2025 (Despite Building Them)

The industry fervor around Agentic AI continues to be at a fever pitch, with VC firms dubbing 2025 the “year of agents” and the ecosystem seeing both a lot of capital allocated to Agentic AI startups, as well as founders and incumbents focusing on this space.

That said, this piece from Utkarsh Kanwat is a thoughtful counterpoint to the Agentic AI hype, and it’s coming from someone who has built 12+ production AI agent systems. Utkarsh mentions that they’ve built agents for a variety of purposes, including Development, Data & Infrastructure, and Quality & Process Agents.

He goes on to lay out three hard truths about AI agents:

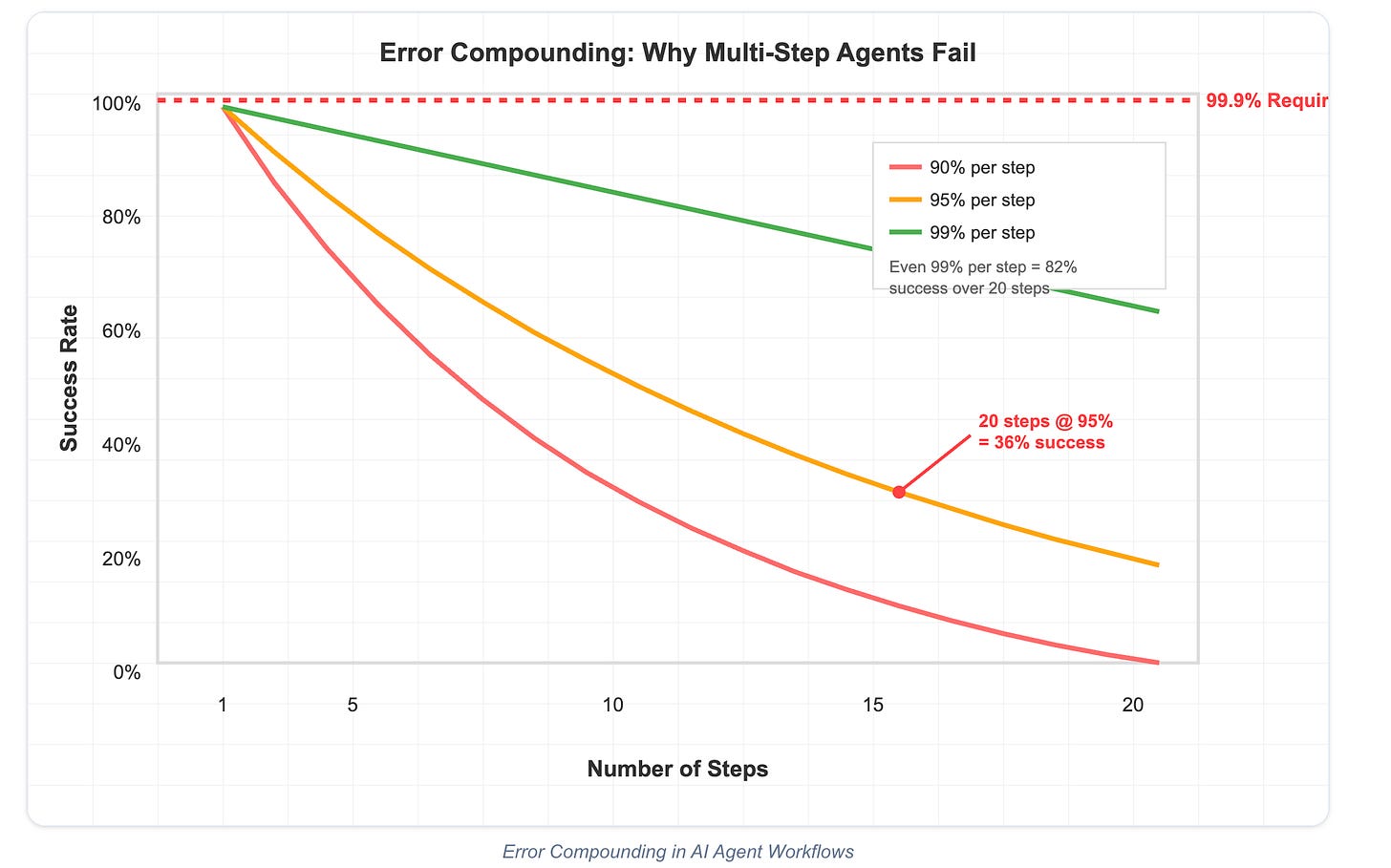

Error rates compound exponentially in multi-step workflows. 95% reliability per step = 36% success over 20 steps. Production needs 99.9%+.

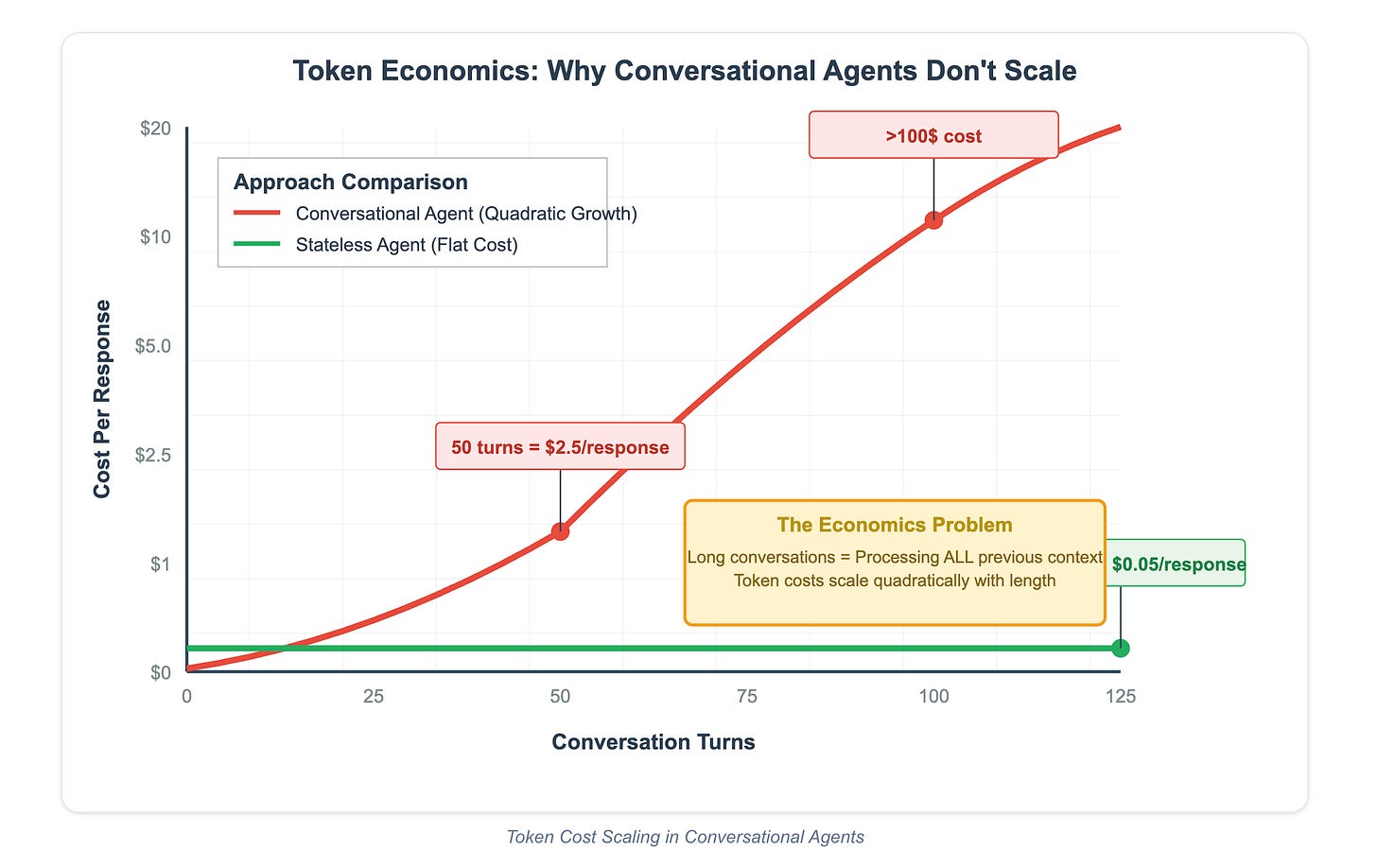

Context windows create quadratic token costs. Long conversations become prohibitively expensive at scale.

The real challenge isn't AI capabilities, it's designing tools and feedback systems that agents can actually use effectively.

There’s a helpful diagram showing how multi-step agents fail with the increased number of steps necessary:

He points out that production systems need 99.9%+ reliability. If each step in an agent workflow has roughly 95% reliability, the overall success rate diminishes with every added step (as seen below). This poses a real problem for the claims and hopes around multi-agent, multi-step workflows, especially in the context of Services-as-a-Software and those looking to have AI and agents augment and replace human labor.

5 steps = 77% success rate

10 steps = 59% success rate

20 steps = 36% success rate
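The arithmetic behind those figures can be sketched in a few lines of Python. This is a minimal illustration, not code from the article: it assumes every step succeeds independently with the same probability, and the function names are hypothetical. Under that assumption, the 5, 10 and 20 step cases come out at roughly 77.4%, 59.9% and 35.8%, in line with the figures quoted above.

```python
def workflow_success_rate(per_step_reliability: float, steps: int) -> float:
    """Chance that every step succeeds, assuming steps fail independently."""
    return per_step_reliability ** steps


def required_step_reliability(target_success: float, steps: int) -> float:
    """Per-step reliability needed to hit an end-to-end target (same assumption)."""
    return target_success ** (1.0 / steps)


if __name__ == "__main__":
    # End-to-end success for a workflow where each step is 95% reliable.
    for steps in (5, 10, 20):
        rate = workflow_success_rate(0.95, steps)
        print(f"{steps:>2} steps at 95% per step: {rate:.1%} end-to-end")

    # What each step would need to achieve for 99.9% over 20 steps.
    needed = required_step_reliability(0.999, 20)
    print(f"Per-step reliability for 99.9% over 20 steps: {needed:.4%}")
```

Running the inverse calculation makes the gap concrete: to reach 99.9% end-to-end over 20 steps, each individual step would need to be well above 99.99% reliable.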

Key characteristics of a successful agentic system include bounded contexts, verifiable operations and (sometimes) human decision points at critical junctions. He explains that when you stray from these themes, the math simply works against a successful agentic workflow and implementation.

Another excellent point made is the token economics, using conversational agents as an example of how it doesn’t scale:

The more turns in the conversation the higher the costs. While this article isn’t focused on security, you can see how this would play out if you look at SecOps, AppSec, or GRC as cyber specific examples. For example, using conversational agents for threat hunting, compliance assessments and remediations, vulnerability management and more will likely require multi-turn conversations that could have token based economic implications that make them unfeasible or expensive.
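To make the quadratic growth concrete, here is a rough sketch of the token math. It rests on simplifying assumptions that are mine, not the article's: every turn adds the same number of tokens, the full conversation history is resent as input on each turn, and there is no caching or summarization. The function name and the 500 tokens-per-turn figure are purely illustrative.

```python
def cumulative_input_tokens(turns: int, tokens_per_turn: int) -> int:
    """Total input tokens processed when each turn resends the whole history.

    Turn k carries k * tokens_per_turn tokens of context, so the total is
    tokens_per_turn * turns * (turns + 1) / 2, which grows quadratically.
    """
    return sum(turn * tokens_per_turn for turn in range(1, turns + 1))


if __name__ == "__main__":
    # Illustrative figure only: 500 tokens added per turn.
    for turns in (10, 50, 100):
        total = cumulative_input_tokens(turns, tokens_per_turn=500)
        print(f"{turns:>3} turns: {total:,} input tokens processed")
```

Under these assumptions, a conversation with 10 times as many turns processes roughly 90 times as many input tokens, which is why long multi-turn sessions get expensive quickly.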

One thing I thought was interesting was this statement:

“The most successful agents in production aren’t conversational at all. They’re smart, bounded tools that do one thing well and get out of the way”

This makes me think we will most certainly see agent “sprawl” to come, with agents exponentially outnumbering human users, creating a strong need for agentic governance as well as for tackling problematic topics such as agentic IAM, zero trust and the threats pointed out in OWASP’s Agentic AI Threats and Mitigations publication.

The author also highlights challenges he dubs the “tool engineering reality wall”, where he discusses the need to have effective tool design, accounting for factors such as not overwhelming context windows, agents knowing if operations fully or partially succeeded, communicating state changes without burning tokens and much more.

Another excellent point raised is the “integration reality check”, where he highlights the complexity of integrating agents with enterprise systems, such as a lack of clean APIs, partial failure modes, authentication flow complexity, rate limits and more. This line in particular jumped out at me:

“The companies promising "autonomous agents that integrate with your entire tech stack" are either overly optimistic or haven't actually tried to build production systems at scale. Integration is where AI agents go to die.”

The article isn’t all doom and gloom though, and highlights why the dozen agentic systems he’s built do work, which includes key aspects such as:

Humans reviewing UX generated interfaces before deployment

Humans maintaining control over database integrity

Defining discrete, well-scoped tasks

Leveraging robust deployment pipelines with review and analysis prior to deployment

Having functional rollback mechanisms in place

The author suspects venture-funded “fully autonomous agent” startups will hit economic walls, as they struggle to scale beyond demos, adoption will stagnate when it comes to real workflows, and the real winners will be those building “constrained, domain-specific tools that use AI for hard parts while maintaining human control or strict boundaries over critical decisions”.

While many may disagree with this piece, I thought it was a reasoned, insightful rebuttal to the current bubble and hype. This isn’t to say agentic AI is without promise and potential, but it is clearly not without its problems either.

The piece closes by recommending building the right way, with these key principles:

Defining clear boundaries

Designing for failure

Solving the economics

Prioritizing reliability over autonomy

Building on solid foundations

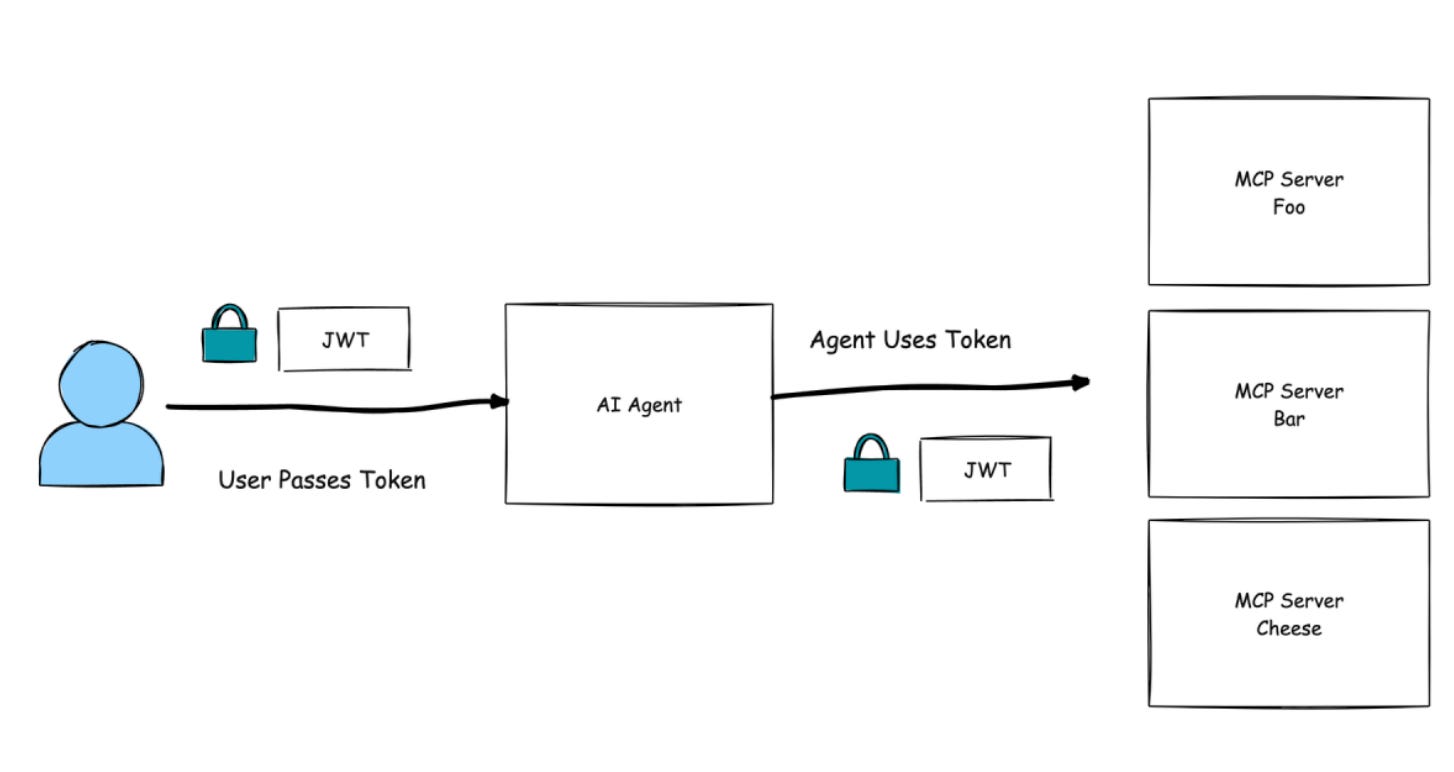

Enterprise Challenges with MCP Adoption

We continue to see widespread excitement around Agentic AI and protocols such as MCP. That said, the security community has raised concerns around IAM, inherent gaps in the original spec and more.

One of those folks is Christian Posta, who had previously written about the MCP spec in an enterprise context. Christian recently wrote a great article discussing how, despite recent improvements to the MCP spec itself, enterprise challenges with adoption still remain, including key questions such as:

How to onboard/register/discover MCP services

How much of the MCP authorization spec to adopt

How will they manage upstream API/service permissions and consent

As Christian points out, it seems like every vendor is quickly standing up MCP servers, as are others across the Internet, and as it turns out, many are connecting to/with these servers with little consideration for the security implications of doing so. Much like SaaS, Christian says organizations should have a way to inventory and onboard new MCP connections and integrations as well as a method to assess and approve new MCP integrations for security risks.

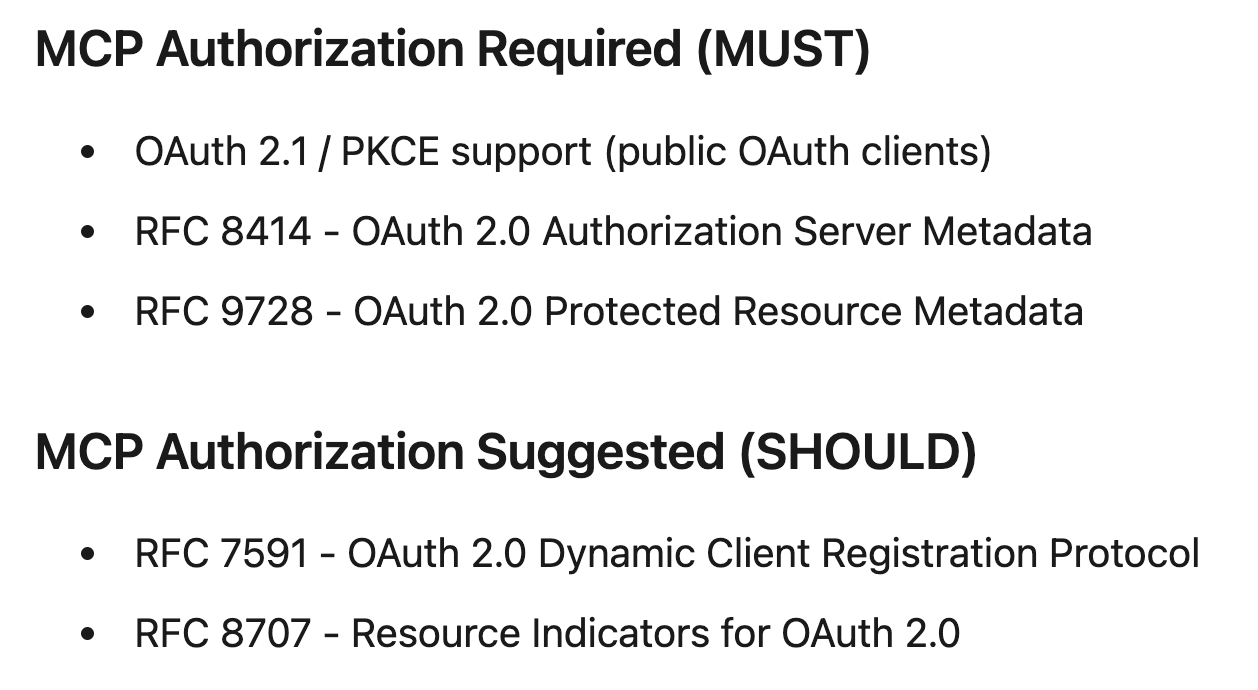

Christian also lays out aspects of the MCP Authorization spec which MUST and SHOULD be implemented:

As he closes with, MCP Services and the MCP protocol more broadly offer a lot of innovative opportunity for enterprises, but they aren’t without security implications too. As they say, an ounce of prevention is worth a pound of cure, but this implies organizations actually take the time to thoughtfully and securely think through what they’re implementing and integrating with, which, as we all know, isn’t likely, especially against the FOMO backdrop around AI.

AppSec, Vulnerability Management & Supply Chain Security

FedRAMP Vulnerability Management Evolution 🚀

We recently saw FedRAMP® release its Continuous Vulnerability Management RFC. It's a long-overdue evolution towards context-based vulnerability prioritization. This includes accounting for known exploitation, exploitability, and reachability, along with business context (e.g., criticality, compensating controls, etc.)

FedRAMP provides a summary and motivation of the RFC as laid out below:

This standard’s intent is to ensure providers promptly detect and respond to critical vulnerabilities by considering the entire context over Common Vulnerability Scoring System (CVSS) risk scores alone, prioritizing realistically exploitable weaknesses, and encouraging automated vulnerability management. It also aims to facilitate the use of existing commercial tools for cloud service providers and reduce custom government-only reporting requirements.

Gone are the days of prioritizing vulnerabilities based on legacy CVSS base scores without consideration for the above criteria. This wasted the time of developers and engineers and failed to remediate real organizational risks.
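As a rough illustration of what context-based prioritization can look like in practice, here is a small Python sketch. To be clear, this is not FedRAMP's methodology or any scoring defined in the RFC: the `Finding` fields, the ordering of the signals and the placeholder identifiers are all assumptions made for the example. It simply ranks findings by known exploitation first, then reachability, then asset criticality, and only uses the CVSS base score as a tie-breaker.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    """Hypothetical vulnerability record; field names are illustrative only."""
    identifier: str
    cvss_base: float
    known_exploited: bool   # e.g. evidence of exploitation in the wild
    reachable: bool         # vulnerable code path is actually reachable
    asset_critical: bool    # business context: the asset is critical


def priority_key(finding: Finding) -> tuple:
    # Context signals outrank the raw CVSS base score (an assumed ordering).
    return (
        finding.known_exploited,
        finding.reachable,
        finding.asset_critical,
        finding.cvss_base,
    )


def prioritize(findings: list[Finding]) -> list[Finding]:
    """Return findings ordered from most to least urgent."""
    return sorted(findings, key=priority_key, reverse=True)


if __name__ == "__main__":
    backlog = [
        Finding("EXAMPLE-A", 9.8, known_exploited=False, reachable=False, asset_critical=False),
        Finding("EXAMPLE-B", 6.5, known_exploited=True, reachable=True, asset_critical=True),
        Finding("EXAMPLE-C", 7.5, known_exploited=False, reachable=True, asset_critical=True),
    ]
    for finding in prioritize(backlog):
        print(finding.identifier, finding.cvss_base)
```

In this toy backlog the 9.8 "critical" lands last because nothing suggests it is exploited or reachable, while the 6.5 with known exploitation on a critical asset rises to the top. A real program would weigh these signals with far more nuance, including compensating controls.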

In this article, I break down the rise of CVEs contrasted against actual exploitation. I also dive into the use of reachability analysis for vulnerability prioritization, citing some of the helpful resources from my friend James Berthoty.

I discuss some of the innovative offerings from folks such as Chainguard and Endor Labs that allow teams to focus on their core competencies and deliver value to customers rather than vulnerability toil. I share the link to a live deep dive Ron Harnik and I did on the FedRAMP RFC and vulnerability management more broadly.

This is great work by Pete Waterman and the FedRAMP team, and I'm glad to see them bring innovation to cloud security and compliance, and hope other compliance frameworks follow suit!

Microsoft Software Being Exploited (Again)

News broke this week that Chinese hackers were actively exploiting flaws in Microsoft’s software, this time in SharePoint. The activity involves Chinese APTs dubbed “Linen Typhoon” and “Violet Typhoon” and targeted on-premises versions of SharePoint, for which patches have now been rolled out.

This comes on the heels of news in 2024, when U.S. government officials criticized Microsoft’s handling of the breach of U.S. government officials’ email accounts. That scrutiny included a once-active Cyber Safety Review Board (CSRB) investigation of the Microsoft Outlook incidents, which has since stalled due to political changes and impacts at CISA/DHS.

Microsoft also made headlines recently when ProPublica published an extensive report describing how MSFT was using engineers in China as part of its support for the U.S. DoD’s Azure cloud services. That is truly astounding when you realize this was happening at the same time as the MSFT Exchange incidents impacting the U.S. Government, the CSRB investigation, inquiries from Congress on national security risks from MSFT, and more.

All the while, MSFT has continued to receive large Federal/DoD IT contracts too, really calling into question the power of lobbying and the inability of the Government to change its purchasing patterns despite massive risks from one of its largest IT vendors.

The team at Rapid7 recently shared an exploit module for the Microsoft SharePoint ToolPane Unauthenticated RCE (CVE-2025-53770 and CVE-2025-53771).

Google’s AI “Big Sleep” Discovers a Zero Day

Google recently shared that their LLM-assisted vulnerability discovery framework “Big Sleep” helped discover a zero day impacting the SQLite open source database engine, prior to hackers being able to exploit it in the wild.

"Through the combination of threat intelligence and Big Sleep, Google was able to actually predict that a vulnerability was imminently going to be used and we were able to cut it off beforehand," Kent Walker, President of Global Affairs at Google and Alphabet, said.