Agentic AI Threats and Mitigations

A deep dive into OWASP's Agentic AI Threats and Mitigations Publication

By now, it should be clear to anyone following industry trends that we’re diving headfirst towards widespread agentic AI adoption and implementation, with use cases spanning nearly every industry vertical.

This applies in Cyber as well, with startups, investors, and innovators experimenting across sub-domains in cyber, such as SecOps, GRC, and AppSec, to name a few. I specifically laid this out in a comprehensive article I wrote titled “Agentic AI’s Intersection with Cybersecurity”.

However, the widespread rollout of agents and agentic workflows presents new threats and risks. That's why we will examine a recent OWASP publication, “Agentic AI Threats and Mitigations.”

Interested in sponsoring an issue of Resilient Cyber?

This includes reaching over 45,000 subscribers, ranging from Developers, Engineers, Architects, CISO’s/Security Leaders and Business Executives

Reach out below!

What is an Agent?

First, the paper does a great job defining what an AI “Agent” even is:

“An agent is an intelligent software system designed to perceive its environment, reason about it, make decisions, and take actions to achieve specific objectives autonomously.”

This means agents differ from LLMs and other aspects of tech by being able to perceive the environment and systems around them, reason about those perceptions, and make decisions based on those inputs, either semi- or fully autonomously.

They also lay out some key characteristics of Agents, which they cite as:

Planning & Reasoning

Reflection

Chain of Thought

Subgoal Decomposition

Memory/Statefulness

Action and Tool Use

We’re seeing this across countless industries and in cyber as startups and innovators looking to employ agents and agentic workflows to address systemic issues such as compliance modernization and automation, vulnerability management, AppSec, and more.

We’re also seeing the rise of new protocols and entire ecosystems to facilitate some of these characteristics, most notably with the rapid spread of the Model Content Protocol (MCP), which is being quickly deployed and used to facilitate the interaction with APIs, services, and tools for LLMs and agents.

Below is a great excerpt from an MCP paper I recently shared titled “MCP: Landscape, Security Threats and Future Research Directions”.

It’s easy to see the appeal of MCP, as it abstracts the need for a unique interaction with each external tool and resource for an AI application and instead allows them to be exposed via an MCP Server.

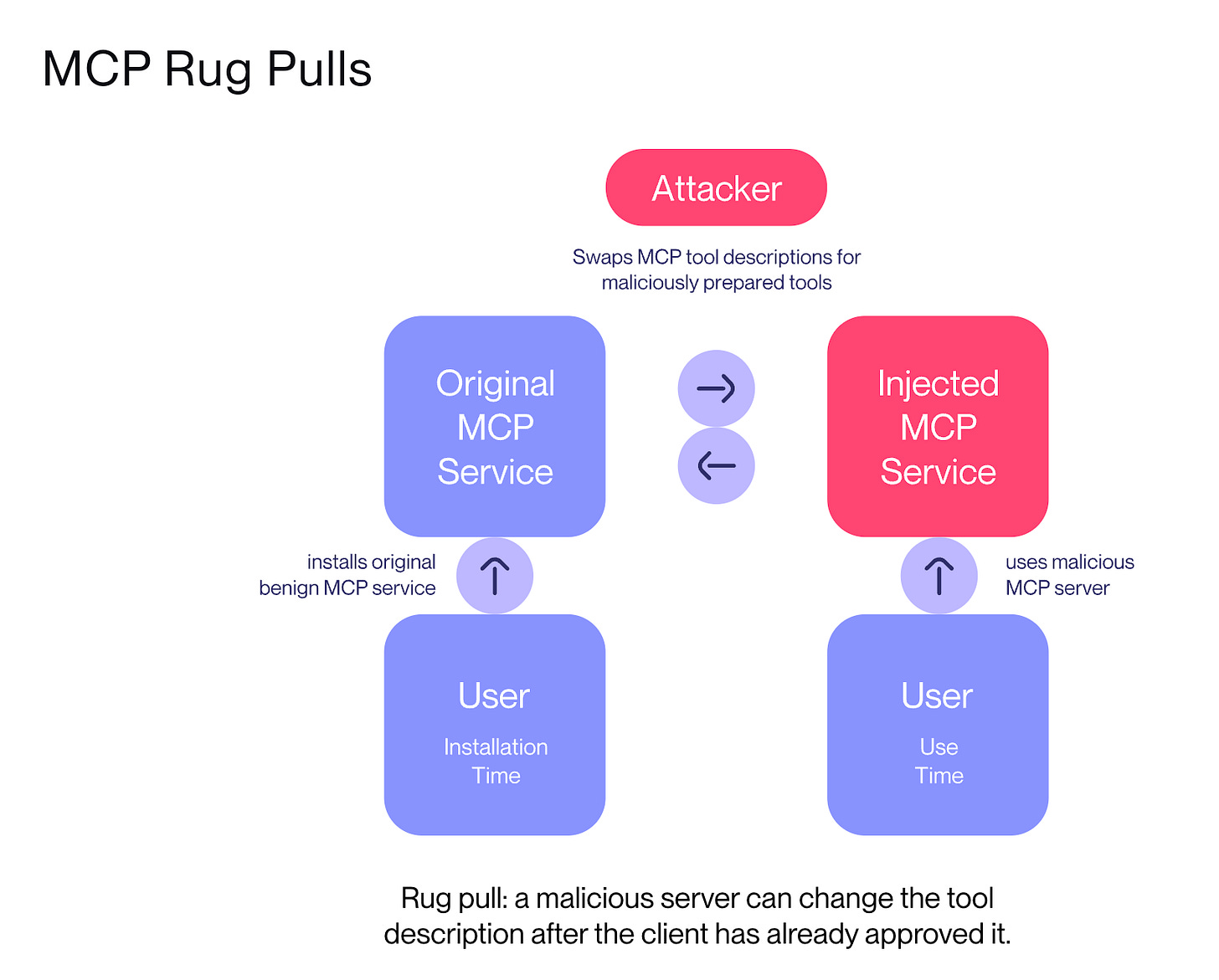

That said, as the paper goes on to show, MCP faces unique risks as well across the MCP Server lifecycle, from Creation to operation and Update, such as name collisions, backdoors, configuration drift, and more. Below in the article I’ll also show a real-world finding associated with MCP.

Below, OWASP provides a diagram that demonstrates Agentic LLM Applications, including examples of single and multi-agent scenarios:

OWASP cites LangChain, AutoGen, and CrewAI as some of the leading agentic frameworks. They even provide a comparison of some of the leading Agentic frameworks, which can be helpful for understanding how they function.

Agentic AI Reference Architecture

It can be difficult to conceptualize how these agents and agentic workflows will fit into enterprise architectures, so it is great to see OWASP provide examples for single-agent and multi-agent scenarios.

Each example has similarities when it comes to its core components, such as:

Application(s)

Agent(s)

Service(s)

You can think of these components coming together to facilitate various enterprise use cases. The application may have embedded agentic functionality, while the agents can and will likely be multimodal, accepting natural language inputs and images, files, audio, and video.

These inputs can be fed to internal or external LLMs, to the application, and to the environment to reason. Then, additional services, such as local or remote services and tools, can be engaged to perform additional tasks before providing an outcome to the user/organization.

While this is incredibly promising and has deep potential for value around automation, efficiency, and scalability of traditional manual cumbersome tasks, we can start to look at it from the security perspective and see changes it makes to the attack surface, attack vectors, potential vulnerabilities, and risks.

While not specific to only agents, the OWASP LLM Top 10 is a good reference point where you can see how some of these risks can come into play with agentic architectures, such as prompt injection, sensitive information disclosure, and excessive agency, especially as agents are empowered to interact with internal and external data sources, services, systems, and tooling.

It’s also not even just the agents themselves that should be part of your threat models and are potentially vulnerable, but also their associated credentials, as we see the exponential expansion of Non-Human Identities (NHI), which I’ve covered in previous articles, such as:

Additionally, the infrastructure and mechanisms to support the agentic workflows can and will be vulnerable, such as a recent example from Invariant Labs, where they showed how a vulnerability in MCP (which I mentioned above) could be used for “Tool Poisoning Attacks”, see below:

Agentic AI Patterns

The OWASP publication goes on to discuss a variety of existing and potential Agentic AI Patterns with different types of agents, such as:

Reflective Agent

Task-Oriented Agent

Coordinating Agent

Planning Agent

Human-in-the-Loop Collaboration

And many more. I won’t belabor the details in this article, but if you want to dig in, I recommend checking out the paper, which shows how vast the number of potential agent types and use cases can and will be.

Agentic AI Threat Modeling

As I mentioned above, while Threat Modeling is a tried and trusted foundational security practice, the introduction of LLMs and Agentic architectures opens some new considerations, both in terms of attack vectors and attack surfaces and potential risks and vulnerabilities.

OWASP specifically calls out attack vectors such as memory poisoning and tool misuse when it comes to agents and their autonomy and role within architectures. They also cite examples such as Remove Code Execution and Code Attacks code generation tools and scenarios where confused deputy vulnerabilities and privilege escalation could occur with agents performing unauthorized activities on behalf of users.

Fundamental controls such as least-permissive access control, which is also part of the recent trend around zero trust, are pivotal. That said, doing so in environments with 10-50x as many Non-Human Identities (NHIs) tied to agents and autonomous workflows at scale will be challenging in enterprise environments, given we already historically struggle with credential compromise, lateral movement, and least-permissive access control for human identities, let alone NHI’s, which there are and will be exponentially more of as agentic architecture adoption grows.

There’s always a painful irony in the fact that emergent technologies can both help and harm security, like cloud-native infrastructure's ability to quickly set up ephemeral, disposable environments to enable their attacks, complicating attribution and forensics.

One interesting risk OWASP points out is the potential for cascading hallucinations. It is well known that one of the problematic aspects of LLMs is that they hallucinate (e.g., make stuff up). When we have agentic architectures with statefulness/memory, external tool use, and multi-agent interactions, this risk can be amplified at scale, as the hallucinations have cascading impacts.

As the saying goes, complex systems fail in complex ways.

Their paper enumerates several other key risks, such as overwhelming the human-in-the-loop due to complex agentic interactions, manipulating the agent's intent, traceability concerns, and more, which is why I recommend reading the full paper.

What is particularly helpful is the threat model summary they provide, which shows a notional agentic reference architecture with specific threat IDs, names, descriptions, and mitigations captured in an accompanying table.

Each of the identified threats has specific descriptions and mitigations they recommend, which can be helpful when threat modeling agentic architectures and thinking through the complexity of these environments and how they can be abused.

Below is just a snippet of that table:

The remainder of the OWASP AI Threats and Mitigations publication is dedicated to their “Agentic Threats Taxonomy Navigator”, which is a great way to identify and assess threats described in the above agentic threat model.

While not suited for this article, this is a great resource for organizations trying to understand the potential threats and risks to agentic architectures and the associated mitigations that can and should be implemented.

This is a timely resource as security practitioners look to keep pace with the rapid business adoption of Agentic AI and implement practical measures to enable secure adoption.