Resilient Cyber Newsletter #84

Global Cyber Spend, SaaS-Pocalypse (or not), OpenClaw Security Roadmap, RSAC Innovation Sandbox Finalists, Identity Context Decay & The Path to 50K Annual CVEs,

Week of February 10, 2026

Welcome to issue #84 of the Resilient Cyber Newsletter!

If you read last week’s issue (#83), you know I was raising alarms about OpenClaw (formerly Moltbot) and the security nightmare it represents. Well, this week the situation escalated dramatically. Gartner issued uncharacteristically strong guidance recommending organizations block OpenClaw entirely, calling it “a dangerous preview of agentic AI” with “insecure by default” risks.

The Register ran four separate articles in three days about OpenClaw security issues. And Ken Huang applied the MAESTRO threat modeling framework to OpenClaw, identifying critical vulnerabilities including plaintext credential storage and model provider API key exposure - exactly the risks I highlighted when discussing the OWASP Agentic Top 10.

But it’s not all doom and gloom.

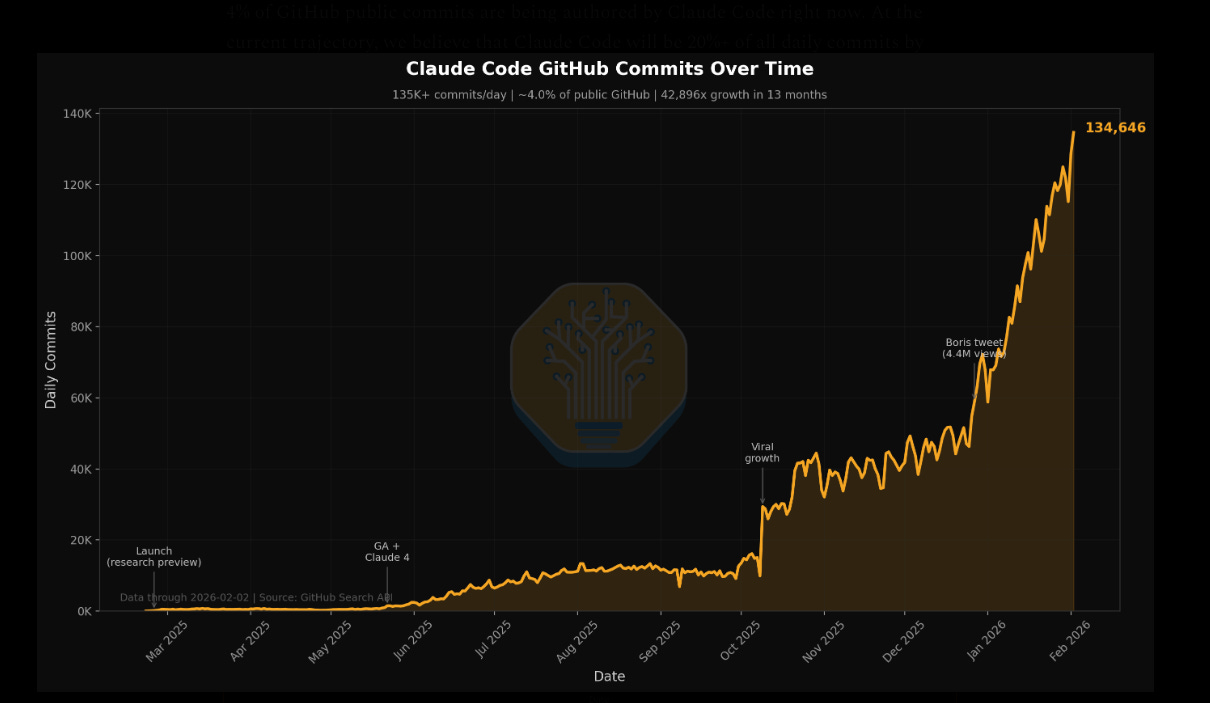

We also got OpenAI’s Frontier platform announcement, Dylan Patel ’s deep dive on Claude Code hitting an inflection point (4% of GitHub commits and climbing), and some genuinely useful frameworks for thinking about where we’re headed, from Dan Shapiro’s “Five Levels” to a16z’s pushback on the “death of software” narrative.

Let’s unpack a packed week.

Interested in sponsoring an issue of Resilient Cyber?

This includes reaching over 31,000 subscribers, ranging from Developers, Engineers, Architects, CISO’s/Security Leaders and Business Executives

Reach out below!

Deepfake Phishing Is Already Targeting Teams — Are You Ready?

For years everyone has bemoaned security awareness training, especially those of us in security.

That’s why I found Adaptive’s AI-native approach to Security Awareness Training refreshing, and critical.

Especially with the rise of deepfake and AI-powered attacks, the potential for incidents is as high as ever, and the phrase “humans are the weakest link” has an even more critical ring to it.

Modern phishing has gone multi-modal, from AI voices, videos and even deepfakes of executives. But don’t just take my word for it, check it out yourself.

Protect your team with:

AI-driven risk scoring that reveals what attackers can learn from public data

Deepfake attack simulations featuring your executives

Cyber Leadership & Market Dynamics

Global Cybersecurity Spending to Reach $311B in 2026

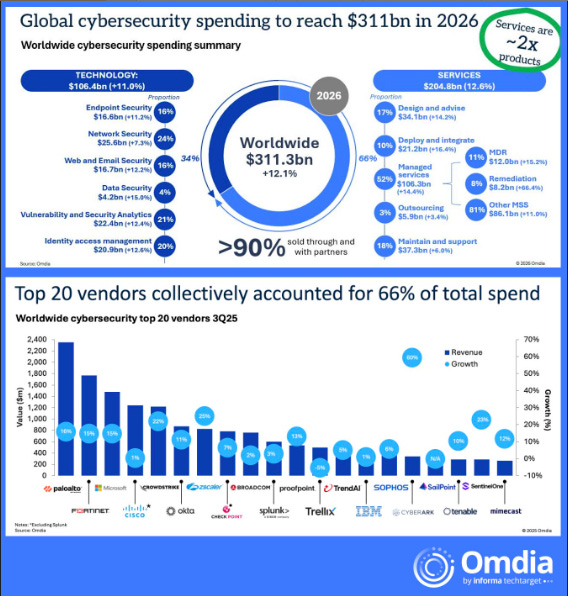

One of my go to market analysts, Jay McBain, who is the Chief Analyst for Channels, Partnerships & Ecosystems at Omdia pointing out that global cyber spend is projected to hit $311B, growing at 12.1% YoY. What was super interesting (but not surprising), is he points out that partner led services are now more than 2x product sales, and attached services are poised to outgrow products in 2026.

In his own words:

“Buyers don’t want another dashboard and struggle to find t he cyber (or AI) talent internally to protect and defend their organizations”.

"The market has shifted from buying tools to buying outcomes”.

I commented pointing out that this makes sense, given cyber isn’t something you “buy” but something you “do”, and were seeing a blur between products and services as vendors look to ensure their products deliver outcomes for customers and ensure stickiness versus sheflware.

SaaSpocalypse…or Not

One massive story this past week has been that of the “SaaS-Pocalypse”, where speculation around organizations building their own software with AI is driving major drops in stock prices for leading SaaS vendors and product companies. It was a hot topic for leading VC’s such as a16z and Harry Stebbings, as seen below:

That said, others, such as the always insightful Benedict Evans joking posted:

“I can’t believe I have to actually explain why this is bullshit”.

This is a topic Caleb Sima from our own cyber world has highlighted too, in his piece “The Era of the Zombie Tool”, where he said sure, we may see an uptick in organizations deciding to build vs. buy, but in time we will see a slew of zombie tools that never got maintained or kept up.

This is the core of the argument. One side is claiming the ability to build with AI will lead to a massive shift in SaaS usage, while others say it is far more complicated than that, and upkeep and maintenance is a real concern.

I tend to fall in the latter category and think it is more nuanced. We will see some deciding to build their own tools in some cases, especially for organizations with the teams and resources to do so. That said, they won’t be replacing complex ERM, CRM etc. type systems overnight, as those are incredibly complex, deeply integrated and require significant ongoing maintenance and customization, often being done by large venture backed SaaS companies.

I do think we will see this play out as adding pressure to SaaS companies to be more price competitive, deliver more value, and actually make efforts to meet customer demands due to the fears that they can potentially be replaced in some cases, either by internal builds, or disruptors using AI to build a better competing offering.

National Cyber Director Calls for Industry Help Cutting Cybersecurity Regulations

Sean Cairncross is taking a different tone than his predecessors. At an ITIC event, he emphasized the administration wants to be a “partner with industry rather than a scold” and called on the private sector to help identify regulatory friction points. His message: “You know your regulatory scheme better than I do.” He’s also pushing for Congress to renew the Cybersecurity Information Sharing Act.

I’m cautiously optimistic about the partnership framing, though execution matters more than rhetoric. The forthcoming cybersecurity strategy, which Cairncross says is coming “sooner rather than later,” will be the real test.

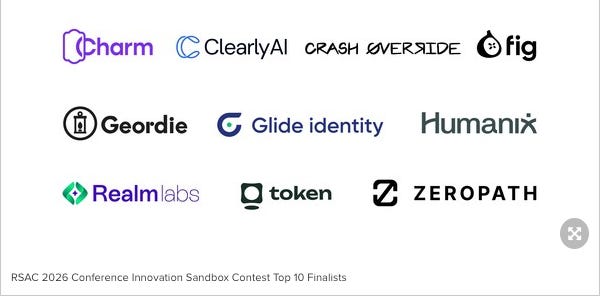

RSAC Announces Innovation Sandbox Top 10 Finalists

RSAC is around the corner and one thing folks keep an eye on is the RSAC Innovation Sandbox context. The RSAC recently released their top 10 finalists, with a mix of promising and innovative teams. The mix brings a blend of AI, Agents, Governance, AppSec and more.

The Week Anthropic Tanked the Market and Pulled Ahead of Rivals

The WSJ captured a pivotal week for Anthropic. While I’ve been focused on the security implications of Claude Code’s adoption, the market dynamics are equally striking. As I noted in issue #82, Boris Cherny’s viral post showing 259 PRs in 30 days - all AI-written - signals a fundamental shift.

The SemiAnalysis data I’ll discuss below shows this isn’t an outlier. Anthropic’s position in the enterprise and developer markets is strengthening rapidly, which has profound implications for how we think about AI security investments.

The CISO in 2026: Enabling Business Innovation

This piece aligns with something I’ve been advocating for years: security leaders need to shift from being gatekeepers to enablers. In the age of AI agents and rapid development velocity, the CISO who says “no” without offering alternatives gets routed around.

The ones who thrive will be those who understand the business context, can articulate risk in business terms, and enable innovation with appropriate guardrails. It’s a mindset shift that not everyone in our field has made.

Polish Grid Systems Targeted in Cyberattack Had Little Security

Kim Zetter’s reporting on the Polish grid attack is a sobering reminder that while we’re debating AI agent security, critical infrastructure still has fundamental security gaps. The report reveals systems with minimal protections were successfully targeted. This isn’t a hypothetical threat - it’s operational reality. As I’ve discussed in prior issues, the intersection of AI capabilities and vulnerable infrastructure is a scenario that keeps many of us up at night.

The Third Golden Age of Software Engineering - Thanks to AI, with Grady Booch

We’re all witness to the massive transformative impact AI is having on software engineering and development. Even though I’m not a developer myself, I found this conversation from The Pragmatic Engineer to be a fascinating and informative one, with longtime software leader Gary Booch. Gary discussed:

Software engineering is in its third golden age: The field has gone through several transformations, with each “golden age” introducing new levels of abstraction. We are currently in the third such period, characterized by the rise of whole libraries and packages as part of platforms.

AI is a continuation of abstraction: AI coding assistants are not an end to software engineering but rather a new shift in the level of abstraction, accelerating the use of existing libraries and packages.

Human skills remain crucial: Despite advancements in automation, human judgment, systems thinking, and the ability to balance technical, economic, and ethical considerations remain central to software engineering.

Adaptation is key to thriving: The history of software engineering shows that those who understand and adapt to new levels of abstraction and technology shifts are the ones who succeed and thrive

Vega Raises $120M Series B to Rethink How Enterprises Detect Cyber Threats

We continue to see strong security funding signals, with a recent example being Vega, who announced their $120M Series B. Vega is a really interesting player from my perspective, as they look to bring security to where data resides, as opposed to trying to aggregate security data, which is a typical approach around platforms such as Splunk.

GitGuardian Raises $50M Series C to Address NHI Crisis and AI Agent Security Gap

Another one on the radar this week is GitGuardian, who announced a $50M Series C focusing on NHI’s and Agentic AI Security. We continue to see a strong focus of venture backed startups and incumbents alike looking to tackle identity security.

Nucleus Security Raises $20M Series C To Address Exposure Management

Aaaaand another one. This time, Nucleus Security who has a strong background in VulnMgt announced they are raising a $20M Series C to focus on the demand for exposure management.

AI

AI is Ready for Production, Security, Risk and Compliance Isn’t

In this episode of Resilient Cyber, I sit down with James Rice, VP of Product Marketing and Strategy at Protegrity.

We discuss how traditional approaches to security aren’t solving the AI security challenge, the importance of data-centric approaches for secure AI implementation, and addressing issues such as AI data leakage.

Some Claim OpenClaw Is a Security Dumpster Fire - Gartner Recommends Blocking

In issue #82, I warned about Moltbot/OpenClaw’s security issues. This week, it got worse. Gartner used uncharacteristically strong language, calling it “a dangerous preview of agentic AI” with “insecure by default” risks. CVE-2026-25253 (CVSS 8.8) was disclosed, followed by three more high-impact advisories in three days. Snyk found 7.1% of ClawHub skills expose credentials. Laurie Voss, founding CTO of npm, summarized it perfectly: “OpenClaw is a security dumpster fire.” Cloud providers rushed to offer OpenClaw-as-a-service anyway.

Gartner’s advice: block downloads, stop traffic, rotate any credentials it touched. The speed of adoption despite obvious security issues validates every concern I’ve raised about the gap between AI capability and security maturity.

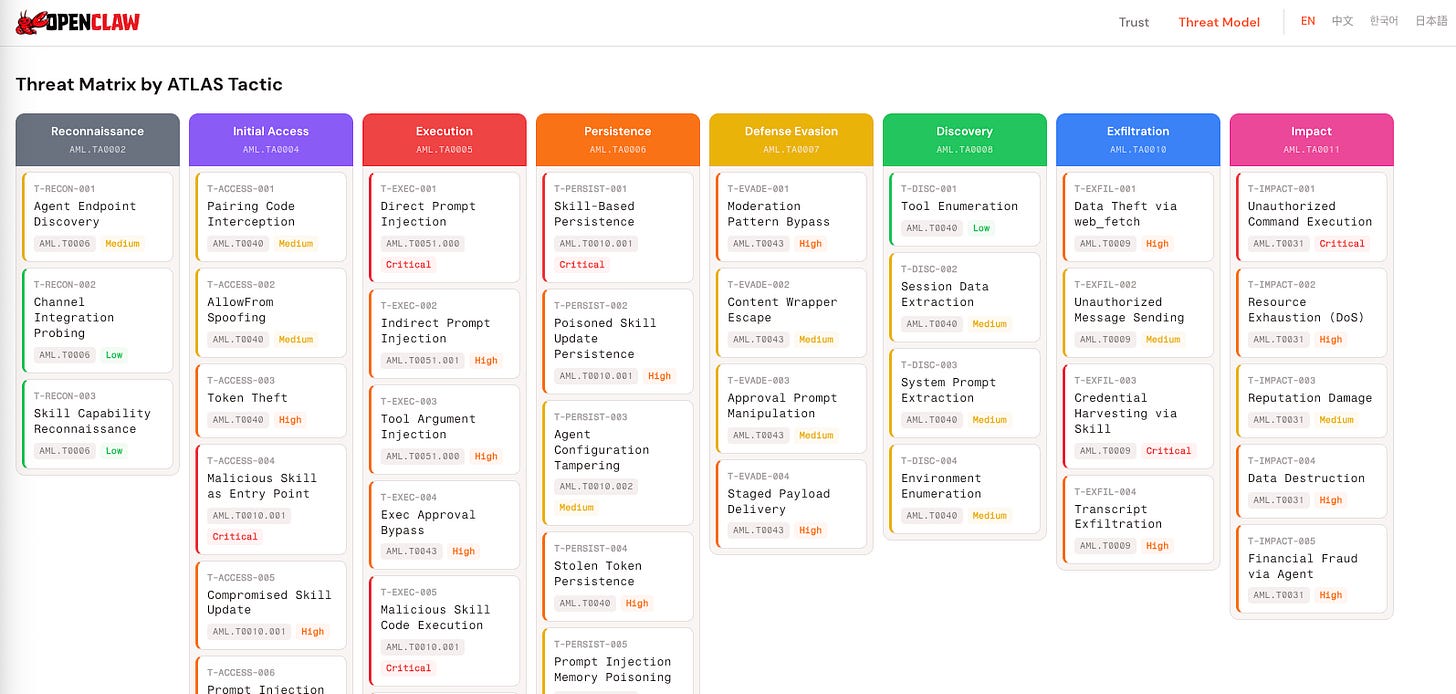

OpenClaw Threat Model: MAESTRO Framework Analysis

Ken Huang , who co-chairs AI Safety Working Groups at CSA and contributed to OWASP’s LLM Top 10 (alongside my work on the Agentic Top 10), applied the MAESTRO 7-layer threat model to OpenClaw. The findings confirm what we’ve been warning about: critical-severity API key exposure in config files, plaintext credential storage for OAuth tokens and pairing credentials, and high-severity prompt injection via file uploads.

MAESTRO provides the structured methodology our industry needs for agentic AI threat modeling. If you’re deploying any AI agents, this analysis is essential reading.

A New Era in Computing Security

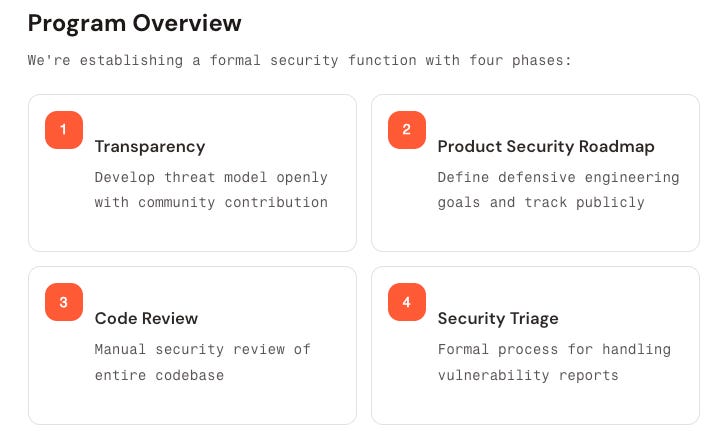

That's how this latest release from Jamieson O'Reilly, 🦄 Peter Steinberger and the OpenClaw team frames things, and, they're right. They lay out the change that agents introduce and also a program overview of the security function/program for OpenClaw, which as a reminder is an open source program run by unpaid volunteers.

Agents flip the security paradigm of decades past on its head, as AI agents can execute capabilities, have access to messaging communications, read and write files in the workspace, access connected services, carry out computer use and much more, all in production environments.

They also have risks that legacy software didn't have, including direct and indirect prompt injection, tool abuse and, novel supply chain vectors and increased identity complexity. It's awesome to see the OpenClaw team proactively establishing a security approach focused on Transparency, Code Review, Security Triage and a Product Security Roadmap.

They also created a comprehensive Threat Model, mapped to MITRE's MITRE ATLAS from Reconn through Impact, along with critical attack chains, adn defined trust boundaries. What I personally think is so cool is to see folks such as Jamieson not just find problems but help fix them for the community as well, especially for a project seeing rapid and widespread adoption and deployment.

Astrix Launches OpenClaw Scanner

As OpenClaw adoption continues to grow, so do concerns around security. Several teams have released helpful open source tools. Among those are Astrix, leaders in the NHI/Agentic AI Identity space. They released OpenClaw Scanner, and it can help identify footprints of OpenClaw by:

Connects to existing EDR platforms such as CrowdStrike, or Microsoft Defender using read-only access

Runs locally inside the organization’s environment as a Python-based script

Requires no endpoint execution, no new infrastructure, and no cloud connectivity

Produces a portable HTML report that stays entirely within the local perimeter

This is a helpful resource for those looking to get a handle on understanding where OpenClaw is running in their environments.

Kevin Mandia’s Take on the Current State of Cyber and Agentic AI

One interview I caught this week was with Kevin Mandia, longtime industry founder/leader on a podcast. He was giving his take on the state of cyber and agentic AI, and some of his thoughts were interesting.

Cybersecurity Breaches Are Inevitable and Sophisticated: Mandia emphasizes that security breaches are no longer a “so what” situation and are an unavoidable reality. He highlights the long history of cyber warfare, noting daily intrusions from nations like China and Russia since the mid-1990s. He also points out the severe business impact of breaches since the late 90s, underscoring that even major companies cannot expect to constantly pitch a perfect defense against nation-state attacks.

AI Agents are a Game-Changer in Cyber Warfare: Mandia warns that AI agents are emerging as highly effective hackers, surpassing previous threats. He explains that AI allows for the automation of human thought, leading to “swarms of agents” that can communicate, learn, and coordinate at speeds humans cannot. This dramatically increases the productivity of cyber operations, making AI-native developers “hundreds of times” more productive than traditional engineers.

Harnessing AI for Defense is Crucial: Despite the threats, Mandia is optimistic that AI is also the solution for defense. He argues that “good guys” must weaponize AI to build robust, autonomous defenses capable of withstanding the incoming “drone swarm” of cyberattacks. He advises CEOs to focus on “red-teaming” their critical infrastructure with AI-powered tools to understand their true security posture, rather than relying on outdated compliance dashboards.

For those unfamiliar, Kevin previously founded Mandiant which was acquired by Google for $5.4B. He now started a new company named “Armadin”, with $24M in seed funding aimed at using AI to test networks for vulnerabilities.

“Offense is going to be all-AI in under two years,” he said. “And because that’s going to happen, that means defense has to be autonomous. You can’t have a human in the loop or it’s going to be too slow.”

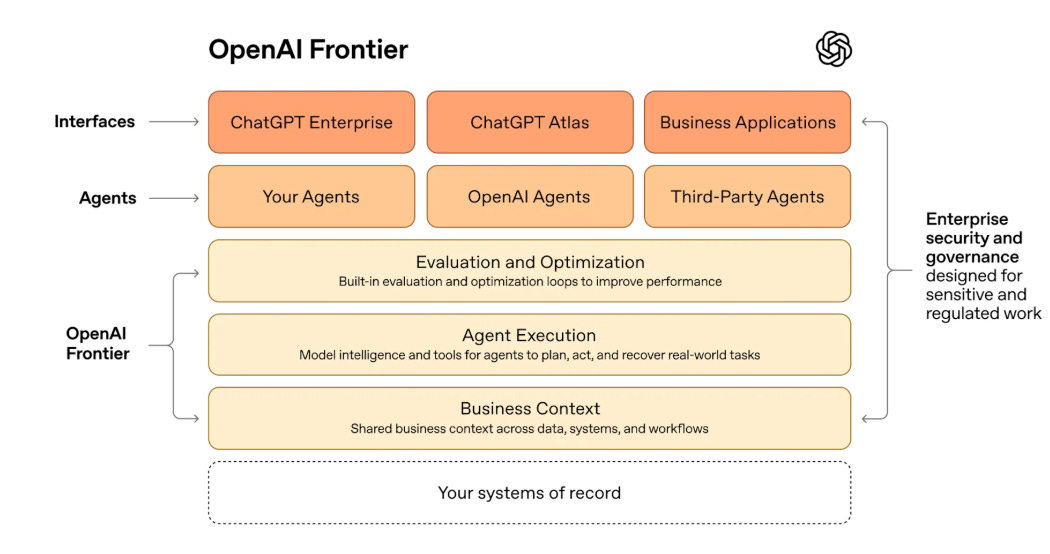

OpenAI Launches Frontier: Enterprise AI Agent Platform

OpenAI’s bid to become “the operating system of the enterprise” arrived this week. Frontier is an end-to-end platform for building and managing AI agents, compatible with agents from OpenAI, Google, Microsoft, and Anthropic. Key feature: agents “build memories” of tasks to improve over time. Initial customers include Uber, State Farm, Intuit, and Thermo Fisher.

Launched alongside GPT-5.3-Codex. Enterprise customers now account for ~40% of OpenAI’s business, expected to hit 50% by year-end. The multi-vendor compatibility is interesting - it suggests OpenAI sees the orchestration layer, not just the model layer, as strategically important.

Claude Code Is the Inflection Point

Dylan Patel dropped a must-read analysis. The headline stat: 4% of GitHub public commits are now authored by Claude Code, projected to hit 20% by end of 2026. But the deeper insight is about the economics: one developer with Claude Code can do what took a team a month, at $6-7/day versus $350-500 for a human. Anthropic’s quarterly revenue additions have overtaken OpenAI’s. Accenture signed a deal to train 30,000 professionals on Claude.

As I noted in issue #82, this velocity is coming whether we like it or not - the question is whether we can build security into these workflows. The 84% developer AI adoption rate from Stack Overflow’s survey confirms we’re past early adoption.

How to Build Secure Agent Swarms That Power Autonomous Systems

1Password follows up on their Moltbot warnings from issue #82 with constructive guidance. They built an autonomous SRE system where swarms of agents investigate reliability issues - some inspect logs, others correlate metrics, others evaluate remediation.

The key insight: agents must have explicit identity from creation through execution, every action must be attributable and auditable, and access must be scoped, time-bound, and revocable. When situations require elevated access, the system pauses for human approval. This is the model I’ve been advocating for: not blocking autonomous agents, but building proper identity and authorization frameworks around them.

AI Agents Don’t Need Better Secrets - They Need Identity

This piece crystallizes something I’ve been saying about non-human identity (NHI). The traditional secret-based approach breaks down with AI agents that need to act autonomously across multiple systems. Identity - with proper delegation chains, scoped permissions, and audit trails - is the answer.

As I discussed in issue #81, identity management for agents will require entirely new approaches to governance. The authentication delegation model, where users grant limited permissions via delegated tokens rather than sharing credentials, is the right direction.

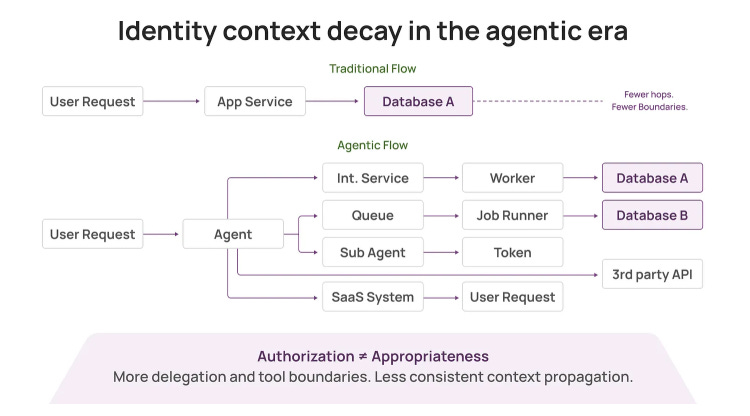

Identity Context Decay in the Agentic Era

Apurv Garg explores how agents can chain actions across platforms, achieving privilege levels no single human would possess. The excessive privilege problem mirrors traditional IAM challenges but occurs at machine speed. His recommendation: proof-of-possession tokens, delegation chains, and real-time revocation tied to risk. Without detailed logs, delegation graphs, and policy context, incident response and compliance fall apart.

This connects directly to the agentic identity challenges I highlighted in Ken Huang’s work in issue #81.

Clawing Out the Skills Marketplace: ClawHub Security Analysis

Pluto Security’s analysis of the ClawHub skills marketplace reinforces what Snyk found: the agent extension ecosystem is a supply chain nightmare. Skills execute with implicit trust and minimal vetting. In issue #82, I covered Cisco’s Skill Scanner tool release - but the problem is adoption.

Most organizations deploying OpenClaw aren’t scanning what they’re plugging in. The 26% vulnerability rate across agent skills I cited last week isn’t theoretical - it’s actively exploited.

The Five Levels: From Spicy Autocomplete to the Software Factory

Dan Shapiro’s framework for AI-assisted programming deserves attention. Level 0 is “spicy autocomplete” - the original Copilot. Level 3 is where you become a full-time code reviewer. Level 5 is the “Dark Factory” - nobody reviews AI-produced code, ever.

The goal shifts to proving the system works, not reviewing outputs. He notes Level 5 teams are fewer than 5 people doing what would have been impossible before. The security implications at each level are different. At Level 5, you need entirely different assurance models - automated testing, formal verification, continuous monitoring. Most organizations aren’t ready for this.

The Pinhole View of AI Value

Kent Beck offers a counterbalancing perspective to the AI hype. His “pinhole view” metaphor is useful: we’re seeing AI through a narrow aperture that makes certain capabilities look transformative while obscuring limitations.

For security practitioners, this is a reminder to maintain skepticism about claims that AI will solve security problems while also taking seriously its potential to create new ones. The truth is usually somewhere between “revolutionary” and “overhyped.”

The AI Coding Supremacy Wars: SaaSpocalypse and the Vibe Working Era

This piece tracks the competitive dynamics I’ve been following: Anthropic vs. OpenAI vs. Google in the coding agent space. The “SaaSpocalypse” framing - the idea that AI agents will disrupt traditional SaaS by doing work rather than providing tools - is worth considering.

For security, if AI agents are doing more work directly, the attack surface shifts from traditional web applications to agent orchestration and authorization systems. We need to follow the work to understand where security controls need to go.

Death of Software. Nah.

Steven Sinofsky at a16z pushes back on the “death of software” narrative. His core argument: “AI changes what we build and who builds it, but not how much needs to be built. We need vastly more software, not less.”

He draws parallels to the PC transition and streaming - predictions were wrong in both directions. I find this framing helpful. The security implications: we’re not reducing attack surface, we’re changing it. Domain expertise becomes more important as every domain becomes more sophisticated. The demand for security professionals who understand specific domains will grow.

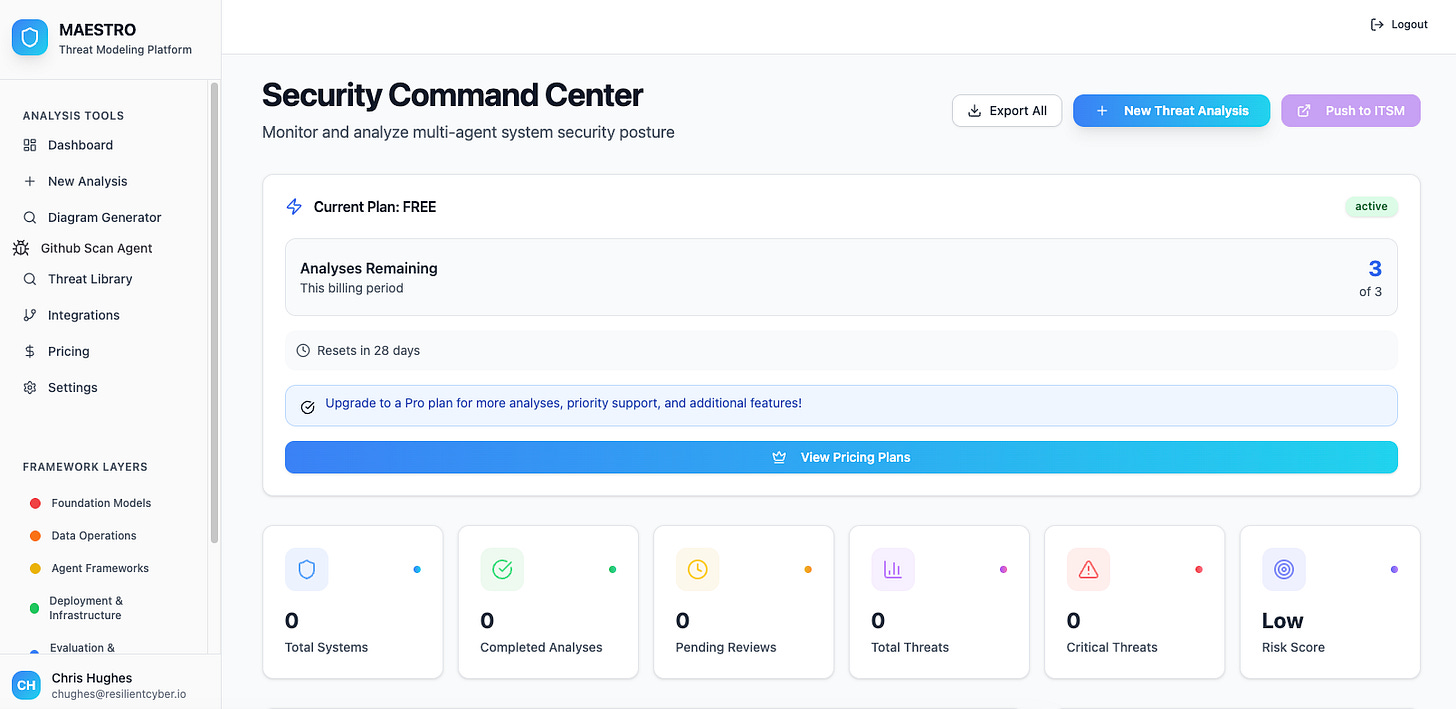

MAESTRO Sentinel - A Threat Modeling Tool for Agentic AI Systems

Ken Huang makes another appearance this week, this time releasing his MAESTRO Sentinel, which is a web-based tool for conducting threat modeling of Agentic AI systems, using the OWASP Multi-Agent System Threat Modeling document and aligning with the 7 layers of the MAESTRO framework.

AppSec

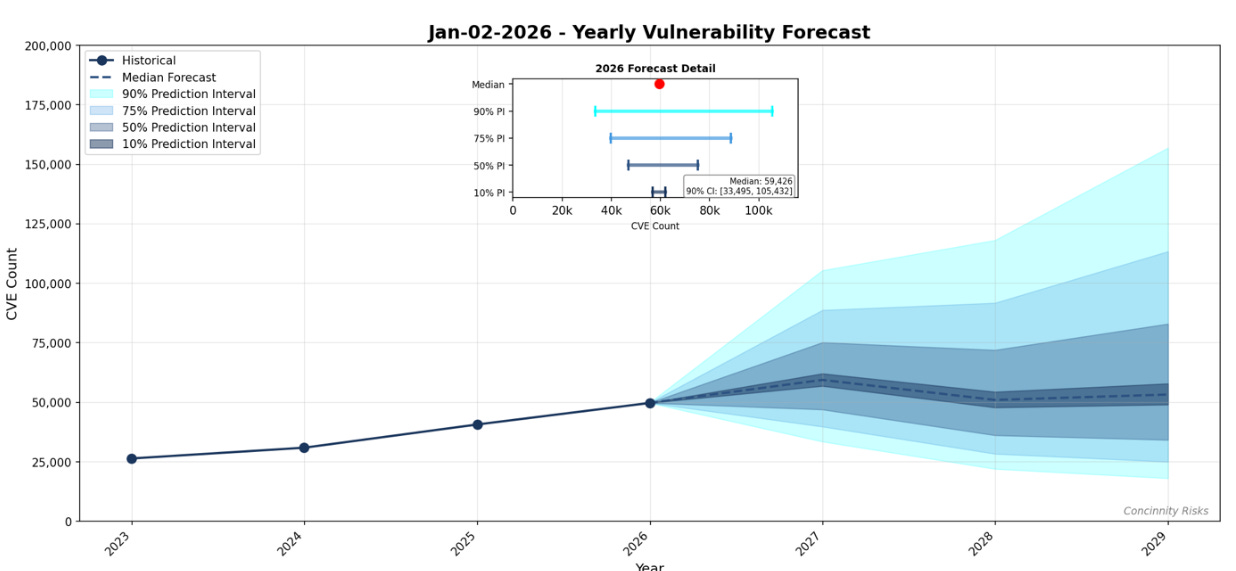

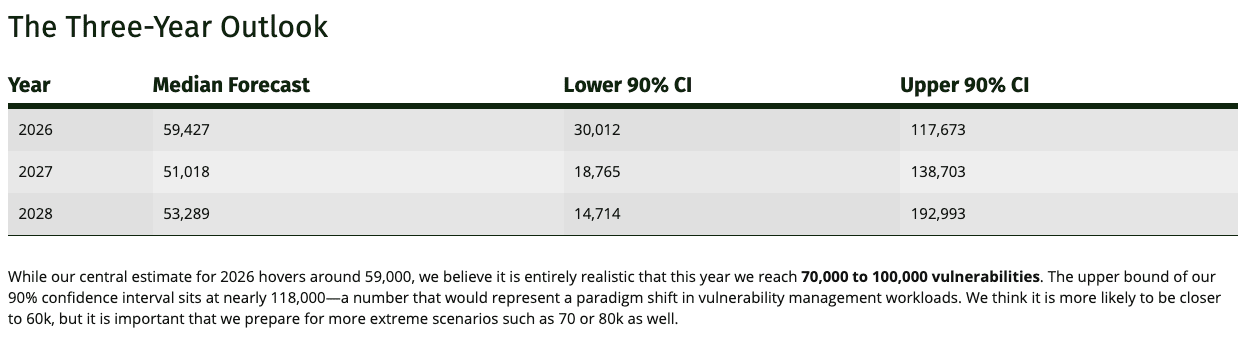

FIRST Vulnerability Forecast 2026: The Year Ahead

First dropped their 2026 yearly vulnerability forecast and for the first time ever we’re poised to see 50K+ CVE’s in a single year.

They’re predicting we will see 59,0000~ CVEs for the year and it is a number that will impact nearly anyone responsible for vulnerability management, SecOps, detecting engineering and more. They also provided a 3 year outlook.

They provide this as a resource to help teams prepare patching capacity, writing coordinated VDPs and also developing detection signatures. The real key in my opinion is, will teams do anything differently or not. Will they add more resources, funding, change their methodologies to embrace AI and automation, or just watch vulnerability backlogs pile up YoY and continue to fall behind.

Unfortunately, based on past research and incidents, we know the answer for many.

Cursor for Security Teams

We luckily are seeing many in security look to use the same AI-native tools that our Development peers are. That’s why it was so cool to see Travis McPeak at Cursor do a session on “Cursor for Security Teams”.

Travis walked through using Cursor for security work, and specifically uses OpenClaw as an example to examine for risks.

Malicious VSCode Extension Launches Multi-Stage Attack with Anivia and OctoRAT

Hunt.io documented a supply chain attack targeting developers through a fake Prettier extension on the VSCode Marketplace. The attack chain: VBScript dropper → Anivia loader (AES decryption in memory, process hollowing into vbc.exe) → OctoRAT with 70+ commands for surveillance, file theft, and remote access.

Before C2 communication, OctoRAT immediately harvests browser credentials from Chrome, Firefox, and Edge. The extension was up for only 4 hours before takedown, but this highlights how developer tooling is increasingly targeted. Combined with the OpenClaw skills marketplace issues, we’re seeing a pattern: anywhere developers extend their tooling is a supply chain attack surface.

AI SAST in Action: Finding Real Vulnerabilities in OpenClaw

AppSec companies continue to innovate with AI, modernizing traditional tools such as SAST with the use of AI. Endor Labs is a great example, and they published a blog showing how they used their AI SAST to find 7 exploitable vulnerabilities in the wildly popular OpenClaw.

How Do You Build a Context Graph?

In issue #81, I covered Foundation Capital’s “trillion-dollar opportunity” framing for context graphs. Glean’s blog provides the practical details: crawling and indexing data, running ML to infer entities like projects and customers, and continuously feeding activity signals to understand how information is used.

This matters for security because context graphs will power the next generation of AI agents. Understanding who did what, when, and why is exactly what we need for attribution and audit - if the graphs are secured properly. The flip side: compromised context graphs become extremely valuable targets.

Invisible Prompt Injection Repository

This GitHub repository demonstrates invisible prompt injection techniques - attacks that aren’t visible to humans reviewing content but are parsed by LLMs. This is the attack class I’ve been concerned about: content that looks benign to human reviewers but manipulates AI agents.

As we move toward Dan Shapiro’s Level 4-5 automation where humans aren’t reviewing code, these invisible attacks become even more dangerous. Defense requires detection at the model input layer, not human review.

Empirical Security EPSS Scores Repository

This is a useful resource for vulnerability prioritization. EPSS (Exploit Prediction Scoring System) provides probability scores for vulnerability exploitation in the wild. Given the volume of CVEs (we discussed the CVE quality challenges in issue #83), prioritization is essential. EPSS helps focus remediation effort on vulnerabilities likely to be exploited rather than treating all CVEs equally.

In an AI-accelerated development environment producing more code and more vulnerabilities, this kind of risk-based prioritization becomes non-negotiable.

Cybersecurity as We Know It Will No Longer Exist

A provocative piece arguing that AI will fundamentally transform cybersecurity, not just augment it. The core thesis: the adversary-defender dynamic changes when both sides have access to AI agents.

Speed becomes even more critical. The humans-in-the-loop model that defines current SOC operations may not scale. As I’ve discussed with the Agentic SOC concept, we’re heading toward human-supervised autonomous defense. The transition will be uncomfortable, but pretending AI won’t change our field is worse than planning for it.

Final Thoughts

This week drove home a point I’ve been making since the OWASP Agentic Top 10: the gap between AI capability and security maturity is widening, not narrowing. OpenClaw went from “interesting but concerning” to “Gartner says block it” in a week.

Cloud providers shipped OpenClaw-as-a-service anyway. The market is moving faster than security controls. But I’m not discouraged. The 1Password piece on secure agent swarms shows the path forward.

The MAESTRO threat modeling framework gives us structured methodology. Cisco’s Skill Scanner (from issue #82) and similar tools are emerging. The building blocks for secure agentic AI exist, the challenge is adoption. For security leaders: the question isn’t whether AI agents are coming to your environment.

They’re already there, probably in shadow IT. The question is whether you’ll shape how they’re deployed or react after incidents occur. Next week I expect we’ll see more fallout from the OpenClaw situation and potentially more clarity on OpenAI Frontier’s security architecture. Stay tuned.

Stay resilient!

To help secure OpenClaw, we open-sourced an extension that adds hard, deterministic guardrails using policy as code to stop the agent from using rm -rf, sudo, or leaking secrets, even if prompt injected.

https://securetrajectories.substack.com/p/openclaw-rm-rf-policy-as-code