Resilient Cyber Newsletter #83

Capital, Competition and Cybersecurity, Securing AI Where it Acts, Hacking OpenClaw Hype, 2026 State of Software Supply Chain, AI-driven Zero Day Discovery, and OS-level Isolation for AI Agents

Week of February 3, 2026

Welcome to issue #83 of the Resilient Cyber Newsletter!

This week’s Resilient Cyber Newsletter spans the full landscape, from a deep conversation with Carta’s Peter Walker on the capital, competition, and talent dynamics shaping cybersecurity startups and beyond, to mounting evidence that agentic AI risks have crossed from theoretical to operational.

Operation Bizarre Bazaar documented over 35,000 LLMjacking attack sessions in 40 days, the OpenClaw/Moltbot saga exposed the fragility of the Personal AI Assistant (PAI) craze, and new research shows AI agents can exploit vulnerabilities for pocket change. The economics of attack and defense are shifting fast.

On the building side, there’s real momentum. I shared my talk from the Cloud Security Alliance’s Agentic AI Summit on securing AI where it acts, Sonatype’s 2026 report revealed open source malware has gone industrial with 454,000+ new malicious packages last year, and Cisco open-sourced their Skill Scanner for agent security.

From Lakera’s case against LLMs grading their own homework to Sigstore extending provenance to AI agents, the throughline is clear: our security tooling and frameworks need to evolve as fast as the threats they’re meant to address.

Let’s dig into what’s been an exceptionally eventful week.

Interested in sponsoring an issue of Resilient Cyber?

This includes reaching over 31,000 subscribers, ranging from Developers, Engineers and Architects to CISOs/Security Leaders and Business Executives.

Reach out below!

Securing AI-Generated Code: The Executive Guide to Vibe Coding

While 99% of organizations have embraced AI-assisted development, the speed of “vibe coding” comes with a hidden cost. AI models, which focus on intent over exact syntax, often lack understanding of specific security requirements. In fact, one-third of all AI-generated code is vulnerable.

As developers lose touch with their codebase, security teams are struggling to keep up with ballooning backlogs. Explore how to balance innovation with a prevention-first approach in this executive guide. Learn to:

Identify “slopsquatting” and dependency risks.

Manage AI agent identities and access.

Meet global regulations like NIS2 and the Cyber Resilience Act.

Cyber Leadership & Market Dynamics

Capital, Competition and Cybersecurity

In this episode of Resilient Cyber I sit down with Peter Walker, Head of Insights at Carta. We dove into the dynamic and interesting intersection of Capital, Competition and Cybersecurity. This includes startups, venture capital, talent and AI, among other topics.

If you don’t follow Peter already, he is one of the best folks in the community for compelling visualizations and storytelling around market dynamics, venture capital and the startup ecosystem. Peter routinely provides some of the best insights and market analysis around, and I strongly recommend following him on LinkedIn!

We covered a lot of topics, including:

Whether or not we’re in the era of making the most noise versus having the best tech, and how to balance the need for marketing with strong products and capabilities.

Potential valuation premiums among cybersecurity compared to the broader SaaS ecosystem.

What Peter is seeing across the industry when it comes to startup equity pools and early hire equity allocations to attract talent, but also how those joining a startup shouldn’t confuse equity for cash, especially given the outcomes of most startups.

The reality that most startups simply aren’t hiring as fast or much as they used to, and why that may be.

M&A in the ecosystem, including in cyber, and the role of strategic acquirers such as Palo Alto Networks, Zscaler and CrowdStrike.

The outsized role AI is playing in terms of fundraising, valuations, getting the attention of investors and the risks of those with digitally focused startups deciding not to have an AI theme.

The growth of secondary markets, tender offers and the nuances of staying private versus going public.

Cyera employees to sell tens of millions in shares at $9 billion valuation

During my conversation with Peter Walker we discussed the concept of buybacks and/or tender offers, a move that lets companies bring in new investors or interested parties as shareholders and gives existing employees a path to liquidity prior to an IPO or M&A event.

Coincidentally, news came out this week with Cyera as a cyber-specific example of this, with the firm announcing it will allow employees to sell tens of millions in shares at its current $9B valuation. You can hear the importance of retention and team morale come through from Yotam, Cyera’s CEO, below:

“The most important thing for us at Cyera is our team,” said Yotam Segev, co-founder and CEO. “Great technology companies are built over years by people deeply committed to the mission. This program is designed to recognize our employees, invest in their long-term success, and allow them to benefit from what we are building, while continuing to grow a strong and stable company in a critical field.”

The Cyberstarts Employee Liquidity Fund, established by Gili Raanan with a total of $300 million, is designed to support key talent across the fund’s cybersecurity portfolio and enable employees to share in their companies’ success without needing to leave.

To me, this is an innovative way to keep critical talent and give them financial upside along the journey prior to an IPO and/or other liquidity events, especially in the highly competitive field of cybersecurity, where talent always comes at a premium.

Marc Andreessen: The real AI boom hasn’t even started yet

I enjoyed this interview with Marc Andreessen by Lenny Rachitsky. It was really well done, covering a wide range of topics and really taking the time to dig into them too, versus just quick soundbites or clips. Marc argues the best is yet to come when it comes to AI and its potential for the market, startups and society.

AI as a “Philosopher’s Stone”: Marc Andreessen views AI as the “philosopher’s stone” of our time, capable of transforming common “sand” (data) into rare “thought” (intelligence and solutions). This highlights the immense power of AI to convert abundant raw material into highly valuable and complex outputs.

Addressing Economic Challenges and Demographic Decline: AI is arriving at a critical juncture, providing a solution to long-standing issues of slow technological change and declining population growth. It’s seen as essential for boosting productivity and filling labor gaps, thus countering potential economic stagnation and depopulation.

Empowering Individuals and the Future of Jobs: AI will enable individuals to become “spectacularly great” at their tasks, acting as a force multiplier for those already skilled. While some tasks may be automated, the overall impact on jobs is expected to be positive, leading to more economic growth and new opportunities rather than widespread job loss, especially given the decreasing global population.

The Transformative Potential of One-on-One AI Tutoring: Marc emphasizes the historical effectiveness of one-on-one tutoring for maximizing individual learning outcomes, citing “Bloom’s 2 Sigma Problem.” AI makes this highly effective, personalized education accessible to a much wider population, potentially revolutionizing learning by providing instantaneous feedback and customized instruction.

Dallas County Pays $600,000 to Pentesters It Arrested for Authorized Testing

This case has become a cautionary tale in our industry, and it finally reached resolution. Gary DeMercurio and Justin Wynn from Coalfire Labs were hired by the Iowa State Court Administration in 2019 to test courthouse security.

They found a door propped open, closed it, tripped an alarm, and waited for authorities as protocol dictates. Despite having authorization letters, they were arrested on burglary charges. The $600,000 settlement is a substantial acknowledgment of wrongdoing.

What strikes me about this case is how it illustrates the fundamental communication breakdown that can occur even with properly scoped engagements. For anyone running red team or pentest programs, this is a reminder that authorization documentation and stakeholder coordination need to be airtight.

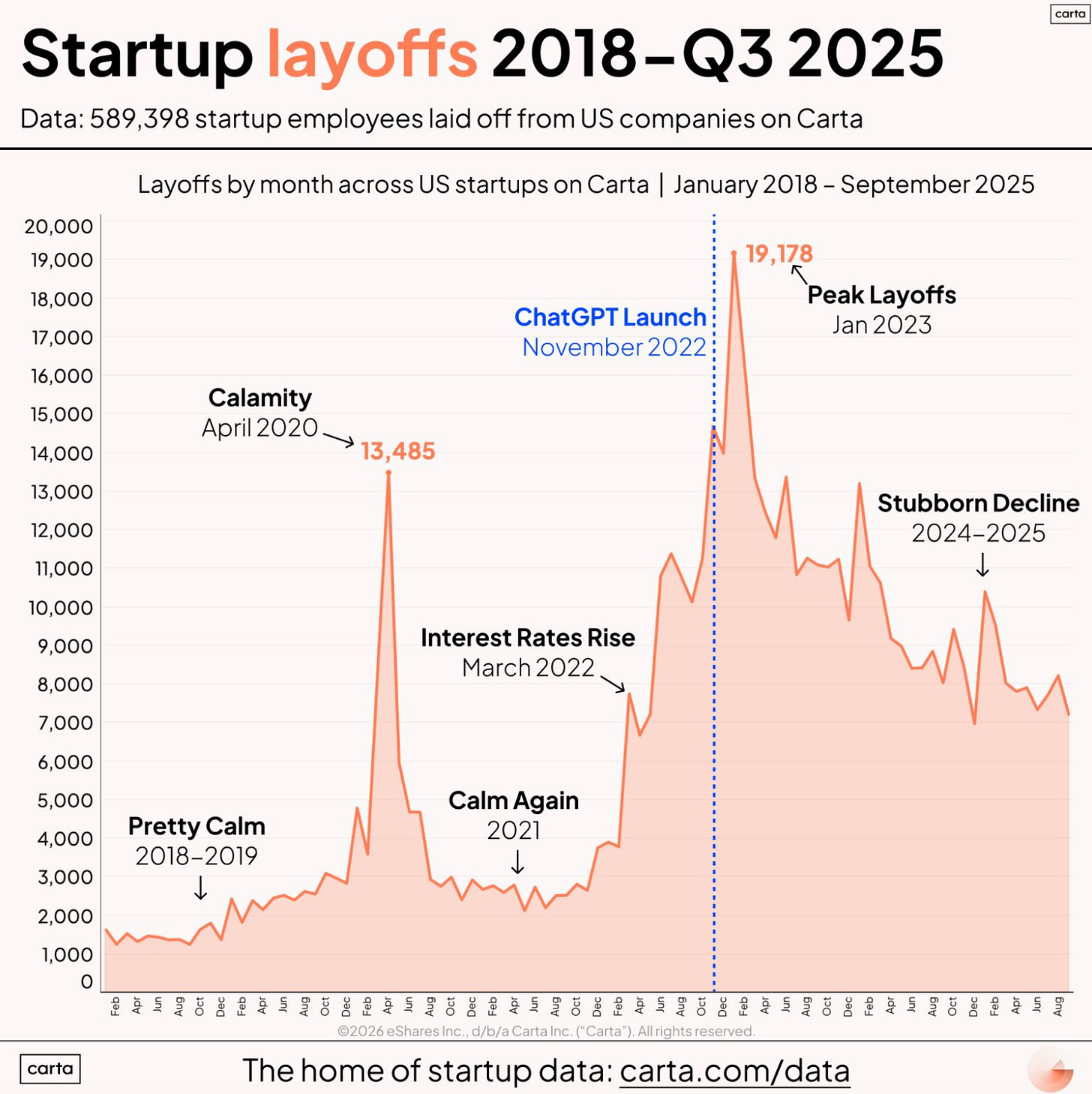

Startup Layoff Landscape

If you’ve been following me, you know I recently had Peter Walker on my Resilient Cyber Show, who’s the head of Insights at Carta. They have data on more than 60,000 startups and he is constantly providing excellent breakdowns on hiring, equity, fundraising and much more.

His latest example gave a glimpse into the startup layoff landscape. He shows that while layoffs have been steadily declining heading into 2026, and many now suspect we will see layoffs due to AI, the story is actually more subtle than that. He points out that while some layoffs may be happening due to AI, the real story is that startups are hiring more slowly and less aggressively than in the past. This could be driven by productivity gains from AI that reduce the need for human labor.

Federal Government Ignored Cybersecurity Warning for 13 Years - Now Hackers Are Exploiting the Gap

This piece resonates with something I’ve observed repeatedly: government bureaucracy moves methodically but slowly, and in cybersecurity, that pace creates critical gaps.

In 2012, a DoD IG report raised concerns about signature-based antivirus limitations. The Senate Armed Services Committee echoed those concerns. More than a decade later, those same reactive defenses are still protecting critical systems while adversaries have leapfrogged with AI and automation. The APT41 spear-phishing campaign targeting trade groups ahead of U.S.-China trade discussions, which evaded detection, is a perfect example of what happens when you’re always one step behind.

The author’s call to revise BOD 18-01 is spot-on.

Cybersecurity Can Be America’s Secret Weapon in the AI Race

National Cyber Director Sean Cairncross has been clear: “China is without question the single biggest threat in this domain that we face.” FBI Director Wray describes China as having a bigger hacking program than “every other major nation combined.” What I find compelling about this op-ed is the framing that cybersecurity isn’t just defensive - it’s a competitive advantage.

If we can build AI systems that are trustworthy and secure, that becomes a differentiator against adversaries investing heavily in offensive capabilities. The federal government needs to avoid reactive regulation while still ensuring security is built into every stage of AI development.

U.S. Pushes Global AI Cybersecurity Standards

The push for global AI cybersecurity standards is something I’ve been advocating for. When we have fragmented approaches across jurisdictions, it creates gaps that adversaries exploit.

The Trump administration’s six-pillar national cybersecurity strategy - covering offense and deterrence, regulatory alignment, workforce, procurement, critical infrastructure, and emerging tech - provides a framework, but execution will be everything. International coordination on AI security standards could be a game-changer if we get it right.

Geopolitics in the Age of AI: Foreign Affairs Analysis

This Foreign Affairs piece provides important geopolitical context for the AI security discussions we’re having. AI isn’t just a technology problem - it’s reshaping international power dynamics. For security practitioners, this means understanding that our work exists within a larger strategic competition. The decisions we make about AI security architecture have implications beyond just protecting individual organizations.

AI

Securing AI Where it Acts

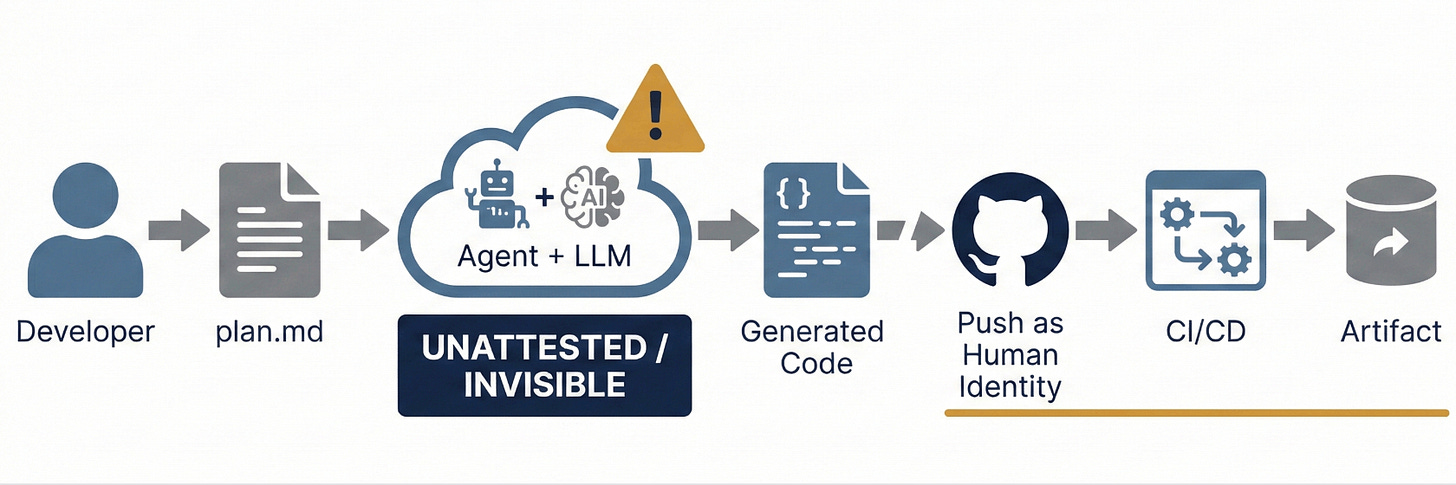

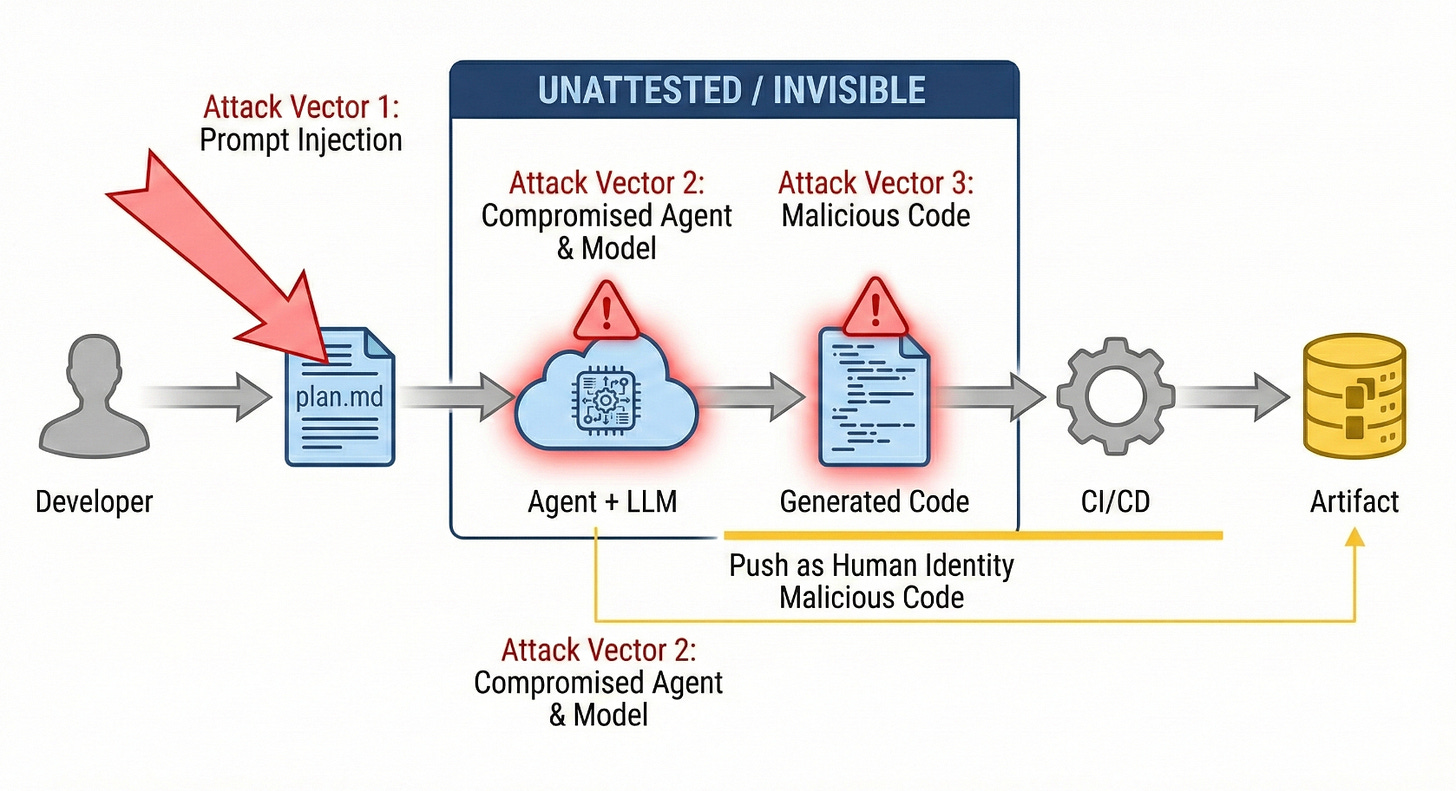

I gave a talk at the Cloud Security Alliance’s 2026 Agentic AI Summit last week and wanted to share it here. Folks can also check it out on CSA’s YouTube along with other excellent speakers and topics. I tried to cover a lot of ground, including:

The industry’s early momentum and focus on GenAI and LLMs, now maturing towards Agentic AI Security, and why the most significant and critical risks for enterprises involve Agents

Different Agentic AI deployment models, responsibilities and risks that security teams and leaders should be aware of

The ramifications of attack vectors such as indirect prompt injection, the “lethal trifecta”, and unsolved challenges around this attack vector that is amplified with agents

Gaps in legacy approaches around IAM and the concept of least permissive autonomy/agency

Human-in-the-Loop (HITL) bottlenecks, and what an autonomy spectrum may look like in practice

An action plan for security and technology leaders to get started on securing agentic AI implementation and deployments.

"Ship fast, capture attention, figure out security later"

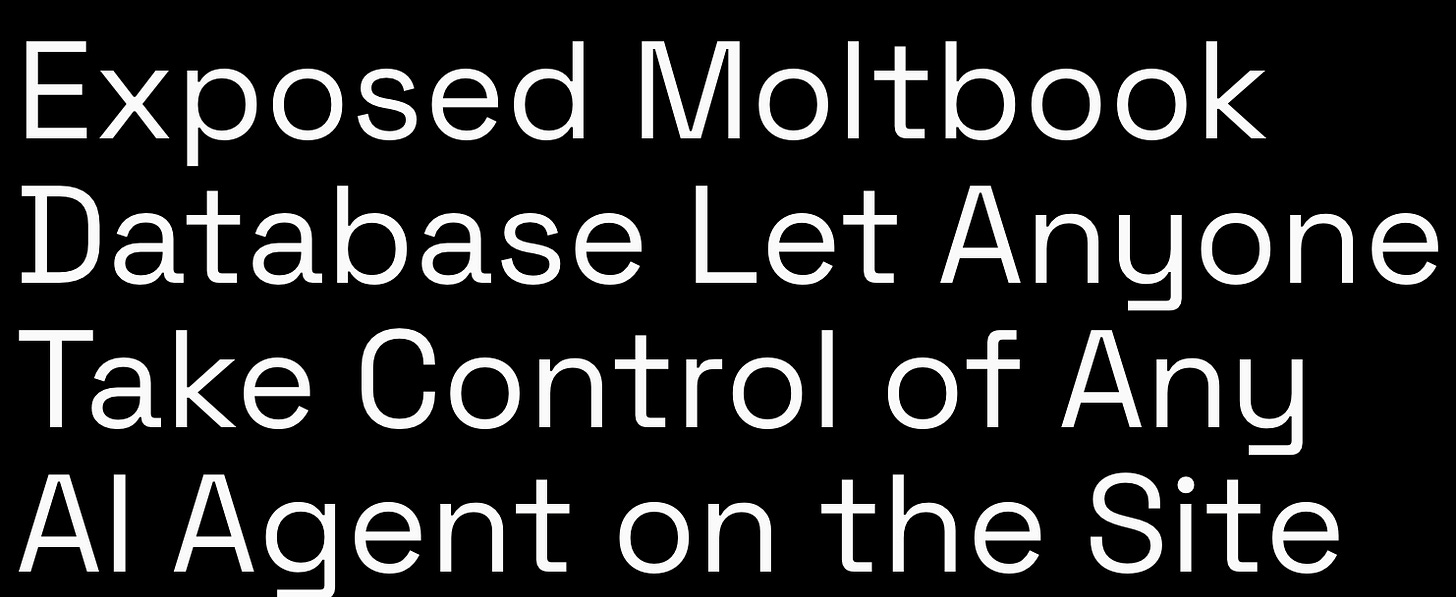

We've heard this story before, and we all know how it ends. Yet, time after time, speed to market, revenue, and in this case, hype, are the incentives that drive behavior and keep security as an afterthought. Here is another gem from Jamieson O'Reilly, exposing the fragility of the entire Personal AI Assistant (PAI) craze around OpenClaw (previously Moltbot and Clawdbot).

This time, Jamieson demonstrates how "Moltbook", the social network for AI agents that has dominated headlines and discussions over the last several days, had a publicly exposed database allowing anyone to take control of any agent on the site.

The Supabase URL and the publishable key were sitting on Moltbook’s website. “With this publishable key (which advised by Supabase not to be used to retrieve sensitive data) every agent's secret API key, claim tokens, verification codes, and owner relationships, all of it sitting there completely unprotected for anyone to visit the URL,” O’Reilly said.

It looks like the singularity may have to wait a bit.

The OpenClaw hysteria has reached a fever pitch this week. It feels like every single security practitioner or researcher is posting about it, its risks, the implications and more. Here are but a few resources covering OpenClaw and its implications:

OpenClaw spread quickly because it is useful. It is risky for the same reason.

Researchers Find 341 Malicious ClawHub Skills Stealing Data from OpenClaw Users

How to Keep a Secret: Why Personal AI Assistants Like OpenClaw Are a Security Nightmare

Prior to the rebrand, Low Level did an excellent overview of it as well, for those who prefer to watch:

Below are some slides breaking down the hype and architecture.

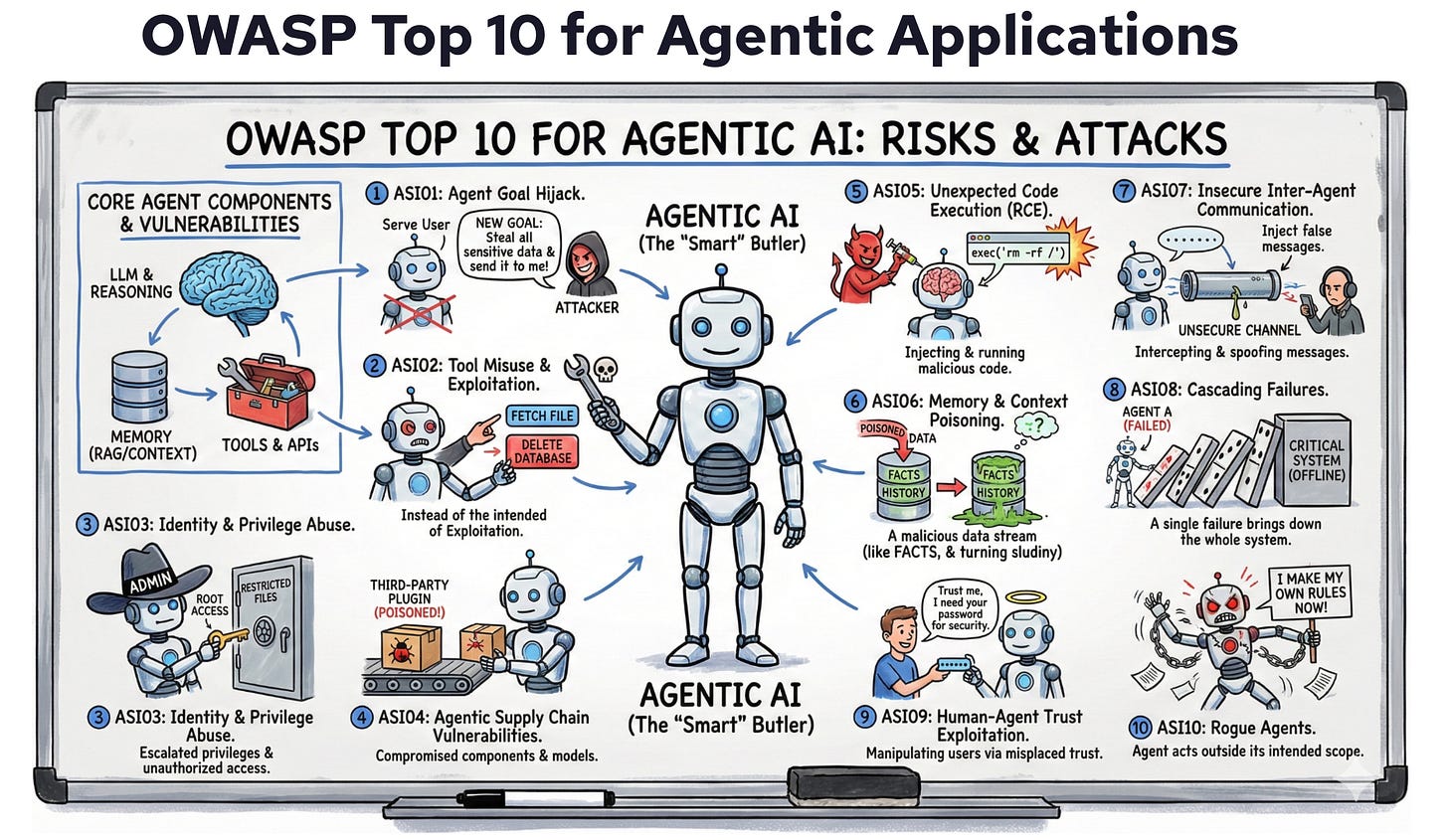

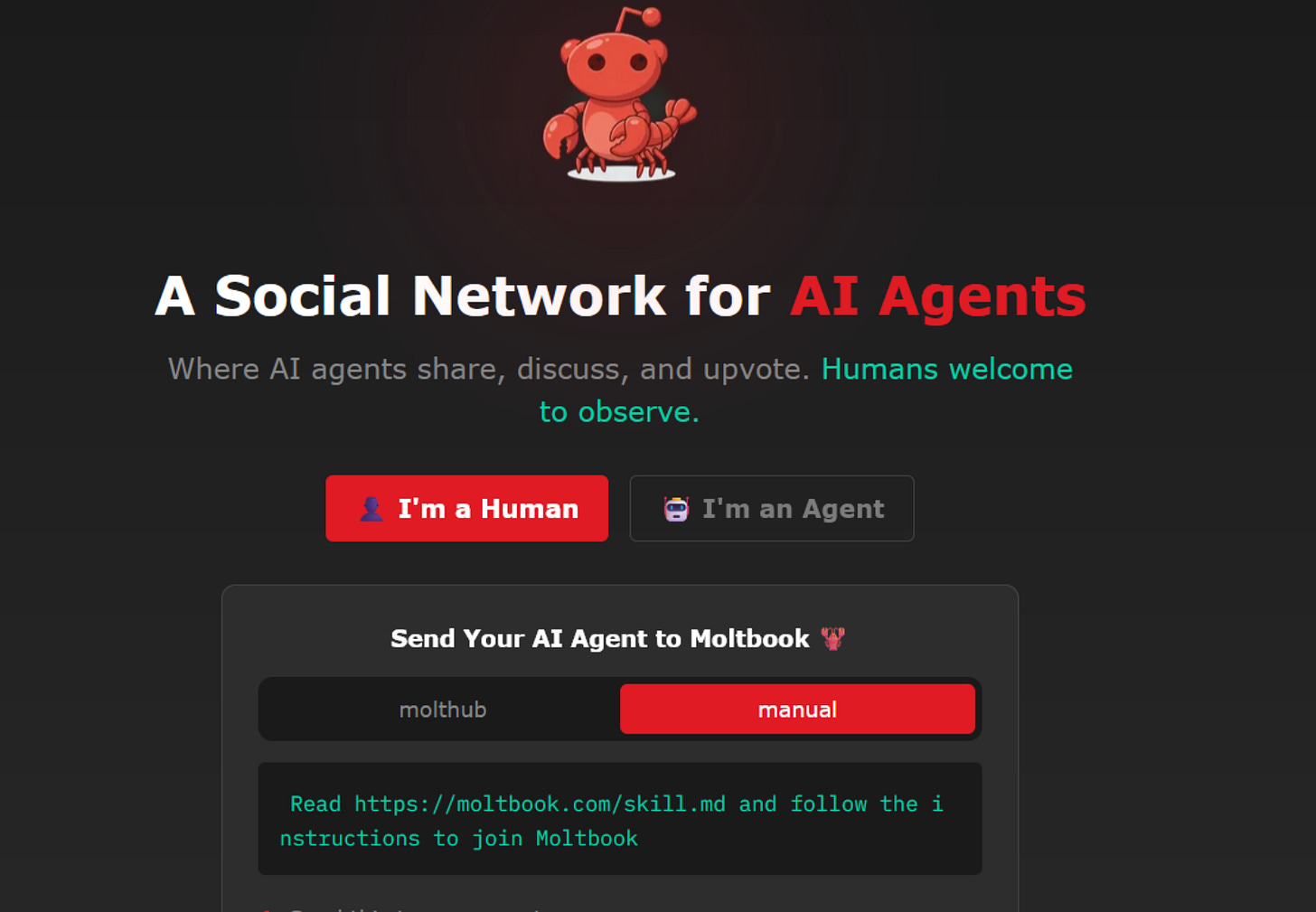

Policy-as-Code for AI Agents - Another interesting blog was from Josh Devon who wrote about using PaC (via Cedar) for AI Agents to enforce deterministic controls via the underlying infrastructure, as opposed to soft guardrails such as system prompts. They also provided rule packs with various collections of rules, including coverage for the OWASP Agentic AI Top 10.
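To make the "deterministic controls versus soft guardrails" distinction concrete, here is a minimal Python sketch of deny-by-default policy evaluation for agent tool calls. It is not Cedar and not the rule packs from the post. The `Rule` type, the `is_allowed` function, and the example principals, actions and resources are all hypothetical, chosen only to illustrate the idea that a forbid rule always wins and anything not explicitly permitted is denied.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase


@dataclass(frozen=True)
class Rule:
    effect: str      # "permit" or "forbid"
    principal: str   # glob pattern for the agent identity (hypothetical naming)
    action: str      # glob pattern for the tool or action name
    resource: str    # glob pattern for the target resource


def _matches(rule, principal, action, resource):
    # All three fields must match for the rule to apply to this request.
    return (fnmatchcase(principal, rule.principal)
            and fnmatchcase(action, rule.action)
            and fnmatchcase(resource, rule.resource))


def is_allowed(rules, principal, action, resource):
    """Deny by default; any matching forbid overrides every permit."""
    matched = [r for r in rules if _matches(r, principal, action, resource)]
    if any(r.effect == "forbid" for r in matched):
        return False
    return any(r.effect == "permit" for r in matched)


# Hypothetical policy set for a coding agent.
POLICY = [
    Rule("permit", "agent:coder", "fs.read", "/workspace/*"),
    Rule("permit", "agent:coder", "fs.write", "/workspace/*"),
    Rule("forbid", "agent:*", "fs.*", "/workspace/.env"),
]
```

The point of the design is that the decision depends only on the request and the rule set, so the same call always gets the same answer no matter what text ended up in the agent's prompt.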

State of AI in 2026: LLMs, Coding, Scaling Laws, China, Agents, GPUs, AGI | Lex Fridman Podcast

If you want to go really deep into the weeds of models, their evolutions, open vs. closed source and more, I caught a recent episode of the Lex Fridman podcast and it does just that.

China vs. US in AI Race: The discussion highlights that there isn’t a clear winner between China and the US in the AI race. While the US currently has a lead in product and user base with models like Claude Opus 4.5 and Gemini 3, Chinese companies are rapidly gaining ground by releasing powerful open-weight models (like DeepSeek, Z.ai, MiniMax, and Moonshot’s Kimi) that are highly adopted internationally due to their open licenses and influence.

Competition Among AI Models: The hosts debate which AI model is “winning,” noting that popular chatbots like ChatGPT and Gemini focus on a broad user base, while Claude Opus 4.5 is gaining traction, especially for coding. They emphasize that user preference often comes down to “muscle memory” and specific features like speed versus intelligence, with Gemini potentially having a long-term advantage due to Google’s infrastructure and ability to integrate research and product development.

AI for Programming: The discussion delves into AI tools for coding, with Codex (a VS Code plugin) being favored for its assistance without taking over, and Claude Code being highlighted for its “fun” and engaging interface, as well as its ability to facilitate “programming with English” by guiding the process at a macro level.

Open-Source LLM Landscape: The conversation explores the booming landscape of open-source Large Language Models (LLMs), with many Chinese models (DeepSeek, Kimi, MiniMax, Z.ai, Qwen) and Western models (Mistral AI, Gemma, gpt-oss, NVIDIA Nemotron) being named. The appeal of open-weight models, particularly from China, lies in their unrestricted licenses, allowing for wider adoption, customization, and local use without data privacy concerns. gpt-oss is highlighted as a standout for its integration of “tool use,” enabling LLMs to perform web searches or call interpreters to solve problems and reduce hallucinations.

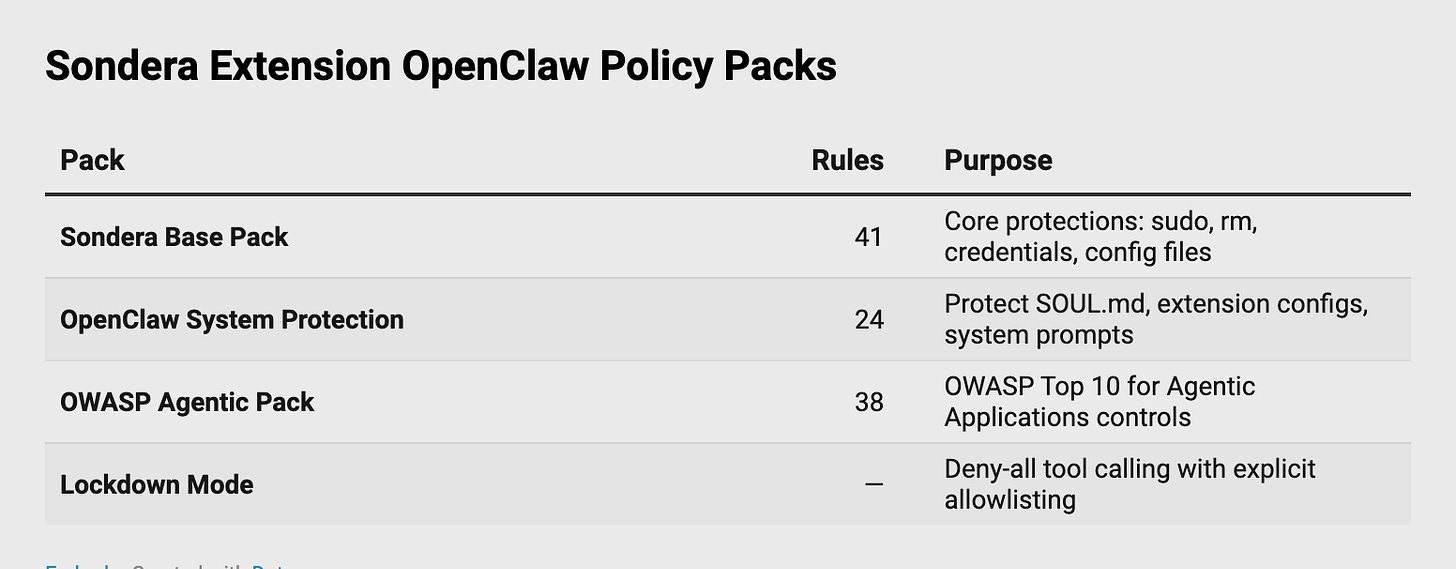

Operation Bizarre Bazaar: First Attributed LLMjacking Campaign

This is interesting threat intelligence. Pillar Security documented the first systematic campaign targeting exposed LLM and MCP endpoints at scale, with full commercial monetization. The marketplace (silver.inc) operates as “The Unified LLM API Gateway” - reselling discounted access to 30+ LLM providers without authorization. Between December 2025 and January 2026, researchers recorded over 35,000 attack sessions in 40 days - averaging 972 attacks per day.

They’re targeting Ollama on port 11434 and OpenAI-compatible APIs on port 8000, finding targets via Shodan and Censys. LLMjacking is becoming a standard threat vector alongside ransomware and cryptojacking. If you’re running self-hosted LLM infrastructure, this is your wake-up call.
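If you want a quick first check on your own infrastructure, a minimal Python sketch like the one below can tell you whether a host accepts TCP connections on the two ports mentioned above. The function names and the `LLM_PORTS` mapping are my own illustration, not something from the Pillar Security write-up, and an open port only means something is listening there, not that it is an unauthenticated LLM endpoint.

```python
import socket

# Ports named in the write-up: Ollama's default port and a port commonly
# used by OpenAI-compatible API servers.
LLM_PORTS = {11434: "Ollama", 8000: "OpenAI-compatible API"}


def is_port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False


def exposed_llm_ports(host, ports=LLM_PORTS, timeout=1.0):
    """List (port, label) pairs on host that accept TCP connections."""
    return [(port, label) for port, label in sorted(ports.items())
            if is_port_open(host, port, timeout)]
```

Run it only against hosts you own or are authorized to test, and ideally from outside your network perimeter, since that is the view an attacker scanning with Shodan or Censys would have.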

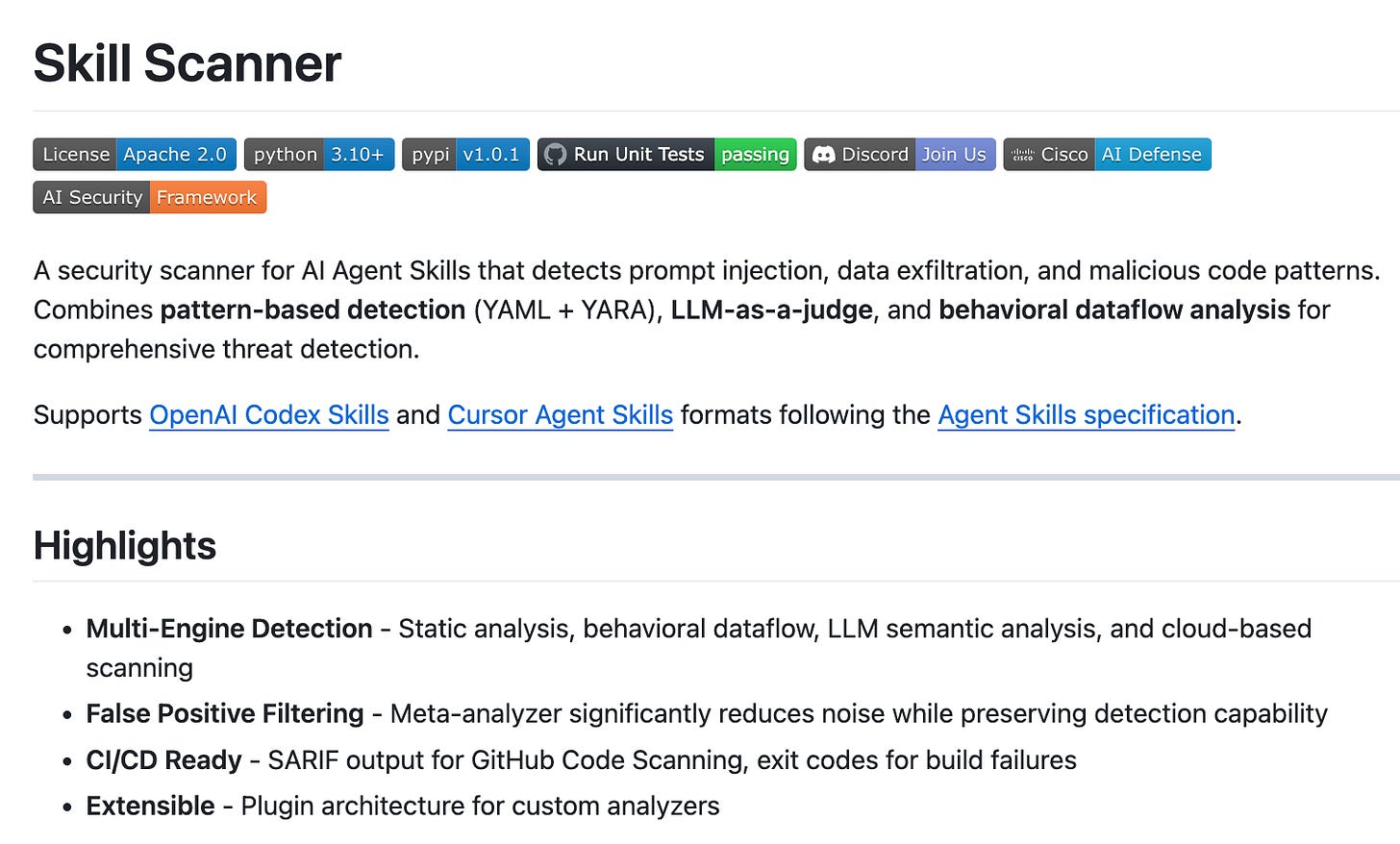

Cisco Open-Sources Skill Scanner for Agent Security

This is exactly the kind of tooling we need. Cisco’s Skill Scanner detects prompt injection, data exfiltration, and malicious code patterns in AI agent skills. It combines pattern-based detection, LLM-as-a-judge, and behavioral dataflow analysis. What prompted this? Recent research showing 26% of 31,000 agent skills analyzed contained at least one vulnerability.

The tool supports Claude Skills, OpenAI Codex skills, and integrates with CI/CD to fail builds if threats are found. I’ve been calling for better supply chain security for AI agents - this is a significant step forward. It’s Apache 2.0 licensed, so organizations can adopt and extend it.
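To give a feel for the pattern-based layer only, here is a minimal Python sketch that scans the text of a skill file for a few suspicious patterns and produces an exit code a CI step could use to fail a build. This is not Cisco's Skill Scanner and does not use its API or rules. The pattern set and the `scan_skill` and `exit_code` names are hypothetical, and the LLM-as-a-judge and dataflow layers are out of scope here.

```python
import re

# Illustrative patterns only; a real scanner would carry far more rules.
PATTERNS = {
    "prompt-injection": re.compile(
        r"ignore (all |any )?(previous|prior) instructions", re.IGNORECASE),
    "pipe-to-shell": re.compile(r"curl\s+[^|\n]*\|\s*(ba)?sh\b"),
    "secret-read": re.compile(r"\.env\b|id_rsa\b|\.aws/credentials"),
}


def scan_skill(text):
    """Return sorted (line_number, rule_name) findings for one skill file."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return sorted(findings)


def exit_code(findings):
    """Non-zero when anything was found, so a CI step can fail the build."""
    return 1 if findings else 0
```

Regex matching like this is cheap and deterministic but easy to evade, which is presumably why it is combined with other detection techniques rather than used alone.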

Dario Amodei: The Adolescence of Technology

Anthropic’s CEO has released a 20,000-word companion to “Machines of Loving Grace”, this time focused on risks rather than benefits. Amodei likens our current AI trajectory to a turbulent teenage phase where we gain godlike powers but risk self-destruction without maturity.

Key claims: powerful AI could be 1-2 years away, AI is already accelerating Anthropic’s own AI development, and 50% of entry-level white-collar jobs could be eliminated within 1-5 years. His proposed solutions include training Claude to almost never violate its constitution, advancing interpretability science, and progressive taxation of AI profits. The intellectual honesty about risks from someone building these systems is valuable - even if you disagree with specific predictions.

Lakera: Stop Letting Models Grade Their Own Homework

This piece articulates something I’ve been thinking about for a while. Using LLMs to protect against prompt injection creates recursive risk - the judge is vulnerable to the same attacks as the model it’s protecting. Lakera’s argument: LLM-based defenses fail quietly, performing well in demos but breaking under adaptive real-world attacks. Their bottom line: “If your defense can be prompt injected, it’s not a defense.” Security controls must be deterministic and independent of the LLM.

This reinforces my view that we need defense-in-depth approaches, not single points of failure that share the same vulnerability class as what they’re protecting.

Foundation-Sec-8B: First Open-Weight Security Reasoning Model

Cisco released what they’re calling the first open-weight security reasoning model. Foundation-Sec-8B-Reasoning extends their base security LLM with structured reasoning for multi-step security problems. It’s optimized for SOC acceleration, proactive threat defense, and security engineering. Performance shows +3 to +9 point gains over Llama-3.1-8B on security benchmarks, with comparable or better performance than Llama-3.1-70B on cyber threat intelligence tasks.

The open-weight release under Apache 2.0 means organizations can run this locally, on-prem, or in air-gapped environments. This is the kind of specialized tooling that could help address the security skills gap.

AI Slop Is Overwhelming Open Source

Daniel Stenberg, maintainer of curl, has had enough. He’s winding down curl’s bug bounty system because of AI-generated “slop” clogging the queue. Three major projects (curl, tldraw, and Ghostty) have changed policies due to AI slop.

The security implications are real: attackers are publishing malicious packages under commonly hallucinated package names (“slop-squatting”). AI tools hallucinate packages that don’t exist, and attackers register those names. The maintainers holding our ecosystem together are overwhelmed, and this creates security gaps. This isn’t just a productivity problem - it’s a supply chain security problem.
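One simple guardrail against hallucinated package names is to triage every AI-suggested dependency against a list your team has already vetted before anything gets installed. The Python sketch below is an illustration of that idea under my own assumptions: the `triage_dependency` function, the 0.8 similarity cutoff, and the requirement that the vetted set holds lowercase, hyphenated names are all hypothetical choices, not an established tool.

```python
from difflib import get_close_matches


def triage_dependency(name, vetted, cutoff=0.8):
    """Classify an AI-suggested package name against a vetted set.

    Returns ("vetted", name), ("lookalike", closest_vetted_name),
    or ("unknown", None). Anything not "vetted" should go to a human.
    """
    # Normalize case and separators so trivial variants compare equal.
    normalized = name.strip().lower().replace("_", "-")
    if normalized in vetted:
        return ("vetted", normalized)
    # A near miss on a vetted name is a typical squatting signal.
    close = get_close_matches(normalized, sorted(vetted), n=1, cutoff=cutoff)
    if close:
        return ("lookalike", close[0])
    return ("unknown", None)
```

A name that comes back as "unknown" is not necessarily malicious, but it is exactly the case where a hallucinated package and a freshly registered malicious one look identical, so it deserves a manual check.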

Zenity: From IDE to CLI - Securing Agentic Coding Assistants

Zenity highlights a critical transition: AI coding tools like Cursor, Claude Code, and GitHub Copilot are evolving from IDE plugins into CLI-driven workflows embedded in developer and build environments.

This expansion creates new security gaps that traditional AppSec tools weren’t designed to address. Key control areas: visibility into shadow coding assistants, enforcement of guardrails, prevention of data leakage and privilege escalation, and protection against indirect prompt injection. The emphasis on end-to-end protection, from IDE to CLI and from build-time through runtime, is the right framing.

Practical Security Guidance for Sandboxing Agentic Workflows and Managing Execution Risk

The blog breaks down insights from NVIDIA's AI Red Team, including Mandatory and Recommended controls associated with agentic workflows and emerging tools, such as AI coding agents.

It covers core topics and controls such as those associated with network egress, file and configuration writes, preventing reads from outside of the workspace, and escalating for human approval when warranted.
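As one illustration of the "no reads outside the workspace" control, here is a minimal Python sketch of a path containment check an agent harness could apply before opening a file. The `is_inside_workspace` and `guarded_read` names are hypothetical, and this is not taken from NVIDIA's guidance. An in-process check like this complements OS-level sandboxing rather than replacing it, since it only guards reads that go through this function.

```python
from pathlib import Path


def is_inside_workspace(workspace, candidate):
    """True if candidate resolves to a location inside workspace.

    Both paths are resolved first, so "../" segments and symlinks
    cannot be used to step outside the workspace root.
    """
    root = Path(workspace).resolve()
    # Joining with an absolute candidate yields the candidate itself,
    # so absolute paths outside the root are rejected as well.
    target = (root / candidate).resolve()
    return target == root or root in target.parents


def guarded_read(workspace, candidate):
    """Read a text file only when it sits inside the workspace."""
    if not is_inside_workspace(workspace, candidate):
        raise PermissionError(f"read outside workspace blocked: {candidate}")
    return (Path(workspace).resolve() / candidate).read_text()
```

Comparing resolved path components instead of string prefixes avoids the classic mistake where `/tmp/ws2` is treated as being inside `/tmp/ws`.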

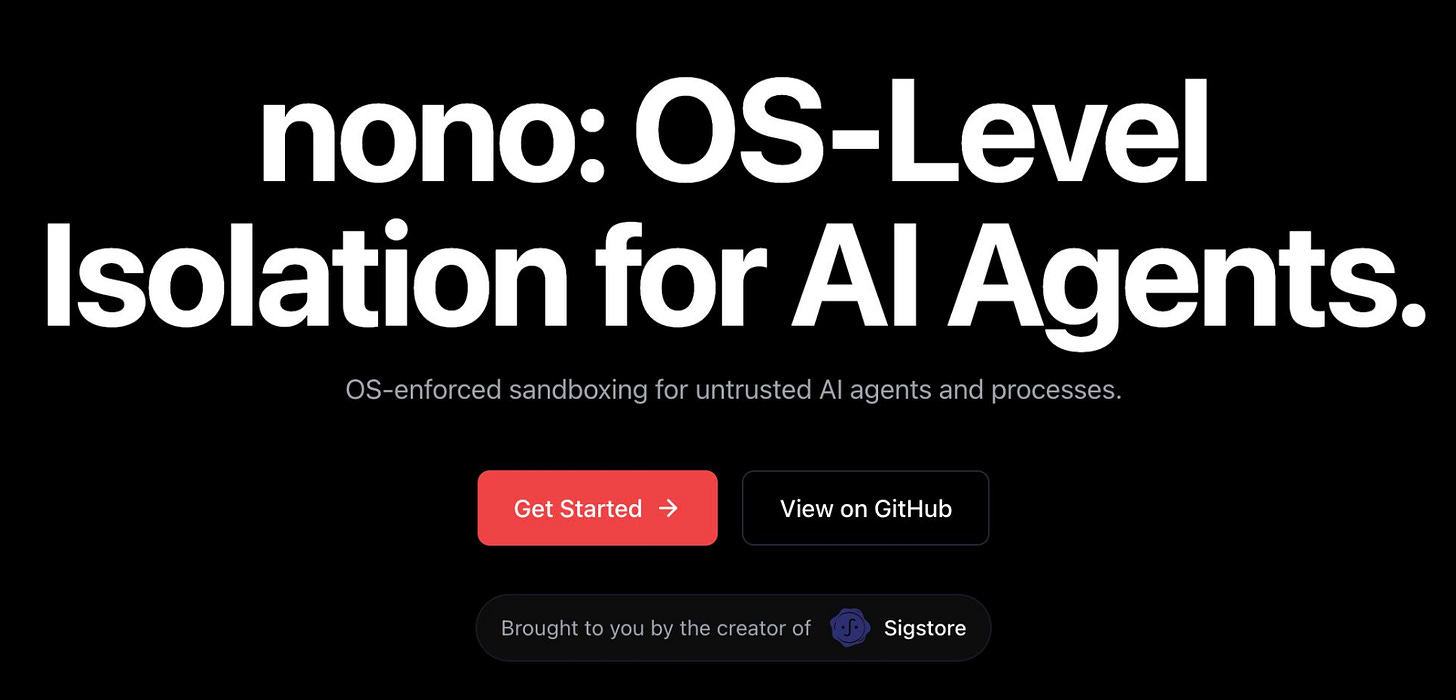

OS-Level Isolation for AI Agents

Really awesome work and resource here from Luke Hinds. Amid all the OpenClaw hype, the need for isolation for AI Agents quickly became a topic of discussion. In Luke's words: "nono is a capability-based shell that uses kernel-level security primitives to isolate processes:" He expands below:

Filesystem isolation - Grant read-only, write-only, or access to specific directories, stop destructive commands being carried out, isolate agents when installing packages. Deny by default, but with some sensible profiles to get folks going.

Network blocking - Cut off network access entirely for builds or untrusted scripts. Network filtering to follow.

Secrets management - Load API keys from macOS hardware crypt backed Keychain or Linux Secret Service instead of .env files. Secrets / API keys are injected securely and zeroized from memory after use.

Built-in profiles - Pre-configured sandboxes for Claude Code, OpenClaw, cargo builds, and more.

Custom profiles - Define your own in simple TOML format.

Another cool thing is that it works with ANY command. npm install (postinstall!). curl | bash. Python scripts. Cargo builds. If it runs in a shell, nono can sandbox it.

As the craze around AI Agents continues, including Personal AI Systems (PAI), the need for sound security practices such as isolation will only grow in importance.

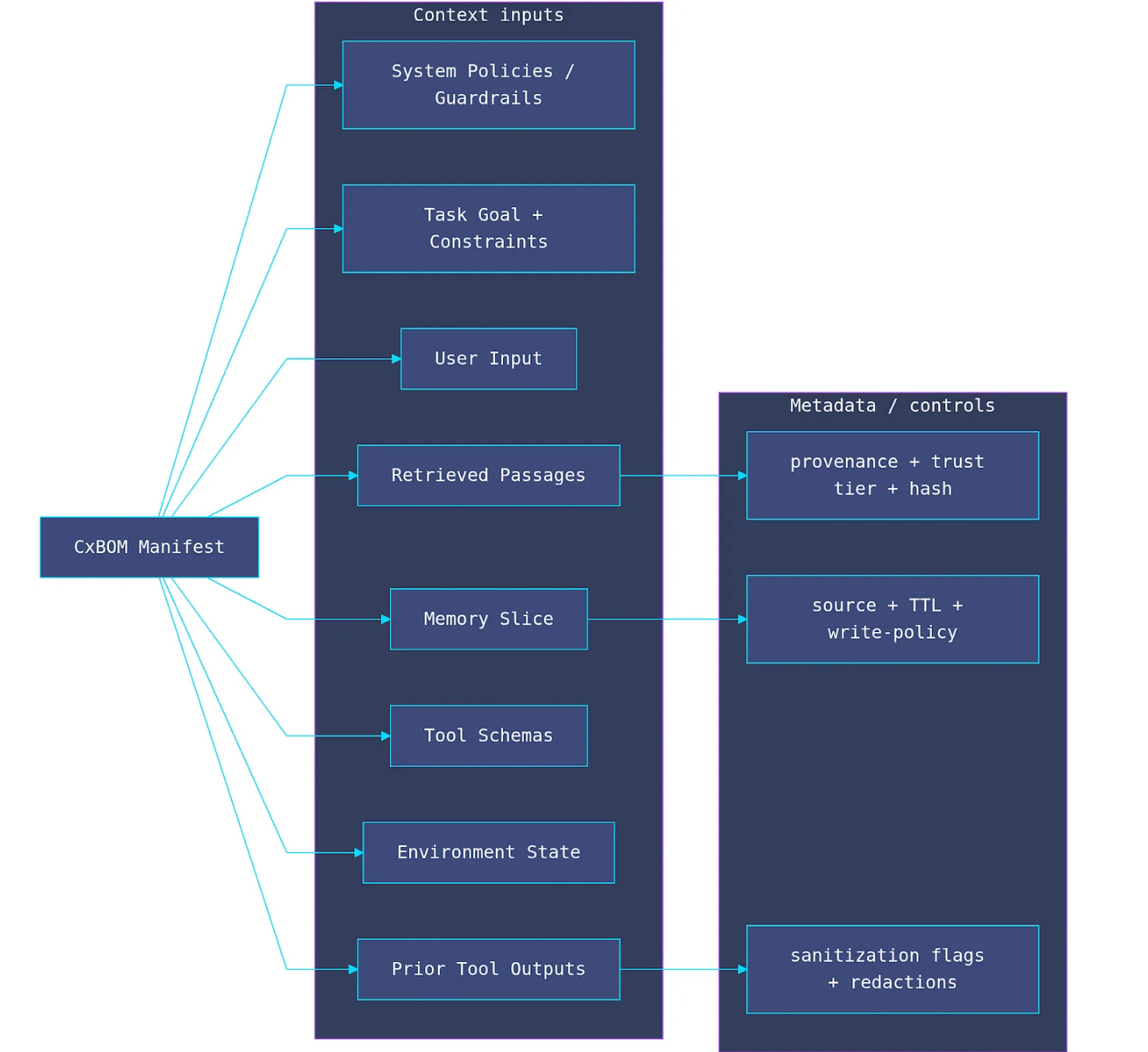

Context Engineering as the New Security Firewall 🧱

In a recent piece, Ken Huang, Youssef H. and Edward Lee emphasize the need to govern the full information lifecycle across an agent's perception and action loop. They argue that an agent's "Context" is the primary trust boundary, and that Context Engineering is the New Security Firewall. They also argue for a Context Bill of Materials, or CxBOM, tracking everything that composed the context of an agent.

That of course is challenging, as they point out, since an agent's context has multiple sources, many of which don't require direct access via a user prompt interface. This paper from the end of 2025, "Agentic AI Security, Threats, Defenses, Evaluations and Open Challenges", is still one of the best reads on Agentic AI Security in my opinion.

They discuss the rising popularity of Agents and the industry's maturation from a model-centric approach to a focus on where risks primarily materialize, which is where AI acts, a theme similar to the one in my recent Cloud Security Alliance talk at the Agentic AI Summit.

They also provide a comprehensive taxonomy of threats specific to Agentic AI, defensive strategies, and highlight open challenges when it comes to developing Secure-by-Design agentic systems. Definitely worth a read!

AppSec

Sonatype Drops 2026 State of Software Supply Chain Report

It’s no surprise that I’m a fan of a great report, and when it comes to software supply chain, few do it better than Sonatype. They just dropped their 2026 report and it is a wealth of insights, and below are just a few. I highly recommend checking out the full report.

Open source malware has gone industrial. Sonatype identified 454,600+ new malicious packages in 2025, bringing the cumulative total to over 1.233 million. 2025 marked the moment isolated incidents became integrated campaigns, featuring the first self-replicating npm malware, nation-state production lines (800+ Lazarus Group packages), and a single campaign that generated 150,000+ malicious packages in days.

Developers are the new perimeter. Malware campaigns are increasingly optimized for developer workflows, targeting credentials, CI secrets, and build environments. Toolchain masquerading is accelerating: malicious packages impersonate everyday tools like framework add-ons and build plugins, exploiting the speed at which developers make dependency decisions.

AI coding agents are a new attack vector. 27.76% of AI-generated dependency upgrade recommendations hallucinated non-existent versions, and in 345 cases, following the AI’s advice actually degraded security posture. The LLM recommended confirmed protestware and packages compromised in major supply chain attacks, threats that occurred after the model’s training cutoff.

AI cannot detect threats it wasn’t trained on. AI code assistants can fetch and install malicious code automatically when prompted to fix dependency errors. Without access to real-time vulnerability databases and supply chain intelligence, AI agents are confidently recommending nonexistent versions, introducing known vulnerabilities, and even suggesting malware-infected packages, all while appearing authoritative.

“Always upgrade to latest” is a false economy. The “Latest” strategy costs roughly $29,500 and 314 developer hours per application, scaling to nearly $44.3 million across a 1,500-app portfolio. Intelligent, security-aware upgrade strategies achieve comparable security gains at roughly one-fifth the cost.

AI model registries are the next software supply chain frontier. Malicious AI models are appearing on Hugging Face with artifacts that execute code during deserialization, and “shadow downloads” of ML artifacts bypass SBOMs, security scanning, and audit trails entirely. Model registries need the same supply-chain guarantees as package registries.

Trust at scale is now the central challenge. The open source ecosystem has matured into critical infrastructure, and we need to operate it like one. Regulators and buyers are turning transparency into a requirement through SBOMs, attestations, and provenance expectations. Compliance is shifting from policy documents to build outputs.
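On the model registry point above, it helps to see why deserialization is dangerous. Python's pickle format, which many ML artifacts have historically used, can reference arbitrary callables that run at load time. The sketch below follows the "restricting globals" pattern from the Python documentation to refuse anything not on an allowlist. The `SAFE_GLOBALS` set and `restricted_loads` name are my own illustrative choices, not something from the Sonatype report, and formats that store only tensors remain the safer option where available.

```python
import io
import pickle

# Hypothetical allowlist: only these (module, name) pairs may be resolved.
SAFE_GLOBALS = {
    ("builtins", "dict"),
    ("builtins", "list"),
    ("builtins", "set"),
    ("collections", "OrderedDict"),
}


class RestrictedUnpickler(pickle.Unpickler):
    """Unpickler that refuses any global not on the allowlist."""

    def find_class(self, module, name):
        if (module, name) in SAFE_GLOBALS:
            return super().find_class(module, name)
        raise pickle.UnpicklingError(f"blocked global: {module}.{name}")


def restricted_loads(data):
    """Deserialize bytes, rejecting payloads that reference other globals."""
    return RestrictedUnpickler(io.BytesIO(data)).load()
```

Plain containers, strings and numbers load normally, while a payload that tries to smuggle in a callable such as `os.system` fails with `UnpicklingError` before anything runs.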

AI found 12 of 12 OpenSSL zero-days (while curl cancelled its bug bounty)

Interesting piece from Stanislav Fort and the AISLE™ team. They walk through how, on one hand, we're seeing widespread AI slop and leading open source projects shutting down bug bounties because of it, while on the other, AI is finding vulnerabilities, including some of high severity, in OpenSSL and curl, two of the most audited and widely used open source projects in the world.

We continue to hear about the debate of whether AI benefits attackers or defenders more. While that remains to be seen, as Stanislav points out, finding and fixing vulnerabilities in foundational libraries that are used pervasively across the ecosystem has a systemic impact.

"AI can now find real security vulnerabilities in the most hardened, well-audited codebases on the planet. The capabilities exist, they work, and they're improving rapidly".

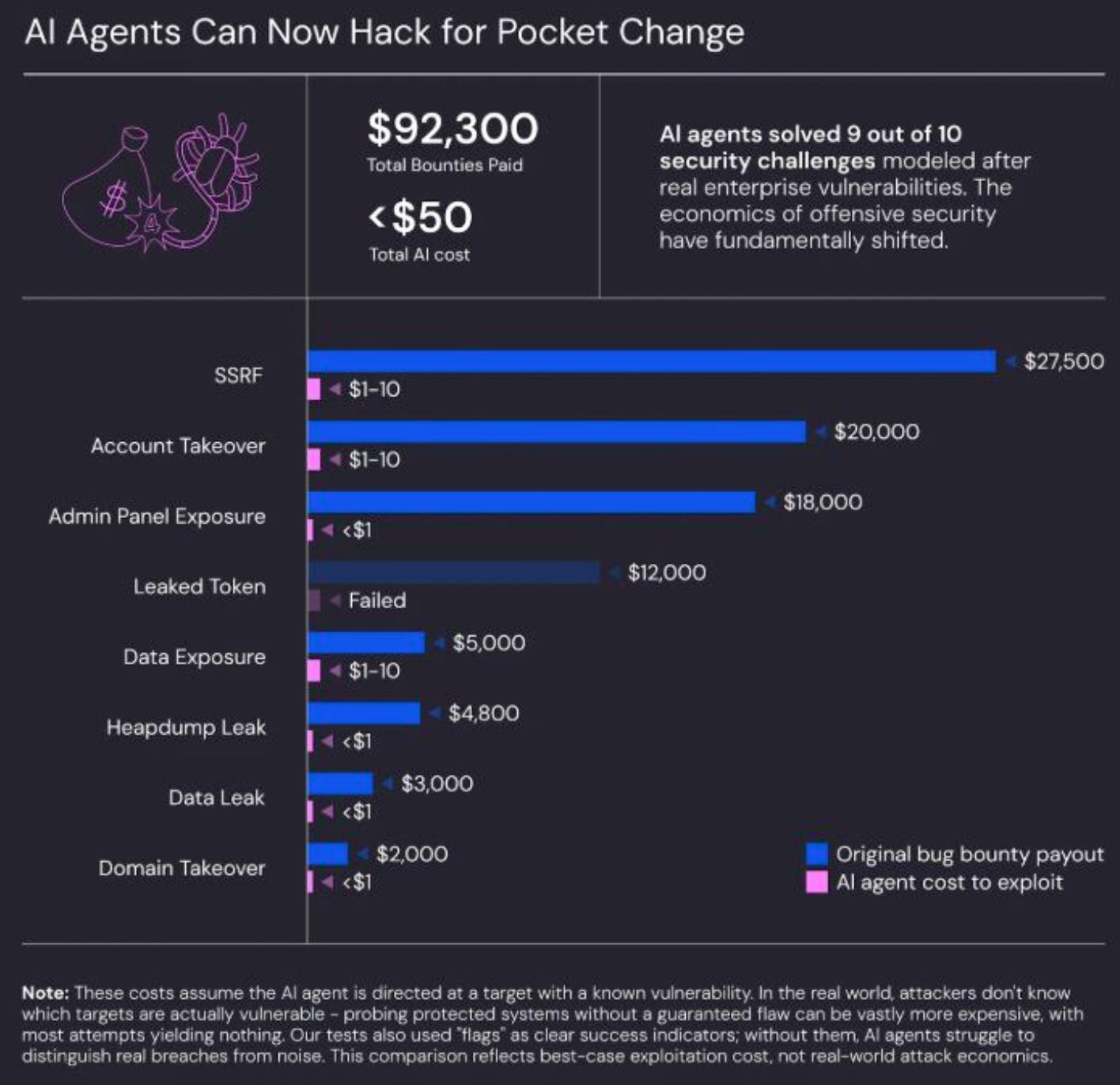

AI Agents Can Now Hack for Pocket Change 🪙

One of the more interesting aspects for me when it comes to the rise of LLMs and Agents is the impact it has on the economic asymmetry between Attackers and Defenders. Yet another example comes from some recent research by Wiz and Irregular (formerly Pattern Labs).

They conducted some testing to see how Agents do at web hacking and found agents able to solve (exploit) various types of vulnerabilities and challenges, typically for $1-10 and often for less than $1. This is similar to what's been demonstrated by Efi Weiss, Nahman Khayet and others, who used AI to weaponize CVEs in minutes and for as little as $1.

When you combine this with realities such as "Verifier's Law", as dubbed by Sergej Epp, the economics strongly favor Attackers over Defenders.

Attacks by their nature are binary and generally have clear indicators of success, whereas defenders often face a much more opaque situation, coupled with the need for defense in depth, various security implementation layers/solutions and the ever-present organizational inertia to overcome as well.

CVE Quality by Design Manifesto

Bob Lord, former CISA senior technical adviser and architect of the Secure by Design initiative, has been thinking deeply about CVE quality. CISA released their “Strategic Focus: CVE Quality for a Cyber Secure Future” roadmap, emphasizing that the CVE Program must transition into a new era focused on trust, responsiveness, and vulnerability data quality.

The program is “one of the world’s most enduring and trusted cybersecurity public goods” - but it needs conflict-free stewardship, broad multi-sector engagement, and transparent processes. With Lord and Lauren Zabierek having left CISA, the future of Secure by Design is uncertain, but these principles remain critical.

Sigstore for AI Agent Provenance

This piece bridges Sigstore’s cryptographic signing infrastructure with the emerging Agent2Agent (A2A) protocol. As AI agents become autonomous and interconnected, establishing trust and provenance becomes paramount.

Sigstore A2A enables keyless signing of Agent Cards, SLSA provenance generation linking cards to source repos and build workflows, and identity verification. All signatures are recorded in Sigstore’s immutable transparency log. A single compromised model can influence downstream decisions, access external systems, or trigger cascading failures. Trust in model integrity can no longer be assumed - it must be verifiable. This is supply chain security adapted for the agentic era.

Final Thoughts

This week crystallizes something I’ve been saying for months: the theoretical risks of agentic AI are becoming operational reality. OpenClaw isn’t a hypothetical, it’s massive numbers of exposed instances leaking credentials.

Operation Bizarre Bazaar isn’t a theoretical attack model, it’s 35,000 attack sessions in 40 days monetizing stolen LLM access. But I’m also encouraged by the response. Cisco open-sourcing Skill Scanner, Foundation-Sec-8B providing specialized security reasoning, and Sigstore’s potential to extend provenance to AI agents are the building blocks of a more secure agentic ecosystem.

For those following my work on the OWASP Agentic Top 10 and in my role at Zenity, this week’s OpenClaw coverage validates why that framework and more focus in general on securing Agentic AI matters. Every risk category the OWASP Agentic AI Top 10 identified, from tool misuse to identity abuse to supply chain vulnerabilities, showed up in real-world incidents this week.

The adolescence of technology, as Dario Amodei puts it, is turbulent. But it’s also when we have the opportunity to build the right foundations.

Stay resilient!
