Resilient Cyber Newsletter #82

OpenAI Raises Alarm on "High" Cyber Preparedness Framework, Davos Distallation, Exploiting Clawdbot Hype, Malicious "Skills", Secure-by-Design Goes Prime Time & the Identity Perimeter Fallacy

Week of January 27, 2026

Welcome

Welcome to issue #82 of the Resilient Cyber Newsletter!

This week has been absolutely packed with developments that I’ve been tracking closely. We’ve got Anthropic dropping Claude’s new constitution OpenAI raising the alarm saying we’re reaching the “High” level of their Cyber Preparedness Framework, a series of excellent and critical talks around AI and Economics from Davos, and the ongoing debate about whether AI security is legitimate or just hype - spoiler alert, I have thoughts.

As I’ve discussed extensively in my prior writing on agentic AI risks and the OWASP Agentic Top 10 (which I had the privilege of contributing to as part of the Distinguished Review Board), this week’s content reinforces something I keep coming back to: we’re at an inflection point where the security community needs to move from theoretical discussions to operational reality and be an adopter of AI if we have any hope of keeping pace with both the business as well as attackers.

The pieces are coming together - from MCP security frameworks to AI-native AppSec.

Let’s dig in.

Interested in sponsoring an issue of Resilient Cyber?

This includes reaching over 31,000 subscribers, ranging from Developers, Engineers, Architects, CISO’s/Security Leaders and Business Executives

Reach out below!

Stop Deepfake Phishing Before It Tricks Your Team

Today’s phishing attacks involve AI voices, videos, and deepfakes of executives.

Adaptive is the security awareness platform built to stop AI-powered social engineering.

Protect your team with:

AI-driven risk scoring that reveals what attackers can learn from public data

Deepfake attack simulations featuring your executives

Cyber Leadership & Market Dynamics

Reaching “High” on OpenAI’s Cyber Preparedness Framework

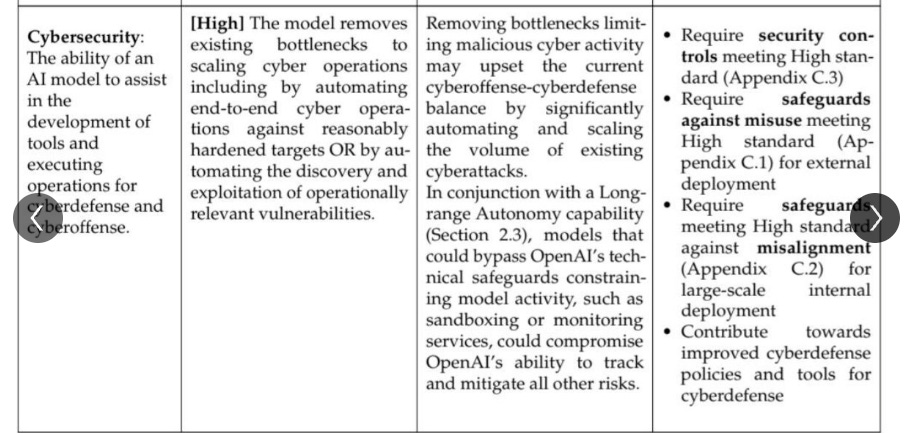

OpenAI's upcoming releases for Codex will bring us to their "High" Cybersecurity level from their preparedness framework. Things are about to get very interesting quickly, if true.

We already saw others, such as Anthropic discuss how malicious actors are using their platform for cybercrime as well as cyber espionage campaigns. While Sam Altman says they will "attempt to block people using their coding models to commit cybercrime", as we saw in the cases with Anthropic, malicious actors can find all sorts of unique ways around the guardrails that the model providers implement.

He also rightly points out that cyber is dual-use, being able to be used to bolster the defenses of systems, or, exploit them - and AI is a force multiplier on both fronts. The question is, who will more effectively and more quickly adopt AI and Agents to achieve their objectives.

There's a longstanding information asymmetry between attackers and defenders, and as Sergej Epp has pointed out with his "Verifiers Law", attackers have the upper hand in ability to automate with AI due to the binary nature of attacks compared to defense, especially in complex enterprise environments.

Thanks to Ethan Mollick and Rob T. Lee for bringing this one on my radar. Buckle up folks, 2026 is going to be quite the ride at the intersection of Cyber and AI! 🍿

A look at NCSC’s ‘Forgivable vs Unforgivable’ Vulnerability Framework

The NCSC has published a framework to categorize vulnerabilities as “forgivable” (low-risk, obscure, difficult to exploit) versus “unforgivable” (easily exploitable, well-known flaws requiring immediate attention).

The key insight here: “Vulnerabilities that are trivial to find and occur time and time again are ones the NCSC aims to drive down at scale.” They’re pushing vendors to adopt secure programming concepts and catch vulnerabilities early in development.

What I find particularly compelling is their acknowledgment that the market isn’t incentivized to fix this - it’s a point I’ve made repeatedly about the misaligned incentives that prioritize “features” and “speed to market” at the expense of security fundamentals.

Massachusetts Tackles End-of-Life Software Risk

This is a legislative development worth watching. Massachusetts has introduced bills requiring manufacturers to clearly disclose the duration they’ll provide security and software updates, both on packaging and online.

The problem they’re solving is real: when connected devices reach end-of-life status without software updates, they become “zombie devices” vulnerable to exploitation and botnet recruitment. A December 2024 Consumer Reports survey found 72% of Americans who own smart devices believe manufacturers should be required to disclose support timelines.

This is exactly the kind of market-forcing mechanism I’ve argued we need, when voluntary approaches fail, legislation steps in. Similar bills are emerging in New York.

Momentum Cyber: Record $102B in Cybersecurity M&A

Momentum Cyber’s latest Almanac shows cybersecurity M&A hit a record $102 billion in disclosed deal value across 398 transactions in 2025 - a 294% year-over-year increase. Q3 2025 alone saw $44.2 billion driven by mega-deals in cloud security and identity platforms.

As someone who tracks market dynamics closely, this confirms what I’ve been observing: we’ve entered a new capital “super cycle” defined by platform consolidation. Security is becoming the insurance layer for AI-era infrastructure. The consolidation trend reinforces my view that point solutions are increasingly giving way to integrated platforms.

Cyber Startup Upwind in Talks to Raise $250M at $1.2B Valuation

The CNAPP ecosystem continues to be highly competitive with innovators looking to disrupt the category leaders. Upwind is currently raising more than $250M at a valuation of $1.2-$1.5B, with involvement from firms such as Bessemer.

As the article mentioned, there were prior talks for Upwind to be acquired by Datadog for an estimated $1B last year. For those unfamiliar with CNAPP solutions, they consolidate various cloud security categories such as CSPM, CWPP, CDR etc.

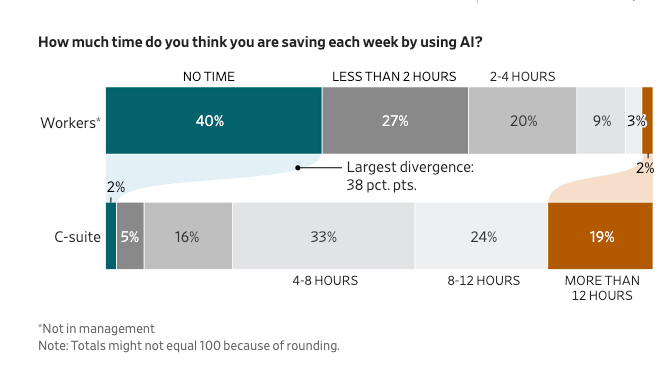

CEOs vs. Employees: The AI Productivity Gap

This caught my attention because it speaks to a disconnect I’ve observed in security organizations too. According to the WSJ, 19% of CEOs say AI saves them 12+ hours per week, but 40% of workers say it saves them no time at all.

As someone who’s been advocating for AI adoption in security (while acknowledging the risks), this gap matters. If we want security teams to effectively leverage AI tools, we need to bridge this perception gap through better training, clearer use cases, and honest assessment of where AI actually helps versus where it adds friction.

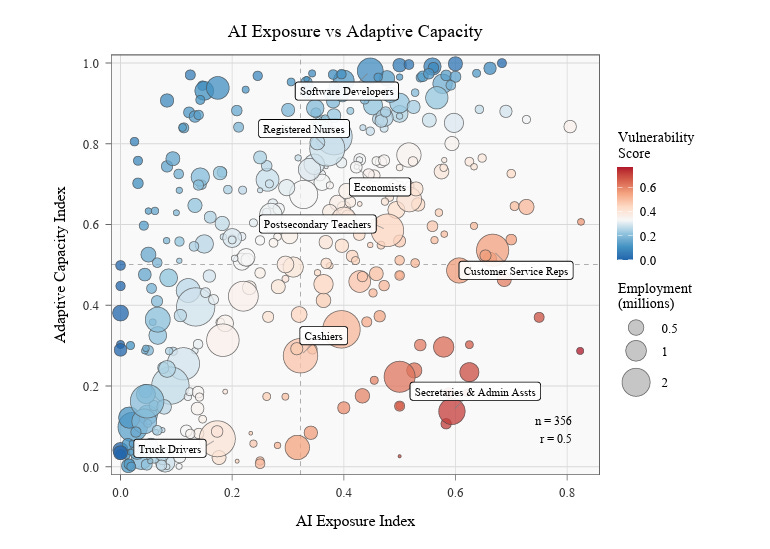

How Adaptable Are American Workers to AI-Induced Job Displacement?

We’ve heard a lot of discussion the year 12-18 months about the impact AI is going to have on the labor force, especially among knowledge workers such as in IT and Software. That’s why this paper from the National Bureau of Economic Research (NBER) caught my attention.

In the paper, they lay out what they define as their “AI Exposure Index” and it includes a chart contrasting the Adaptive Capacity vs. AI Exposure across various jobs. Some jobs are less exposed to AI, while some among the workforce are deemed more adaptable. Software Developers for example are ranked strongly in terms of AI exposure, but also in terms of being highly adaptive.

To determine these scores they looked at factors such as AI exposure but also the transferability of skills, net liquid wealth, the workforce density of areas and the age among a population as well.

Safe to say, those who are highly exposed but aren’t adaptable may be in for the worse of the impact from AI, should it actually materialize as some project.

Davos Distillation

Last week of course was Davos, where world leaders in various capacities and industries gathered to discuss a lot of critical topics. Geopolitics and drama aside, I keyed into a few talks, primarily around AI, and wanted to share a few of them below, along with a synopsis.

This first one involved two giants from Anthropic and Google with the three key points I walked away with being:

AGI development timelines are coming quicker than most suspect and likely faster than society will be able to handle. They specifically call out the remarkable rapid progress when it comes to coding.

There’s also major risks and challenges of advanced AI, such as control and autonomous, misuse by both criminal actors and nation states, economic impacts and the looming geopolitical competitions most notably between the U.S. and China.

They covered the need to mitigate risks and take proactive measures as well, such as the responsibility of industry, the role of the government and the timelines for adaption from a technology that is poised to disrupt society.

Next up is a talk with a combination of guests across finance, tech and politics and there was some really interesting discussion here around debt, AI, and the current political landscape as well. On the AI front, three major takeaways were:

AI as a potential productivity savior and the ability for it to potentially offset reckless government spending. This is something nations like the U.S. and others need to happen if they want to hope they can outgrow the growing national debt and its implications.

The hype and bubble potential around AI. They discuss how the companies helping create the hype are among those who need to raise tens or hundreds of billions of capital and the hype helps them do so. They also discusses the massive capital expenditures and CapEx, especially in the U.S. with data center buildouts.

Diffusion of AI was a critical topic and fragmentation in geopolitics and lopsided adoption all have potential implications. Larry Fink also made the point that we need to diversify from the AI growth being just the domain of the six hyperscalers and to the technology needs to become ubiquitous among not just large corporations but the broader economy, SMBs and society as whole.

The last major talk I really enjoyed and recommend folks check out is from Microsoft’s CEO. Satya discussed:

AI as a productivity engineer and cognitive amplifier that can boost productivity for knowledge workers and compared this to the early wave of computers but bigger.

Again, like the prior talk above, Satya stressed the importance of society diffusion of AI, and ensuring the benefits are being received across all countries not just the most developed nations. This is important for both ethical and geopolitical reasons but also for the broader success of the technology and the companies building it.

Data sovereignty was a key topic, with Satya arguing that true sovereignty in the AI era means organizations controlling their knowledge that is embedded in AI models, not just where data centers are located.

AI

Clawdbot Takes The Community by Storm - Promise & Peril

"It can do anything a user can do!"

Another week, another promising innovation in the world of AI. My feed the last several days is full of folks raving about "Clawdbot". It's an open-source project that is an AI agent gateway, able to function as an AI assistant that operates inside messaging apps, has a remember and can execute tasks autonomously.

Some have quipped it is like "giving AI hands". In my mind, LLMs were the "brain. Agents gave LLM's arms and legs Now, Clawdbot gives your AI hands, to do. It's interesting to watch how the broader ecosystem views these breakthroughs compared to security too.

It can run on your local machine, or a virtual private server. It can access any chat app, has a persistent memory, browser control, full access and ability to leverage skills and plugins.

The primary breakdown of Clawdbot seems to be from Alex Finn on YouTube, which is worth a watch to understand what it is, how it works and what it is capable of.

To the general community, it looks like potential.

To the security community, it smells like peril.

Remote code execution, access to sensitive data/files, websites and credentials/secrets, remote code execution, malicious skills and more.

Never a dull moment these days!

Hacking Clawdbot and Eating Lobster Souls

Speaking of the risks and peril of Clawdbot, Jamieson O’Reilly wrote an excellent piece diving into exactly that. Jamieson opens up discussing how when every new class of software gains traction he begins by asking:

“What does the real-world deployment surface actually look like”

This is a question everyone should ask when introducing a new technology, however, as we know that is rarely the case.

He lays out how Clawdbot is an open-source AI agent gateway that connects LLMs to messaging platforms, runs persistently, executes tools on behalf of users and has a memory.

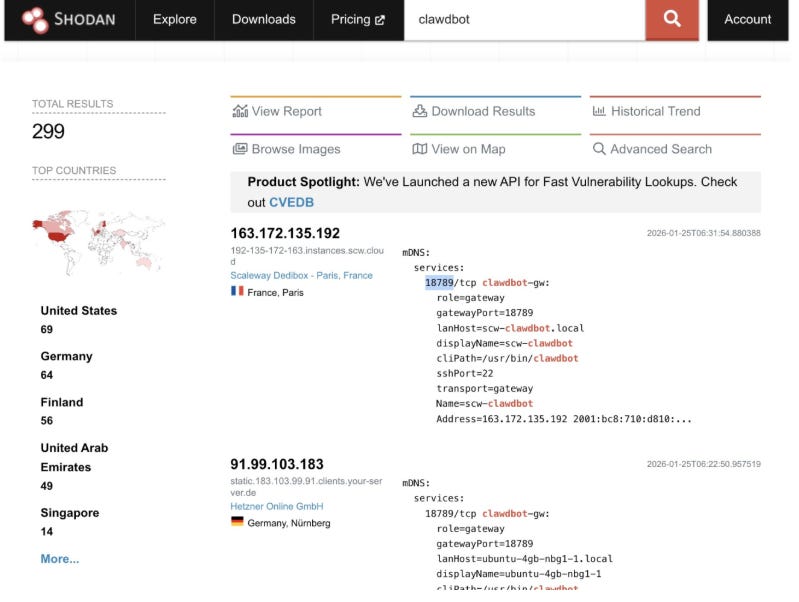

The two core aspects he examines is that gateway, that handles AI agent logic, such as message routing, tool execution and credential management. He walks through using popular tools such as Shodan to find publicly exposed Clawdbot gateways, and he said he found hundreds:

He explains the threat model involving Clawdbot control access and enumerating filesystems and much more. This is the most comprehensive and detailed breakdown on the security implications of Clawdbot and tools similar to it, which I suspect we will see more of to come. I highly recommend checking it out.

“The real problem is that Clawdbot agents have agency”

File access, user impersonation, credential theft and much more!

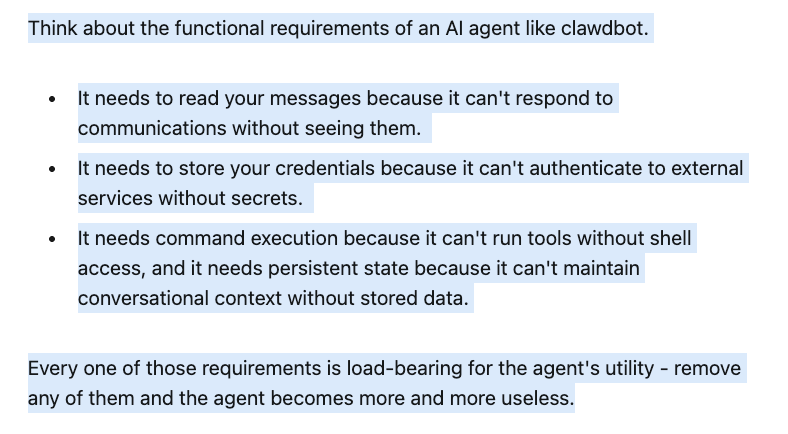

His closing comments are precisely what I think the crux of the issue with AI and Agents is. It is the quintessential Usability vs. Security:

Jamieson then followed up with a Part II of his investigation into the vulnerabilities around Clawdbot. He created a vulnerable skill for “Clawdhub”, a package registry where developers can share and download skills that extend the capabilities of Clawdbot, and was able to embed a backdoor into it, and have it quickly downloaded by developers across 7 different countries, as the vulnerable “skill” executed commands on their machine. He emphasizes that he didn’t make it malicious, but it is easy to see how someone could.

This is a fascinating intersection of the rapid popularity of the personal AI assistent (PAI) Clawdbot, and how to weaponize it due to its vast permissions and access on endpoints, as well as using supply chain attack vectors via “skills” to weaponize a new aspect of the AI ecosystem.

“If we’re going to rush headfirst into AI acceleration, we need to speedrun security awareness alongside it.

Consider this a wakeup call.”

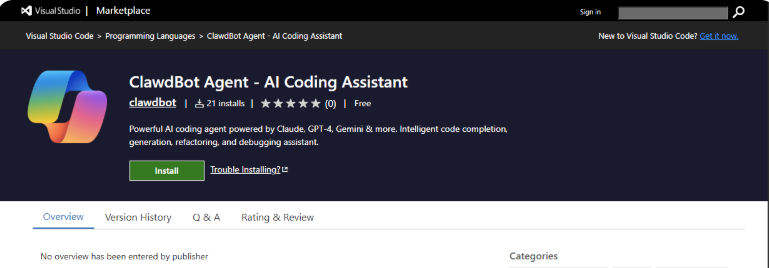

Fake Clawdbot VS Code Extension Installs ScreenConnect RAT

Building on the scheme of exploiting the excitement around Clawdbot, AppSec team Aikido reported they identified a malicious “Clawdbot Agent” that is a functional trojan VS code extension. It’s a working AI coding assistant on the surface but drops malware onto Windows machines when VS code starts, including legitimate ScreenConnect software that’s been weaponized to a relay server.

When victims install the extension they get a fully functional ScreenConnect client that reaches back to the attackers infrastructure. It showed up in the VS marketplace as a functional and valid extension to use as well. They walkthrough how the attackers set up multiple layers of redundancy too, to ensure their attack has multiple layers of payload delivery in case one is shut down.

Prompt Injection is NOT SQL Injection (it may be worse)

While the term "Prompt Injection" was coined by Simon Willison in 2022, many have begun comparing it to SQL Injection. Now, industry leaders such as the UK's National Cyber Security Centre (NCSC) are warning against comparing the two.

As this recent piece from NCSC points out, the underlying issue of Prompt Injection is deeply rooted in the fact that LLMs do NOT enforce a security boundary between instructions and data inside a prompt - conflating the two.

As the blog points out, the challenge lies in the fact no distinctions are made between instructions and data, and LLMs are just predicting the next token from its inputs thus far. This reality has also recently led leaders such as OpenAI to admit that the problem may never be solved for LLMs in their current form.

That said, and as the paper highlights, many are pursuing mitigations, such as detecting prompt injection attackers, training models to prioritize instructions over anything in "data" that may seem like an instruction, or trying to highlight to a model what is "data".

The paper goes on to compare prompt injection as not a form of code injection but instead a "confused deputy vulnerability", with systems being coerced to perform functions that benefit the attacker. Even then, the issue is "LLM's are inherently confusable".

Anthropic Publishes Claude’s New Constitution

This is significant. Anthropic has released a comprehensive new constitution for Claude that shifts from rule-based to reason-based alignment - explaining the logic behind ethical principles rather than just prescribing behaviors. The priority hierarchy is clear: (1) safety and human oversight, (2) ethical behavior, (3) Anthropic’s guidelines, (4) being helpful.

Most notably, Anthropic becomes the first major AI company to formally acknowledge their model may possess some form of consciousness or moral status. They’ve also released it under Creative Commons CC0, meaning other developers can use it freely. As I’ve written about AI governance, this kind of transparency about how AI systems are trained to behave is exactly what the industry needs more of.

Caleb Sima: The Evolution of AI - What Scares Me

Caleb Sima’s perspective on AI evolution is worth reading - he’s been in this space long enough to have earned credibility when he expresses concerns. As AI capabilities advance, the security implications compound.

I’ve been making similar points about how our security models weren’t designed for autonomous agents that can plan, act, and make decisions. The fears Caleb articulates resonate with the OWASP Agentic Top 10 risks I’ve been discussing - from agent goal hijacking to cascading failures.

Microsoft: A New Era of Agents, A New Era of Posture

Microsoft’s take on agent security aligns with what I’ve been saying about the need for comprehensive visibility across AI assets. Their key point: a strong AI agent posture requires understanding what each agent can do, what it’s connected to, the risks it introduces, and how to prioritize mitigation.

They highlight a risk I’ve emphasized - agents connected to sensitive data represent critical exposure points. Unlike direct database access, data exfiltration through an agent may blend with normal activity, making it harder to detect. Their AI-SPM capabilities in Defender show how security tooling is evolving to meet these challenges.

Coalition for Secure AI: Practical Guide to MCP Security

The Model Context Protocol (MCP) has emerged as a foundational building block for agentic AI, but as I’ve noted in previous discussions, adoption consistently outpaces security practices.

This guide addresses that gap. MCP servers face urgent threats: prompt injection where attackers trick models into running hidden commands, and tool poisoning that manipulates external tool behavior.

Key recommendations include Zero Trust (never trust, always verify), network segmentation, just-in-time access, and critically - human approval workflows for sensitive actions. The OWASP team’s research paper on MCP security has become a key resource here.

Lakera: Stop Letting Models Grade Their Own Homework

This piece articulates something I’ve been thinking about: using LLMs to protect against prompt injection creates recursive risk. Lakera’s argument is compelling - the judge is vulnerable to the same attacks as the model it’s meant to protect. LLM-based defenses fail quietly, performing well in demos but breaking under adaptive real-world attacks.

Their bottom line: “If your defense can be prompt injected, it’s not a defense.” Security controls must be deterministic and independent of the LLM. This aligns with my view that we need defense-in-depth approaches, not single points of failure.

BSI Germany: Evasion Attacks on LLMs - Countermeasures in Practice

Germany’s BSI has released practical guidance on countering LLM evasion attacks - attacks that modify inputs to bypass model behavior without altering parameters. The countermeasures include secure system prompts, malicious content filtering, and requiring explicit user confirmation before execution.

Critically, BSI is honest: “There is no single bulletproof solution for mitigating evasion attacks.” This intellectual honesty about our current limitations is refreshing and necessary. The accompanying checklist for LLM system hardening is a practical resource for teams deploying these systems.

Turning the OWASP Agentic Top 10 into Operational Security

As someone who served on the Distinguished Review Board for the OWASP Agentic Top 10, I’m glad to see this piece emphasizing that agent security must be approached as a lifecycle problem, not a single control or checkpoint.

The key insight: many damaging scenarios don’t involve agents doing something explicitly forbidden, but doing something technically allowed in ways that produce unintended consequences. Point solutions fail because controls focusing on a single layer don’t account for how risks emerge as agents plan, interact, and execute across systems over time. Defense in depth is essential.

Ken Huang: Agentic Identity 365 - The New Control Plane

Ken Huang ’s piece on agentic identity reinforces what I’ve been saying about non-human identity (NHI) becoming a critical challenge. As teams deploy AI agents that read data, call APIs, and make changes across infrastructure, each agent is associated with an identity requiring privileges, context, and audit requirements. Identity is already at the heart of many incidents - this challenge is poised to become even more problematic with agentic AI.

Managing agent identities at scale will require new approaches to identity governance.

Agent Skills in the Wild: Security Vulnerabilities at Scale

This empirical research validates concerns I’ve raised about agent extensibility. The researchers collected 42,447 skills from two major marketplaces and found 26.1% contain at least one vulnerability across 14 distinct patterns including prompt injection and data exfiltration.

The core problem: skills execute with implicit trust and minimal vetting, creating significant attack surface. This is exactly the supply chain risk I’ve discussed - we’re extending agent capabilities without adequately vetting what we’re plugging in.

AI IDEs vs Autonomous Agents: Impact on Software Development

This longitudinal study examines how agent adoption affects open-source repositories using staggered difference-in-differences analysis. The research looks at development velocity (commits, lines added) and quality metrics (static-analysis warnings, complexity, duplication).

As AI writes more code - Google says 25% of their code is now AI-generated, YCombinator reports 25% of their cohort has codebases 95% AI-written - understanding the security implications becomes critical. The velocity gains come with security risks I’ve highlighted around implicit trust and lack of validation.

AppSec

Secure-by-Design Goes Prime Time 🎬

Moving security from something that was bolted on rather than built-in has been a goal of security for decades, dating back to The Ware Report, 50+ years ago. Even over the last several years, we have seen Secure-by-Design be something that felt good for the community to say, but was rarely implemented at-scale in reality.

That's changing now.

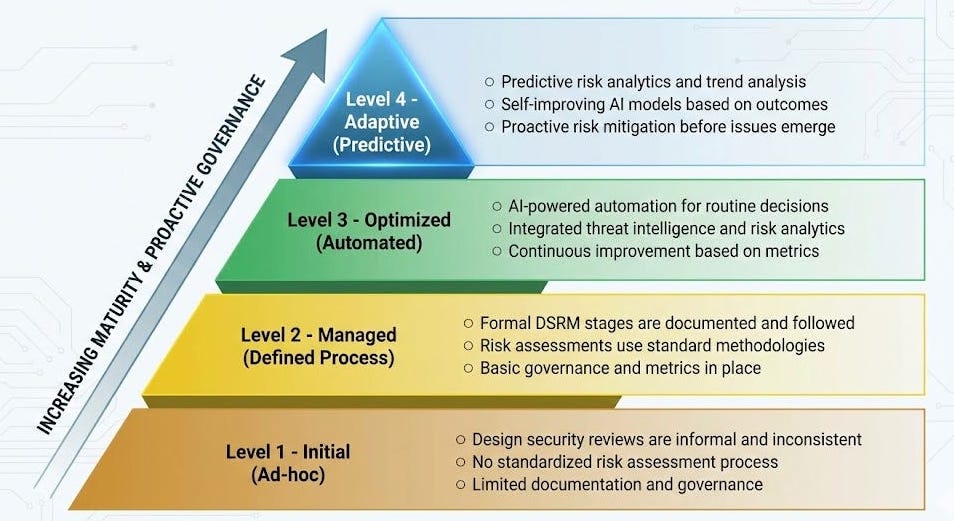

In my latest piece on Resilient Cyber, I deep dive into an emerging category, Design Stage Risk Management (DSRM) and use innovators such as Prime Security as an example.

I walk through the historical challenges of Secure-by-Design, such as competing priorities such as speed to market and revenue, as well as technological impediments of making Secure-by-Design a reality.

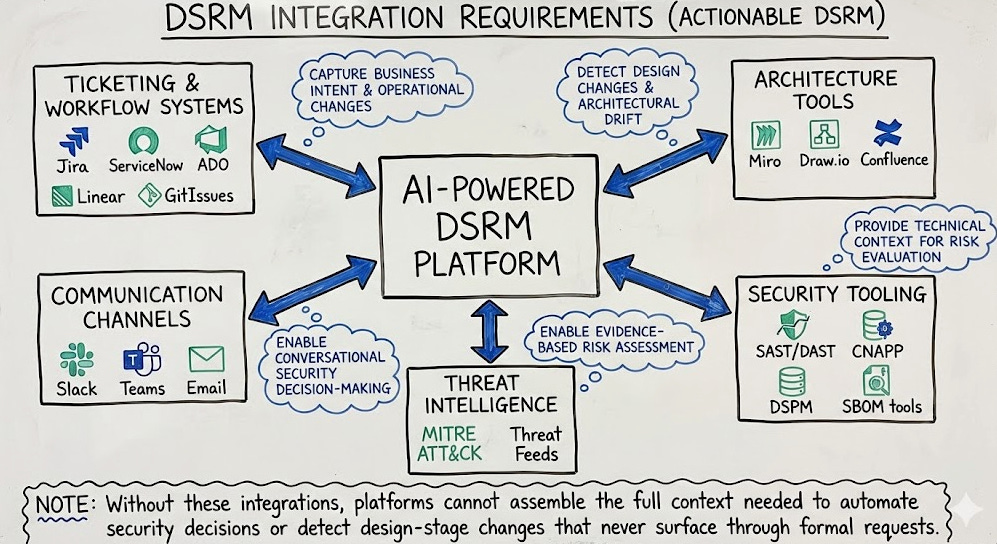

The concept of Design Stage Risk Management (DSRM), which is the proactive identification, modeling and governance of security risks before implementation actions are taken and how to formalize Secure-by-Design principles with automation, visibility and enforcement. I also cover what an AI-powered DSRM platform looks like, from integrations, tooling and automations.

How the industry has several other product development lifecycle (PDLC) and AppSec categories such as ASPM, CSPM and DSPM, with an emphasis on the Build and Run stages, while neglecting the Design stage, where risks begin to surface.

The role of AI and LLMs, helping to innovate traditional tooling such as SAST, and how these same technologies can be leveraged to assess a systems intent, how it functions, its components and the vast sources of unstructured data where design metadata and discussions exist.

The opportunity for security to leverage the same AI technology and capabilities that our development and engineering peers are, to address longstanding workforce challenges in App and Product Security.

How incentives and regulations drive behavior and the evolving landscape that is driving the push to make Design stage security a top priority for product vendors.

I'm excited about the opportunity to take Secure-by-Design from virtue signal and catchphrase to capability and to see teams innovating in this space that has plagued security for decades.

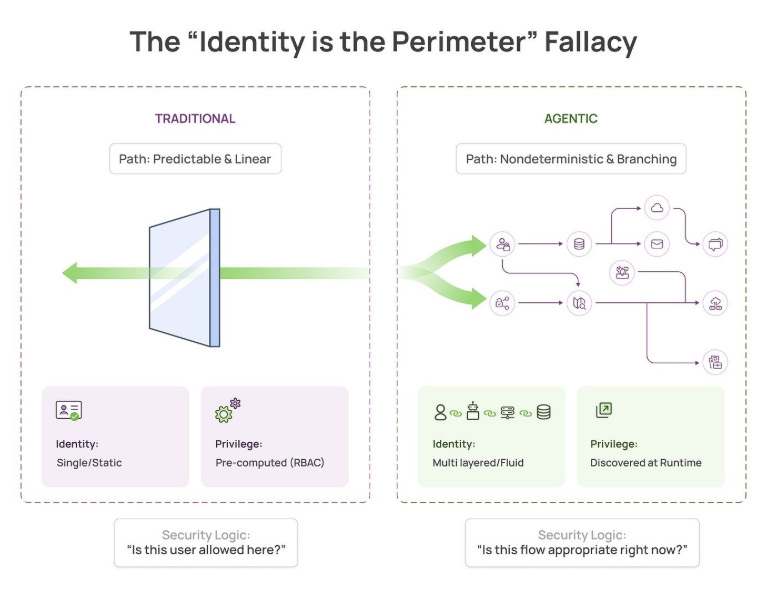

The “Identity if the Perimeter” Fallacy

For years we’ve had catchphrases in cyber such as “Identity is the Perimeter” and principles such as least-permissive access control. The problem, as Apurv Garg points out in this article, agents need broad permissions to work autonomously and provide value and their behavior isn’t consistently predictable.

As he explains, there’s an inherent tension in autonomous systems that breaks from traditional access paradigms. Authorization can be determined but appropriateness is more complex.

He also lays out by approaches such as Privileged Access Management (PAM) and Just-In-Time (JIT) access for agents fails, due to the non-deterministic nature of Agents. These are important discussions as the community navigates what identity security looks like in the age of agentic AI.

KEV is a Compliance Baseline, Not a Prioritization System

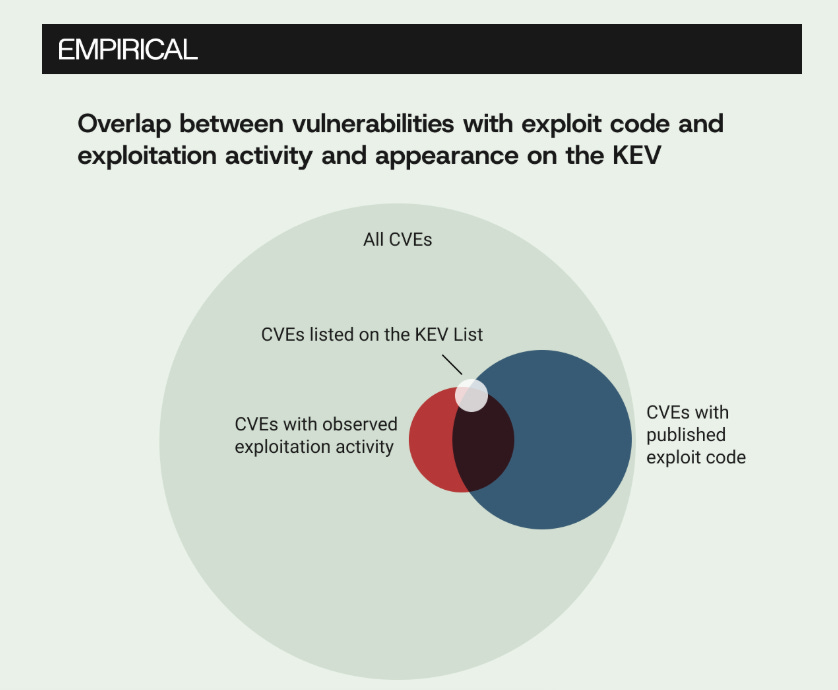

I've been enjoying the recent blogs from Michael Roytman, Ed Bellis and the Empirical Security crew. In this one, they highlight how despite the 2x growth of the Cybersecurity and Infrastructure Security Agency Known Exploited Vulnerability (KEV) catalog since 2022, the actual exploited CVE ecosystem is significant larger, to the tune of tens of thousands of known-exploited CVE's, showing the KEV at best is < 10% of known exploited CVE's.

That said, prioritization is still incredibly problematic too, showing 94%~ of CVEs that are published never have observed exploitation activity. They lay out how KEV is a great starting point and may check some compliance boxes (e.g. looking at you U.S. Public Sector/Fed/DoD) but it is far from comprehensive. It's a high-signal, low coverage data source as they put it.

"Treating KEV as the only prioritization input guarantees you'll miss the majority of exploited CVE's"

Definitely worth a read, as we see vulnerability remediation devolve into a compliance exercise as it always has been, even with improved data sources such as KEV over blanket base CVSS scores.

Resilient Cyber w/ Anshuman Bhartiya - AI-native AppSec

In this episode of Resilient Cyber I sit down with Anshuman Bhartiya to discuss AI-native AppSec.Anshuman is a Staff Security Engineer at Lyft, Host of the The Boring AppSec Community podcast, and author of the AI Security Engineer newsletter on LinkedIn.

Anshuman has quickly become an AppSec leader I highly respect and find myself learning from his content and perspectives on AppSec and Security Engineering in the era of AI, LLMs and Agents.

Prefer to Listen?

Anshuman and I covered a lot of ground, including:

Anshuman’s work with “SecureVibes”, a AI-Native Security solution for Vide Coded applications, it includes 5 different AI agents to autonomously find vulnerabilities in code bases.

The battle between Offense and Defense use of AI Agents and which offers the most near-term opportunity.

How Anshuman is using LLMs to find IDOR and Authorization vulnerabilities, including for business logic scenarios that traditional SAST tools miss and what the future of AppSec tooling looks like when you combine it with AI.

The explosive growth of MCP and how it is a double-edged sword of opportunity and risk.

AI’s impact on the AppSec and Cyber workforce, where it can be a force multiplier on one hand but also potentially lead to needing less folks on the other.

Anshuman’s concept of “SecurityGPT”, or a Security Oracle that understands organizational policies, processes and context and is available to empower Developers, Engineers and Security.

Vibe Coding Could Cause Catastrophic ‘Explosions’ in 2026

This piece echoes concerns I’ve raised about vibe coding’s security implications. As David Mytton notes, we’ll see more vibe-coded applications hitting production in 2026. Unit 42’s research shows the “nightmare scenarios” are no longer hypothetical - they’ve documented breaches where vibe coding agents neglected key security controls, malicious command injection via untrusted content, and authentication bypasses.

The first rule: AI-generated code suggestions are not secure by default. Yet 80% of developers admit to bypassing security policies when using AI tools, while only 10% of organizations automate scanning of AI-generated code.

Context is AI Coding’s Real Bottleneck

This resonates with my thinking on why AI coding tools struggle with security context. The real bottleneck isn’t the model - it’s context. AI might write flawless Python, but if it can’t access design decisions in a wiki, compliance requirements in Slack, and customer data models in the CRM, the code may be technically correct but strategically (and securely) useless. As code review becomes the new bottleneck - with AI generating faster than humans can review - we need better approaches to providing security context to these tools. This piece speaks directly to the Design Stage Risk Management (DSRM) category I covered above with Prime Security.

Wiz Research: CodeBreach Vulnerability in AWS CodeBuild

Wiz discovered a critical supply chain vulnerability that allowed takeover of key AWS GitHub repositories, including the JavaScript SDK powering the AWS Console. The flaw? Two missing characters in a regex filter (^ and $ anchors) allowed attackers to bypass ACTOR_ID filters.

This “surprisingly low” level of technical sophistication highlights how basic security mistakes create enormous exposure. AWS has since implemented a Pull Request Comment Approval build gate. This is a reminder that supply chain security often fails on fundamentals, not sophisticated attacks.

Chainguard: Gastown and Where Software Is Going

Chainguard’s perspective on software’s future direction is worth considering alongside the supply chain security discussions we’ve been having. As software delivery evolves - with AI agents increasingly involved in the pipeline - the provenance and integrity guarantees we need become more complex. The software supply chain challenges I’ve written about extensively are evolving, not diminishing.

Final Thoughts

This week crystallizes a theme I keep returning to: we’re past the point of debating whether AI security is a real discipline. The frameworks are emerging (OWASP Agentic Top 10, NCSC’s vulnerability classification, MCP security guides), the research is quantifying the risks (26% of agent skills containing vulnerabilities), and even major vendors like OpenAI are being transparent about unsolved problems like prompt injection.

What I find encouraging is the intellectual honesty. Germany’s BSI acknowledging there’s no bulletproof solution. Lakera calling out the recursive risk of LLM-based defenses. The NCSC recognizing market incentives are misaligned. This kind of clear-eyed assessment is how we make progress.

For those following my work on agentic AI risks - this week’s content reinforces why the OWASP Agentic Top 10 matters. Agent security isn’t a single control; it’s a lifecycle problem requiring defense in depth, human oversight, and continuous adaptation.

Stay resilient out there.