Resilient Cyber Newsletter #81

Tech and National Security Collide, 2025 Cyber Company Growth, Prompt Injection Unsolvable, Industrialization of Exploit Generation, AI-Code Security Anti-Patterns and Path to 1M CVEs

Welcome

Welcome to issue #81 of the Resilient Cyber Newsletter.

It’s truly hard to believe January is almost over already. I’ve got a lot of great resources and discussions to share this week, including the intersection of tech and national security, what growth looked like for some of the hottest cyber companies in 2025, the impact of AI and LLMs on coding and exploit generation, and our path to 1M CVEs.

So let’s get down to business!

CSA AI Summit 2026

Just a quick note, I’ll be speaking at the CSA AI Summit next week, giving a talk titled “Securing AI Where It Acts: Why Agents Now Define AI Risk”. You can find my abstract below. Be sure to check out the event and my talk if you’re interested in AI and Agentic AI Security!

Abstract: In this session we will walk through the evolution from LLMs and GenAI to Agentic AI and how this new paradigm amplifies both the risks and the attack surface of AI adoption. We will also provide actionable recommendations and takeaways for organizations to enable secure agentic AI adoption.

If you can’t make the event, I also wrote a blog capturing my key topics of discussion and takeaways. Check it out: “Securing AI Where It Acts: Why Agents Now Define AI Risk”.

Interested in sponsoring an issue of Resilient Cyber?

This includes reaching over 31,000 subscribers, ranging from Developers, Engineers, and Architects to CISOs/Security Leaders and Business Executives.

Reach out below!

Cyber Leadership & Market Dynamics 📊

Aikido Security Raises $60M at $1B Valuation

Belgian application security startup Aikido Security has reached unicorn status, raising $60 million in Series C funding at a $1 billion valuation. The round was led by Singular with participation from Bain Capital Ventures, Berkley SB, and European VCs. CEO Willem Delbare highlighted their focus on developer-centric security, moving away from legacy tools that are expensive and hard to deploy.

Founded in 2022, Aikido has grown to over 10,000 customers, including notable names like MasterCard, Toyota, and Remitly, with ARR increasing fivefold in 2024.

Tech and National Security Continue to Collide

The worlds of tech and national security continue to collide. Last week we saw U.S. cyber stocks fall pre-market after China banned U.S. cyber software. This came amid accusations of hacking and cyberwarfare from China, and it impacted the U.S. firms that were banned, such as:

CrowdStrike

Palo Alto Networks

Fortinet

Wiz

Check Point

And others. This is driven in part by Chinese efforts to avoid potential U.S. risks tied to cyber, but also to bolster China’s own domestic tech and cyber capabilities rather than relying on foreign tech. The U.S. is pursuing a similar approach in some key sectors, such as domestic buildouts to limit reliance on Taiwanese semiconductor giants such as TSMC.

Now, news this week broke that Brussels is moving to bar Chinese tech suppliers from the EU’s critical infrastructure.

2025 Cyber Company Growth

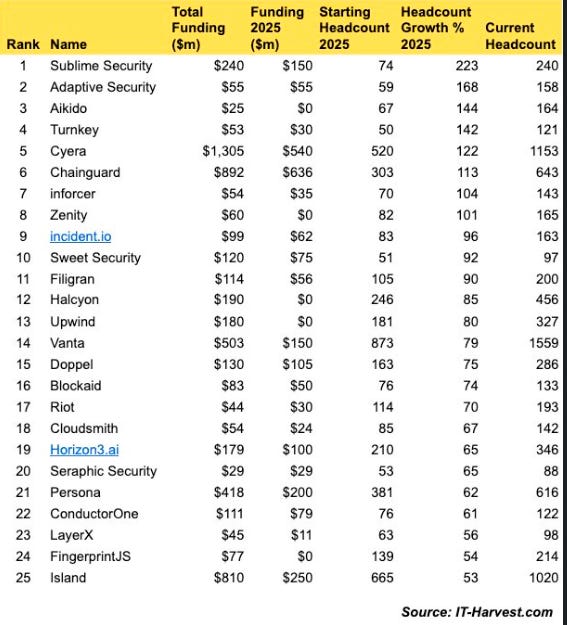

It is often insightful to take a look at how cyber companies are growing YoY. I recently came across this snapshot from Richard Stiennon that showed the top 25 based on total funding, funding in 2025, starting and end of year headcount/headcount growth etc.

You’ll notice many familiar names, including Aikido, who as I mentioned above just announced a new funding round, various Resilient Cyber partners, and Zenity, the team I joined full time as the VP of Security Strategy to kick off 2026, where we focus on Agentic AI Security.

As some in the comments of the post point out, headcount growth doesn’t necessarily correlate to revenue growth, especially in the age of AI, where there’s an emphasis on revenue and margin growth over pure headcount, which of course is an expense. That said, headcount growth can sometimes be a signal of a firm’s success and growing revenues, but not always, as we all know.


AI Is Making Output Per Person the Real Scaling Metric

Speaking of headcount growth, I recently came across this post from CTech interviewing VCs from lool ventures, who emphasized that output per person, rather than headcount, is now the real scaling metric.

“In software-heavy companies, AI is making output per person the real scaling metric; headcount growth is now often a lagging indicator (or even a sign you haven’t redesigned the workflow),” said Lee Ben-Gal and Haim Bachar, Investors at lool ventures.

The speakers discussed how in Israel, high-tech employment is flat but high-tech exports hit record levels, and they anticipate 2026 will be an “all-time record year for exits/M&A value”.

SACR Cybersecurity 2026 Outlook

My friend Francis Odum and his team just dropped their Software Analyst's annual cybersecurity outlook. It predicts M&A activity could reach $96B in 2026, driven by platform consolidation and private equity activity.

Key themes include AI-native security platforms gaining ground against legacy vendors, continued consolidation in endpoint and identity markets, and the rise of 'security platforms' over point solutions.

The report notes that cybersecurity spending will remain resilient despite broader tech budget pressures, with cloud security and identity management leading growth areas.

I like to keep an eye on what my fellow cyber analysts think, and, often unsurprisingly, their predictions and thoughts align with my own. For example, Francis and team discuss the rise of and emphasis on runtime, and I recently wrote a piece titled “AppSec’s Runtime Revolution” where I took a look at the rise of Cloud Application Detection & Response (CADR) and used Oligo Security as a case study for the topic.

AI's Trillion-Dollar Opportunity: Context Graphs

Foundation Capital argues that the next trillion-dollar platforms won't come from adding AI to existing data, but from capturing 'decision traces' - the exceptions, overrides, precedents, and cross-system context that currently live in Slack threads and people's heads.

They introduce the concept of a 'context graph': a living record of decision traces stitched across entities and time so precedent becomes searchable. Key insight: Agents don't just need rules; they need access to decision traces showing how rules were applied, where exceptions were granted, and how conflicts were resolved.
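To make the idea of a context graph more concrete, here is a minimal Python sketch of how decision traces could be recorded and searched for precedent. It is only an illustration under my own assumptions: the `DecisionTrace` and `ContextGraph` names, their fields, and the outcome labels are hypothetical and do not come from the Foundation Capital piece.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass(frozen=True)
class DecisionTrace:
    """One recorded decision: which rule was in play and how it was resolved."""
    entity: str      # hypothetical: the customer or account the decision concerned
    rule: str        # hypothetical: the policy or rule being applied
    outcome: str     # hypothetical labels: "applied", "exception", or "override"
    rationale: str   # why the decision went the way it did
    timestamp: int   # simple ordering key, e.g. a Unix timestamp


@dataclass
class ContextGraph:
    """Indexes traces by entity and by rule so precedent becomes searchable."""
    by_entity: dict = field(default_factory=lambda: defaultdict(list))
    by_rule: dict = field(default_factory=lambda: defaultdict(list))

    def record(self, trace: DecisionTrace) -> None:
        # Store the same trace under both indexes.
        self.by_entity[trace.entity].append(trace)
        self.by_rule[trace.rule].append(trace)

    def precedents(self, rule: str, outcome: str = "exception") -> list:
        """Return past traces for a rule with the given outcome, newest first."""
        matches = [t for t in self.by_rule[rule] if t.outcome == outcome]
        return sorted(matches, key=lambda t: t.timestamp, reverse=True)
```

An agent deciding whether to grant an exception could call `precedents("some-rule")` and read the rationales of earlier exceptions rather than relying on the rule text alone.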

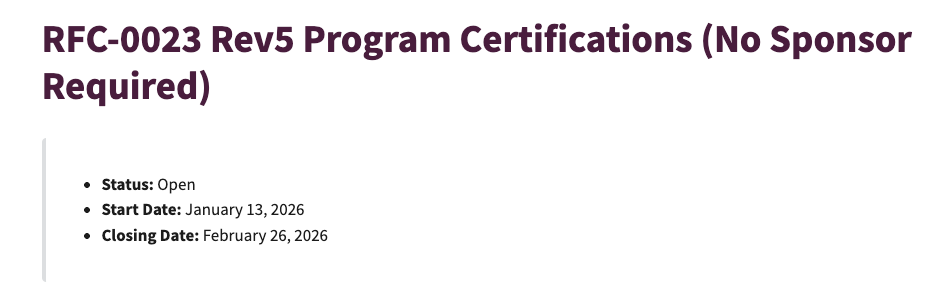

FedRAMP Considers a No Sponsor Path

You’ve likely seen me discuss the major changes that the U.S. Government’s primary Cloud Security/Compliance program, FedRAMP, is undergoing. The latest, RFC-0023, got a lot of attention because it opens the door for CSPs to pursue FedRAMP potentially without requiring a sponsor.

Having a sponsor has historically been a major impediment for software vendors looking to become FedRAMP authorized, especially SMBs who lack resources or direct personal connections with Federal agencies.

This RFC would allow a path that doesn’t require a sponsor, but it is specifically for CSPs who have completed a significant portion of the FedRAMP Rev5 controls and are lacking a sponsor due to budgetary constraints.

If this passes and becomes formal, it could allow agencies much broader access to innovative software solutions and also remove one of the primary impediments for vendors looking to sell to the U.S. government.

AI

OpenAI Says Prompt Injection May Never Be ‘Solved’ for Browser Agents

OpenAI has issued a stark warning about prompt injection attacks being a 'central security risk' for AI browser agents like ChatGPT Atlas. The company recently shipped a security update after internal automated red-teaming uncovered a new class of prompt-injection attacks.

OpenAI built an automated attacker using LLMs trained with reinforcement learning to discover weaknesses. A demonstration showed how a malicious email could direct an agent to send a resignation letter instead of an out-of-office reply. The UK National Cyber Security Centre echoed these concerns, warning that prompt-injection attacks may never be fully mitigated.

AI Security Isn’t Bullshit

David Campbell offers a counterpoint to a recent piece by Sander Schulhoff who argued AI security is bullshit. David counters that by arguing that AI security represents a genuine paradigm shift requiring new approaches. He highlights real threats including prompt injection attacks, model poisoning, and data exfiltration through AI systems.

Campbell argues that while skepticism is healthy, dismissing the entire field ignores demonstrated vulnerabilities in production AI systems. He emphasizes that traditional security tools were not designed for the probabilistic, non-deterministic nature of AI systems.

The key theme from David is that we’re focusing on the wrong thing. Most of the discussion of AI security has been model-centric, rather than focused on agents, tools, architectures, etc. Agentic AI changes that with tool use and the ability to act autonomously. As he argues, we’re securing the mouth rather than the hands, and that is backwards from how we should approach AI security.

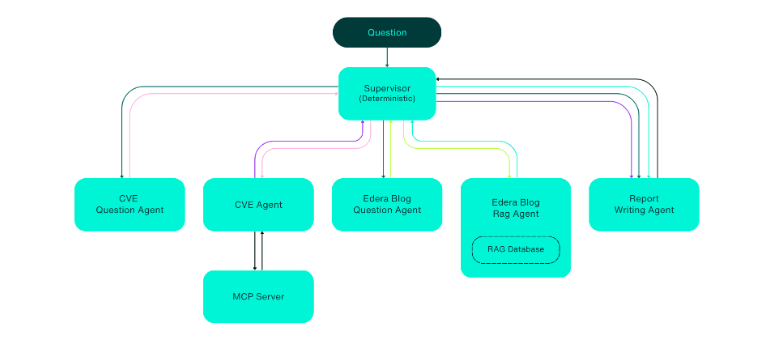

Performant Isolation for Secure AI Agents

Edera has published research on securing AI agents without sacrificing performance. The key insight: running AI agents directly on shared machines or in shared-kernel container environments like Docker poses substantial security risks. AI agents can inadvertently or maliciously run harmful commands, exfiltrate API keys, or expose confidential information.

Edera proposes isolated execution environments where each agent operates in its own isolated zone without access to the host OS. Their research shows that running agents in Edera can actually be faster than Docker while providing strong isolation.

Demystifying Evals for AI Agents

Anthropic has published a comprehensive guide on evaluating AI agents. Key insights include: Good evaluations help teams ship AI agents more confidently by making problems visible before they affect users.

The guide covers three types of graders (code-based, model-based, and human), the distinction between capability evals and regression evals, and specific techniques for evaluating coding agents, conversational agents, research agents, and computer use agents. Critical advice: start with 20-50 simple tasks drawn from real failures, write unambiguous tasks with reference solutions, and build balanced problem sets.
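As a rough illustration of the code-based grader idea, the sketch below runs a small task set through an agent and reports the pass rate. It is a minimal sketch under my own assumptions, not Anthropic's tooling: `EvalTask`, `exact_match_grader`, and `run_evals` are hypothetical names, and the agent is assumed to be any callable that maps a prompt string to an output string.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalTask:
    task_id: str
    prompt: str
    reference: str  # reference solution the grader compares against


def exact_match_grader(output: str, reference: str) -> bool:
    """Code-based grader: passes when the normalized strings are equal."""
    return output.strip().lower() == reference.strip().lower()


def run_evals(agent: Callable[[str], str], tasks: list[EvalTask]) -> dict:
    """Run every task through the agent and report the pass rate and failures."""
    failures = [
        t.task_id
        for t in tasks
        if not exact_match_grader(agent(t.prompt), t.reference)
    ]
    passed = len(tasks) - len(failures)
    return {
        "pass_rate": passed / len(tasks) if tasks else 0.0,
        "failures": failures,
    }
```

Keeping the failing task IDs in the result makes it easy to reuse the same task set as a regression suite: any ID that appears in `failures` after a change is a problem made visible before users see it.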

AI Red Teaming Explained by AI Red Teamers

When we look at the space of AI security, one of the hottest niches is “AI Red Teaming”. However, ask 5 people what that means and you may get 5 different answers. I enjoyed this HackerOne interview with Luke Stephens and commentary from Joseph Thacker, whose work I’ve cited before, clearing up what AI Red Teaming actually is.

AI red teaming is the practice of attacking systems and applications that include AI components in order to identify real security weaknesses. The focus is not on the AI model in isolation, but on how AI is embedded into products and how those features behave under adversarial use.

They do a great job walking through what AI Red Teaming is, and what it isn’t.

What Is Agentic SOC and Why the Name Barely Matters

Blink Ops explores the emerging concept of the Agentic SOC - a security operations center augmented by AI agents that can autonomously investigate, triage, and respond to threats.

The article argues that while the terminology (Agentic SOC, AI SOC, SOC AI) varies, the underlying shift is what matters: moving from humans using tools to humans supervising agents that use tools. Key considerations include trust boundaries, escalation policies, and maintaining human oversight for high-stakes decisions.
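One way to picture an escalation policy is as a small routing function that sits between an agent's proposed action and its execution. The sketch below is a minimal illustration under my own assumptions: the action names, the confidence field, and the 0.8 threshold are hypothetical and are not taken from the Blink Ops article.

```python
from dataclasses import dataclass

# Hypothetical action names; a real SOC would define its own catalog.
HIGH_STAKES_ACTIONS = {"isolate_host", "disable_account", "block_ip_range"}


@dataclass
class ProposedAction:
    name: str
    confidence: float  # assumed: the agent's self-reported confidence, 0.0 to 1.0


def route(action: ProposedAction, min_confidence: float = 0.8) -> str:
    """Decide whether an agent may act alone or must escalate to a human."""
    if action.name in HIGH_STAKES_ACTIONS:
        return "human_approval"  # high-stakes actions are always supervised
    if action.confidence < min_confidence:
        return "human_review"    # low confidence: an analyst takes a look
    return "auto_execute"        # routine and confident: the agent proceeds
```

The high-stakes check comes first on purpose, so a very confident agent still cannot isolate a host or disable an account without a human in the loop.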

Rethinking SOC Capacity: How AI Changes the Human Cost Curve

Prophet Security examines how AI fundamentally changes the economics of security operations. Traditional SOC scaling has been linear: more alerts require more analysts. AI changes this by enabling a hybrid model where AI handles initial triage and investigation while humans focus on complex decisions and response.

The article proposes a new capacity model where AI handles 80-90% of initial alert processing, allowing human analysts to focus on the 10-20% requiring judgment and context that AI cannot reliably provide.
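To see what the 80-90% split does to staffing, here is a back-of-the-envelope Python sketch. The alert volume and per-analyst throughput used in the usage note are hypothetical numbers chosen only for illustration, and `analysts_needed` is my own helper rather than anything from the Prophet Security article.

```python
def ceil_div(n: int, d: int) -> int:
    """Integer division rounded up, avoiding floating-point error."""
    return -(-n // d)


def analysts_needed(alerts_per_day: int, ai_percent: int, alerts_per_analyst: int) -> int:
    """Analysts required for the alerts left over after AI triage.

    ai_percent is the share of alerts the AI handles, as a whole percentage.
    """
    if not 0 <= ai_percent <= 100:
        raise ValueError("ai_percent must be between 0 and 100")
    if alerts_per_analyst <= 0:
        raise ValueError("alerts_per_analyst must be positive")
    # Alerts that still need a human, rounded up to a whole alert.
    human_alerts = ceil_div(alerts_per_day * (100 - ai_percent), 100)
    return ceil_div(human_alerts, alerts_per_analyst)
```

With an assumed 1,000 alerts per day and 25 alerts per analyst per day, `analysts_needed(1000, 0, 25)` gives 40 analysts with no AI triage, `analysts_needed(1000, 80, 25)` gives 8, and `analysts_needed(1000, 90, 25)` gives 4, which is the non-linear cost curve the article describes.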

MCP Dominates Even as Security Risk Rises

While MCP continues to see widespread adoption and formalization within the ecosystem, including Anthropic transferring ownership of MCP to The Linux Foundation, this piece highlights lingering security concerns. This includes CISOs discussing how, with LLMs, the risks were related to potentially providing the wrong answer, or damaging brand reputation by doing so.

With the introduction of protocols such as MCP and agents, systems don’t just think, but now act, interacting with enterprise systems, data, resources and more. All of this means the risks are now much more significant. MCP and agents have significantly expanded the attack surface related to AI and enterprise environments, and security is catching on to this reality. I had previously shared an excellent report from Clutch Security which showed the massive rise of MCP adoption, how most MCP servers are hosted locally on developer endpoints, and the significant risks this poses to organizations.

This piece discusses the continued adoption and growth of MCP, insecure adoption practices and how security concerns aren’t slowing the adoption curve.

AppSec

AI-Code Security Anti-Patterns for LLMs

We've all seen the unprecedented adoption of AI coding tools and platforms. If you're in AppSec, you've likely seen me and others discussing and sharing resources highlighting the concerns around AI-generated code being vulnerable as well.

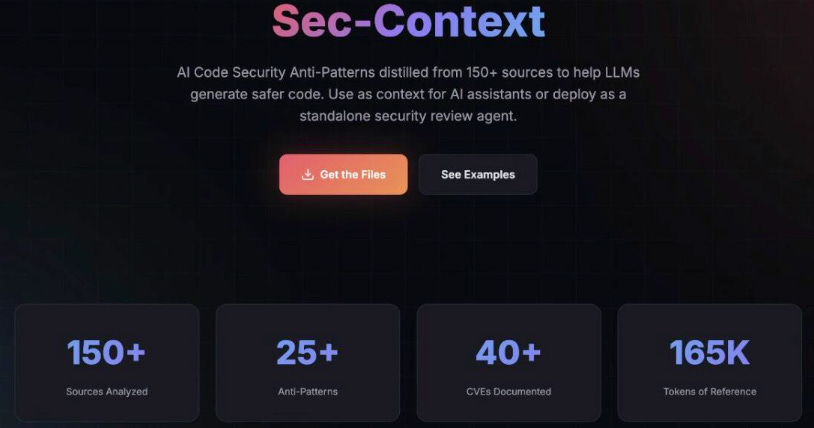

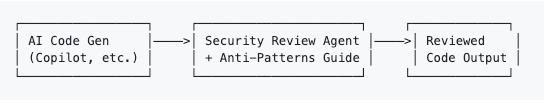

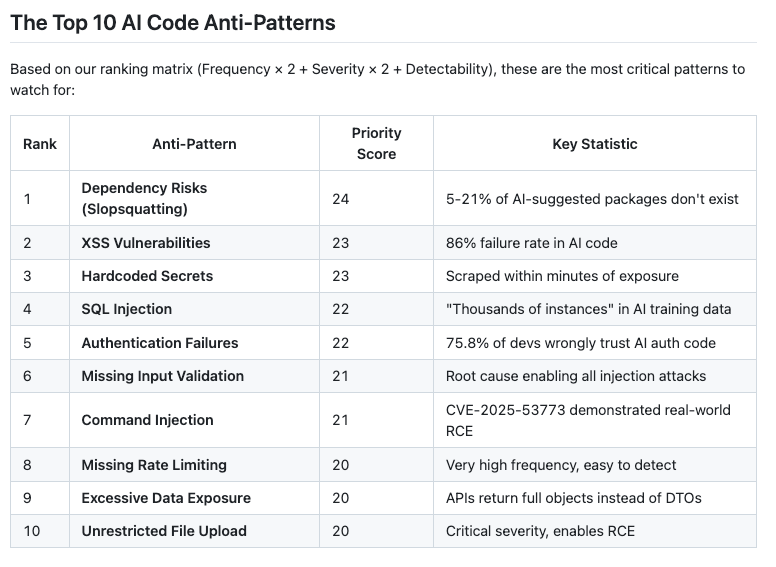

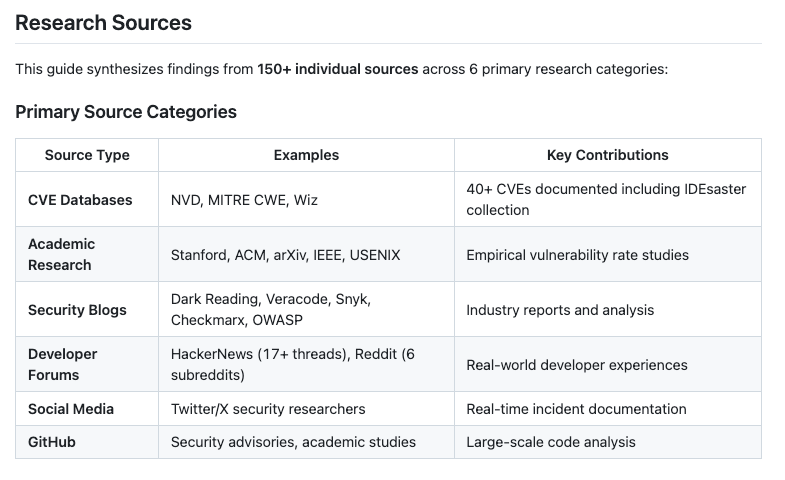

That's why this resource from Jason Haddix is so awesome. He open sourced and shared AI-Code Security Anti-Patterns for LLMs, which is a comprehensive security reference distilled from 150+ sources to help LLMs generate more secure code.

It can be used whole with large context window models, in pieces for smaller contexts, or ideally as a standalone security review agent, which takes the code as input and checks it against all 25+ anti-patterns, providing a list of identified vulnerabilities and specific remediation steps, acting as a secure path from development to production.

This is really an awesome resource for the community and I hope organizations take the opportunity to leverage it to enable secure AI-generated code at-scale.
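To show the shape of the "code in, findings and remediation out" review flow described above, here is a deliberately simplified Python sketch. It is an assumption-heavy stand-in: it uses plain regular expressions instead of an LLM, and the two `AntiPattern` entries are my own illustrative examples, not entries taken from Jason's resource.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class AntiPattern:
    identifier: str
    regex: str
    remediation: str


# Two illustrative entries only; hypothetical and far from the full 25+ set.
ANTI_PATTERNS = [
    AntiPattern(
        "hardcoded-secret",
        r"(?i)(password|api_key|secret)\s*=\s*['\"][^'\"]+['\"]",
        "Load secrets from the environment or a secrets manager.",
    ),
    AntiPattern(
        "shell-injection",
        r"os\.system\(|shell\s*=\s*True",
        "Pass an argument list to subprocess.run without shell=True.",
    ),
]


def review(code: str) -> list[dict]:
    """Check each line against every anti-pattern and collect findings."""
    findings = []
    for lineno, line in enumerate(code.splitlines(), start=1):
        for ap in ANTI_PATTERNS:
            if re.search(ap.regex, line):
                findings.append(
                    {"line": lineno, "id": ap.identifier, "fix": ap.remediation}
                )
    return findings
```

A regex pass like this will miss context that an LLM-based reviewer armed with the full reference could catch, so treat it as a picture of the input and output contract rather than a substitute for the real thing.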

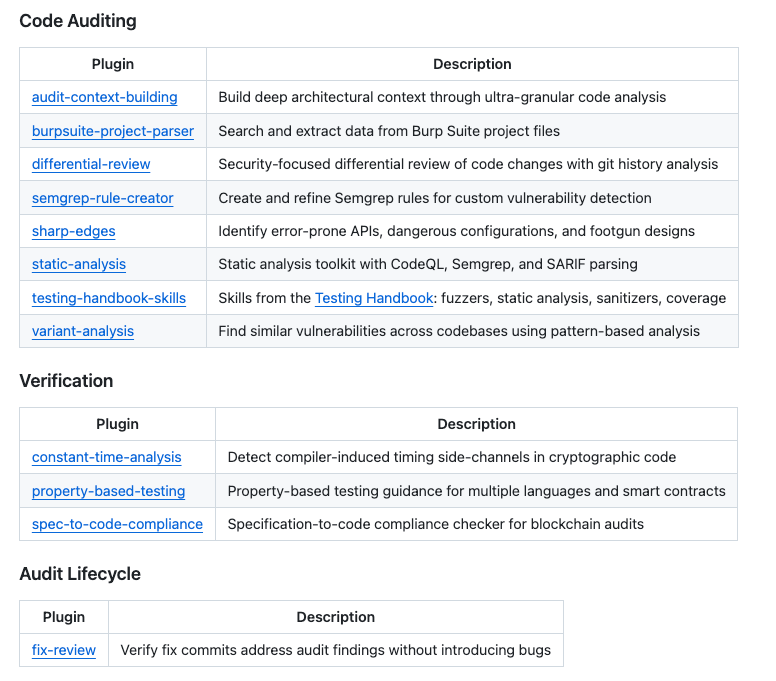

Trail of Bits Open Sources Cyber Capabilities

We have seen the quick rise of “Skills” as a way to provide guidance and structure when working with leading tools such as Claude, and how to produce repeatable patterns and outcomes as well as share them with others.

Cyber firm Trail of Bits did something really awesome by recently open sourcing their Skills via a Claude Code plugin marketplace, to provide enhanced AI-assisted security analysis, testing and development workflows.

Their plugins include capabilities for code analysis, searching for vulnerabilities, auditing commit histories and more.

On the Coming Industrialization of Exploit Generation with LLMs

We’ve seen a lot of promising (and scary) research and demonstrations around AI’s ability to identify vulnerabilities and even exploit them. This latest example comes from Sean Heelan, who used Opus 4.5 and GPT-5.2 to write exploits for zero-day vulnerabilities he discovered in QuickJS.

Through his exercises, the LLMs produced over 40 distinct exploits across 6 scenarios; GPT-5.2 solved all of the scenarios and Opus 4.5 solved all but two. He argues we’re on track for the “industrialization” of exploits, with this work demonstrating that in under an hour and for $30 USD you can develop exploits for zero-day vulnerabilities.

“We should start assuming that in the near future the limiting factor on a state or group’s ability to develop exploits, break into networks, escalate privileges and remain in those networks, is going to be their token throughput over time, and not the number of hackers they employ.

Nothing is certain, but we would be better off having wasted effort thinking through this scenario and have it not happen, than be unprepared if it does.”

Are We on the Path to 1M CVEs?

Francesco Cipollone recently made an interesting post where he used insights from Vulnerability Researcher Jerry Gamblin to look at how we may be on a path to 1,000,000 CVEs.

Every year CVEs grow exponentially, and teams struggle to keep up. This is why we’re seeing the shift to prioritization, reachability, new categories such as CTEM and the emphasis on runtime security - all topics I have discussed in various deep dive articles I’ve done.

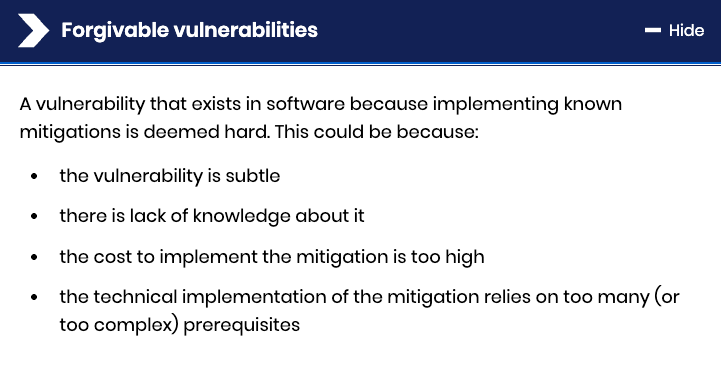

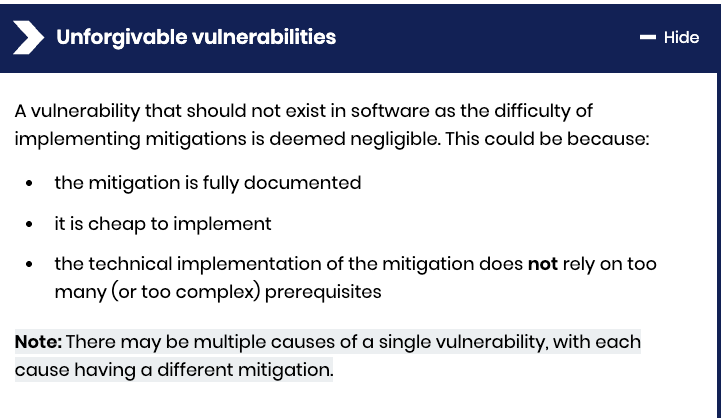

A Method to Assess “forgivable” vs. “unforgivable” vulnerabilities

Is there such a thing as an unforgivable vulnerability? The UK’s National Cyber Security Centre seems to think so. In fact, they are looking to eradicate entire classes of vulnerabilities, a pursuit previously echoed by others such as Bob Lord and Jen Easterly at CISA.

They even defined Forgivable vs. Unforgivable Vulnerabilities in their blog:

This is an interesting read around vulnerabilities, trends, ease of implementation and more.

When AI Writes Almost All Code, What Happens to Software Engineering?

Gergely Orosz explores the seismic shift happening in software engineering as AI writes more code. Key observations: LLMs like Opus 4.5 and GPT-5.2 are creating 'a-ha' moments for experienced developers.

The creator of Claude Code reports that 100% of his code contributions last month were AI-written. The article examines the 'bad' (declining value of certain expertise), the 'good' (tech lead traits, product-minded thinking become MORE valuable), and the 'ugly' (more code means more problems, potential work-life balance challenges as coding becomes mobile-enabled).

Securing Vibe Coding Tools with SHIELD

Unit 42 introduces SHIELD - a framework for securing 'vibe coding' tools where developers describe what they want in natural language and AI generates the code. The research identifies key risks: AI-generated code often lacks security best practices, may include vulnerable dependencies, and can expose secrets through prompts.

SHIELD provides guardrails including pre-generation policy checks, real-time code scanning during generation, and post-generation security validation. The framework emphasizes that vibe coding's speed benefits must be balanced with security controls.
