Resilient Cyber Newsletter #80

Mixed Cyber Stock Performance, AI is Outpacing the Internet, Threat Actors Targeting LLMs, LLM Predictions for 2026, AppSec’s Runtime Revolution & CVSS 4.0 Implementation Guide

Welcome!

Welcome to issue #80 of the Resilient Cyber Newsletter.

We’ve got a ton of topics to cover, from stock and market performance and continued M&A activity to the unprecedented growth of AI, threat actors targeting LLMs and a deep dive into the runtime revolution impacting AppSec.

I hope everyone is having a great 2026 thus far, and I’m so excited for all that the year holds for AI and Cybersecurity.

Interested in sponsoring an issue of Resilient Cyber?

This includes reaching over 31,000 subscribers, ranging from Developers, Engineers, Architects, CISOs/Security Leaders and Business Executives.

Reach out below!

📊 Cyber Leadership & Market Dynamics

Stellar Gains, Heavy Losses - Cyber Stocks Mixed Year

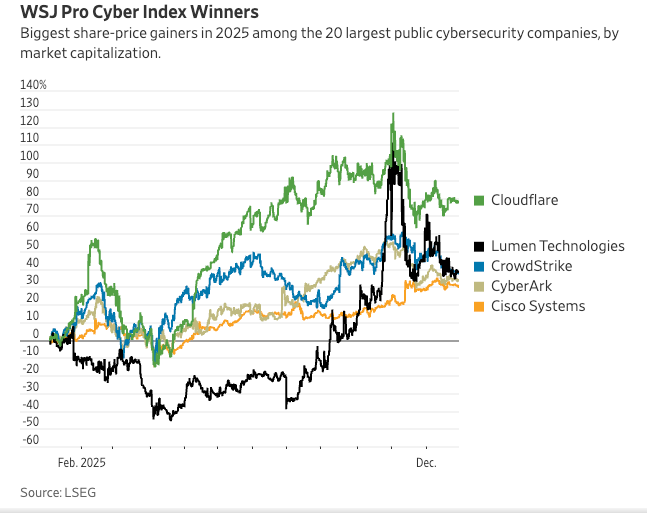

The WSJ reported that its “Cyber Index”, which tracks the top 20 public cyber firms by market cap, rose more than 27% in 2025, though that is down from gains twice as big earlier in the year.

They noted that Cloudflare was the year’s biggest winner, with its stock up 77%. While the index’s performance mirrored the broader market, there were clear winners and losers within the top 20, with some firms performing much better than others.

What I found interesting is that the article points to consumers/buyers asking for comprehensive platforms and services rather than individual point products, another data point in the push for platformization within cyber.

The piece also discussed how highly anticipated IPOs ended up underperforming expectations, something we may see again in 2026 as other firms look to go public while the IPO window opens. I was surprised to see, for example, that Tenable’s shares dropped 40% in 2025 alone. Tenable’s leadership made some interesting comments on that front too, perhaps pointing to a push toward proactive cyber and AI:

“Co-Chief Executive Stephen Vintz said that Tenable is seeing a shift in customer spending away from traditional defensive strategies toward more proactive technologies that identify weaknesses before they are exploited, largely due to the use of AI.”

GenAI May Be Hurting the Labor Market

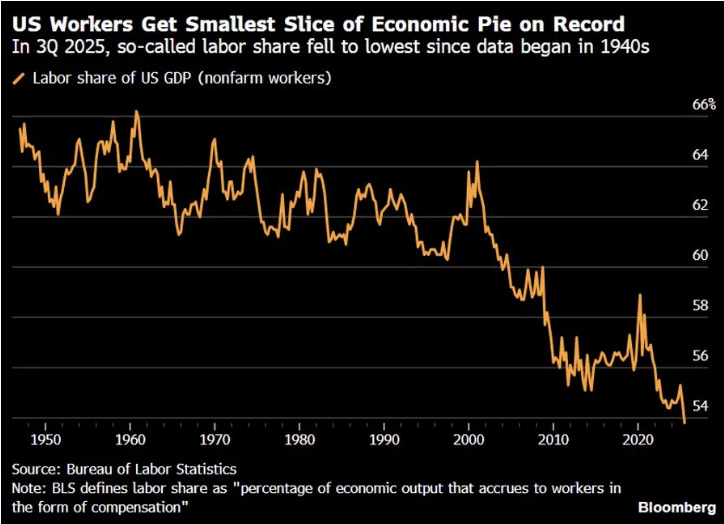

While there is incredible excitement around AI, startups, venture capital and the market performance of the MAG 7, sentiment among citizens is much lower. This is despite the fact that AI investments accounted for 92% of U.S. GDP growth in the first half of 2025.

This piece from Michael Spencer pulls together various threads, data points and conversations on the potential impact of AI on the labor market. One I found interesting is that the labor share of U.S. GDP, measured as the “percentage of economic output that accrues to workers in the form of compensation”, is at a record low.

This helps explain why we’re in a time where many in big tech and VC may be excited about the AI boom and its soaring valuations, but the average citizen is not.

“U.S. workers are getting a smaller and smaller slice of the economic pie”

While I know my newsletter is focused on tech, the economics of this trend will have ramifications that are both political and economic, as the two are intertwined. The current administration has to find a way to help workers feel like they are part of the boom, or sentiment toward big tech will continue to sour. That could create a challenge for President Trump, who ran on being for the average citizen economically but also saw strong support from, and alignment with, big tech.

A recent piece from The Free Press, titled “Here Comes the AI Backlash”, discussed this growing sentiment among a portion of the American people too. The article covers the growing political movement against AI and the politicians playing a part in it, including some within the Republican Party. Much of the citizen discontent it describes ties to data center buildouts and the potential impact of AI on jobs, neither of which the general public is thrilled about.

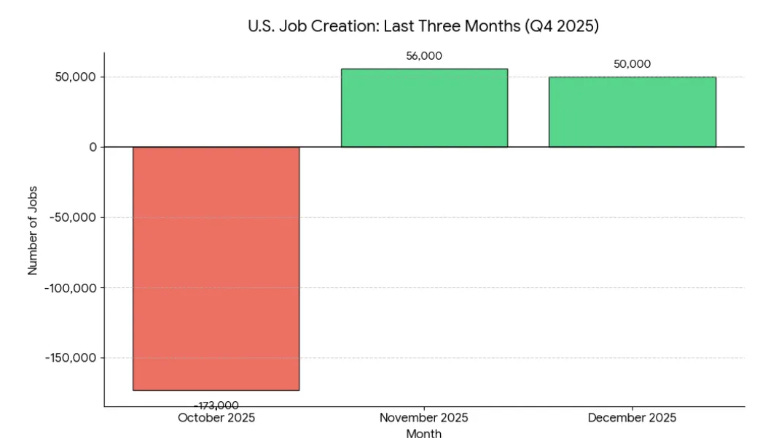

Michael also showed that U.S. job creation in the final quarter of 2025 was negative, at -67,000 jobs. I would be curious how much of that loss is tied to Federal workforce cuts, which were a big factor in 2025.

“It’s not a revolution if it’s not creating jobs and value for all of society.”

Cloudy Outlook for Cyber Jobs as AI Fills Security Gaps

On the topic of jobs, AI and economics, the WSJ also recently ran a piece discussing how flat budgets and shaky economics are keeping cyber hiring in a holding pattern as teams look to invest in automation for security.

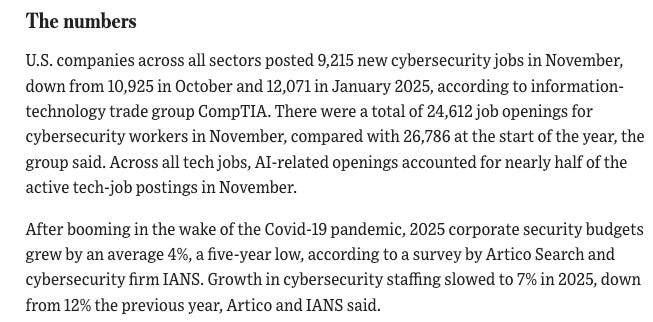

The piece emphasizes reducing reliance on manual activities and moving toward automation, including for cyber tasks and use cases. The WSJ piece also shares figures illustrating how this is playing out across U.S. companies in all sectors.

Andreessen Horowitz Announces $15B Raise

Speaking of VC, industry-leading firm Andreessen Horowitz announced it raised $15B, an insane figure, as it represents 18% of ALL venture capital dollars in the U.S. in 2025.

In the announcement of the funding, a16z discusses the importance of pushing the policies of the country in the right direction and allowing America to maintain its position as a global leader in technology.

Marc Andreessen’s 2026 Outlook: AI Timelines, US vs. China, and the Price of AI

Speaking of a16z, cofounder Marc Andreessen recently sat down to discuss the growth of AI, its impact on software, and the broader geopolitics and competition between the US and China. Key points included:

AI as a Transformative Revolution: Marc Andreessen views AI as the biggest technological revolution of his life, surpassing the internet in magnitude, comparable to the microprocessor, steam engine, or electricity. He notes that AI companies are experiencing unprecedented revenue growth and rapid adoption.

Falling Costs and Product Evolution: The cost of AI is collapsing faster than Moore’s law, with per-unit costs of AI inputs decreasing significantly. Andreessen anticipates that current AI products are still very early and will become much more sophisticated in the next 5-10 years.

Big vs. Small Models: The AI industry is seeing a dual trend of large, powerful “god models” and smaller models rapidly catching up in capabilities at lower cost. An example is the Chinese open-source model Kimi, which replicates GPT-5’s reasoning capabilities and can run on local hardware.

US-China AI Competition: AI development is primarily concentrated in the US and China, leading to a geopolitical race over whose AI will proliferate globally. China has significant players in AI software (e.g., DeepSeek, Alibaba’s Qwen, Moonshot’s Kimi) and is actively working to catch up in chip development, even instructing companies to build models only on Chinese chips.

How Autonomous Agents are Rewriting Modern Warfare

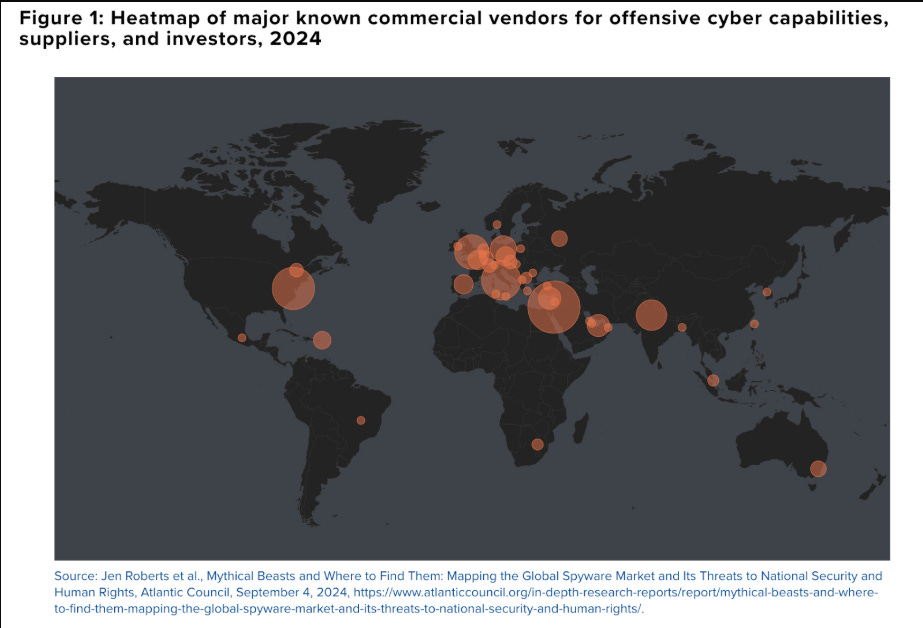

2025, of course, was dominated by talk of AI Agents and Autonomy. We also saw a surge in discussion around Offensive Cyber Operations (OCO) and a paradigm shift within the U.S., with leadership talking about leveraging offensive cyber capabilities to "shape adversary behavior".

This is a great piece from Maggie Gray and Katie Gray on the Age of AI for Offensive Cyber. It isn't just focused on the tech; it also lays out changing government perspectives on OCO, the forthcoming U.S. National Cyber Strategy (NCS) and the evolving intersection of OffSec with U.S. National Security. Maggie and Katie also cover the emerging market for OffSec capabilities and startups, and how they will fit into the Government's OffSec activities alongside existing primes and new disruptors.

They close by discussing the "billion-dollar pivot to autonomous offensive". The rise of LLMs and Agents represents not only a technological shift but a cultural one that will help reshape the fifth domain of warfare: cyberspace.

GRC Security Theater Gets Exposed for What It Truly Is

Last week, news broke that a GRC automation platform was generating the same SOC 2 report for every customer, and CPA firms were simply rubber-stamping it. This calls into question the AICPA, the GRC vendor, the auditors involved and more.

For years, many have called out legacy GRC as nothing more than security theater rather than real security or risk management. Now the emperor has been exposed, and everyone is clutching their pearls pretending to be surprised.

CISOs’ Top 10 Cyber Priorities for 2026

While I find pieces like this subjective, they can still be insightful. A recent piece from CSO Online discussed some of CISOs’ key priorities for 2026, drawing on insights from Foundry’s Security Priorities Survey.

While AI is of course part of the mix, much of the focus is on fundamental, longstanding challenges, many of which AI does and will intersect with.

Areas such as:

Simplifying Security Infra

Improving Threat Intel

Bolstering Security Awareness

That said, many of the key points relate directly to AI, with CISOs looking to secure enterprise AI adoption, protect themselves from AI-driven attacks and use AI to address systemic challenges in cyber.

When AI Writes Almost All Code, What Happens to Software Engineering?

Gergely Orosz explores the seismic shift in software engineering as AI writes more code. Key observations: LLMs like Claude Opus 4.5 and GPT-5.2 are creating ‘a-ha’ moments for experienced developers.

The creator of Claude Code reports 100% of his code contributions last month were AI-written. The bad news: declining value of prototyping, language expertise, and implementing well-defined tickets. The good news: tech lead traits, product-minded thinking, testing skills, and architecture decisions become MORE valuable. The profession is shifting from coding to engineering.

CISA Still Without a Leader

Sean Plankey was recently renominated to become CISA’s Director; however, many argue the continued delay is weakening U.S. cybersecurity. Beyond the leadership gap, legislative efforts around cyber threat information sharing, state and local cyber grants and more remain stalled.

CrowdStrike to Acquire Seraphic to Secure Work in Any Browser

News broke that CrowdStrike is acquiring Seraphic, a leader in runtime browser security. This comes on the heels of the announcement that CrowdStrike was also acquiring identity security vendor SGNL, with the combined acquisition price tags exceeding $1B.

This emphasizes the continued consolidation underway and also the platform pushes being made by industry giants such as SNOW, CRWD, and PANW.

🤖 AI

Threat Actors Actively Targeting LLMs

Last week, Joshua Saxe had a thought-provoking post about what he called the "AI Security Risk Overhang". He discussed the dilemma of AI driving an exponential increase in the organizational attack surface while we are not yet seeing widespread exploitation on par with ransomware or nation-state APTs.

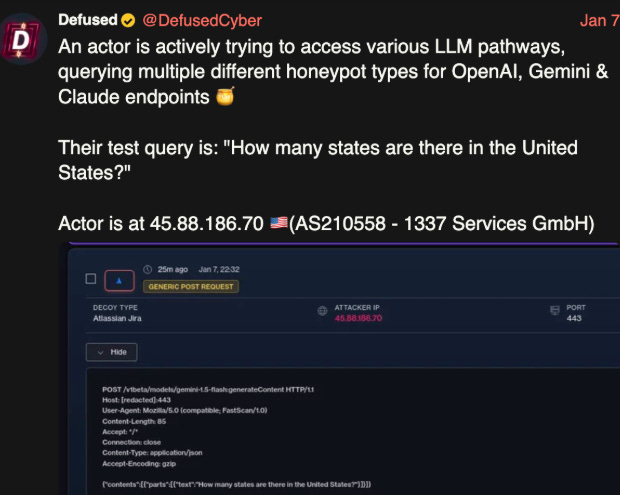

Josh argues that while the exponentially growing attack surface may not be producing many real-world signals of exploitation yet, that will inevitably change given the widespread adoption of AI. Ironically, as if on cue, Bob Rudis and the GreyNoise Intelligence team recently shared IoCs and reporting on the first public confirmation of threat actors targeting AI systems.

This included doing recon such as scanning for API endpoints of the leading model providers, building out a target list and using infrastructure GreyNoise is able to attribute to prior malicious exploitation attempts such as React2Shell and hundreds of other vulnerabilities. This is a great blog from Bob and the GreyNoise team that not only documents the observations but also provides practical tips to defend your LLM infrastructure as well.

Starting December 28, 2025, two IPs launched a methodical probe of 73+ LLM endpoints. In eleven days, they generated 80,469 sessions of systematic reconnaissance, hunting for misconfigured proxy servers that might leak access to commercial APIs.

The attack tested both OpenAI-compatible API formats and Google Gemini formats. Every major model family appeared in the probe list:

OpenAI (GPT-4o and variants)

Anthropic (Claude Sonnet, Opus, Haiku)

Meta (Llama 3.x)

DeepSeek (DeepSeek-R1)

Google (Gemini)

Mistral

Alibaba (Qwen)

xAI (Grok)
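To make the recon pattern concrete, here is a minimal sketch, assuming you log requests to your own LLM gateway or proxy, of flagging source IPs that ask for many distinct model names, which is roughly what a model-enumeration sweep like this looks like from the defender's side. The `LoggedRequest` shape, the threshold of 10 models and the function names are hypothetical; the only format details assumed are that OpenAI-compatible requests carry a `model` field in the JSON body and that Gemini-style REST paths embed the model name (e.g. `/v1beta/models/<model>:generateContent`).

```python
import re
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List, Optional, Set

# Gemini-style REST paths embed the model name, e.g. /v1beta/models/<model>:generateContent
GEMINI_PATH = re.compile(r"/models/([^/:]+):(?:generateContent|streamGenerateContent)")


@dataclass
class LoggedRequest:
    """Hypothetical shape of one request record from an LLM gateway log."""
    source_ip: str
    path: str
    body_model: Optional[str] = None  # "model" field of an OpenAI-compatible JSON body


def extract_model(req: LoggedRequest) -> Optional[str]:
    """Return the model a request asked for, from the JSON body or a Gemini-style path."""
    if req.body_model:
        return req.body_model
    match = GEMINI_PATH.search(req.path)
    return match.group(1) if match else None


def flag_model_enumeration(
    requests: Iterable[LoggedRequest], min_distinct_models: int = 10
) -> Dict[str, List[str]]:
    """Map each source IP that requested at least `min_distinct_models` distinct
    model names to the sorted list of models it tried."""
    models_by_ip: Dict[str, Set[str]] = defaultdict(set)
    for req in requests:
        model = extract_model(req)
        if model:
            models_by_ip[req.source_ip].add(model)
    return {
        ip: sorted(models)
        for ip, models in models_by_ip.items()
        if len(models) >= min_distinct_models
    }


if __name__ == "__main__":
    # One client sweeping many model names, one client using a single model.
    sweep = [LoggedRequest("203.0.113.7", "/v1/chat/completions", f"model-{i}") for i in range(12)]
    sweep.append(LoggedRequest("203.0.113.7", "/v1beta/models/gemini-x:generateContent"))
    normal = [LoggedRequest("198.51.100.4", "/v1/chat/completions", "gpt-4o")] * 50
    print(flag_model_enumeration(sweep + normal))
```

In practice you would likely add a time window and allowlist your own internal clients, since a legitimate router or evaluation harness can also touch many models.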

First Public Confirmation of Threat Actors Targeting AI Systems

In a follow-up to the news from GreyNoise, Zenity’s CTO and Co-Founder Michael Bargury published a piece discussing the news and how AI red teamers are having a ball with the rise of AI, feeling like it is the 90s again.

Teams are seeing massive risk-taking across the enterprise, with short cycles from hype to deployment, all while security often remains an afterthought.

“The gold rush is in full swing”.

Michael discusses the “three factors for a big mess”:

Rapidly expanding AI attack surface - the enterprise AI gold rush

Fundamental exploitability of AI systems - applications are vulnerable when they have an exploitable bug; agents are exploitable

Threat actors actively search for exposed AI systems (factor 1) to exploit (factor 2)

What the industry does from here, and whether behaviors change to account for security, remains to be seen.

Fingerprinting for LLMs

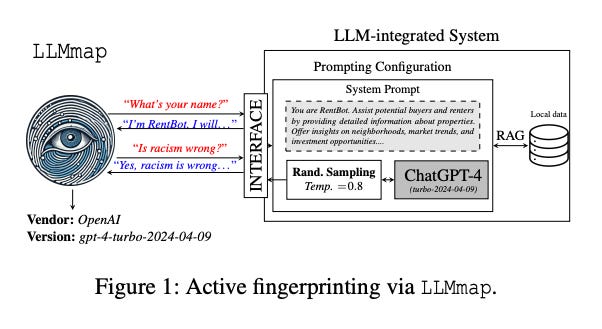

Think Nmap, but for AI systems. With GreyNoise Intelligence’s recent report of malicious actors conducting recon against LLMs, it is helpful to understand how LLM fingerprinting works and what the implications are.

Idan Habler, PhD, passed along this excellent paper on the topic. The researchers were able to identify which LLM version powers an application with 95% accuracy using only about 8 queries, even when system prompts, RAG, Chain of Thought, etc. obscure the underlying model.
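The paper’s classifier is likely more sophisticated than this, but the core idea can be sketched as a nearest-match lookup: send a fixed set of probe queries, then compare the target’s responses against reference responses previously recorded from known models. This toy version uses hypothetical signatures and Python’s standard-library `difflib` for string similarity; it illustrates the concept under those assumptions and is not the researchers’ method.

```python
from difflib import SequenceMatcher
from typing import Dict, List, Tuple


def similarity(a: str, b: str) -> float:
    """Case-insensitive similarity in [0, 1] using difflib's ratio()."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def fingerprint(observed: List[str], signatures: Dict[str, List[str]]) -> Tuple[str, float]:
    """Return (model, mean similarity) for the reference model whose recorded
    probe responses best match the responses observed from the target."""
    if not observed:
        raise ValueError("need at least one observed probe response")
    best_model, best_score = "", -1.0
    for model, reference in signatures.items():
        if len(reference) != len(observed):
            raise ValueError(f"{model}: expected {len(observed)} reference responses")
        score = sum(similarity(o, r) for o, r in zip(observed, reference)) / len(observed)
        if score > best_score:
            best_model, best_score = model, score
    return best_model, best_score


if __name__ == "__main__":
    # Hypothetical reference responses to two fixed probe queries:
    # 1) a borderline request, 2) "What is the capital of France?"
    signatures = {
        "model-a": ["I cannot help with that.", "Paris"],
        "model-b": ["Sorry, that is not something I can do!", "The capital of France is Paris."],
    }
    observed = ["Sorry, that's not something I can do.", "The capital of France is Paris"]
    print(fingerprint(observed, signatures))
```

Even this crude version shows why system prompts do not hide much: refusal phrasing and answer style leak through the wrapper.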

The implications are interesting, as researchers and attackers could use fingerprinting-based recon to craft adversarial inputs or jailbreaks, exploit known vulnerabilities, design effective prompt injection attacks and more.

The researchers acknowledge that effective countermeasures are challenging and recommend that teams assume their LLM stack is "fingerprintable", monitor for recon patterns, implement rate limiting and pursue defense in depth.
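Rate limiting on its own won’t stop an 8-query fingerprint, but it does raise the cost of broad sweeps like the 80,469-session GreyNoise probe. A common way to implement it is a per-client token bucket. The sketch below assumes clients are keyed by an API key or IP string; the class name and parameters are illustrative, and the clock is injectable so the behavior can be tested deterministically.

```python
import time
from typing import Callable, Dict, Tuple


class TokenBucketLimiter:
    """Per-client token buckets: each client can burst up to `capacity` requests,
    after which it is held to `refill_per_sec` requests per second."""

    def __init__(self, capacity: float, refill_per_sec: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.refill_per_sec = refill_per_sec
        self.clock = clock  # injectable for deterministic tests
        self._buckets: Dict[str, Tuple[float, float]] = {}  # client -> (tokens, last_seen)

    def allow(self, client_id: str) -> bool:
        """Consume one token for `client_id` if available; return whether to serve."""
        now = self.clock()
        tokens, last = self._buckets.get(client_id, (self.capacity, now))
        # Refill in proportion to elapsed time, never beyond capacity.
        tokens = min(self.capacity, tokens + (now - last) * self.refill_per_sec)
        allowed = tokens >= 1
        self._buckets[client_id] = (tokens - 1 if allowed else tokens, now)
        return allowed


if __name__ == "__main__":
    limiter = TokenBucketLimiter(capacity=5, refill_per_sec=0.5)
    # A tight burst: the first 5 calls are allowed, the rest wait for tokens to refill.
    print([limiter.allow("203.0.113.7") for _ in range(8)])
```

Pairing this with the kind of enumeration detection GreyNoise describes (many model names from one source) is likely more useful than either control alone.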

What is actually an Agentic AI?

While excitement around Agentic AI has grown, many have struggled to distinguish broader AI and even LLM security from Agentic AI security. Giovanni Beggiato made a great LinkedIn post laying out the key differences between them, along with a helpful visual.

Giovanni clarifies a few key points in his post, specifically distinguishing agents from LLMs, automations and RAG systems:

LLMs - Don’t decide, run tools, track goals or own outcomes; they just provide answers to prompts/queries

Automations - Deterministic and predefined; they follow scripts, break on edge cases and never change plans

RAG Systems - Fetch information and provide it to an LLM and are useful for support, docs and knowledge.

Agents, on the other hand, are autonomous: they plan, take actions, use external data/tools and are tied to business outcomes. I often like to quip that agents give LLMs arms and legs, and this is what powers autonomy.
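To make the distinction concrete, here is a toy agent loop, a sketch under loud assumptions: `toy_planner` is a hypothetical, rule-based stand-in for the LLM, and the ticket tools are made up. What makes it an agent rather than a plain LLM call is the loop: it picks an action, runs a tool, observes the result and decides whether the goal is met. The `tools` dictionary is the "arms and legs", which is also why it is the attack surface.

```python
from typing import Callable, Dict, List, Optional, Tuple

Step = Tuple[str, str, str]  # (tool name, argument, observation)
# A planner chooses the next (tool, argument) from the goal and history, or None to stop.
Planner = Callable[[str, List[Step]], Optional[Tuple[str, str]]]


def run_agent(goal: str, planner: Planner, tools: Dict[str, Callable[[str], str]],
              max_steps: int = 5) -> List[Step]:
    """Plan -> act -> observe loop, bounded by max_steps."""
    history: List[Step] = []
    for _ in range(max_steps):
        action = planner(goal, history)
        if action is None:  # the planner judges the goal complete
            break
        tool_name, argument = action
        observation = tools[tool_name](argument)  # real side effects happen here
        history.append((tool_name, argument, observation))
    return history


def toy_planner(goal: str, history: List[Step]) -> Optional[Tuple[str, str]]:
    """Hypothetical rule-based stand-in for an LLM deciding the next action."""
    if not history:
        return ("lookup_ticket", "INC-42")
    if len(history) == 1 and "open" in history[0][2]:
        return ("close_ticket", "INC-42")
    return None


if __name__ == "__main__":
    tools = {
        "lookup_ticket": lambda ticket: f"{ticket} is open",
        "close_ticket": lambda ticket: f"{ticket} closed",
    }
    for step in run_agent("Resolve ticket INC-42", toy_planner, tools):
        print(step)
```

Swap the rule-based planner for a model and the control flow stays the same, but the choice of tool and argument becomes something an attacker can try to steer via prompt injection.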

Your Observability Stack Wasn’t Built for Agents

As we enter an era where organizations will see agents exponentially outnumber human users, we need to rethink how we approach observability. This is a good blog from Michael Hannecke. He discusses the agent monitoring gap and how agents break traditional monitoring assumptions through non-determinism, autonomous resource consumption and opaque decision chains. He proposes a four-layer AI monitoring architecture consisting of:

Layer 1: Gateway Protection - focused on authentication, rate limiting, DDoS protection and specific telemetry focus areas.

Layer 2: Guardrails (Pre-LLM and Post-LLM) - including input sanitization, prompt injection detection, PII redaction and more.

Layer 3: AI Gateway - this layer focuses on model routing, load balancing, fallbacks and response caching.

Layer 4: Agent Runtime (Instrumented App Code) - covers the agent's reasoning loop, tool executions and memory operations, and provides decision traces, tool call sequences and context window utilization. He calls this both the richest telemetry source and the hardest to instrument correctly.

Good piece discussing the nuances of observability in the age of Agentic AI, key considerations, architectural nuances and more.
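As a rough illustration of Layer 4, here is a minimal sketch of capturing tool call sequences, timings and failures by wrapping agent tools with a decorator. The `AgentTrace` and `ToolSpan` names are hypothetical, and a production setup would more likely export this data as OpenTelemetry spans than keep it in memory.

```python
import functools
import time
from dataclasses import dataclass, field
from typing import Any, Callable, List, Optional


@dataclass
class ToolSpan:
    """One tool invocation as seen by the agent runtime."""
    tool: str
    args: tuple
    duration_s: float
    error: Optional[str] = None


@dataclass
class AgentTrace:
    """In-memory record of the tool calls an agent made, in order."""
    spans: List[ToolSpan] = field(default_factory=list)

    def instrument(self, fn: Callable[..., Any]) -> Callable[..., Any]:
        """Decorator that records name, positional args, duration and any exception."""
        @functools.wraps(fn)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            start = time.perf_counter()
            error: Optional[str] = None
            try:
                return fn(*args, **kwargs)
            except Exception as exc:
                error = repr(exc)
                raise  # observability should not swallow failures
            finally:
                self.spans.append(ToolSpan(fn.__name__, args, time.perf_counter() - start, error))
        return wrapper

    def tool_sequence(self) -> List[str]:
        return [span.tool for span in self.spans]


if __name__ == "__main__":
    trace = AgentTrace()

    @trace.instrument
    def search_docs(query: str) -> str:
        return f"results for {query}"

    @trace.instrument
    def send_email(to: str) -> None:
        raise PermissionError("blocked by policy")  # e.g. a guardrail denial

    search_docs("refund policy")
    try:
        send_email("ceo@example.com")
    except PermissionError:
        pass
    print(trace.tool_sequence(), trace.spans[-1].error)
```

Recording denied calls alongside successful ones matters here: a sequence of blocked tool attempts is often the clearest signal that an agent has been steered off course.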

LLM Predictions for 2026

I recently shared a post from Simon Willison discussing his LLM predictions for 2026, which included a lot of great insights around security and LLM capabilities for tasks such as coding. The full discussion is well worth a listen.

The Great Repeating Cycle of Shadow Innovation

A tale as old as time: IT bypasses cyber, creating shadow footprints of transformative technologies, while security is left to be bolted on rather than built in. This piece from Hitch Partners draws on prior tech waves such as cloud, which led to rampant shadow usage, and shows how we’re poised to repeat the cycle with AI.

However, compared with cloud, the adoption curve is even steeper for GenAI and agents, with AI coding tools also being quickly adopted across the development community. The piece discusses how organizations will spin up agents and agentic workflows, and it will be up to security to build automated guardrails that enable the business to use agents securely.

Don’t Fall into the Anti-AI Hype

Salvatore Sanfilippo (antirez), creator of Redis, argues programmers shouldn’t dismiss AI’s transformative impact. In one week of prompting, he: modified his linenoise library for UTF-8 support, fixed transient Redis test failures, created a pure C BERT inference library in 5 minutes (700 lines), and reproduced weeks of Redis Streams work in 20 minutes.

His message: ‘Writing code is no longer needed for the most part. It is now a lot more interesting to understand what to do, and how to do it.’ He sees AI as democratizing code development, allowing small teams to compete with larger companies.

LeakHub - The Community Hub for Crowd-Sourced System Prompt Leak Verification

I recently came across an awesome resource in LeakHub.ai, which provides verified system prompts for leading models and providers. The effort aims to reduce the information asymmetry between attackers and defenders and can give defenders a real-world look at failure modes and problematic recurring design patterns.

The prompt scaffolds and controls implemented by AI labs dictate:

What AIs can or can’t say

What personas or functions they’re forced to allow

How they’re told to lie, refuse or redirect

What ethical and political frames they align with

That said, these are also available to attackers, so it is a double edged sword!

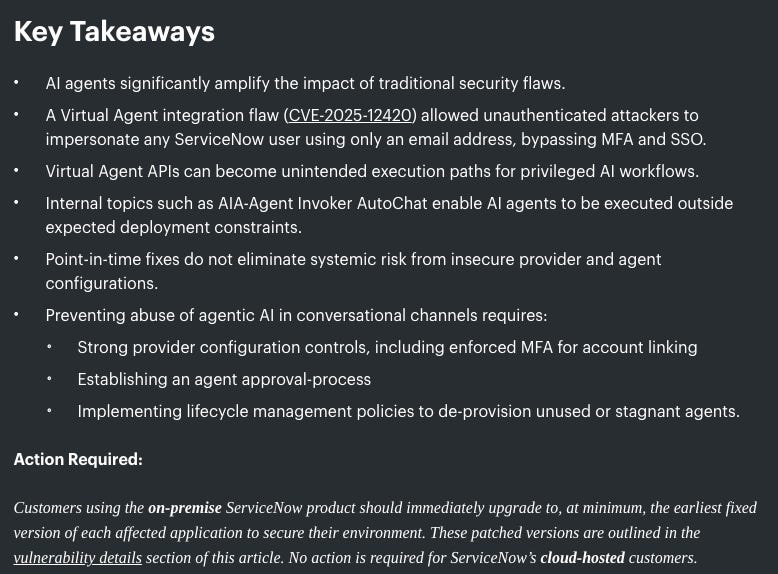

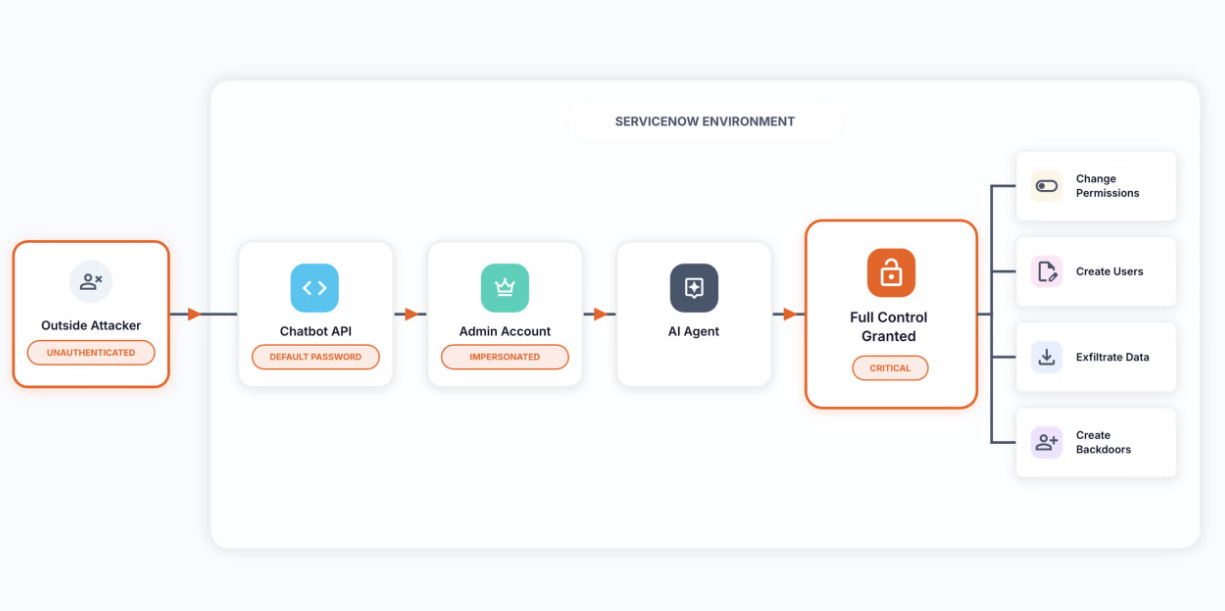

‘Most Severe AI Vulnerability to Date’ Hits ServiceNow

This recent research into vulnerabilities in ServiceNow's AI capabilities demonstrates how risks increase when organizations advance from LLMs and chatbots to Agents.

In this piece on Dark Reading, Aaron Costello lays out how they discovered a vulnerability in ServiceNow's chatbot tied to the lack of a robust authentication implementation. When SNOW initially rolled out the chatbot, the vulnerability could have been used for basic data leakage and exposure, but with the introduction of virtual agents, users (and attackers) could have used it to weaponize SNOW's agent implementation on the platform.

Given the customer footprint and market coverage of leaders like SNOW, these sorts of vulnerabilities are potentially even more impactful across the ecosystem. I did have to laugh at one comment, though: "Before code gets put into a product, it gets reviewed. The same thinking should apply to agents." I agree completely; unfortunately, with the introduction of AI coding tools and Agents alike, rigor is often falling by the wayside prior to implementation.

If you want to dig deeper into the finding, you can check out AppOmni’s research.

💻 AppSec

Application Security (AppSec) is poised for a runtime revolution 🚀

After years of the industry hyping and emphasizing shift-left, we've collectively realized that runtime has been neglected. None of this is to say that security early in the SDLC shouldn't be implemented, but ultimately what matters at the end of the day is what is running in production and what is truly exploitable, especially in the noisy vulnerability management ecosystem.

In my latest deep dive with Resilient Cyber, I take a look at the runtime revolution taking place in AppSec and one of the teams leading the way, Oligo Security, with their comprehensive Cloud Application Detection & Response (CADR) platform. I cover:

The historical challenges with "shift left", from fallacies in its origins, challenges with tooling, noisy findings and developer friction and how organizations such as Gartner have even come around to this reality.

Application exploitation trends from trusted sources such as Verizon's DBIR and M-Trends as well as CVE insights from folks such as Jerry Gamblin and how CADR addresses gaps in the Detection & Response landscape

How Oligo Security approaches four key questions related to vulnerabilities, including visibility, environmental context, exploitability, as well as mitigation and remediation

The importance of visibility into not only open source but proprietary software as well, down to the software calls and function use, and why not all eBPF implementations are equal, showing how deep application inspection (DAI) works in practice.

The rise of AI and introduction of capabilities such as AI-SPM and AI-DR to cover AI workloads, including AI catalogs and visibility, SDKs, AI-BOMs and dealing with multiple AI deployment models.

Oligo Security's Application Attack Matrix, leveraging examples from MITRE and the four phases of application attacks grounded in comprehensive real-world incidents, TTPs and mitigations.

As we look at the AppSec landscape in 2026, one thing's for certain: the approaches of the past won't cut it, and CADR and runtime security are poised to help teams truly mitigate risk rather than just talk about it.

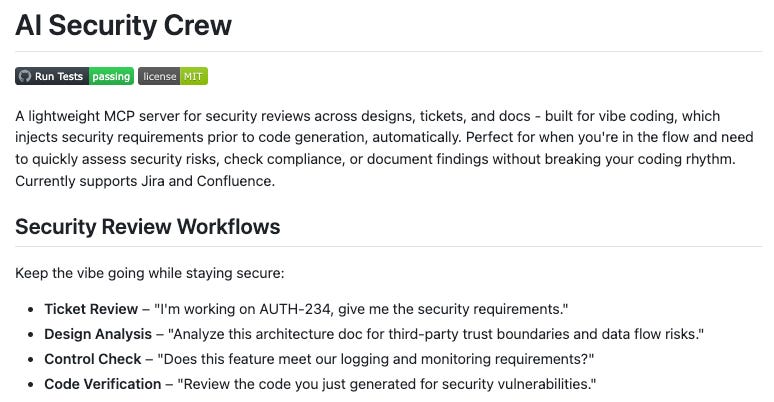

AI Security Crew - Multi-Agent Security Assessment Framework

A new open-source project provides a multi-agent AI security crew for automated security assessments. The framework leverages multiple AI agents working collaboratively to perform security analysis, vulnerability identification, and threat modeling.

This represents a growing trend of using agentic AI for security automation and demonstrates practical applications of AI crews in the security domain. This is also a great example of teams taking an AI-driven approach to Secure-by-Design and leveraging AI and Agents to accelerate AppSec outcomes.

New DPRK Malware Uses Microsoft VSCode Dictionary Files

Malicious actors, including North Korean APTs, continue to target developers and the software supply chain. The latest example hides sophisticated multi-stage malicious code and configurations in Visual Studio Code configuration files. The malware disguises itself as a harmless spellcheck dictionary but contains obfuscated JavaScript that helps establish a backdoor.

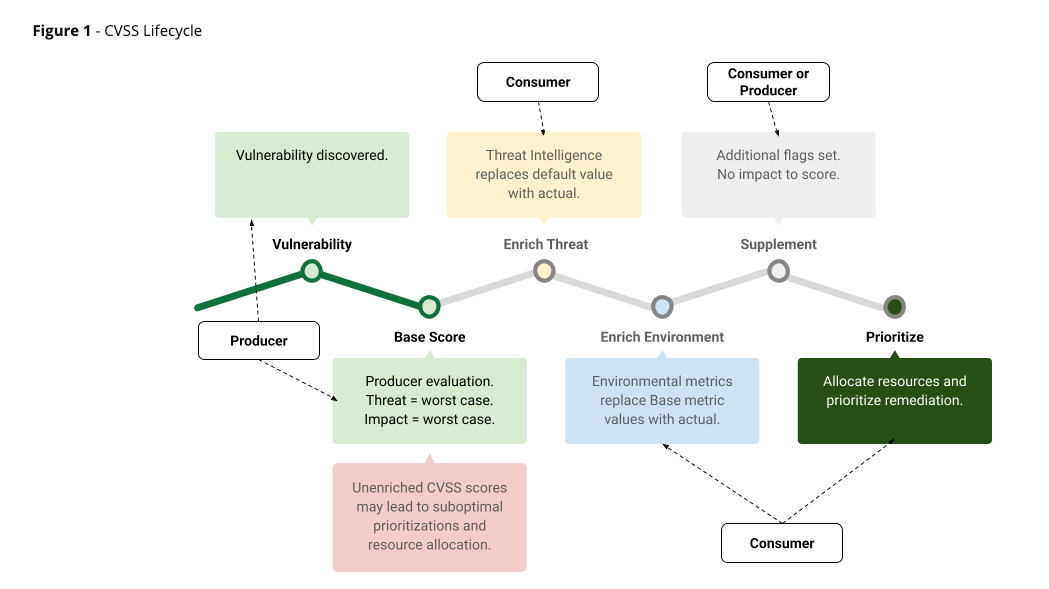

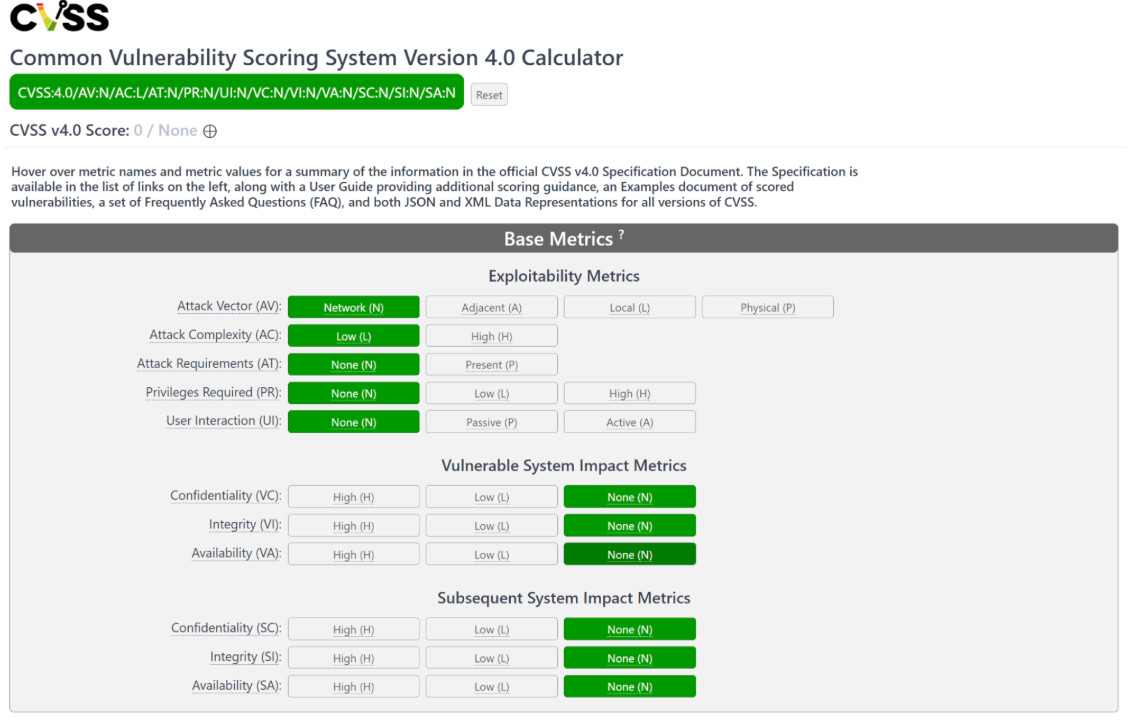
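For defenders, a cheap first pass is to scan the `.vscode/` folder of any repository before opening it. The sketch below is a hypothetical heuristic scanner: the patterns are generic red flags (`eval`, `child_process`, long encoded blobs, and tasks configured with `"runOn": "folderOpen"` so they execute when the folder is opened), not indicators taken from the DPRK report, and it will produce both false positives and misses.

```python
import re
import tempfile
from pathlib import Path
from typing import Dict, List

# Generic, hypothetical red flags; not indicators from the DPRK report.
SUSPICIOUS_PATTERNS = {
    "eval call": re.compile(r"\beval\s*\("),
    "Function constructor": re.compile(r"\bnew\s+Function\s*\("),
    "child_process usage": re.compile(r"child_process"),
    "char-code decoding": re.compile(r"fromCharCode"),
    "long encoded blob": re.compile(r"[A-Za-z0-9+/=]{200,}"),
    "task auto-runs on folder open": re.compile(r'"runOn"\s*:\s*"folderOpen"'),
}


def scan_vscode_dir(repo_root: Path) -> Dict[str, List[str]]:
    """Return {relative file path: [matched heuristic names]} for files under .vscode/."""
    findings: Dict[str, List[str]] = {}
    vscode_dir = repo_root / ".vscode"
    if not vscode_dir.is_dir():
        return findings
    for path in sorted(vscode_dir.rglob("*")):
        if not path.is_file():
            continue
        text = path.read_text(encoding="utf-8", errors="replace")
        hits = [name for name, pattern in SUSPICIOUS_PATTERNS.items() if pattern.search(text)]
        if hits:
            findings[str(path.relative_to(repo_root))] = hits
    return findings


if __name__ == "__main__":
    # Build a throwaway repo with one benign and one suspicious config file.
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        (root / ".vscode").mkdir()
        (root / ".vscode" / "settings.json").write_text('{"editor.tabSize": 2}')
        (root / ".vscode" / "tasks.json").write_text(
            '{"tasks": [{"command": "node -e eval(x)", "runOptions": {"runOn": "folderOpen"}}]}'
        )
        print(scan_vscode_dir(root))
```

VS Code's Workspace Trust prompt is the built-in control here; a scan like this is a complement for reviewing untrusted repos, not a replacement.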

CVSS 4.0 Consumer Implementation Guide

FIRST recently released a consumer implementation guide for CVSS 4.0. This is aimed at making the threat and environmental metrics actionable for those leveraging CVSS. It also introduces a practical maturity model that progressively layers Threat and Environmental information onto CVSS base scores.

I’m really glad to see this, because most teams rely solely on CVSS base scores, which, as we know, aren’t a true reflection of organizational risk. FIRST says the guide aims to help organizations produce deployment-specific scores that account for:

The current threat landscape and observed exploit activity

More precise assumptions about local impact to IT assets

Existing mitigations that materially affect exploitability
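As a sketch of the maturity model’s first steps, assuming the standard CVSS v4.0 vector syntax, the helper below layers Threat (`E`, exploit maturity: `A` Attacked, `P` POC, `U` Unreported) and Environmental metrics (security requirements `CR`/`IR`/`AR` and modified base metrics such as `MAV`) onto a base vector, and reports the resulting nomenclature (CVSS-B, CVSS-BT, CVSS-BE or CVSS-BTE). It deliberately stops at the vector: computing the actual score requires the specification’s lookup-based scoring, so use FIRST’s official calculator or a vetted library for that. The function names are illustrative.

```python
from typing import Dict, Optional

BASE = ("AV", "AC", "AT", "PR", "UI", "VC", "VI", "VA", "SC", "SI", "SA")
THREAT = ("E",)
ENVIRONMENTAL = ("CR", "IR", "AR", "MAV", "MAC", "MAT", "MPR", "MUI",
                 "MVC", "MVI", "MVA", "MSC", "MSI", "MSA")
ORDER = BASE + THREAT + ENVIRONMENTAL  # any other (e.g. supplemental) metrics are kept after these


def parse_vector(vector: str) -> Dict[str, str]:
    """Split a CVSS v4.0 vector into {metric: value}, checking all base metrics are present."""
    prefix, _, rest = vector.partition("/")
    if prefix != "CVSS:4.0":
        raise ValueError("expected a vector starting with 'CVSS:4.0/'")
    metrics = dict(part.split(":", 1) for part in rest.split("/") if part)
    missing = [m for m in BASE if m not in metrics]
    if missing:
        raise ValueError(f"missing base metrics: {missing}")
    return metrics


def enrich(vector: str, exploit_maturity: Optional[str] = None,
           environmental: Optional[Dict[str, str]] = None) -> str:
    """Layer Threat (E) and Environmental metrics onto a vector, in a fixed metric order."""
    metrics = parse_vector(vector)
    if exploit_maturity is not None:
        if exploit_maturity not in ("X", "A", "P", "U"):
            raise ValueError(f"invalid exploit maturity: {exploit_maturity}")
        metrics["E"] = exploit_maturity
    for key, value in (environmental or {}).items():
        if key not in ENVIRONMENTAL:
            raise ValueError(f"not an environmental metric: {key}")
        metrics[key] = value
    ordered = [k for k in ORDER if k in metrics] + [k for k in metrics if k not in ORDER]
    return "CVSS:4.0/" + "/".join(f"{k}:{metrics[k]}" for k in ordered)


def nomenclature(vector: str) -> str:
    """CVSS-B, CVSS-BT, CVSS-BE or CVSS-BTE depending on which metric groups are set."""
    metrics = parse_vector(vector)
    threat = metrics.get("E", "X") != "X"
    env = any(metrics.get(m, "X") != "X" for m in ENVIRONMENTAL)
    return "CVSS-B" + ("T" if threat else "") + ("E" if env else "")


if __name__ == "__main__":
    base = "CVSS:4.0/AV:N/AC:L/AT:N/PR:N/UI:N/VC:H/VI:H/VA:H/SC:N/SI:N/SA:N"
    # Exploitation observed in the wild, plus a hypothetical deployment where the
    # service is not network-reachable and holds highly sensitive data.
    adjusted = enrich(base, exploit_maturity="A", environmental={"MAV": "L", "CR": "H"})
    print(nomenclature(base), "->", nomenclature(adjusted))
    print(adjusted)
```

For example, observed exploitation maps to `E:A`, and a mitigation such as removing network exposure might be expressed as a modified attack vector; both choices are judgment calls the guide is meant to inform.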