Resilient Cyber Newsletter #77

SEC Drops SolarWinds Lawsuit, [un]prompted AI Security Conference, Cloudflare’s Take on Security Slowdowns, “IDEsaster” & Cybersecurity = National Security

Welcome!

Welcome to issue #77 of the Resilient Cyber Newsletter. This is our final issue of 2025, as we wrap up a crazy year in the world of cybersecurity.

This week we cover topics such as the SEC dropping their lawsuit against SolarWinds and CISO Tim Brown, critiques of the U.S. decision to sell advanced chips to China, Cloudflare sharing insights on how their internal security impeded their ability to recover from an incident and a letter from a Congressman emphasizing the need for securing the open source ecosystem.

All of this and more, so I hope you enjoy the resources/discussions and have a Merry Christmas and Happy Holidays with loved ones - from my family to yours.

Interested in sponsoring an issue of Resilient Cyber?

This includes reaching over 40,000 subscribers, including Developers, Engineers, Architects, CISOs/Security Leaders and Business Executives.

Reach out below!

Cyber Leadership & Market Dynamics

The U.S. Can’t Get Xi Hooked on NVIDIA Chips

Exporting H200 chips to China would mean relinquishing the U.S. lead in frontier AI models while actively supporting China's military and economic advancement. That's the case Dmitri Alperovitch makes in this recent WSJ piece.

Rather than making China "dependent" on NVIDIA's tech, Dmitri argues, the move will bolster China while diminishing the U.S. lead in AI. He points to large hyperscalers reducing their reliance on NVIDIA for their latest models by using their own chips and architectures.

He argues China will use the H200s to accelerate its role in AI while concurrently building up its own domestic chip capacity and capabilities. Given how pivotal both global powers have stated AI is to their economic and national security, it is easy to see why this move by the current Presidential administration in the U.S. is hotly debated.

What CISOs Should Know About the SolarWinds Lawsuit Dismissal

We recently saw the SEC dismiss its lawsuit against SolarWinds and its CISO Tim Brown after a long, drawn-out process. This piece from CSO Online argues the dismissal may be a turning point in cyber accountability, not only for CISOs and the cyber community but also for regulators and boards.

While the article notes that many CISOs and security leaders may be breathing a sigh of relief, the dismissal doesn't end concerns about accountability for security leaders. Security should still be treated as a shared responsibility across the organization, CISOs included.

Speaking of Tim Brown and the community, Chenxi Wang and Joe Sullivan sat down with Tim to dive into the news, discussing everything Tim had to deal with during the ordeal, both professionally and personally, given the stress and toll it took on him.

[un]prompted - The AI Security Practitioner Conference

Really excited to share that I'll be on the CFP review board for this one-of-a-kind event. It's an AI security practitioner-focused conference on March 3rd/4th at Salesforce Tower in San Francisco. The talk tracks and speaker lineup so far look truly outstanding.

Track 1 - Building Secure AI Systems, featuring folks such as Caleb Sima, Chris Wysopal, Ken Huang and Tim Brown

Track 2 - Attacking AI Systems, with names such as Michael Bargury, Ads Dawson, Philip A. Dursey and Rich Mogull

Track 3 - Using AI for Offensive Security, including Robert Hansen, Daniel Cuthbert, HD Moore and Casey Ellis

Track 4 - Using AI for Defensive Security features Heather Adkins, Anton Chuvakin, Ronald Gula, Daniel Miessler 🛡️ and John Yeoh

Track 5 - Strategy, Governance & Organizational Reality with Phil Venables, Jason Clinton, Larry Whiteside Jr. and Gary Hayslip

Track 6 - Practical Tools & Creative Solutions with Sounil Yu, 👑 Kymberlee Price, Joe Sullivan and Thomas Roccia

The event focuses on what actually works in AI and will range from deeply technical to policy-level discussions. This is genuinely one of the most impressive lineups I've seen on the industry's most critical and timely topics. Big shoutout to CFP leads and organizers Gadi Evron, Ryan Moon, Aaron Zollman and Sounil Yu. Be sure to submit your CFPs by January 28th!

Check out the full event -> https://unpromptedcon.org/

Cloudflare’s Post-Mortem - Security Impeded Recovery?

In mid-November, Cloudflare experienced an incident that impacted its ability to deliver network traffic for over two hours. It happened again three weeks later in early December, impacting its ability to serve traffic to almost 30% of the applications behind its network.

They recently published a blog post titled “Code Orange: Fail Small”, where they discuss their goals for making their network and services more resilient. In the post, they explain how configuration changes and software updates made to protect customers from a vulnerability in the open source React framework triggered the incident.

In the same blog, they also discuss how their security measures actually slowed down their ability to respond to the situation:

“During the incidents, it took us too long to resolve the problem. In both cases, this was worsened by our security systems preventing team members from accessing the tools they needed to fix the problem, and in some cases, circular dependencies slowed us down as some internal systems also became unavailable.

As a security company, all our tools are behind authentication layers with fine-grained access controls to ensure customer data is safe and to prevent unauthorized access. This is the right thing to do, but at the same time, our current processes and systems slowed us down when speed was a top priority.”

This is a really interesting read that demonstrates how a high-assurance, secure environment can introduce complex dependencies and slow down an organization's ability to move fast when disruptions hit.
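Cloudflare's "Fail Small" framing maps onto a familiar pattern: push a change to a small slice first, watch health signals, and only widen the blast radius if things look good. The sketch below is a minimal, hypothetical illustration of that staged-rollout idea, not Cloudflare's actual tooling. The stage names, the error-rate threshold and the `apply_config` / `observe_error_rate` / `rollback` callbacks are all assumptions standing in for real deployment and telemetry systems.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class StageResult:
    stage: str
    error_rate: float
    promoted: bool


def staged_rollout(
    stages: List[str],
    apply_config: Callable[[str], None],
    observe_error_rate: Callable[[str], float],
    rollback: Callable[[str], None],
    max_error_rate: float = 0.01,  # hypothetical health threshold
) -> List[StageResult]:
    """Apply a config change one stage at a time, halting and rolling back on regression."""
    results: List[StageResult] = []
    applied: List[str] = []
    for stage in stages:
        apply_config(stage)
        applied.append(stage)
        error_rate = observe_error_rate(stage)
        if error_rate > max_error_rate:
            # Unhealthy: undo every stage touched so far, newest first, then stop.
            for done in reversed(applied):
                rollback(done)
            results.append(StageResult(stage, error_rate, promoted=False))
            return results
        results.append(StageResult(stage, error_rate, promoted=True))
    return results


# Hypothetical telemetry in which the second stage regresses badly.
observed_error_rates = {"canary": 0.002, "region-a": 0.30, "global": 0.0}
events: List[Tuple[str, str]] = []

results = staged_rollout(
    stages=["canary", "region-a", "global"],
    apply_config=lambda s: events.append(("apply", s)),
    observe_error_rate=lambda s: observed_error_rates[s],
    rollback=lambda s: events.append(("rollback", s)),
)
for r in results:
    print(r)
# The "global" stage is never touched because "region-a" failed its health check.
```

Rolling back every stage touched so far, rather than only the failing one, keeps the fleet on a single known-good config. A real system would also need that rollback path to stay reachable during an outage, which echoes the access problem described in the quote above.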

Are we in an Identity Crisis?

On the topic of Identity and IAM Security, my friend Cole Grolmus had a great discussion with SailPoint CEO Mark McClain, peeling back how SailPoint views the modern identity security landscape and why traditional approaches can no longer keep up with the scale, complexity and speed of the modern enterprise.

Losing Deals to the Status Quo

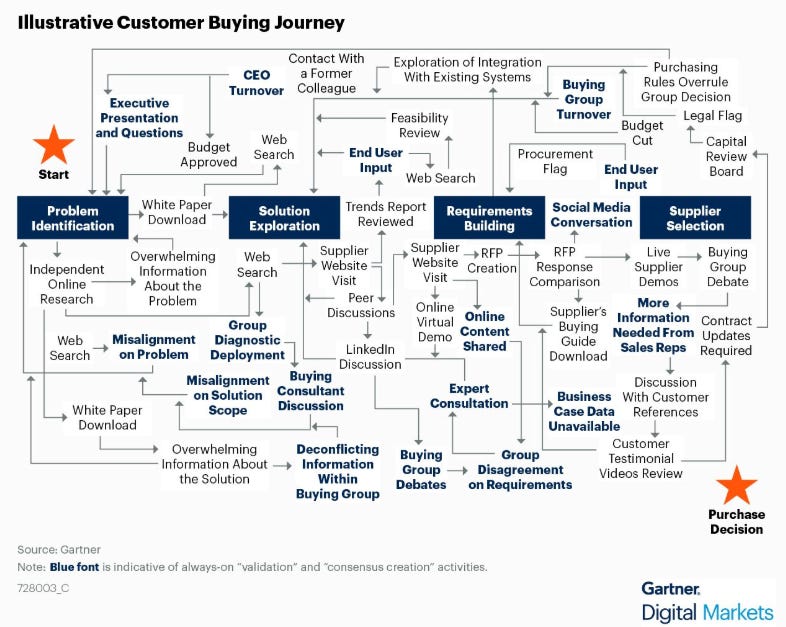

While we hear headlines about companies rocketing from $0 to $1B in the age of AI, the reality is that the buying journey in most enterprise environments is incredibly complex and challenging. There are many points where deals can wither on the vine, chase an elusive consensus, run into internal politics and more.

My friend Ross Haleliuk shared this great reminder from Gartner of just how chaotic the purchasing process can look.

AI

2025 Resilient Cyber AI Security Rewind

As promised, I've compiled a recap of most of the interviews, deep dives and analysis I've done as part of Resilient Cyber in 2025, and what a year it's been. AI, LLMs and Agents have continued to impact everything from venture capital, startups, Identity, SecOps and more.

I was joined by folks such as Chenxi Wang, Ph.D., Ed Sim and Sid Trivedi to walk through the venture capital and investment opportunities at the intersection of Cybersecurity and AI, while James Berthoty laid out his 2025 AI Security Report.

On one of the most discussed topics, using AI for Security, Grant Oviatt, Kamal Shah, Lior Div and Nathan Burke laid out why they think AI SOC is the future of SecOps.

Industry AppSec legend Jim Manico explained how to use AI as a force multiplier in AppSec, while Varun Badhwar, Amod Gupta, and Henrik Plate went beyond the buzzwords on how AI and Agents are changing, and will continue to change, AppSec.

Michael Bargury explained why we need a new security paradigm to secure the Agentic AI revolution and Vineeth Sai Narajala and Christian Posta went deep on the risks of AI, Agents and the Model Context Protocol (MCP).

Leaders such as Edward Merrett, Elad Schulman and Sounil Yu discussed the need to secure AI consumption and usage and modernizing principles such as need-to-know.

Snehal Antani came at things from the Offensive Security (OffSec) angle around Autonomous Pen Testing and Andrew Carney laid out key findings from Defense Advanced Research Projects Agency (DARPA)'s AI Cyber Challenge (AIxCC).

Not to be forgotten, Rob T. Lee covered the workforce implications of AI and the always interesting Daniel Miessler 🛡️ explained how he personally uses AI to scale his productivity.

In addition to these interviews, I covered major guides, frameworks and publications from orgs such as OWASP GenAI Security Project, Cloud Security Alliance, IBM and others on AI's impact in cybersecurity.

I've compiled all of it in the blog below, so I hope you enjoy. Huge thanks to so many industry leaders and folks who took their time to come chat and share their expertise and experiences with not just me but the community as well 🙏

How did we get to where we are in AI?

As things continue to evolve at what feels like a crazy pace, it’s helpful to reflect on how we got here. This was a really great talk from Jeff Dean, Chief Scientist of Google DeepMind.

Jeff walks through the major phases and evolutions of AI, from neural networks, backpropagation and open source tooling to Transformers, LLMs and Chain of Thought reasoning. He also discusses current models such as Gemini and where modern models excel, such as coding.

This is a great resource to close out the year, along with other talks from Stanford University’s AI Club.

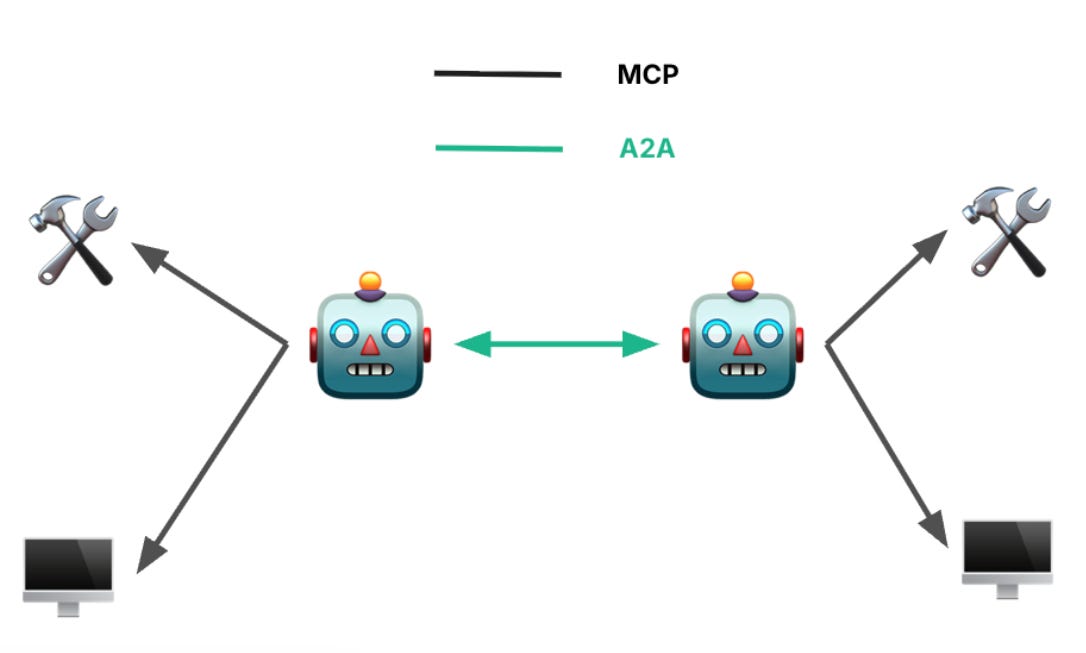

A Security Engineer’s Guide to the A2A Protocol

As we continue to see the rapid interest and adoption of Agentic AI, we have seen the rise of protocols to support agentic architectures. Among those is the Agent-to-Agent (A2A) protocol, originally from Google.

This piece from Kurt Boberg is a great primer for security engineers to understand A2A and covers core concepts such as:

What A2A is, who it is for and why we need it

Does it compete with MCP?

Core abstractions and security points of interest

Where the attack surface lies and potential protocol-level issues

An A2A audit checklist

This is a great short read on the core aspects and security considerations of A2A.
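To make the audit-checklist idea concrete, here is a minimal Python sketch that flags a few security-relevant properties of an A2A Agent Card. It assumes the card has already been fetched and parsed into a dict, and the field names (`url`, `securitySchemes`, `capabilities.pushNotifications`) are assumptions loosely based on the A2A specification, so verify them against the spec version you're auditing. The checks are illustrative and are not Kurt's checklist.

```python
from typing import Any, Dict, List
from urllib.parse import urlparse


def audit_agent_card(card: Dict[str, Any]) -> List[str]:
    """Return human-readable findings for a parsed Agent Card (illustrative checks only)."""
    findings: List[str] = []

    # Agent-to-agent traffic should not travel over plaintext HTTP.
    if urlparse(card.get("url", "")).scheme != "https":
        findings.append("endpoint is not served over HTTPS")

    # An agent that declares no auth scheme may accept tasks from anyone.
    if not card.get("securitySchemes"):
        findings.append("no authentication scheme declared")

    # Push notifications may mean the agent calls back to client-supplied URLs,
    # so callback validation (e.g. SSRF protections) deserves a closer look.
    if card.get("capabilities", {}).get("pushNotifications"):
        findings.append("push notifications enabled: review callback URL validation")

    return findings


# Hypothetical card for an internal invoicing agent.
card = {
    "name": "invoice-agent",
    "url": "http://agents.internal.example/a2a",
    "capabilities": {"pushNotifications": True},
}
for finding in audit_agent_card(card):
    print("-", finding)
```

Static checks like these only cover what a card declares; whether the agent actually enforces its declared auth is a runtime question the checklist still has to answer.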

AI SOC Solutions Market

The SOC market has arguably been one of the hottest and most discussed cyber categories when it comes to leveraging AI. That said, it is a noisy market, given the sheer number of firms pursuing AI SOC solutions.

This piece from Qevlar AI (itself an AI SOC vendor) covers the evolution of the market and key questions to ask when choosing an AI solution for your SOC. They point out that there are now more than 70 standalone AI SOC platforms, ranging from the largest industry players to many startups and founders looking to disrupt the space, many of whom boast significant venture capital backing.

The shift from assistance to agency

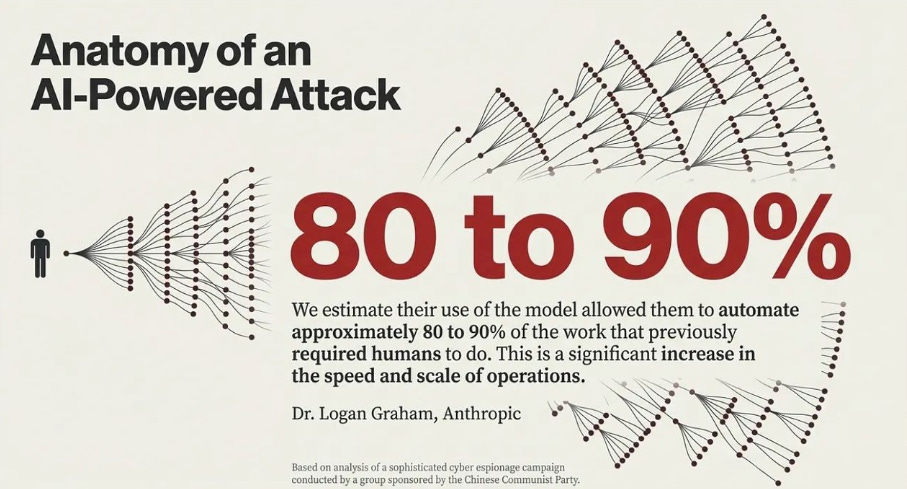

Anthropic recently made headlines when it laid out the details of the first AI-driven autonomous cyber espionage campaign. While the details are debated, the implications aren’t. Rob T. Lee of SANS Institute penned an excellent piece discussing the incident, as well as recent testimony before a House Homeland Security Subcommittee.

The testimony featured Logan Graham, Royal Hansen, Eddy Zervigon and Michael Coates. The group discussed the rapid advancement of AI-driven cyber attacks and the challenges defenders face.

Among those challenges: attackers are advancing through the spectrum of autonomy with Agentic AI faster than defenders; information asymmetry means attackers often know more than defenders about the defenders' own environments; and attackers can be “wrong” without it being as consequential.

For attackers, a failed attempt at autonomy just means taking another swing. For defenders, botched autonomy means potential business disruption and diminished trust among peers, the very things we’re trying to prevent.

There’s a gap between machine-speed attacks and machine-speed defenses, driven largely by our understandable but often risk-averse nature in cyber, and by how much harder it is to verify defenses than attacks.

This was a point excellently made by Sergej Epp recently, pointing out the binary nature of verifying offensive objectives compared to the opaque and problematic nature of verifying the success of defense measures in security.

To address the increased asymmetry between attackers and defenders, we must shorten the cycles of maturing through the phases of adopting autonomy in defense.

That’s easier said than done though, especially in large complex enterprise environments.

AI IDEs and “IDEsaster”💥

We know AI-driven development has fundamentally changed the modern SDLC. Adoption of AI IDEs and Agentic coding tools is widespread, with many citing productivity gains. We've also seen a ton of vulnerabilities highlighted in AI IDEs, as well as risks in MCP, configurations and novel attack vectors.

This piece from Ari Marzuk is both fascinating and alarming. He found that 100% of the AI IDEs and IDE-integrated coding assistants he tested were vulnerable to IDEsaster, including security vulnerabilities in 10+ market-leading products used by MILLIONS of users.

He walks through how legacy features in base IDEs can be weaponized with AI agents to achieve data exfiltration, command execution, and RCE. In the blog, he presents case studies across leading base IDEs, with examples tied to context hijacking via prompt injection, IDE settings overwrites and multi-root workspace settings.

Really great read demonstrating the complexities and challenges associated with the expanded attack surface that accompanies the introduction of Agents to IDEs.
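One cheap defensive signal against the settings-overwrite class of issues is noticing when IDE configuration files change during an agent session. This Python sketch is an assumption-laden illustration, not a mitigation from the IDEsaster research: the watched paths (`.vscode/*.json`, `*.code-workspace`) are hypothetical choices, and detecting a change is not the same as preventing one.

```python
import hashlib
import tempfile
from pathlib import Path
from typing import Dict, List

# Hypothetical set of IDE config locations an agent could rewrite.
WATCHED_GLOBS = [".vscode/*.json", "*.code-workspace"]


def snapshot(root: Path) -> Dict[str, str]:
    """Map each watched config file (relative POSIX path) to a SHA-256 of its contents."""
    digests: Dict[str, str] = {}
    for pattern in WATCHED_GLOBS:
        for path in root.glob(pattern):
            if path.is_file():
                rel = path.relative_to(root).as_posix()
                digests[rel] = hashlib.sha256(path.read_bytes()).hexdigest()
    return digests


def changed_configs(before: Dict[str, str], after: Dict[str, str]) -> List[str]:
    """Files added, removed, or modified between two snapshots, sorted by path."""
    return sorted(k for k in before.keys() | after.keys() if before.get(k) != after.get(k))


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / ".vscode").mkdir()
    (root / ".vscode" / "settings.json").write_text('{"editor.tabSize": 2}')
    before = snapshot(root)

    # Simulate an agent session that rewrites settings and adds a workspace file.
    (root / ".vscode" / "settings.json").write_text('{"editor.tabSize": 4}')
    (root / "proj.code-workspace").write_text('{"folders": []}')
    changes = changed_configs(before, snapshot(root))

print(changes)
```

In practice a flagged change would prompt a human review of the diff rather than an automatic block, since developers legitimately edit these files too.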

Anthropic Releases Open Source Tool to Automate Behavioral Evals

Anthropic recently announced that they were releasing an open source agentic framework dubbed “Bloom” which can generate behavioral evaluations of frontier AI models.

They stated it can be used to separate baseline models from intentionally misaligned models, and as an example of the tool's value, they released benchmark results for four alignment behaviors across 16 different models.

This builds on another recent release named “Petri”, which is an open source tool that lets researchers automatically explore AI model behavior profiles through diverse multi-turn conversations with simulated users and tools.

Agentic AI Already Hinting At Cyber’s Pending Identity Crisis

IAM has already been a longstanding challenge in cyber, accounting for a large portion of annual incidents and attack vectors. Now, the industry is collectively feeling that the rise of Agentic AI is going to exacerbate the already problematic space.

This piece from CSO Online discusses those challenges, such as the fact that:

“An estimated 95% of enterprises have not deployed identity protections for their autonomous agents”.

This is a topic I have discussed in prior articles myself, such as “The State of Non-Human Identity (NHI) Security”.

AppSec

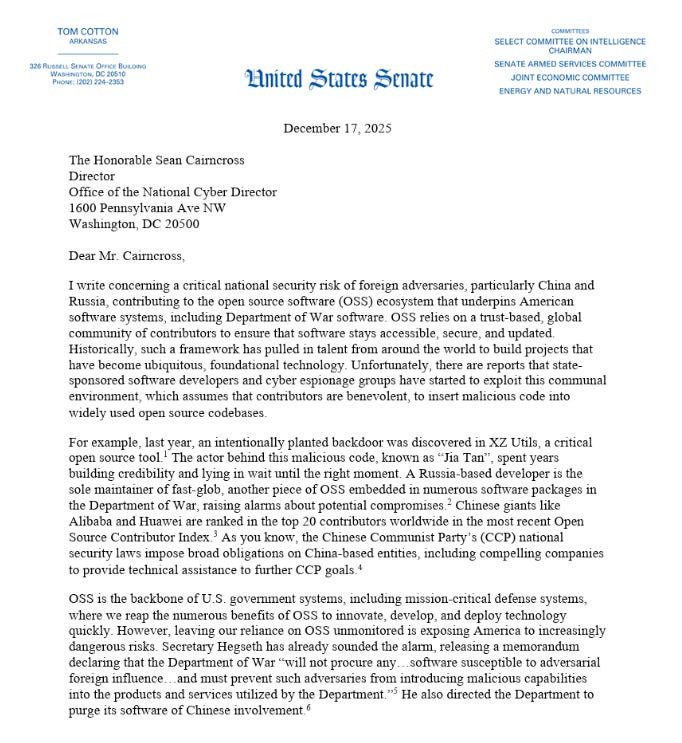

Cybersecurity = National Security

We continue to see nation state actors target the brittle open source ecosystem. Open source powers everything from consumer goods to critical infrastructure. This is a point Tony Turner and I made in our book “Software Transparency”, and the problem has only continued to grow.

Malicious actors continue to pursue novel attack vectors, some technical, some social and often a mix of both. The list of incidents is long and only expanding. Now, Senators and the Secretary of the DoW are raising alarms about the opaque and problematic challenges of open source consumption.

However, as Josh Bressers, Chinmayi Sharma and others have pointed out, OSS by its very nature is opaque and lends itself to anonymity. The U.S. Government absolutely should have better visibility into the software it consumes, including what’s in the products it buys from commercial vendors, which are themselves overwhelmingly composed of OSS.

The problem is far from easy to mitigate, though, and goes well beyond visibility to proficiency in runtime detection and response. It will be interesting to see what approaches the U.S. Government and Department of War take to address these risks in national security systems and beyond.

Endor Labs Brings Malware Detection Into AI Coding Workflows with Cursor Hooks

We all know AI coding assistants have changed the software development landscape drastically in the past 12-18 months. This includes autonomously selecting open source dependencies, executing commands and potentially introducing risks developers may not be watching closely, or even concerned about.

That’s why it is cool to see teams such as Endor Labs working with leading AI coding platforms such as Cursor. Endor recently announced an integration with Cursor’s new hooks system that inspects dependencies for malware while the AI coding agent is attempting to install them, preventing malware from ever reaching the developer's endpoint.

Development endpoints have become a common attack vector and target for malicious actors as part of the broader supply chain, and compromising them can lead to cascading impacts if attackers move laterally from the device or contribute backdoors and malicious code to codebases.
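Conceptually, inspecting a dependency before the agent installs it amounts to a gate that sees the install command first and decides whether to let it run. The Python sketch below illustrates only that idea; it is not Cursor's hook interface or Endor Labs' API. The command parsing is deliberately simplified, and `KNOWN_MALICIOUS` is a hypothetical stand-in for a real malware-intelligence lookup.

```python
import shlex
from typing import Dict, List, Set

PACKAGE_MANAGERS = {"npm", "pnpm", "yarn"}
INSTALL_VERBS = {"install", "i", "add"}


def packages_from_command(command: str) -> List[str]:
    """Extract package names (without versions) from a simple install command."""
    tokens = shlex.split(command)
    if len(tokens) < 2 or tokens[0] not in PACKAGE_MANAGERS or tokens[1] not in INSTALL_VERBS:
        return []
    names: List[str] = []
    for tok in tokens[2:]:
        if tok.startswith("-"):
            # Skip flags; flags that take a value (e.g. --registry URL) are not handled here.
            continue
        if tok.startswith("@"):
            # Scoped package such as "@scope/name@1.2.3": keep the scope, drop the version.
            names.append("@" + tok[1:].partition("@")[0])
        else:
            names.append(tok.partition("@")[0])
    return names


def check_install(command: str, known_malicious: Set[str]) -> Dict[str, object]:
    """Decide whether an agent-issued install command should be allowed to run."""
    blocked = [p for p in packages_from_command(command) if p in known_malicious]
    return {"allow": not blocked, "blocked": blocked}


# Hypothetical intel feed and agent command.
KNOWN_MALICIOUS = {"example-malicious-pkg"}
decision = check_install("npm install express example-malicious-pkg@1.0.4 --save", KNOWN_MALICIOUS)
print(decision)
```

Checking at the command boundary, before anything is downloaded, is what keeps the package's install scripts from ever executing on the endpoint.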

Cursor launched “Hooks for Security and Platform Teams”, which provides capabilities focused on areas such as:

MCP Governance and Visibility

Code Security and Best Practices

Dependency Security

Agent Security & Safety

Secrets Management

NPM Packages with 56,000 Downloads Caught Stealing WhatsApp Messages

The team at Koi Security continues to identify widespread malicious packages and extensions in the ecosystem. The latest example is an npm package, a WhatsApp Web API library with over 56,000 downloads and functional code. It’s been available for 6 months, and they’ve determined it is sophisticated malware that steals WhatsApp credentials, intercepts messages, harvests contacts and even installs backdoors.