Resilient Cyber Newsletter #76

Saviynt $700M Series B, AI Impacts Buy vs. Build, U.S. White House AI EO, State of GenAI in the Enterprise, OWASP Agentic AI Top 10, 20254 CWE Top 25 & Fixing the Broken VulnMgt Ecosystem

Welcome

Welcome to issue #76 of the Resilient Cyber Newsletter.

We’re winding down a wild 2025, with a TON of M&A activity over the year, a lot of exciting advancements at the intersection of AI and Cybersecurity and a continuously challenging landscape around AppSec and incidents.

I’ve truly enjoyed sharing my perspective with you all through the year and I’m excited about what 2026 holds!

Personal Note:

With the year coming to a close, I wanted to reflect a bit on what a journey the last few years have been professionally and personally.

To say the last several years of professional growth and development have been a whirlwind would be an understatement.

After the fallout of the SolarWinds incident and among the chaos of Log4j and many other supply chain attacks, in 2023 Tony Turner and I teamed up to write Software Transparency, covering everything from open source, commercial software, SaaS, SBOM’s and more. The problem has only grown in scale and complexity since.

A year later, in 2024, looking around at how fundamentally broken vulnerability management was, and still is, Dr. Nikki Robinson, DSc, PhD and I wrote Effective Vulnerability Management. Diving into topics such as KEV, EPSS, Reachability Analysis, Risk Based Vulnerability Management and how to build an effective vulnerability management program.

In 2025, Ken Huang approached me to partner up on Securing AI Agents, tackling the hottest topic and potentially biggest transformation in recent decades in not just cybersecurity, but in technology overall.

During that time, I also started the Resilient Cyber Substack, publishing 75+ newsletters, 200~ interviews with industry leaders, including investors, founders, researchers and CISO’s along with publishing many long form deep dives and industry analysis articles on all things cybersecurity leadership, market dynamics, AI and AppSec.

I’m incredibly thankful for the community I’ve built around me throughout that process.I continue to learn from the resources you all share, the conversations we have and our mutual efforts to create a more resilient digital society.

And, we’ve still got plenty of work to do!

Interested in sponsoring an issue of Resilient Cyber?

This includes reaching over 40,000 subscribers, ranging from Developers, Engineers, Architects, CISO’s/Security Leaders and Business Executives

Reach out below!

Cyber Leadership & Market Dynamics

Identity Management “Startup” Saviynt Closes $700M Series B

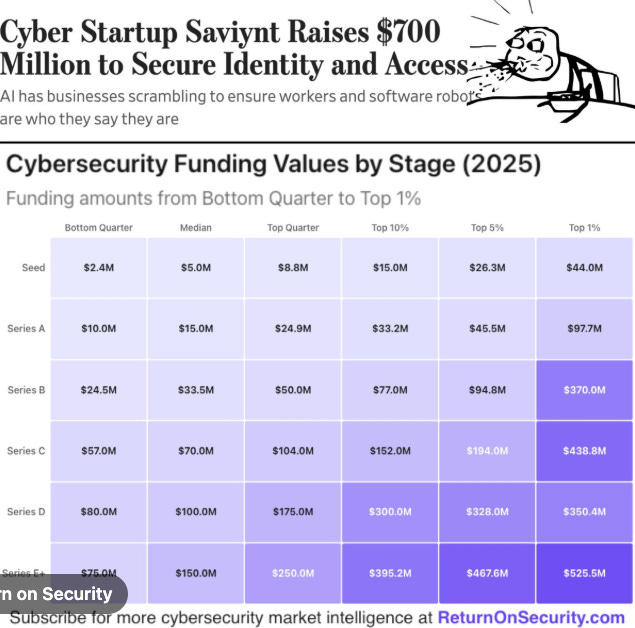

In one of the largest Series B rounds I’m aware of, Saviynt, who focuses on identity security caught headlines with a massive $700M round. KKR was the lead investor along with Sixth Street Growth, TenEleven and returning participants as well.

In the announcement their CEO emphasized that:

“The demand for secure, governed identity has never been greater, and this growth investment gives us the resources to meet it head-on.”

We continue to hear phrases such as “identity is the new perimeter”, and we’ve seen other massive moves in the Identity space this year, such as Veza being acquired by PANW as well.

Safe to say, the identity security space is highly competitive and also densely populated.

My friend Mike Privette of Return on Security (RoS) discussed the round in a recent LinkedIn post, explaining how it is one of the largest Series B rounds in cyber history at nearly 20x the 2025 median.

As he explains, this is more of a PE round than tradition VC Series B, with KKR being the lead investor. While this example is an outlier and not the norm, Mike did note that t he median Series B in 2025 is up 12$ compared to 2024.

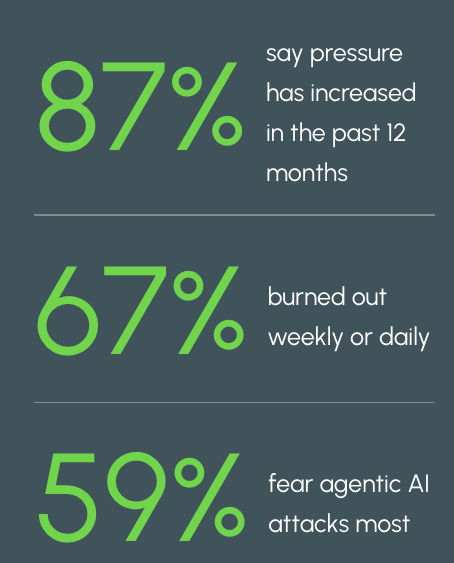

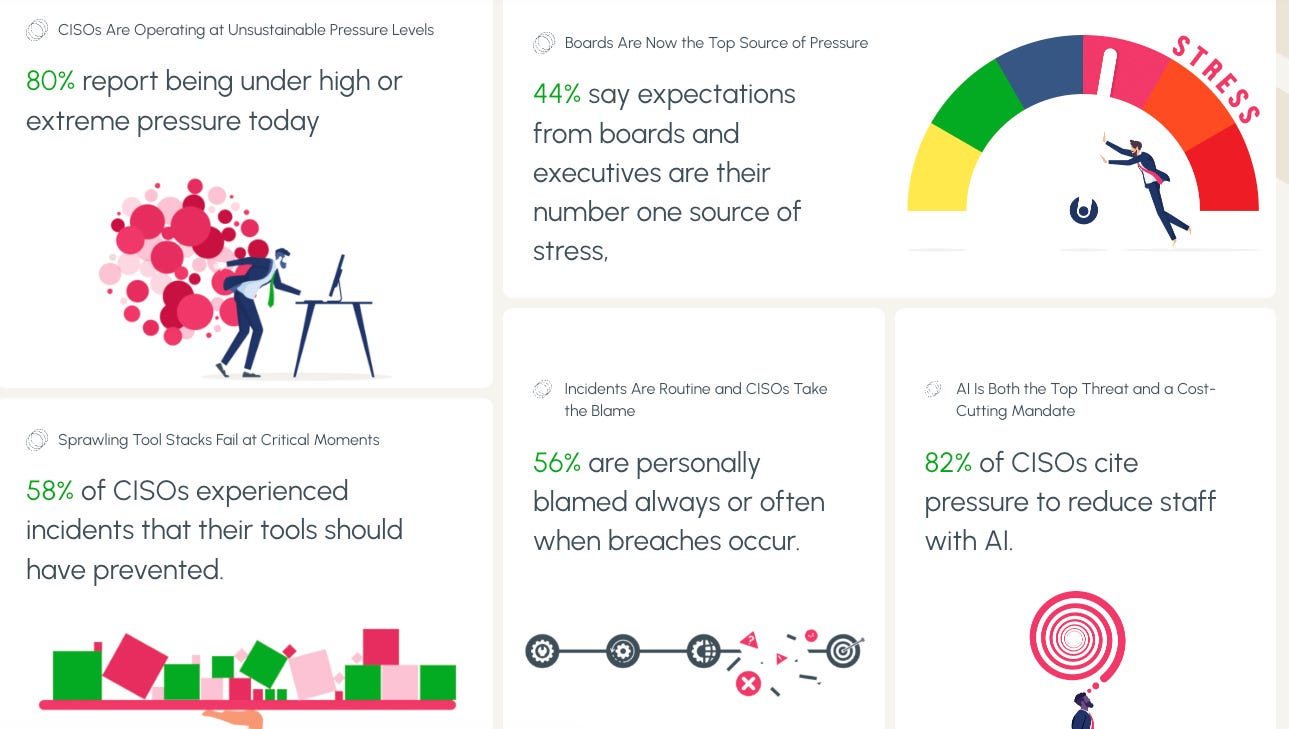

2025 CISO Pressure Index

It’s common by now to hear a lot of discussions about the pressure CISOs and security leaders face. However, there often aren’t specific surveys and studies on the topic, which is why I found this resource from Nagomi interesting.

They found that CISOs said:

Be sure to check out the full report!

Build vs. Buy is Dead - AI Just Killed It

We continue to see revived conversations about Build vs. Buy in the age of AI. I recently had shared an excellent article from Caleb Sima, where he laid out how AI is going to lead to the rise of “zombie tools”, where people chasing resume driven development, or being short sighted about the long tail of product sustainment look to build their own internal products versus buying them leads to a sprawl of orphaned zombie tools.

This recent VentureBeat piece that my friend Chenxi Wang shared with me digs into the topic too. It frames scenarios where individuals within the org can quickly spin up prototypes or versions of products quickly with agentic coding tools. The piece discusses the “old framework”, where organizations grappled with the decision to Build or Buy, with building being expensive and taxing on the existing technical talent. Now, with the rise of AI coding tools, many feel they can take on building due to a collapsing of the cost and complexity of doing so.

What I liked with this piece is it lays out a new potential sequence, as seen below:

Build something lightweight with AI.

Use it to understand what you actually need.

Then decide whether to buy (and you’ll know exactly why).

This framing is a good way to think of it, because it recognizes that Building is a long term strategic investment, but doing quick efforts to build, iterate and tinker before determining what you need and then determining what to buy is a great way to drive better decisions when you finally do commit to making a procurement, while also not framing AI developed prototypes as longterm replacements for commercial grade software products or platform.

This gets to the heart of the matter, that most organizations don’t even know what they need to begin with - but AI may let them prototype to figure it out.

Transforming Product Security with the First Agentic Security Architect

Continuing the momentum in the product security space, Prime Security recently announced their $20M Series A, led by Scale Venture Partners along with Foundation Capital and others.

Prime is an interesting player, focusing on moving Secure-by-Design from theory to tactic and making it actionable by living and breathing in the space and data systems are actually designed in to provide critical context before systems are ever developed or deployed.

Defending Your Cyber Systems and Your Mental Attack Surface with Chris Hughes

When your firewall forgets to buckle up, the crash doesn’t happen in the network first, it happens in your blindspots.

In this episode of Hacker Valley Studio, I got to join host Ron Eddings. I attempted to help reframe vulnerability work as exposure management, connect technical risk to human resilience, and broke down the scoring and runtime tools security teams actually need today.

I also provided clear takeaways on EPSS, reachability analysis, ADR, AI’s double-edged role, and the one habits I swear by. This episode fuses attack-surface reality with mental-attack-surface strategy so you walk away with both tactical moves and daily practices that protect systems and people.

Offensive Security Takes Center Stage in the AI Era

Most experienced security leaders openly admit the way we assess systems and posture is broken. It historically involved snapshot-in-time assessments, paper-based compliance and other inefficient methods. While we move in months, systems change in minutes and attackers exploit what is actually running in production (a fact that is also leading to the rise of new categories such as Application Detection & Response).

In this piece on CSO, various CISO’s and security leaders weigh in on the emphasis they’re playing on Offensive Security (OffSec) and the importance of iterative real-time assessment of their production environments. AI is driving that even more, both from the perspective of attackers leveraging it, as well as innovative companies leveraging AI and Agents to bring ongoing Pen Testing and OffSec to reality. Good examples include Horizon3, XBOW and others.

With the evolution of AI and Agents, we have the potential to take historically cumbersome activities such as Threat Modeling, Pen Testing and Purple Teaming and make them iterative and ongoing, as part of the SDLC, and if compute and inference costs continue to collapse, we will see them become even more economically practical to pursue via AI as well.

Trouble for Microsoft When It Comes to Agentic AI?

This piece states that Microsoft has cut its sales targets for its Agentic AI Copilot software significantly, potentially up to 50% as it struggles to get interest from customers. The piece also states it is falling behind others such as ChatGPT and Google’s Gemini.

ServiceNow (SNOW) To Acquire Armis for $7 Billion

News recently broke that industry giant SNOW is in talks to acquire Armis for a potential $7 billion deal. Armis was last valued at $6.1 billion. Armis focuses on securing and managing connected devices and just raised $435 million in a funding round in recent months, and had discussed their IPO ambitions openly.

As the CNC article notes, while many may have IPO ambitions, more often than not, most cyber companies opt to stay in the private market given the choppiness of the public markets, as well as increased scrutiny it brings.

This news comes as SNOW was recently announced to be acquiring identity security company Veza as well, demonstrating SNOW is on an acquisition spree, focusing on critical areas of cyber from devices and endpoints to identity.

The Container Vulnerability Ecosystem Stays Hot

While the secure base images and container security ecosystem has been dominated by teams such as Chainguard, in recent years we’ve seen several existing and new players enter the field, further validating this category.

The latest example comes from Echo, who announced going from $0-$50M Series A in a LinkedIn post from their Cofounder & CEO Eilon Elhadad. The team brings strong venture support too from players such as Notable, Hyperwise, SentinelOne and SVCI.

AI

The White House Drops an EO for a U.S. National AI Regulatory Framework

I’ve been sharing a bit lately about the ongoing debate about State vs. Federal AI regulation here in the U.S. In the absence of a Federal regulatory framework for AI, many states have rolled out their own AI regulations and requirements.

Many, including the current administration argue it is going to hinder innovation, be too costly and cumbersome for industry and stifle the U.S. ability to compete internationally both economically and from a national security perspective.

This latest EO titled “Ensuring a National Policy Framework for AI” looks to address the lack of a Federal level AI regulation framework and also block state level efforts that may impede overarching Federal goals.

The U.S. Federal government (and the tech sector) are looking to avoid a patchwork quilt of countless state level AI regulations that will be costly and problematic to navigate and comply with and could slow down the U.S. raise to out innovate China in particular when it comes to AI.

The EO calls for a “minimally burdensome” national policy framework for AI. It also stresses the need to be able to innovate:

“To win, US AI companies must be free to innovate without cumbersome regulation.”’

The EO does allow for various State AI laws too though, specifically in areas dealing with child safety, State procurement etc.

AI Eats the World

I inevitably find myself consuming more long form video and audio content as I am learning and staying up to date on the industry, cyber and AI. Few do macro analysis related to trends better than Benedict Evans.

In this excellent talk with a16z Benedict dives into all things AI, speculation around bubbles, where AI is or isn’t generating ROI, its role as the next platform shift and much more.

2025 State of GenAI in the Enterprise

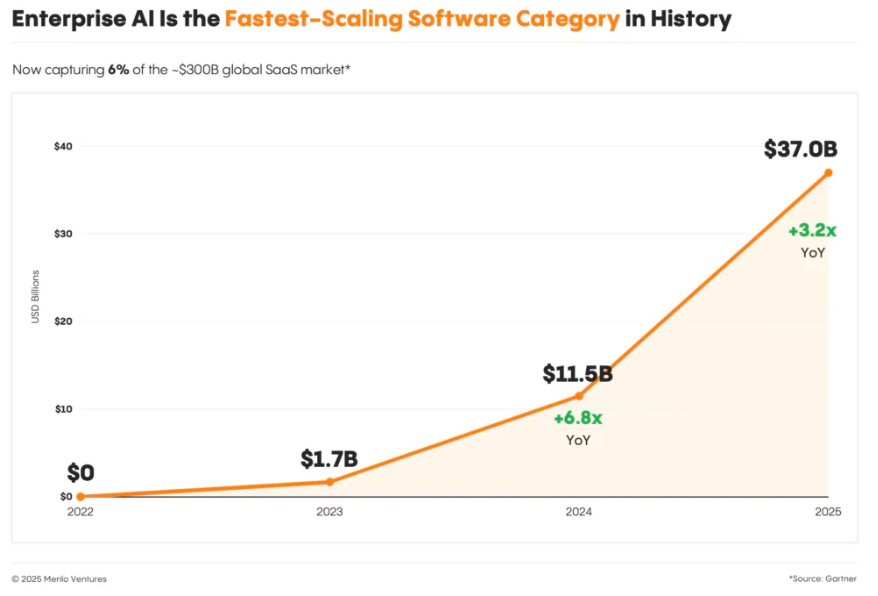

AI is experiencing adoption and usage at an unprecedented pace, and the latest Menlo Ventures 2025 State of GenAI in the Enterprise Report lays out how.

There’s some really excellent insights in this latest report, including:

↳ GenAI spending within the enterprise grew 3.2x from $11.5 billion in 2024 to $37 billion in 2025. That includes over 10 products generating over $1 billion in ARR and 50 generating over $100 million in ARR.

↳ Foundation models saw the most spending but other key areas included model training and AI infrastructure, however Application layer AI spending outpaced the Infrastructure Layer, coming in at $19 billion across horizontal, departmental and vertical use cases.

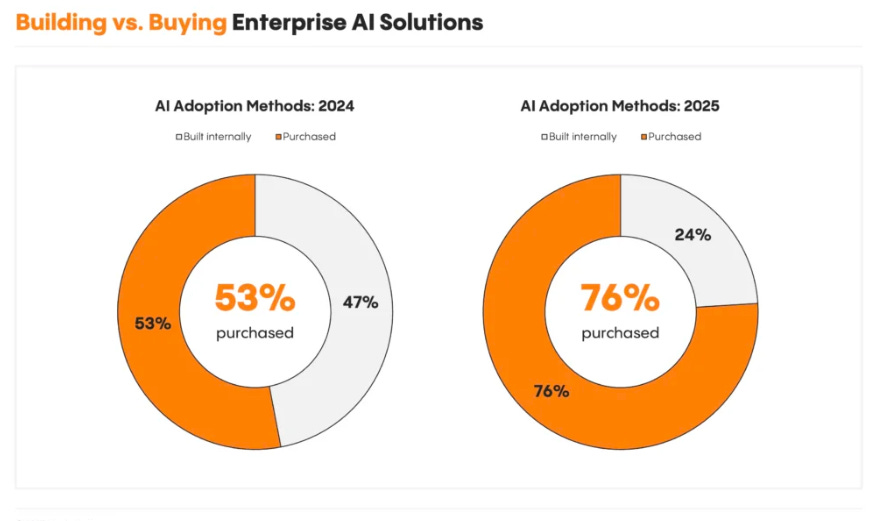

↳ The age old Build vs. Buy paradigm is changing, as organizations went from building 47% of their enterprise AI solutions in 2024 down to only 24% in 2025, and not only are they buying, they’re doing so at higher rates than traditional SaaS, with 47% of AI buyers converting from pilot to Closed/Won.

↳ Coding continues to dominate the department AI spending, pulling down $4 billion or 55% of ALL department AI spend in 2025 (this has AppSec implications!), most of this is focused on Copilots by poised to shift to Agents as they mature.

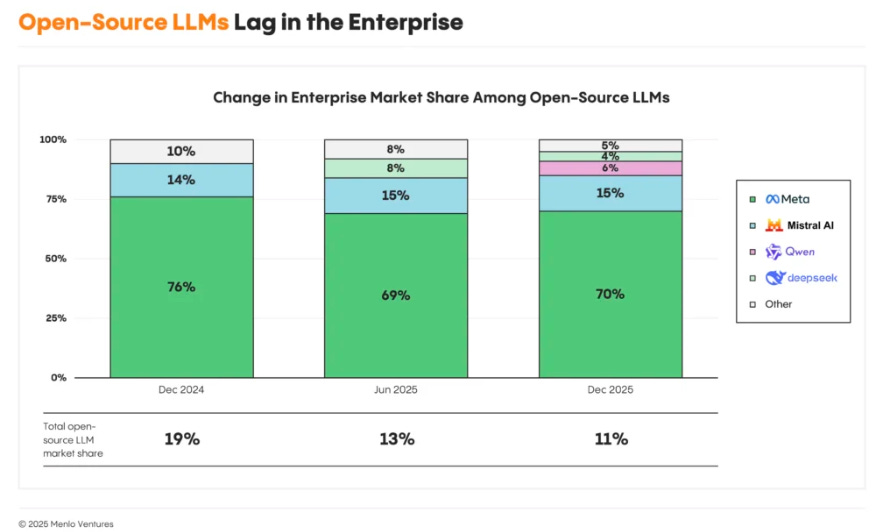

↳ For all the talk of Open vs. Closed Source Models, Closed Source is the clear leader, with open-source LLMs only holding 11% of the market share in 2025 and dropping.

The report is full of these insights and more, many of which have downstream takeaways for security leaders and practitioners, as we position to secure this unprecedented level of adoption and usage in AI

Unpacking the OWASP Top 10 For Agentic AI

Recently I shared how the OWASP GenAI Security Project released the first official Top 10 for Agentic AI. We’re entering what many suspect will be the decade of AI agents, with Agentic AI poised to disrupt nearly every industry and vertical.

While doing so, during adoption and implementation, Agents inevitably will also introduce a significantly expanded attack surface and complexity, along with novel risks and threats.

We continue to see Agentic AI as one of the most discussed and exciting areas of the AI evolution, with potential across nearly every industry and vertical, including Cybersecurity.

However, it comes with significant risks as well, which OWASP has codified into the following Top 10:

↳ Agent Goal Hijack

↳Tool Misuse & Exploitation

↳Identity & Privilege Abuse

↳Agentic Supply Chain Vulnerabilities

↳Unexpected Code Execution (RCE)

↳Memory & Context Poisoning

↳Insecure Inter-Agent Communication

↳Cascading Failures

↳Human-Agent Trust Exploitation

↳Rogue Agents

This builds on foundational work from the OWASP Agentic Security Initiative (ASI) prior, such as the Agentic AI - Threats and Mitigations publication.

This is an amazing community-driven effort with contributions by many experts and industry leaders such as John Sotiropoulos, Keren Katz, Ron F Del Rosario, Kayla Underkoffler, Allie Howe and Idan Habler, PhD among others.

I’ve been honored to get to serve on the ASI Expert Review Board and collaboration with friends and leaders such as Steve Wilson, Michael Bargury and others.

In my latest article I break down the Top 10 for Agentic AI, including key takeaways, broader industry context and related resources and concepts.

If you’re looking for a tl;dr - this is it!

Zenity Expands AI Security with Incident Intelligence, Agentic Browser Support and New Open Source Tool

The team at Zenity continues to impress me with their rapid innovations in the Agentic AI Security space. Building on their industry leading platform and research that I routinely find helpful, they announced a trio of capabilities recently:

An intelligence layer for correlating AI-driven security incidents.

Expanded coverage for Agentic Browsers (something that quickly got on the radar of CISOs and Security leaders due to the significant risks Agentic Browsers pose!)

An open source tool from Zenity Labs to evaluate emerging LLM manipulation techniques.

Jason Stanley, who leads AI Research Deployment for ServiceNow actually had an excellent blog titled “Incident Agents, Pattern Cards, and the Broken Flow from AI Failures to Defenses” where he used Zenity’s intelligence layer as an example of addressing gaps when it comes to Agentic AI for cybersecurity defense and incident use cases. Be sure to give Jason Stanley a follow on Substack.

Securing AI Agents with Cisco’s Open Source A2A Scanner

While MCP has dominated a lot of the discussion about protocols supporting Agentic AI in 2025, another critical protocol is A2A, which focuses on inter-agent communications, while MCP is more so focused on tool use and resources.

Cisco defines A2A as:

“The A2A protocol defines a standardized mechanism by which agents (that may have been built on different models or platforms) can communicate and work together”.

The team at Cisco, including my friend Vineeth Sai Narajala, recently announced their open source A2A scanner which can validate agent identities and inspect their communications for threats.

I’ve had Vineeth on the Resilient Cyber Show in the past to dive into all things AI, Agents and Protocols.

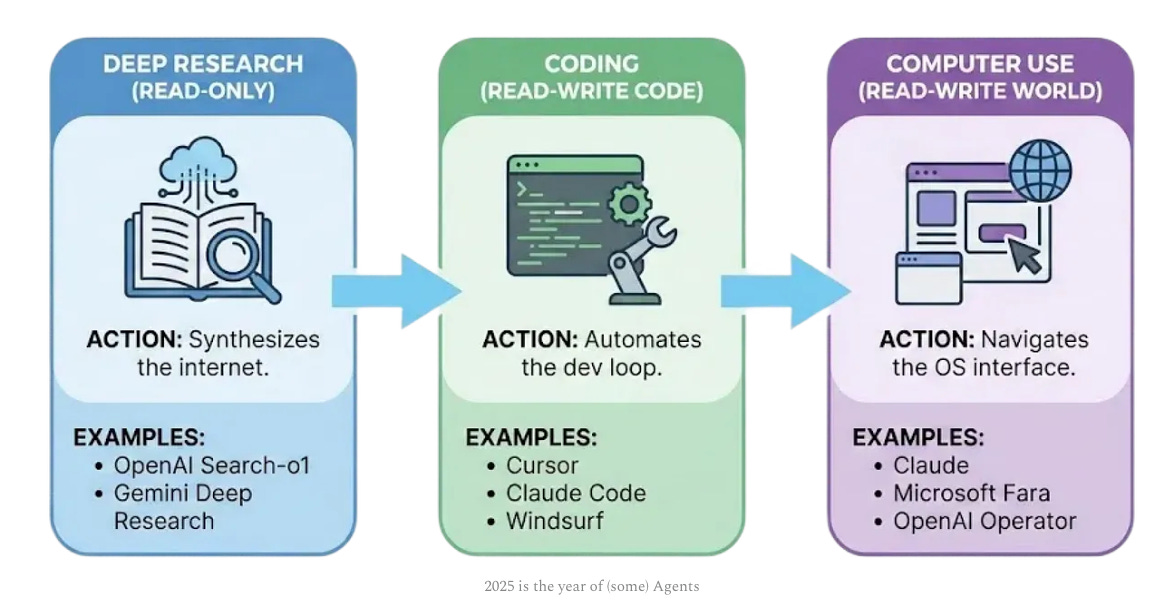

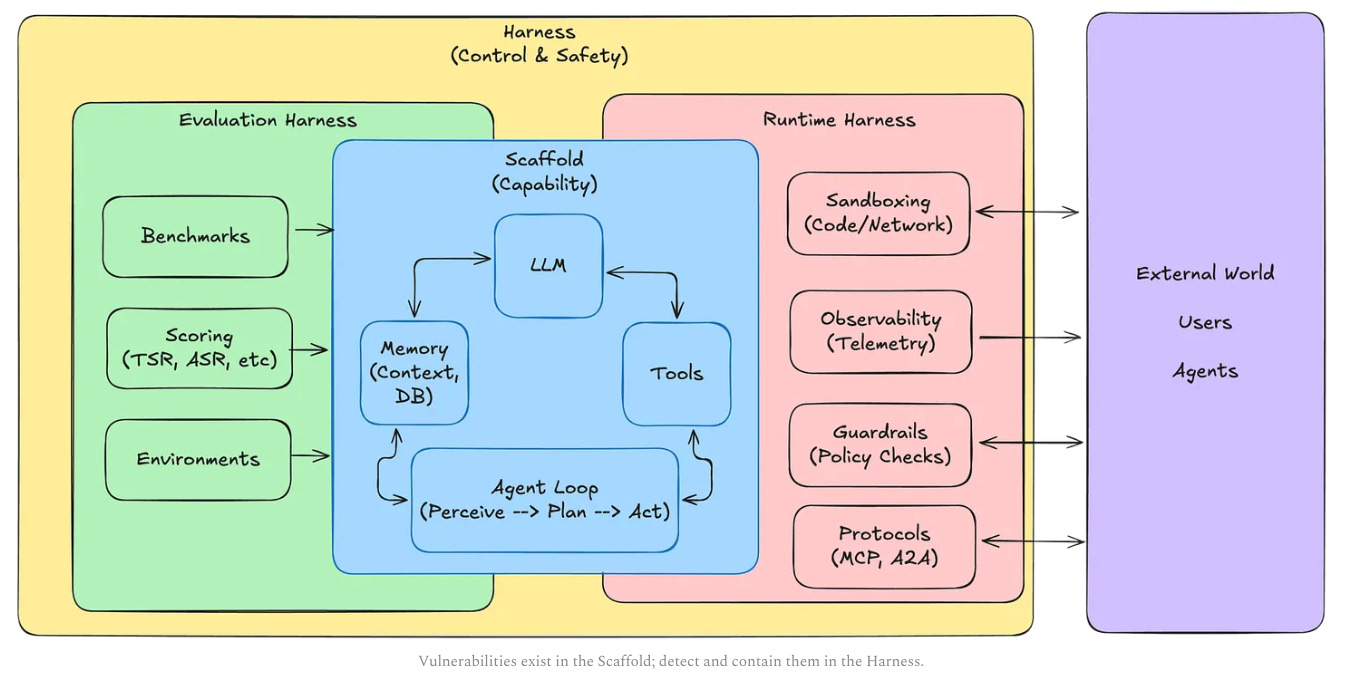

Your AI Agent Just Got Pwned

I recently ran across Matthew Maisel‘s incredible blog titled “Your AI Agent Just Got Pwned: A Security Engineer’s Guide to Building Trustworthy Autonomous Systems”. It’s an incredibly detailed piece, diving into the control and safety of agentic systems and the nuances on everything from evaluations to runtime.

Matt dives into the brittleness of real-world task success in agents, how we can engineer trustworthy agentic systems and ties his perspective to many real-world agentic risks and vulnerabilities that have been disclosed in leading platforms and products.

This is genuinely one of the best pieces I’ve read on Agentic AI Security in 2025.

AI Agents and the Core of IAM: Key Takeaways from the Gartner IAM Summit 2025

Identity continues to dominate a lot of the discussion in cybersecurity, with major acquisitions, new entrants and strategic moves by large players. This is true in the Agentic AI space too, and Gartner recently hosted a 2025 IAM Summit. Astrix’s Field CTO Jonathan Sander shares the key takeaways in a helpful blog.

Jonathan emphasized that the key theme of the event was still that Identity is the core of everything, but the new twist this year of AI Agents, which makes sense, given it is a theme that has dominated many conversations beyond just Identity in 2025. The conversations also included the need to map connections between the low level technical elements of IAM, to broader business functions and value.

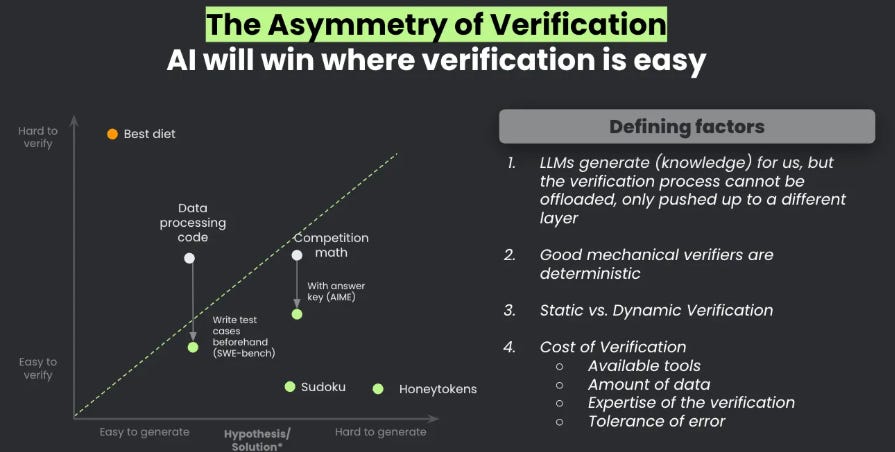

Winning the AI Cyber Race: Verifiability is All You Need

We continue to see a lot of theoretical and not so theoretical discussions about AI in Cyber, and who is or will win, defenders or attackers. A critical part of the challenge that often gets neglected is that AI thrives on verifiability.

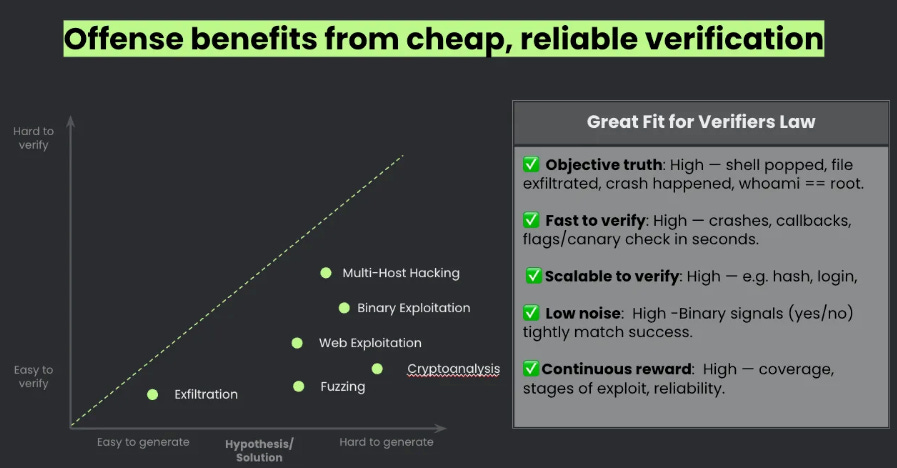

As Sergej Epp lays out in an excellent article, attackers often have it much easier when it comes to verifiability. Did you get a shell or not? Did you compromise a host or not? Did you exploit a vulnerability or not?

Defenders? Not so much

We’re often drowning in massive noisy data, thousands and millions of alerts, notifications, false positives and logs. As Sergej says:

“Spring is here, and offense is winning”

He cites a quote from OpenAI’s Jason Wei which states “The ease of training AI to solve a task is proportional to how verifiable that task is”.

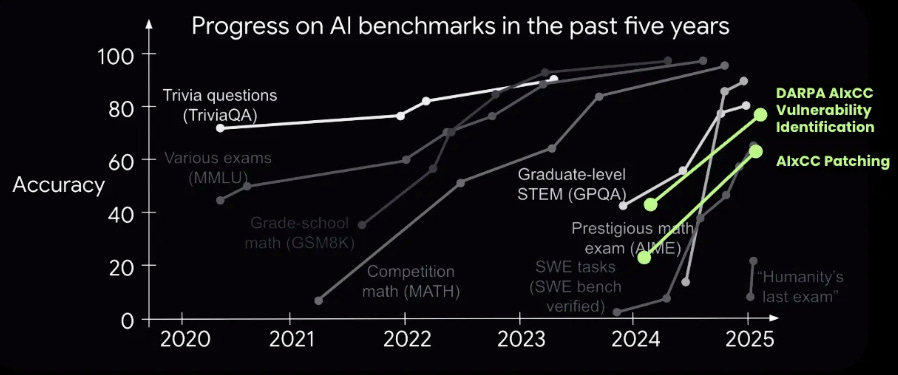

This is in part why we continue to see AI, LLMs and Agents scale massively in terms of benchmark scoring at CtFs and other binary activities that have clear verifiable measures of success.

As we all know in cyber defense, things are far murky and opaque. His blog provided the below images:

As Sergej lays out, until cyber defenders address the verifiability gap and challenges, offense use of AI is likely to far outpace defenses success, which is a very troubling projection, given how defenders already struggle.

However, as Sergej noted in his blog:

“Here’s the uncomfortable truth: most defensive security tasks sit in the hard to verify quadrant.”

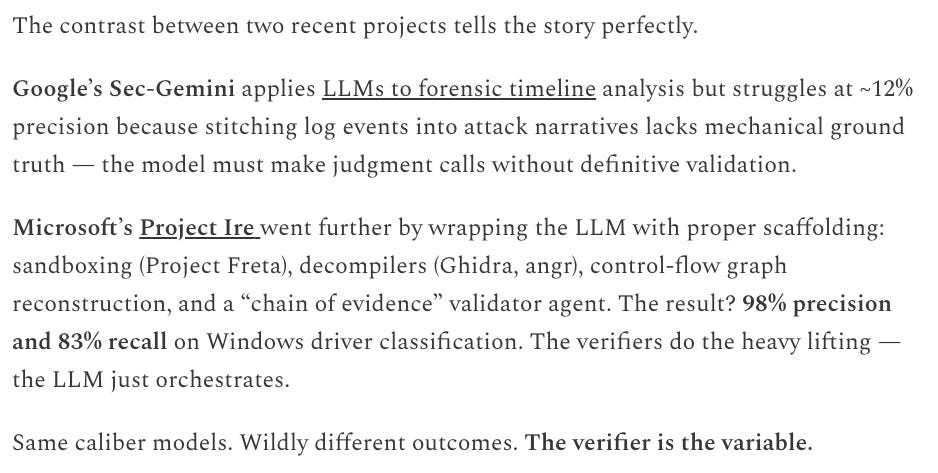

Sergej does go on to stress that the key here is coupling LLM’s and Agents and proper tool scaffolding, and showed two examples:

AppSec

MITRE Releases 2025 CWE Top 25

Every year, similar to other industry AppSec resources, MITRE updates their “CWE Top 25”, which are the Top 25 Common Weaknesses and Enumerations (CWE). This represents:

“This annual list identifies the most critical weaknesses adversaries exploit to compromise systems, steal data, or disrupt services.”

MITRE and Cybersecurity and Infrastructure Security Agency recently shared the 2025 CWE Top 2025. Below are some quick takeaways.

↳ XSS continues to dominate the top spot

↳Missing Authorization jumped 5 positions to Number 4.

This is defined as “The product does not perform an authorization check when an actor attempts to access a resource or perform an action.” There’s strong parallels here with the recent update of the OWASP Top 10, which has Broken Access Control (#1) and Authentication Failures (#7).

So despite the past several years of industry hype around Zero Trust, we seem to be not doing so hot there, and Agents are poised to exacerbate these fundamental problems at-scale.

The CWE Top 25 is a great resource for defenders to prioritize and target high priority vulnerabilities, as well as for vendors to potentially due the same, driving Secure-by-Design architectural, design and development decisions.

Fixing the Broken Vulnerability Management System

In this episode I got to hangout with my friend and Vulnerability Researcher Patrick Garrity 👾🛹💙 of VulnCheck to do a roundup of the latest trends, analysis and insights into the vulnerability and exploitation ecosystem throughout the past year.

We covered a lot of great topics, including:

The most notable vulnerability trends over 2025, including what has changed, or stayed the same in the past year.

Continued challenges around the NIST NVD and CVE, the sprawl of competing vulnerability databases and vulnerability identification schemes, challenges with funding, centralized vs. decentralized approaches and what the future holds.

What the life of a vulnerability researcher looks like under the hood, including participating in coordinated vulnerability disclosure.

Efforts from Patrick’s team at VulnCheck, including their Known Exploited Vulnerability catalog, covering gaps from the CISA KEV, as well as https://research.vulncheck.com that provides excellent graphs and visualizations, such as the one below showing vulnerability exploitation timelines.

Patrick’s thoughts on what the vulnerability management landscape may look like in 2026.

Rethinking AppSec for AI-Driven Applications

I recently had a chance to join Fred Sass and the Security Compass crew for a wide ranging discussion on all things AI and AppSec. We covered topics such as:

The impact AI is having on AppSec

The diverse regulatory landscape

Key frameworks and best-practices to explore

How AppSec needs to evolve in the era of AI-driven development

And much more. This was a fun conversation, and we tried to cover a lot of ground.