Resilient Cyber Newsletter #75

AI-Driven Zombie Tools, Cyber’s Largest Series A Ever, The ROI of AI, Agentic AI Security Scoping Matrix, Agentic Exposure Management & 2025 CVE Growth Report

Welcome!

Welcome to issue #75 of the Resilient Cyber Newsletter.

We’re back after taking a week off for the holiday season. I hope everyone is excited and recharged, because we have a lot of ground to cover, from major fundraising announcements and innovations at the intersection of AI and cybersecurity to, of course, more AppSec and supply chain security incidents and innovations alike.

So, here we go!

Board Expectations Are Now a Bigger Threat Than the Threats Themselves

Cybersecurity leaders aren’t getting squeezed by the attack surface as much as they’re getting squeezed by their stakeholders. Nagomi’s new CISO Pressure Index shows a role pushed past the edge, with nonstop incidents and tool overload taking a back seat to a deeper issue: board expectations that keep rising while support keeps shrinking.

CISOs say the personal cost is showing up in the business. Burnout is hitting readiness, and many feel their jobs are on the line every time an incident breaks through defenses that were “in place.”

The biggest friction point emerges in the boardroom. Directors want clean answers and business-friendly metrics, while CISOs juggle sprawling stacks, lean teams, and little time to reset between incidents. That disconnect fuels blame, weakens trust, and leaves leaders exposed in ways no tooling fix can solve.

Nagomi’s report digs into how this gap formed, why it’s getting worse, and what has to change if organizations want security leadership that can actually keep up.

Cyber Leadership & Market Dynamics

The Era of the Zombie Tool

While the AI wave may be new and novel, one thing that isn’t is the age-old debate of Build vs. Buy: whether an organization should build a product or tool internally or buy one from the market. Historically, teams without extensive internal expertise and resources opted to buy, given the economics of sustaining a tool and the expertise needed to develop and maintain it.

But AI has both changed and complicated that calculus: building things is now “easier”, but maintaining them is anything but. Cybersecurity industry leader Caleb Sima recently wrote an excellent piece on this dynamic, discussing what he calls “Zombie Tools”.

“But this speed is a Trojan horse. While AI has democratized the ability to build, it has effectively weaponized the ‘Build Trap.’ It disguises a massive future maintenance burden as immediate, low-cost progress. We are about to witness a crisis of ‘zombie tools’ and the explosion of OpEx, driven not by necessity, but by a hidden psychological bias in how we value engineering work. Here is why your ‘free’ internal tool is about to become your most expensive liability.”

Caleb discusses it from the security perspective, highlighting that shifting engineers to building and maintaining an internal tool has a cost, and it also distracts them from tackling the organization’s risks and posture problems. The quote below summarizes it well:

Caleb also highlights common factors driving this behavior, such as “career-driven development” and identity bias; in short, the perceived benefits to individuals of getting to build and create, and of being associated with making things.

Caleb covers the Build vs. Buy paradigm with an AI twist in an excellent way, and this is a great read for anyone looking to understand what is unfolding, and will continue to unfold, in enterprises as folks are deluded into thinking they can just build something rather than buy it, while ignoring the long-tail challenges and complexities that come with doing so.

302 Days from Stealth to the Largest Cyber Series A in History

AI SecOps startup 7AI made some big headlines this week when they announced an insanely large $130 million Series A, the largest Series A in cybersecurity history. They discussed their evolution from passion to proof when it comes to the potential for agents to transform security operations (SecOps).

They unpack why the math is broken: throwing more and more bodies and detection tools at SecOps to chase down threats and alerts leads to fatigue and burnout while risks keep materializing.

They also tout processing more than 2.5 million alerts, conducting over 650,000 security investigations, driving investigation timelines down from hours to minutes, and reducing false positives by 95-99%, all impressive metrics.

The announcement also claims they have conducted the world’s largest agentic security deployment, with DXC Technology, in only 8 weeks.

The founders here boast a strong history of building companies, and they’re backed by strong investors, so this is definitely one to keep an eye on.

The ROI of AI in 2025

AI has absolutely dominated the headlines and investments, both within and beyond cyber, accounting for a large portion of U.S. GDP growth in 2025. But what is the ROI of AI? That is a question many are wondering about and even debating, especially with earlier reports from MIT and others citing how many AI efforts end up failing or falling short of their goals for the organization.

That’s why this report from Google was super insightful on the topic.

There’s a ton of data in the report, so I recommend reading it, but the key insights are summarized below:

There are also a few key security findings worth highlighting.

This includes the fact that SecOps and Cyber are listed among the most relevant cross-industry AI agent use cases:

This isn’t too surprising, as I have written quite a bit about the dominant role of AI in SecOps in particular, in prior articles such as:

Additionally, Data Privacy and Security continues to be one of the leading blockers to adoption and a key consideration when evaluating LLM providers. This is refreshing, as it shows organizations and consumers are thinking about the security implications of AI adoption even as they look to keep pace and innovate.

CMMC - A $6.7B Market by 2033?

I recently came across an interesting post from Micah Dickson, who claimed that the Department of War’s (DoW) Cybersecurity Maturity Model Certification (CMMC) program will create a $6.7B industry by 2033.

He argues it creates two massive markets: a “total CMMC compliance market” (e.g., internal staff, software, hardware, consulting, assessments) and a “third-party services market” (consulting and assessments). He broke them down as follows:

THE TWO MARKETS:

Total CMMC Compliance Market (all costs):

2024: $2.1B (internal staff, software, hardware, consulting, assessments)

2033 Projected: $6.7B

Growth Rate: 15.8% CAGR

Third-Party Services Market (consulting and assessments):

Pre-2024: $56M (voluntary early adopters)

2026: $800M (phased rollout)

2031 Projected: $3.5B

Growth Rate: 62x expansion
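As a quick sanity check on these figures, here is a minimal Python sketch using the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The helper names are illustrative, not from the original post, and the inputs are simply the numbers quoted above. On those inputs, $2.1B growing to $6.7B over the nine years from 2024 to 2033 implies a CAGR of roughly 13.8%, while the quoted 15.8% lines up more closely with an eight-year window; that may simply reflect a different base year in the underlying model (an assumption, since the methodology isn’t shown here). The third-party services multiple, $3.5B over $56M, works out to 62.5x, consistent with the quoted 62x.

```python
# Sanity-check the CMMC market figures quoted above (all values in USD billions).
# Helper names are illustrative; they are not from the original post.


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate implied by growing from `start` to `end` over `years` periods."""
    return (end / start) ** (1 / years) - 1


def project(start: float, rate: float, years: int) -> float:
    """Value of `start` after compounding at `rate` for `years` periods."""
    return start * (1 + rate) ** years


total_2024, total_2033 = 2.1, 6.7               # total compliance market, as quoted
services_pre_2024, services_2031 = 0.056, 3.5   # third-party services market, as quoted

print(f"Implied CAGR over 9 years (2024-2033): {cagr(total_2024, total_2033, 9):.1%}")
print(f"Implied CAGR over 8 years:             {cagr(total_2024, total_2033, 8):.1%}")
print(f"$2.1B at 15.8% for 9 years:            ${project(total_2024, 0.158, 9):.1f}B")
print(f"Third-party services multiple:         {services_2031 / services_pre_2024:.1f}x")
```

Running it prints roughly 13.8%, 15.6%, $7.9B and 62.5x, which is why the growth-rate line reads as a rounded or differently based projection rather than a precise one.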

CMMC is poised to impact 220,000 defense contractors in the defense industrial base (DIB), with tens of thousands of them requiring assessments from C3PAOs, and only ~70 firms currently authorized to conduct those assessments.

On one hand, these are very interesting metrics for those offering CMMC compliance consulting and assessment services. But I think they also highlight the massive financial burden CMMC will impose on the DIB, which many SMBs simply won’t be able to afford. That could end up limiting the pool of vendors the DoW gets to work with, and its access to innovative technologies and services as a result.

Design Driven Product Security?

Over the last several years, we’ve seen concepts such as “Secure-by-Design” move from longstanding ideas to something people are actively trying to put into practice. This push has notably been led by CISA, with its Secure-by-Design initiative and pledge, championed by then-Director Jen Easterly.

We’re now seeing startups trying to materialize the concept in products, with the latest example being Clover, alongside others such as Prime Security. A key emphasis is meeting builders where they live and work, where conversations, decisions and potential risks manifest, such as design docs, Slack threads and tickets, among other sources.

Clover recently discussed the concept in their blog about coming out of stealth with $36 million in funding to help tackle this challenge.

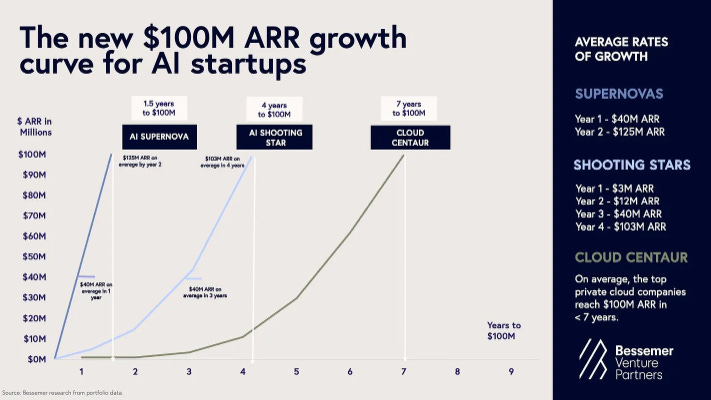

Most cyber companies simply can’t scale as fast as the new AI startups

Earlier this year I shared Bessemer’s 2025 State of AI report, in which they discussed the new $100M ARR growth curve for AI startups. This spoke both to a new era of venture-backed startup growth and to what “good” now looks like.

The problem is that this doesn’t apply to every industry, especially cybersecurity, due to factors that make our industry unique: its focus on reducing risk, its emphasis on trust, and the fact that even our industry’s dominant names, such as Zscaler and CrowdStrike, were far from overnight successes, or “supernovas” in Bessemer’s terminology.

In typical Ross Haleliuk fashion, my friend Ross breaks down what makes cyber unique, and why, despite the new normal being driven by AI, most cybersecurity startups won’t match the growth rates of AI startups.

SailPoint’s Authorization Opportunity

Identity and Access Management (IAM) remains fundamental to sound cybersecurity, and many now argue identity is the most critical aspect of cyber. There’s a strong case to be made too, given credential compromise and identity-related attacks continue to dominate reporting year-over-year (YoY).

We also continue to hear catchy phrases highlighting this, such as:

“Hackers don’t hack in, they log in”

“Identity is the new perimeter”

We’ve seen acquisitions in the space too, such as ServiceNow’s (SNOW) acquisition of Veza. In this piece from Strategy of Security, my friend Cole makes the case that authorization is about to have a moment and SailPoint is here for it, building on its existing ARR of nearly a billion dollars.

Cole argues that authentication is important but authorization is key (and I agree). Cole dives into the complexities of managing permissions and entitlements (i.e., authorization), how the growth of apps, APIs, and AI will only exacerbate these challenges, and how SailPoint is among those strongly positioned to address them.

Cole also highlights some metrics that show the scale at which SailPoint operates:

125 million identities in production

5 billion entitlements under management

35 billion SaaS account changes processed (last twelve months)

AI

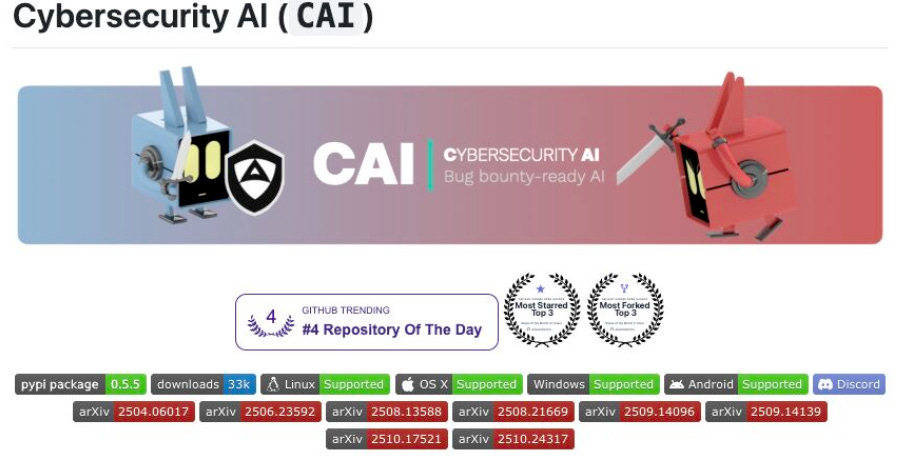

Cybersecurity AI (CAI)

I recently came across this excellent repo for CAI, which is an “open-source framework that empowers security professionals to build and deploy AI-powered offensive and defense automation”.

It includes:

🤖 300+ AI Models: Support for OpenAI, Anthropic, DeepSeek, Ollama, and more

🔧 Built-in Security Tools: Ready-to-use tools for reconnaissance, exploitation, and privilege escalation

🎯 Agent-based Architecture: Modular framework design to build specialized agents for different security tasks

🛡️ Guardrails Protection: Built-in defenses against prompt injection and dangerous command execution

What’s really cool is seeing its widespread adoption, with the repo citing various implementations across the industry, from discovering vulnerabilities to conducting comprehensive security testing.

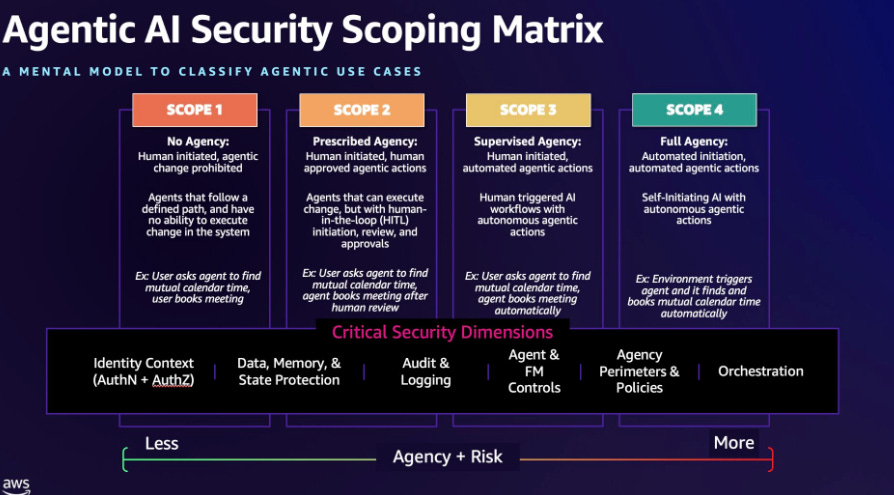

The Agentic AI Security Scoping Matrix

We know we’re entering the Agentic AI era, with excitement from nearly every industry and sector, including investors, startups and enterprise leaders.

However, there are a lot of potential risks that go along with the opportunities Agentic AI presents. This is something Ken Huang and I have tried to highlight in our book, Securing Agentic AI, as have others, including Michael Bargury, Gadi Evron, Idan Habler, PhD, and Vineeth Sai Narajala.

That said, it can be challenging to think about how to secure these complex environments, architectures, and potential attack surfaces.

That’s why AWS’s new Agentic AI Security Scoping Matrix is a great resource for the community. It involves “four architectural scopes that represent the evolution of agentic AI systems based on two critical dimensions: level of human oversight compared with autonomy and the level of agency the AI system is permitted to act within.”

This is a great blog from Aaron Brown and Matt Saner on the matrix, and how practitioners can use it to map and minimize Agentic AI security risks, as well as understand the broader Agentic AI paradigm shift.

AppSec

Elevating CTEM with Agentic Exposure Management

We’ve watched the industry mature from legacy Vulnerability Management toward Continuous Threat Exposure Management (CTEM).

It’s a topic I’ve written about before, drawing on Zafran Security’s guide for evolving from VM to CTEM. The goal is to answer critical questions, such as what matters most, whether the organization is actually exposed, and what the potential impacts are, while conducting critical activities from asset identification through proactive threat management.

None of these are new concepts, and they may even be “simple”, but they are far from easy in practice.

Execution at-scale has been the bottleneck, but agents offer the promise to change that.

That’s why I had a lot of fun digging into Zafran’s new Agentic Exposure Management capability in my latest deep dive on Resilient Cyber. It’s a great example of cybersecurity being an early adopter and innovator of an emerging technology, rather than the laggard we have historically been.

I walk through some of the promising use cases, such as early zero-day exposure assessment, automated exploitability validation and streamlined asset identification, all leveraging their AI-Native Exposure Graph. They’re also pioneering agentic remediation, streamlining activities from validating exploitable vulnerabilities and assets through to potential automated patching.

The launch comes on the heels of Zafran’s $60M Series C with Menlo Ventures, Sequoia Capital and Cyberstarts, with founders Sanaz Yashar, Ben Seri and Snir Havdala doubling down on their ambition to lead this space.

This is really exciting stuff when it comes to transforming vulnerability and risk management. It wasn’t even possible when I wrote the book Effective Vulnerability Management not long ago, and it’s awesome to see the intersection of AI and cyber in action now.

Shai-Hulud Strikes Again

As it turns out, attackers hate Thanksgiving, or at least letting us take a breather to celebrate it.

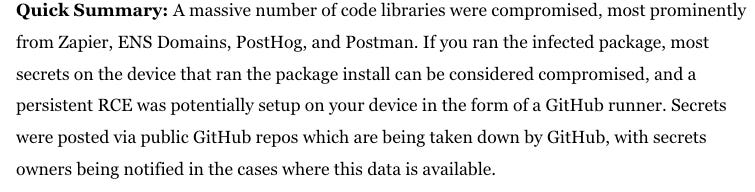

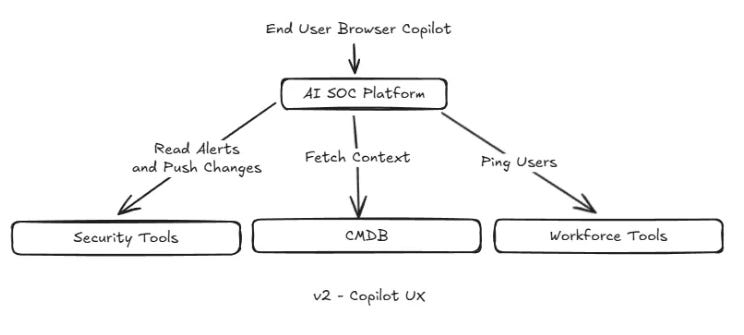

The AppSec community was in a whirlwind over the holiday week as another major software supply chain incident unfolded with the return of “Shai-Hulud”. Rather than rehash my perspective, I recommend checking out James Berthoty’s recap blog, which provides a quick summary along with a roundup of tools, resources and analysis from the vendor community.

Alex Matrosov of Binarly has also shared some excellent diagrams and resources on the incident, such as the two examples pictured above. The first is Shai-Hulud 2.0, and the second is a reflection (pun intended) on Ken Thompson’s “Reflections on Trusting Trust” paper.

2025 Lonestar AppSec Conference Playlist

If you’re like me, you’re a big fan of cybersecurity conference recordings and playlists, especially if you have a young family and often can’t make it to every conference or event.

That is why I was psyched to see the LASCON 2025 playlist live, featuring excellent talks from speakers such as Dustin Lehr, Andrew Hoog, Jim Manico and Shannon Lietz, among others, covering all things AI and AppSec.

One example is Jim’s talk below, titled “Securing AI-Generated Code in the Development Cycle (React Focus)”.

AWS’s Security Agent Hits the Scene

I’ve been digging into AWS’s recent announcement of their “Security Agent”. As they put it, it “conducts automated application security reviews tailored to your organizational requirements and delivers context-aware penetration testing on demand”.

What’s interesting is that, for automated pen testing, the agent reviews your organizational security requirements, design documents and source code to curate tailored assessments. It goes beyond custom-tailored pen testing too, with features such as design and code security reviews tied to your security specifications.

These capabilities span many different startup and product categories, with some vendors focusing on agentic pen testing and others using agents for source code and design document reviews.

This is definitely a big move by AWS and has implications for players in adjacent categories, given it natively integrates with the broader AWS platform, one of the most dominant IaaS environments in the world.

Engineering in the Age of AI

That AI, LLMs and agents are drastically transforming modern software development and the SDLC is common knowledge. But how are engineering leaders actually navigating the changes, and what do the key metrics, both positive and negative, show?

This report from Cortex, originally shared on LinkedIn by my friend Varun Badhwar, CEO of Endor Labs, dives into that. One key theme is that yes, everyone is moving faster and producing more code than ever. But that speed is coming with challenging tradeoffs in code quality and stability, and remember, security is a subset of quality!

Some of their key findings:

PRs per author increased 20% YoY

Alongside that came a 30% increase in change failure rates and a 9% increase in cycle time

Incidents per PR increased by 23.5%

Only 45% of organizations even have a formal AI usage policy

This is despite 90% of engineering orgs having adopted AI

Overall, there are some excellent insights here, including widespread adoption without standardization, tool sprawl and chaos, speed coming at the expense of quality, and longer recovery times when things break, which they are doing more often than they used to.

Emerging Categories: The Evolution of the AI SOC

AI SOC continues to be a hot topic across the industry, and one I have covered in prior articles such as:

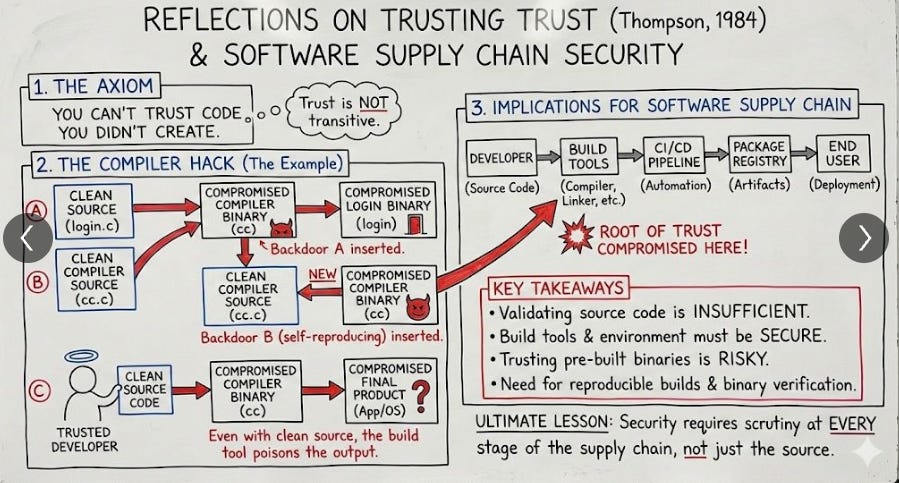

This recent piece from James Berthoty also dives into the topic, covering the traditional challenges of SOCs, gaps in the first wave of AI-driven SOC platforms, and what the newest wave of players is doing to innovate differently.

James provides the below image to show how the “second generation of AI SOC tools function more as contextual copilots than SOAR + AI”.

This is a good read to understand key capabilities and challenges AI SOC is looking to address and how.

2025 CVE Growth Report

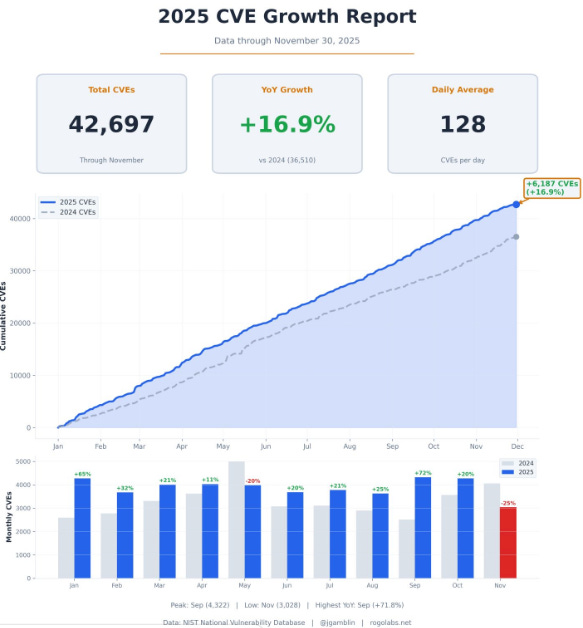

You’re likely sick of me sharing CVE insights from researcher Jerry Gamblin by now, but I make no apologies. He routinely shares some of the best vulnerability and CVE insights in the industry.

He recently shared a 2025 CVE Growth Report, covering data through November 30, 2025, showing +16.9% YoY CVE growth, a daily average of 128, and over 42,000 CVEs so far this year.
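The daily average and the year-to-date total in that report are easy to cross-check against each other. A minimal Python sketch, assuming the average is computed over every calendar day from January 1 through November 30, 2025 (an assumption; the report’s exact method isn’t described here):

```python
from datetime import date

# Assumption: the daily average covers every calendar day from Jan 1 through Nov 30, 2025.
start, end = date(2025, 1, 1), date(2025, 11, 30)
days = (end - start).days + 1  # +1 so both endpoints are counted (inclusive range)

daily_average = 128  # CVEs per day, as reported
implied_total = daily_average * days

print(f"{days} days x {daily_average} CVEs/day = {implied_total:,} CVEs")
```

Under that assumption it prints 334 days x 128 CVEs/day = 42,752 CVEs, which is consistent with the “over 42,000” figure.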

It will be really interesting to see figures through 2026 and beyond as more AI-driven development occurs, often without guardrails, oversight or security rigor.
