Resilient Cyber Newsletter #64

AI Security M&A, LLM Hallucinations, Moat Erosion, State of SaaS Security, AI Automated CVE Exploits, npm Supply Chain Attack & A Day in the Life of a Hacker

Welcome!

Welcome to Issue #64 of the Resilient Cyber Newsletter.

This week, we’ve got a lot of activity to cover, from more AI security M&A and innovative firms emerging from stealth with strong funding to insights into AI hallucinations, supply chain attacks, and much more.

As always, I hope you enjoy it. Please be sure to subscribe and share with a friend!

Interested in sponsoring an issue of Resilient Cyber?

This includes reaching over 40,000 subscribers, ranging from developers, engineers, and architects to CISOs/security leaders and business executives.

Reach out below!

See What Really Matters: Maze’s AI Agents Triage Vulnerabilities for You

Security teams spend countless hours chasing vulnerabilities, most of which will never be exploited. A recent Maze case study on CVE-2025-27363 shows how our AI Agents investigate vulnerabilities like an expert human would, confirming whether an issue is exploitable in your environment. If it’s irrelevant, it stays low priority. If it’s actionable risk, it gets flagged fast.

That means fewer false positives, more efficient remediation, and a smarter security posture, without the usual guesswork.

Cyber Leadership & Market Dynamics

Another AI Acquisition Hits the Headlines

We recently saw an acquisition by EDR leader CrowdStrike. SentinelOne, a peer/competitor, has announced the acquisition of Observo AI. Their announcement focused on “rethinking security data from the ground up.”

SentinelOne discussed the need for real-time data pipelines across multiple disparate environments, including endpoints, on-prem, cloud, various CSPs, and more, as well as the need to leverage AI and Agents to modernize security operations (SecOps).

Observo AI focuses on visibility, functioning as an “AI Data Pipeline for Security and DevOps” that collects data from multiple sources and supports use cases such as a security data lake, among others. This move by SentinelOne, following so closely on similar moves from CrowdStrike, highlights how critical the market leaders consider data and visibility to be, and how they are leveraging AI to position themselves as leaders in SecOps moving forward.

Unpacking the 2025 AI Security Acquisitions

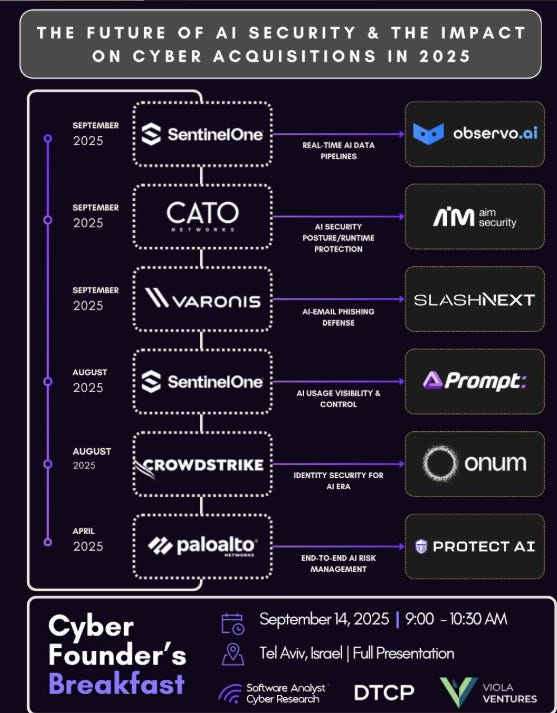

Speaking of AI acquisitions, there have already been quite a few in 2025. This piece from James Berthoty summarizes them and discusses their strategic implications, both for the acquiring firms and for the industry as a whole, when it comes to AI security trends.

Francis Odum also shared a helpful image summarizing some of the major AI security M&A activity. The image is slated to be presented at the upcoming “Cyber Founder’s Breakfast” in Tel Aviv on September 14th.

National Cyber Director: U.S. Strategy Needs to Shift Cyber Risk from Americans to its Adversaries

“Collectively, we’ve made great progress in identifying, responding to and remediating threats, but we still lack strategic coherence and direction,” he said at the Billington Cybersecurity Summit. “A lot has been done, but it has not been sufficient. We’ve admired the problem for too long, and now it’s time to do something about it.”

This was a quote from the new U.S. National Cyber Director, Sean Cairncross, on Tuesday at the Billington Cybersecurity Summit. In 2023, the prior administration emphasized shifting the burden from individuals in the U.S. to private sector software suppliers and vendors in its National Cyber Strategy (NCS).

Sean is now calling for a modern strategy to advance U.S. interests and thwart adversaries in cyberspace. This includes emphasizing “creating an enduring advantage” over China and other key U.S. adversaries. Sean discussed how U.S. adversaries have acted with impunity, and this needs to change for U.S. security and stability.

This carries strong undertones of “hack back,” along with economic and other measures to impose costs on those attacking the U.S. in cyberspace.

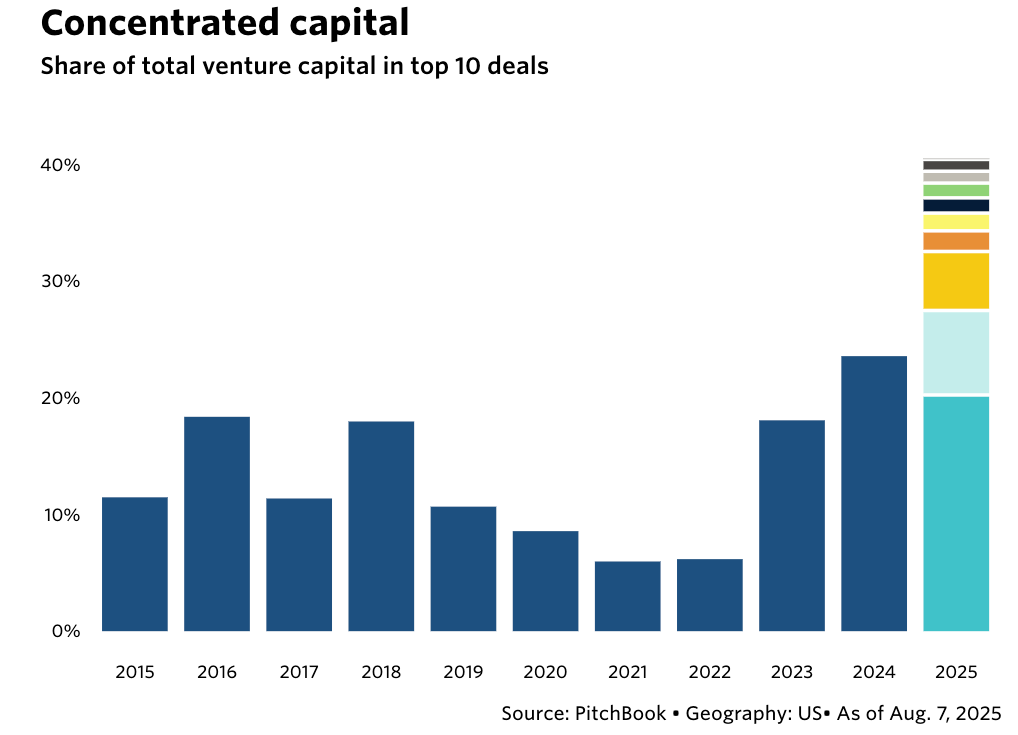

Power Law at Play

Venture capital discussions often center on the “power law,” where a small subset of investments produces an outsized portion of the returns. As it turns out, the same dynamic is playing out in how VC dollars themselves are allocated.

This piece from PitchBook highlights that 41% of all VC dollars deployed so far in 2025 have gone to just ten startups. That is a 75% increase over the share captured by the top 10 companies in 2024 and the highest concentration in a decade.

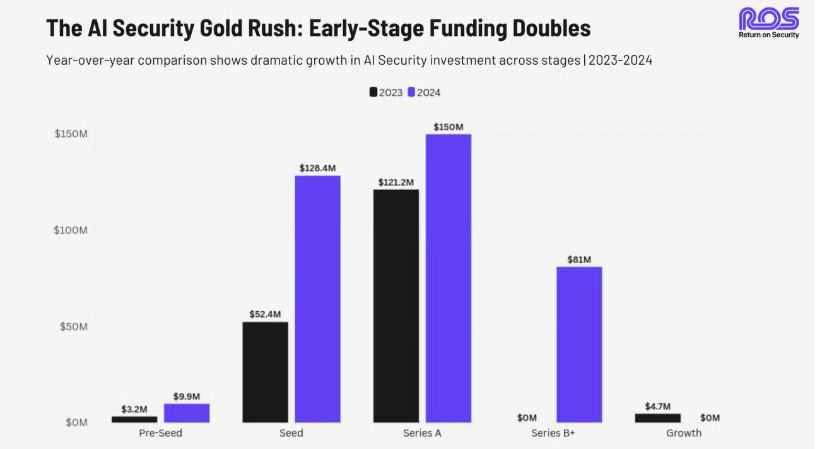

It is worth pointing out that 8 of the 10 companies are AI companies, and AI has, of course, completely dominated the entire venture, startup, and technology ecosystem for the last several years, including in cybersecurity. My friend Mike Privette at Return on Security pointed this out in his “State of the Cybersecurity Market in 2024” deep dive.

My friend Sid Trivedi, who brought this article to my attention, pointed out at the same time that:

Down rounds hit a decade high, accounting for 16% of all deals

Nearly half of unicorns haven’t raised since 2022

Of course, this could mean the unicorns are self-sustaining and don’t need capital, but it is much more likely they have hit scaling headwinds and are struggling to continue their earlier momentum.

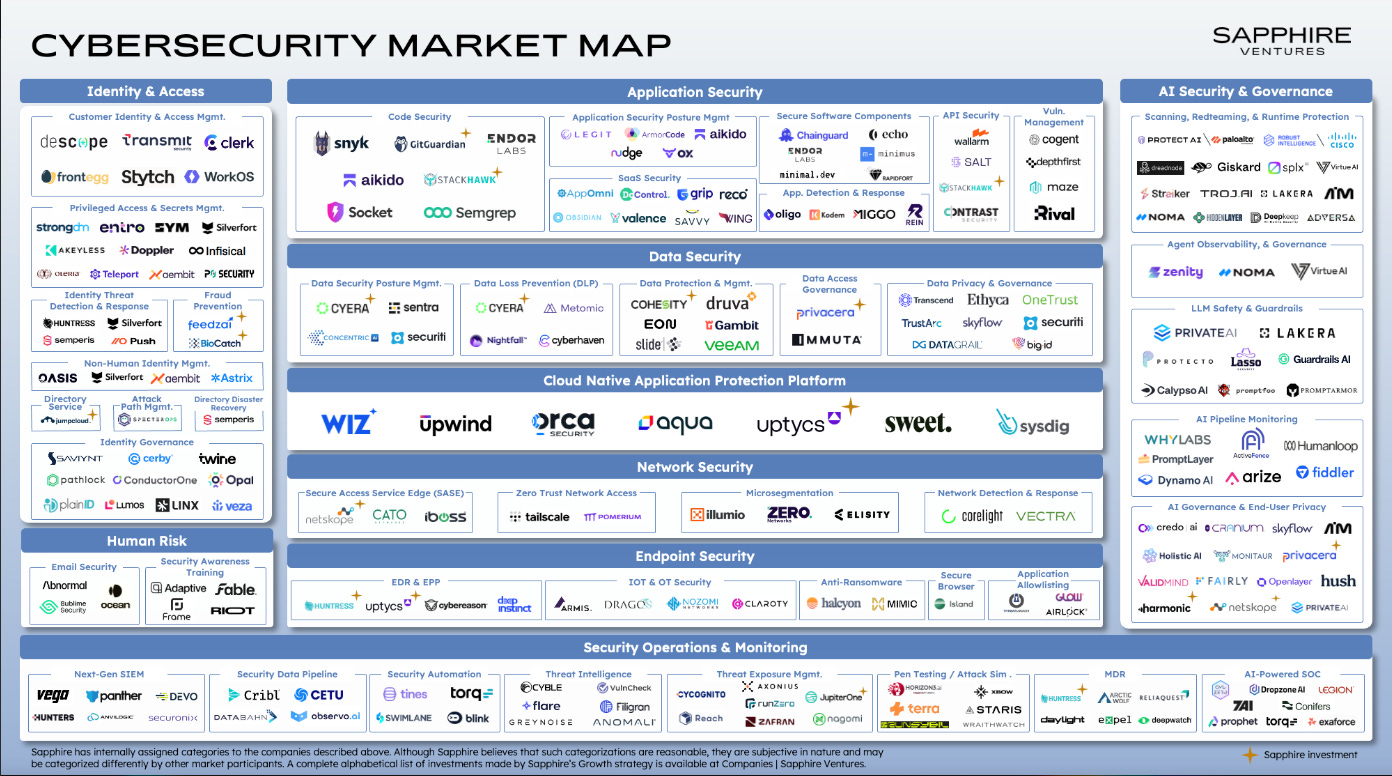

State of Cybersecurity: Growth, Risk and Resilience

This piece from Kevin Burke of Sapphire Ventures argues that cyber remains one of the hottest categories in all of tech, driven by factors such as:

Security is a top priority for CIOs

The cost of security failures continues to rise

The attack surface continues to expand

Geopolitical tensions are spilling into cyberspace

They provide a Cyber Market Map of the ecosystem’s various areas and discuss how these factors are driving increased investment in cyber from both IT departments and investors. They cite 2024 as the fifth straight year in which over $10B in capital was deployed into security startups.

As the map shows, cybersecurity is a broad and diverse ecosystem with many niche categories. No single vendor dominates them all, even if some vendors dominate a subset of the market categories.

Sapphire cites several key themes they are tracking in terms of startups and opportunity, including:

AI Agents for the SOC

Data Security

AI Model Security

Email Security Startup AegisAI Emerges from Stealth

This week, email security startup AegisAI emerged from stealth, backed by investments from industry leaders including my friend Sid Trivedi at Foundation Capital.

Email security continues to be a space ripe for disruption, and I recently had a chance to chat with Cy Khormaee, one of the AegisAI founders, about what he described as the “three waves of email security.” His firm focuses on the latest AI-native wave in the space, including agentic detection, decreased false positives, and moving away from traditional email security gateway headaches.

The founding team brings experience from organizations such as Google, where they worked on reCAPTCHA, anti-fraud, and more. Be on the lookout for an upcoming Resilient Cyber episode in which Cy and I dive deeper into the state of email security.

Koi Raises $48M to Reinvent Endpoint Security for the Modern Software Stack

Speaking of announcements, Amit Assaraf and the team at Koi announced a $48M raise from investors including Battery Ventures, Team8, Picture Capital, and others.

In their announcement, Koi argues that endpoint security is long overdue for an upgrade, given how much the space has evolved over the past decade. They point to the prevalence of non-binary software, such as code, OS packages, AI models, and extensions, along with the introduction of the Model Context Protocol (MCP), as factors complicating the space and creating an unguarded attack surface.

I’ve had a chance to chat with Amit Assaraf of their founding team, and I’m excited to see how they tackle this space and disrupt incumbents with a modern, innovative approach to a longstanding industry challenge.

AI

Resilient Cyber w/ Rob T. Lee - Navigating AI’s Impact on Cyber & the Workforce

In this episode of Resilient Cyber, I sat down with the SANS Institute's Chief of Research (COR) & Chief AI Officer (CAIO), Rob T. Lee, to discuss AI's impact on cybersecurity and the workforce.

We discussed SANS Critical AI Security Guidelines, the opportunities and obstacles AI presents for cybersecurity, and how practitioners should navigate AI's impact on the workforce.

Listen on Spotify, YouTube, or Apple Podcasts.

Rob and I discussed:

SANS Critical AI Security Guidelines, including the categories of activities they cover for securing AI and how practitioners can use the resource

The six categories of controls in the guideline: Access Control, Data Protection, Deployment Strategies, Inference Security, Monitoring and GRC

Why not using AI is actually the biggest risk of AI, and how security can transition from being a laggard to an early adopter and innovator when it comes to AI

The double-edged sword of AI: it offers opportunities to innovate in problematic areas of cybersecurity, but it also needs to be secured itself

AI’s current and potential impact on the cybersecurity workforce and how practitioners should be preparing, including what resources and training SANS offers when it comes to AI security

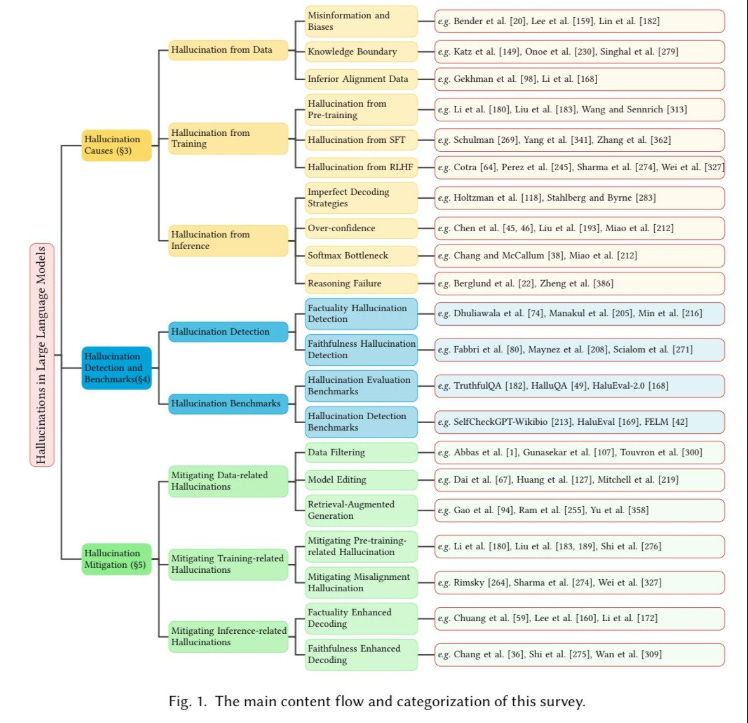

Hallucination Is a Feature, Not a Bug

One of the biggest critiques of LLMs is that they “hallucinate,” or, in less technical terms, make stuff up. As it turns out, this is a feature of LLMs, not a bug. A recent paper from OpenAI and Georgia Tech, “Why Language Models Hallucinate,” makes this case, using various examples to demonstrate how models hallucinate.

It cites causes such as pre-training and post-training bias, as well as socio-technical issues tied to how we evaluate models. It calls for realigning evaluations so that benchmarks give models credit when they admit uncertainty, rather than rewarding confident answers that turn out to be wrong.

On one hand, hallucinations can make LLMs creative; on the other, they introduce risks that could compromise the legitimacy and value of LLMs in real-world enterprise and other use cases.
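The evaluation argument comes down to simple expected-value arithmetic. The sketch below uses an illustrative scoring rule of the kind the paper advocates, not its exact benchmark: a correct answer earns 1 point, “I don’t know” earns 0, and a wrong answer costs t/(1-t) points for a chosen confidence target t. The function names are hypothetical. Under plain binary grading (no penalty), guessing never scores worse than abstaining, so a model tuned for the benchmark learns to guess; with the penalty, answering only pays off when the model’s chance of being right exceeds t.

```python
def expected_score(p_correct: float, wrong_penalty: float) -> float:
    """Expected points for answering, given the probability the answer is correct.

    Illustrative scoring rule: correct = +1, wrong = -wrong_penalty.
    Abstaining ("I don't know") always scores 0, so it is the baseline.
    """
    return p_correct - (1.0 - p_correct) * wrong_penalty


def penalty_for_target(t: float) -> float:
    """Wrong-answer penalty that makes answering worthwhile only when p_correct > t."""
    if not 0.0 <= t < 1.0:
        raise ValueError("confidence target t must be in [0, 1)")
    return t / (1.0 - t)


def should_answer(p_correct: float, wrong_penalty: float) -> bool:
    """Answer only if the expected score beats abstaining (which scores 0)."""
    return expected_score(p_correct, wrong_penalty) > 0.0


if __name__ == "__main__":
    binary = 0.0                       # accuracy-only grading: wrong answers cost nothing
    strict = penalty_for_target(0.75)  # wrong answers cost 3 points
    for p in (0.1, 0.6, 0.9):
        print(f"p={p}: binary -> {should_answer(p, binary)}, t=0.75 -> {should_answer(p, strict)}")
```

Under binary grading the model should answer at every confidence level shown, even 10%; with t = 0.75 it answers only at p = 0.9. Algebraically, p - (1 - p) * t/(1 - t) > 0 simplifies to p > t, which is why the penalty acts as a confidence threshold.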

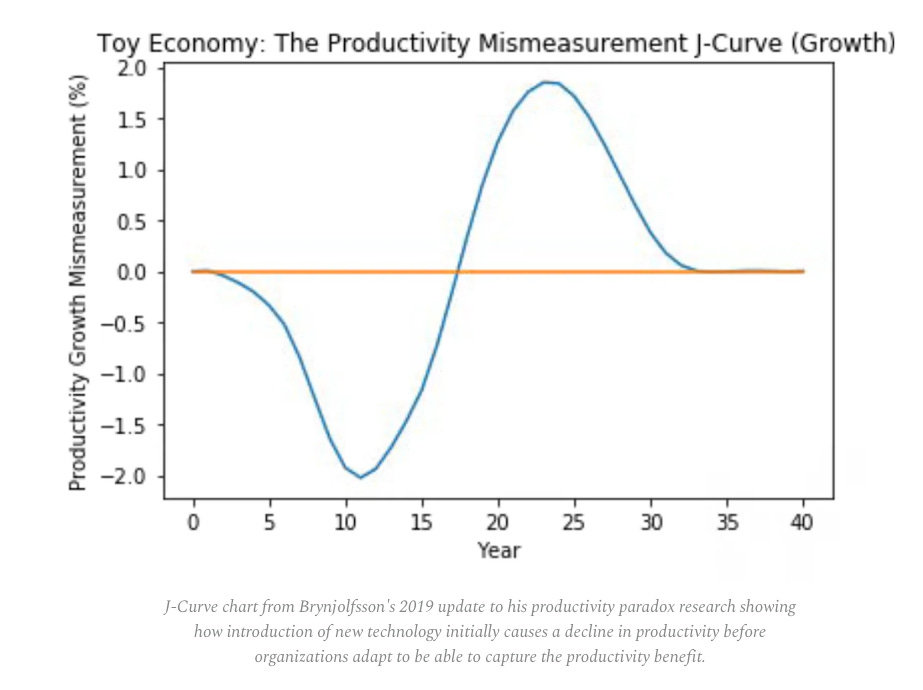

The AI Productivity Paradox: High Adoption, Low Transformation

Everywhere we look and read, we hear about the rampant adoption of GenAI, LLMs, and soon-to-be Agentic AI. But, how much of that leads to organizational transformation and improved business outcomes?

As it turns out, not too much, quite yet.

This piece from Sequoia points to two recent studies, MIT’s “The GenAI Divide” and Brynjolfsson’s “Canaries in the Coal Mine,” as evidence that organizations are rapidly adopting AI, mainly at the individual contributor/employee level, but organizational productivity gains and concrete outcomes remain elusive.

The reports cite common issues plaguing the space, including learning gaps, where AI tools fail to learn and improve over time within organizations; struggles to cross the pilot-to-production chasm; and the “shadow AI” economy, where AI usage is widespread across the organization but runs through personal AI subscriptions rather than enterprise adoption and tooling.

The article argues that earlier research and past technological waves help explain the challenge: new tools don’t immediately improve outcomes and productivity; the gains come from a slower maturation process in which organizations adopt new ways of working.

The research also found that AI’s impact falls primarily on early-career workers, again driving home the point that deep domain expertise is still incredibly valuable, a point you’ll see again in the next piece I share.

To bridge the divide, the research and the Sequoia piece emphasize focusing on learning, not just generating information; building for workflows, not just users, by truly understanding organizational workflows and processes; and embracing the shadow AI economy to gain insights from early adopters and users that drive future improvements in AI products.

Sequoia argues AI founders who internalize these concepts and take action will be able to:

“Convert today’s demos into tomorrow’s defensible moats”

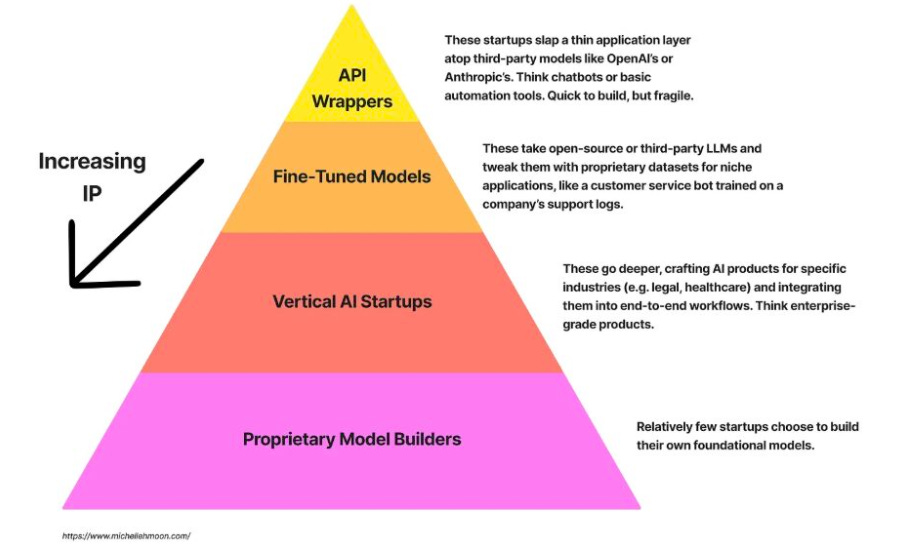

The Erosion of Defensibility

When it comes to AI's impact, there's a lot of talk about moats, defensibility, and capabilities. This piece from Michelle Moon is one of the best pieces I've found on the topic. She discusses the anatomy of modern AI startups, including API Wrappers, Fine-Tuned Models, Vertical AI Startups, and Proprietary Model Builders.

Michelle argues that the first two, in particular, have moats that are eroding quickly as LLMs improve in capability and drop in cost. Some point to proprietary data as their moat, but most overestimate the value and uniqueness of their data. Michelle closes the piece by arguing that the real moat is domain expertise: truly understanding the problem or domain and serving it the right way.

This points to deep domain expertise as a real differentiator. I think it also ties into other ongoing discussions, such as those around the “forward-deployed engineer” or embedding services into delivery to complement product deployments.

AppSec

Resilient Cyber w/ Cory Michal (AppOmni) - Unpacking the SaaS Security Supply Chain Landscape

In this episode of Resilient Cyber, I sit down with SaaS Security leader AppOmni's VP of Information Security, Cory Michal, to discuss the State of SaaS and Software Supply Chain Security.

This comes on the heels of the Salesloft/Salesforce SaaS supply chain attacks and AppOmni's recent State of SaaS Security 2025 Report.

Prefer to Listen? Spotify & Apple Podcasts

Cory and I discussed:

The recent Salesloft Drift/Salesforce incident that impacted roughly 700 organizations and involved compromised OAuth tokens

Challenges involving OAuth in SaaS environments, such as over-permissive access, limited monitoring and unsecured storage of secrets

The broader rising trend of SaaS supply chain attacks

The false sense of security organizations get from SaaS vendors’ compliance, and the unaccounted-for risks associated with integrations, credentials, configurations, data, and more

AppOmni’s State of SaaS Security Report and key takeaways

The rise of Non-Human Identities (NHIs) and agentic AI, and their implications for SaaS access control and incidents

The lack of widespread SSPM adoption and the oversights and gaps that leaves for organizations when it comes to SaaS security
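The OAuth challenges above, over-permissive access and limited monitoring in particular, lend themselves to a simple periodic audit of connected-app grants. This is a minimal sketch that assumes you can export each integration’s granted scopes and last-used time from your SaaS admin console or SSPM tool. The `OAuthGrant` record, the 90-day staleness window, and the example high-risk scope names (loosely modeled on Salesforce-style `full`, `api`, and `refresh_token` scopes) are all hypothetical choices to adapt to your platform.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import FrozenSet, List, Optional, Tuple

# Illustrative broad or long-lived scopes, loosely modeled on Salesforce-style
# OAuth scopes. Adjust this set for each SaaS platform you audit.
HIGH_RISK_SCOPES: FrozenSet[str] = frozenset({"full", "api", "refresh_token"})


@dataclass
class OAuthGrant:
    """Hypothetical record for one connected app's grant (e.g., from an admin/SSPM export)."""
    app_name: str
    scopes: FrozenSet[str]
    last_used: Optional[datetime]  # None means the grant has never been used


def audit_grants(grants: List[OAuthGrant], now: datetime,
                 stale_after: timedelta = timedelta(days=90),
                 risky: FrozenSet[str] = HIGH_RISK_SCOPES) -> List[Tuple[str, str]]:
    """Flag grants holding high-risk scopes and grants that are unused or stale."""
    findings: List[Tuple[str, str]] = []
    for g in grants:
        flagged = sorted(g.scopes & risky)
        if flagged:
            findings.append((g.app_name, "high-risk scopes: " + ", ".join(flagged)))
        if g.last_used is None or now - g.last_used > stale_after:
            findings.append((g.app_name, "unused or stale grant"))
    return findings


if __name__ == "__main__":
    now = datetime(2025, 9, 1, tzinfo=timezone.utc)
    grants = [  # hypothetical inventory
        OAuthGrant("chat-widget", frozenset({"api", "refresh_token"}), now - timedelta(days=2)),
        OAuthGrant("old-reporting-tool", frozenset({"id"}), now - timedelta(days=200)),
    ]
    for app, issue in audit_grants(grants, now):
        print(f"{app}: {issue}")
```

Flagging stale grants alongside broad ones reflects a common least-privilege idea: an integration nobody uses still holds a token an attacker can steal, so revoking it shrinks the attack surface at no cost.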

From CVE Entries to Verifiable Exploits: An Automated Multi-Agent Framework for Reproducing CVEs

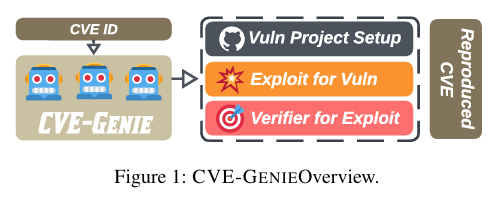

I recently shared a project from researchers dubbed “Auto Exploit” that could create exploits for CVEs within minutes for just a handful of dollars. Now, a new research publication introduces “CVE-GENIE,” a project that gathers resources on CVEs, automatically reconstructs vulnerable environments, and produces verifiable exploits in minutes for as little as $2.77 per CVE.

On one hand, this is an excellent resource for researchers and defenders; on the other, it is easy to see how malicious actors could use it to accelerate exploitation. The researchers successfully reproduced 51% (428 of 841) of the 2024-2025 CVEs they evaluated, each with a verifiable exploit, for a few dollars per CVE.

The researchers' multi-agent framework includes four stages in an end-to-end automated pipeline: Processor, Builder, Exploiter, and CTF Verifier.
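To make the staged design concrete, here is a minimal sketch of a four-stage pipeline in which each stage reads and updates a shared context and the run stops at the first failing stage. This is not the CVE-GENIE code: the stage bodies are hypothetical placeholders with no exploitation logic, the fail-fast chaining is an assumption (the real framework may retry or loop between agents), and the CVE IDs are made up. The `reproduction_rate` helper shows where the headline figure comes from: 428 / 841 ≈ 50.9%, which rounds to the reported 51%.

```python
from typing import Callable, Dict, List, Tuple

# A stage reads/updates the shared context and returns True on success.
Stage = Callable[[Dict[str, object]], bool]


def processor(ctx: Dict[str, object]) -> bool:
    # Placeholder: a real agent would collect the advisory, patch diff, and affected versions.
    ctx["notes"] = f"collected references for {ctx['cve_id']}"
    return True


def builder(ctx: Dict[str, object]) -> bool:
    # Placeholder: a real agent would rebuild the vulnerable environment (e.g., in a container).
    # The hypothetical "buildable" flag simulates success or failure.
    ctx["env_ready"] = bool(ctx.get("buildable", True))
    return bool(ctx["env_ready"])


def exploiter(ctx: Dict[str, object]) -> bool:
    # Placeholder: a real agent would draft a proof of concept against the rebuilt environment.
    ctx["poc"] = "candidate-poc"
    return True


def ctf_verifier(ctx: Dict[str, object]) -> bool:
    # Placeholder: a real agent would independently confirm the PoC triggers the bug, CTF-style.
    # The hypothetical "exploitable" flag simulates the verdict.
    ctx["verified"] = bool(ctx.get("exploitable", True))
    return bool(ctx["verified"])


PIPELINE: List[Tuple[str, Stage]] = [
    ("processor", processor),
    ("builder", builder),
    ("exploiter", exploiter),
    ("ctf_verifier", ctf_verifier),
]


def run_pipeline(ctx: Dict[str, object],
                 stages: List[Tuple[str, Stage]] = PIPELINE) -> Tuple[bool, str]:
    """Run stages in order; stop at the first failure and report which stage failed."""
    for name, stage in stages:
        if not stage(ctx):
            return False, name
    return True, "done"


def reproduction_rate(successes: int, attempts: int) -> float:
    """Fraction of attempted CVEs that made it through every stage."""
    return successes / attempts


if __name__ == "__main__":
    print(run_pipeline({"cve_id": "CVE-0000-0001"}))
    print(run_pipeline({"cve_id": "CVE-0000-0002", "buildable": False}))
    print(f"{reproduction_rate(428, 841):.1%}")
```

Reporting the stage where a run stopped is a small design choice that matters for a system like this: it separates CVEs that could not be rebuilt from those that were rebuilt but never verified.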

npm Supply Chain Attack Rattles Industry

This week, news broke that the npm account of open source maintainer Qix was compromised via phishing. Attackers used it to inject malicious code into 25 popular npm packages, including chalk and debug. The incident made big news because the impacted packages see hundreds of millions of weekly downloads.

The attackers primarily targeted crypto applications; as the Endor Labs blog describes, the malicious code hijacked wallets, targeted specific crypto assets, and concealed its execution. Recommended follow-ups include moving off the impacted versions to known-good releases and auditing projects for affected versions anywhere in their application dependency trees.
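For the auditing step, one lightweight approach is to walk the `packages` map in an npm `package-lock.json` (lockfile version 2 or 3), where each key is an install path such as `node_modules/chalk` or `node_modules/foo/node_modules/debug`, and compare each resolved version against a deny-list. This sketch does not handle the older v1 layout, which nests entries under `dependencies`. The two deny-list entries are examples reflecting versions reported in public write-ups at the time; populate the list from the official advisories rather than treating it as complete.

```python
import json
import sys
from pathlib import Path
from typing import Dict, List, Optional, Set, Tuple

# Example deny-list (package name -> compromised versions). Verify and extend it
# from the published advisories before relying on it; it is NOT complete.
COMPROMISED: Dict[str, Set[str]] = {
    "chalk": {"5.6.1"},
    "debug": {"4.4.2"},
}


def find_compromised(lockfile: dict,
                     deny: Dict[str, Set[str]] = COMPROMISED) -> List[Tuple[str, str, Optional[str]]]:
    """Return (install_path, name, version) for every deny-listed package in a v2/v3 lockfile."""
    hits = []
    for install_path, meta in lockfile.get("packages", {}).items():
        if "node_modules/" not in install_path:
            continue  # "" is the root project; other keys may be local workspace folders
        # The package name is whatever follows the last "node_modules/" (handles @scope/name).
        name = install_path.rsplit("node_modules/", 1)[1]
        version = meta.get("version")
        if version in deny.get(name, set()):
            hits.append((install_path, name, version))
    return hits


def scan_lockfile(path: str) -> List[Tuple[str, str, Optional[str]]]:
    """Load a package-lock.json from disk and scan it."""
    return find_compromised(json.loads(Path(path).read_text(encoding="utf-8")))


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python audit_lock.py path/to/package-lock.json
    for install_path, name, version in scan_lockfile(sys.argv[1]):
        print(f"COMPROMISED {name}@{version} at {install_path}")
```

Scanning install paths rather than only top-level dependencies matters here because packages like chalk and debug usually arrive transitively, nested several levels deep in the tree.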

I found this video from Low Level on YouTube helpful for explaining what happened, along with some related thoughts:

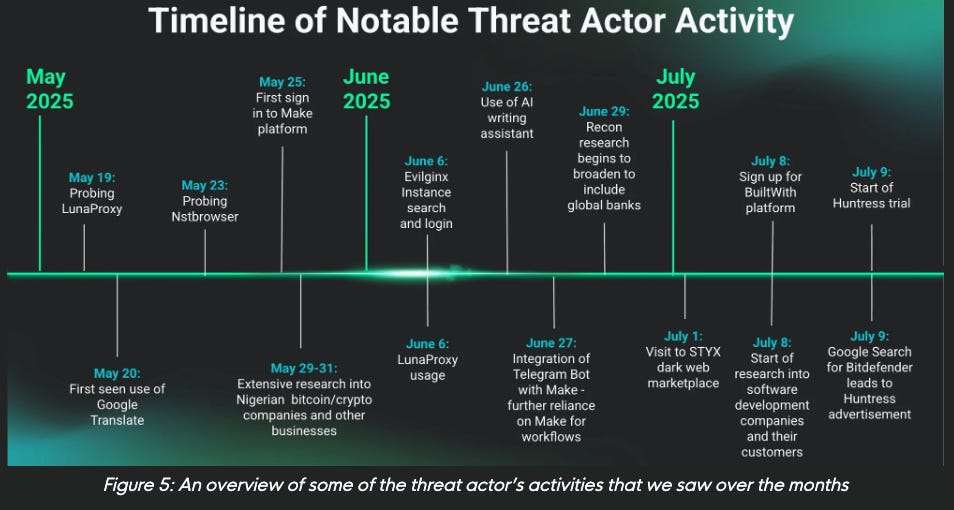

How an Attacker’s Blunder Gave us a Rare Look Inside Their Day-to-Day Operations

In a rare and ironic situation, it appears an attacker mistakenly installed Huntress’s security agent on their own operating machine, giving Huntress detailed insight into their malicious activities and how they carry them out.

The blog highlights the attacker’s activities, including how they used AI for operational efficiency, researched man-in-the-middle attack frameworks, and more. These are helpful insights for anyone focused on SecOps who wants to understand how attackers operate and how to better defend against them.