Resilient Cyber Newsletter #62

Netskope Poised for IPO, Cyber Earnings Calls, MIT State of AI, AI-driven Attacks, Monitoring MCP Traffic & Critical Takeaways from Black Hat

Welcome!

Welcome to issue #62 of the Resilient Cyber Newsletter.

It’s been quite the week, with a lot of market activity, from IPO intentions to earnings calls and more. There is also some evidence of malicious activity involving LLMs and coding assistants, so buckle up, as we have a lot to cover this week!

Interested in sponsoring an issue of Resilient Cyber?

This includes reaching over 45,000 subscribers, ranging from Developers, Engineers, and Architects to CISOs/Security Leaders and Business Executives.

Reach out below!

Permiso’s new ITDR playbook: turn identity blind spots into detection wins.

We’ve broken down the 5 categories of authentication anomalies that catch the vast majority of identity attacks and paired them with ready-to-use detection rules and thresholds. No guesswork, just practical implementation guidance.

Why it matters:

Detection rates for compromised identities have dropped from 90% to 60% in the past year.

Attackers don’t need to break in: 90% of successful breaches start with logging in.

Once inside, they can begin lateral movement in as little as 30 minutes.

This playbook shows how to close that gap with risk-based response procedures and investigation workflows that actually work in practice.

Start detecting what others miss, and grab your copy today.

Cyber Leadership & Market Dynamics

Secure Browser Successes

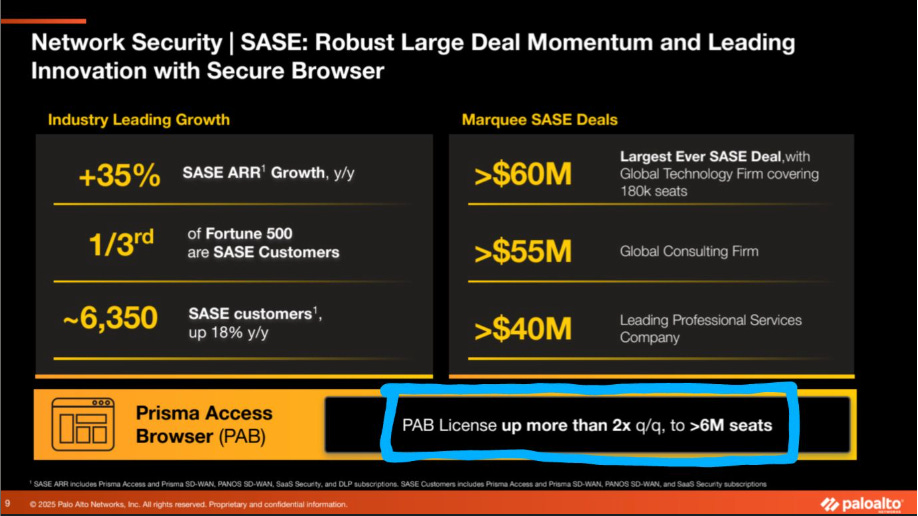

As most know, Palo Alto Networks (PANW) has set out to build a platform and consolidate various security tools and capabilities into a single comprehensive vendor suite. Their track record of success so far is hard to argue with, and this latest demonstration shows how an acquisition they made, this time focused on browser security, is paying off.

It was recently shared that their Prisma Access Browser (PAB) licenses were up more than 2x quarter-over-quarter. They have expanded to 6 million seated users globally, with 3 million licenses sold in a quarter.

PANW aside, browser security is and will continue to be a key focus area for cybersecurity, as the browser has become the de facto operating environment for so many enterprise activities and business workflows.

Netskope Poised to go Public

Cybersecurity leader Netskope recently filed an S-1, indicating its intentions to IPO/go public.

As usual, my friend Cole Grolmus at Strategy of Security was on top of it, highlighting that Netskope disclosed $707M in ARR, growing at 35%, with a customer base that includes more than 30% of the Fortune 100.

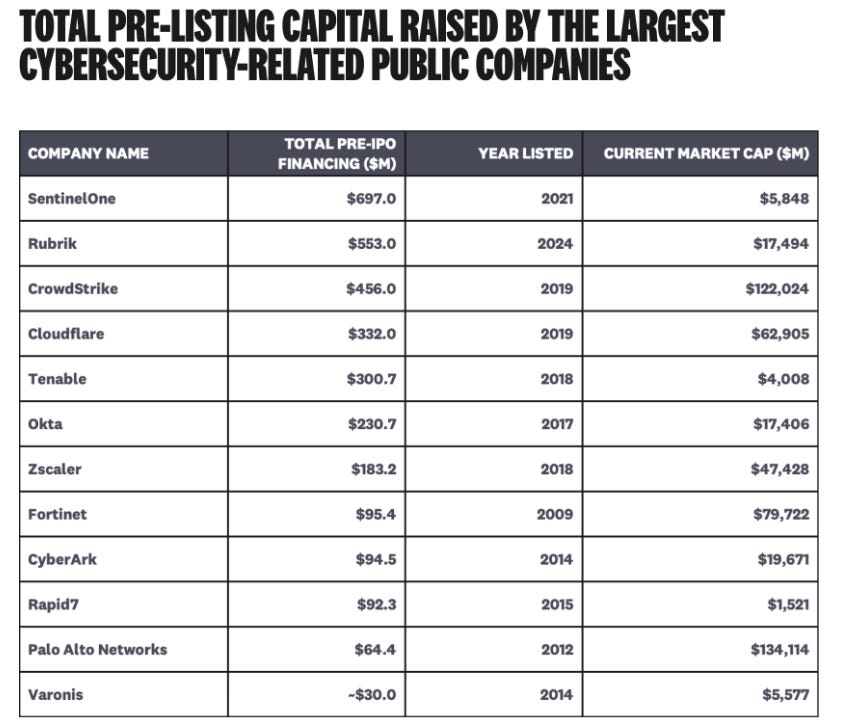

Going public in cyber is no small feat, and Cole recently authored an article titled “How Much Capital Does it Take for a Cybersecurity Company to Go Public?”. In that article, Cole shares some insights into the level of capital necessary for a cyber company to go public:

A decade ago, companies did it while raising less than $100M. Cole emphasizes that “it's expensive to build important cybersecurity companies.” What is interesting about Cole’s piece is that he also shows that 8 of the 10 and 26 of the 30 most-funded cyber companies of all time are still private.

Cole states that not only is it expensive, but it will only get more expensive to establish a public cyber company, as competition is incredibly intense, growth curves are steep, and all of this comes with massive expectations.

What I like is that Cole closes the article quoting Marc Andreessen:

Raising money isn’t the end; it is just the level setting for the big expectations that come along with building the business, and it isn’t for the faint of heart.

Netskope’s S-1 Filing Marks a Pivotal Moment

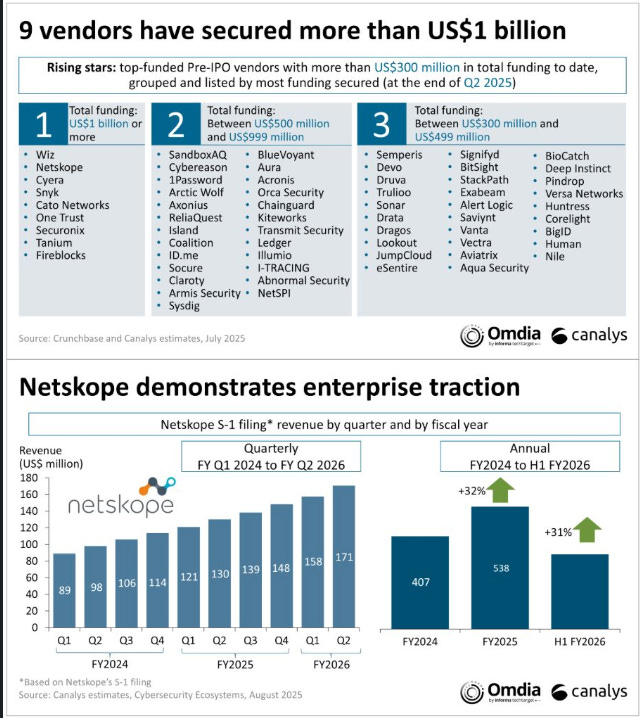

Building on Cole's analysis, Matt Ball from Canalys shared some excellent insights this week on Netskope’s S-1 filing. As Matt points out, Netskope is the second vendor to file in 2025 and the second among a cohort of nine pre-IPO cyber companies with over $1 billion in funding.

Matt highlights that Netskope reported $707 million in ARR in July 2025, a 33% increase from their previous reporting. Matt emphasized the role partners (i.e., the “channel”) have played in Netskope’s growth and success. What is interesting is not only that Matt discusses the importance of partners for Netskope, but also that he stated:

“For every dollar of product sold, partners typically generate up to $2 in consult and managed services, rising to $4-6 for some platforms.”

This emphasizes the importance of partnerships, not only for the product vendor but also for the integrators and service partners.

CrowdStrike Q2 Earnings Results

Another industry-leading firm that provided insights into its Q2 performance was CrowdStrike. Their CEO, George Kurtz, took to LinkedIn to share some key metrics, such as:

Record net new ARR of $221M, growing their ending ARR to $4.66B, which is a 20% increase YoY

Proof points showing customers leaning toward platform consolidation

Falcon Next-Gen SIEM crossing $430M ARR with 95% YoY growth (pretty insane stat!)

Falcon Cloud Security passing $700M ARR with 35% YoY growth

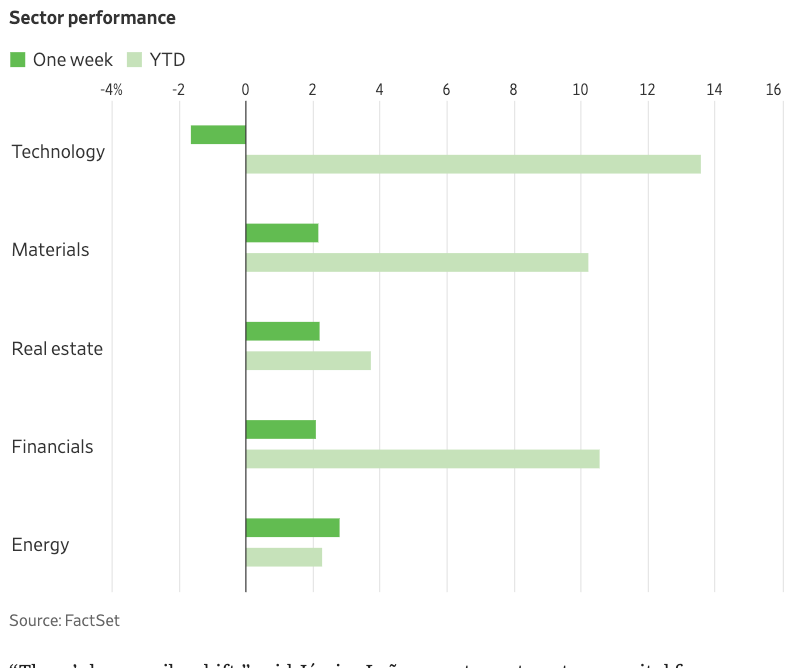

Tech Rally Shows Signs of Losing Steam

Amidst potential doubts about AI, some point out that the tech stock rally may be losing steam. This piece from the Wall Street Journal (WSJ) notes that the Mag 7 (i.e., Amazon, Alphabet, Apple, Meta, Microsoft, NVIDIA, and Tesla) have seen some recent slowdowns. The piece also stresses the importance of the upcoming NVIDIA earnings report.

Some have pointed out that the recent doubts and potential shift among investors are similar to earlier this year, when the release of China’s DeepSeek rattled U.S. markets. Many also want to see if the Federal Reserve will lower interest rates. This topic has not just been financial but political as of late.

The article also highlights Sam Altman’s commentary about being in an AI Bubble and the news that Meta froze its hiring of AI staff. While we’re far from any negative trend in AI and tech, there are signs that things may be losing some steam or coming down to earth a bit from their historic initial run.

Resilient Cyber w/ Gianna Whitver and Maria Velasquez: The State of Cybersecurity Marketing

In this episode of Resilient Cyber, I sit down with Gianna Whitver and Maria Velasquez to chat about the state of marketing in the cybersecurity industry, as well as their popular event, "CyberMarketingCon."

In this episode, we discussed:

The background of the CyberMarketingCon and what led Gianna and Maria to Co-Found the event and community

Where marketers typically fall short and what can be done to drive more effective marketing and selling to security practitioners and leaders

What practitioners can learn from their marketing peers when it comes to communication, empathy, storytelling, and building relationships

The importance of marketing and brand and broader GTM for security vendors to stand out from their competitors

What to keep an eye out for at the upcoming CyberMarketingCon in December in Austin, Texas

MIT AI Report Rocks the Industry

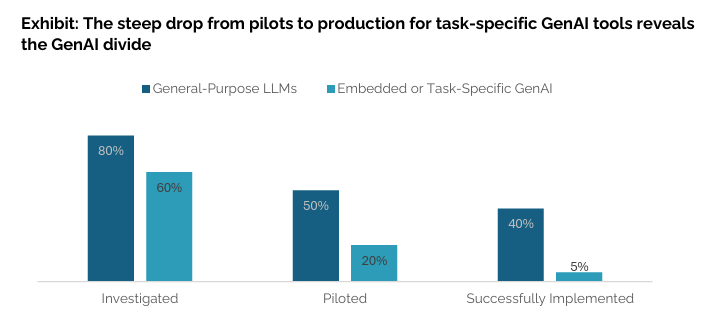

Among the key factors contributing to some of the potential doubt or deflated expectations around AI is a recent MIT report titled “State of AI In Business: The GenAI Divide.”

The report found that despite $30-$40 billion in enterprise investment into GenAI, 95% of organizations are getting zero return. It stated that only 5% of integrated AI pilots provide any measurable P&L impact.

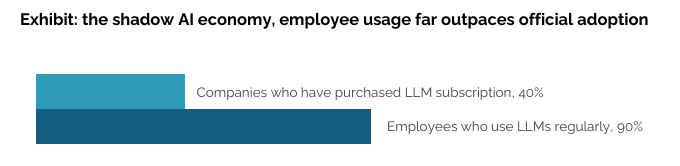

The report also highlighted how pervasive shadow AI is, with 90% of employees using LLMs regularly, despite only 40% of organizations having purchased an LLM subscription.

The report points out that while tools such as ChatGPT and Copilots are seeing widespread adoption (80%+), custom and vendor AI solutions are being quietly rejected. Only 20% reach the pilot stage, and only 5% reach production, giving the impression that AI and LLM usage is more oriented toward individuals than the enterprise.

The report cites common patterns contributing to what they define as the GenAI Divide. They are:

Limited disruption

Enterprise paradox

Investment bias

Implementation advantage

“Most organizations fall on the wrong side of the GenAI Divide. Adoption is high, but disruption is low.”

I found this quote telling. Much like the early days of the cloud, when everyone “lifted and shifted” things into the cloud, GenAI adoption is generally being pursued out of FOMO or a sense of not wanting to be left behind among peers and the industry, but with no real expertise or business use case rationale.

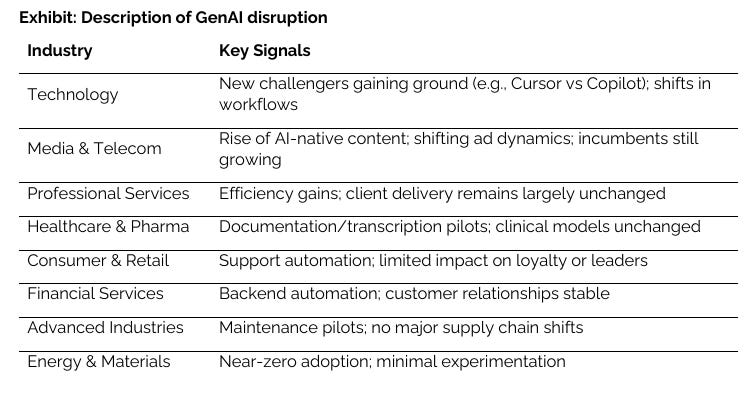

One thing I thought was helpful from the report was their attempt to describe GenAI disruption, a term that is often thrown around with few concrete examples to back it up:

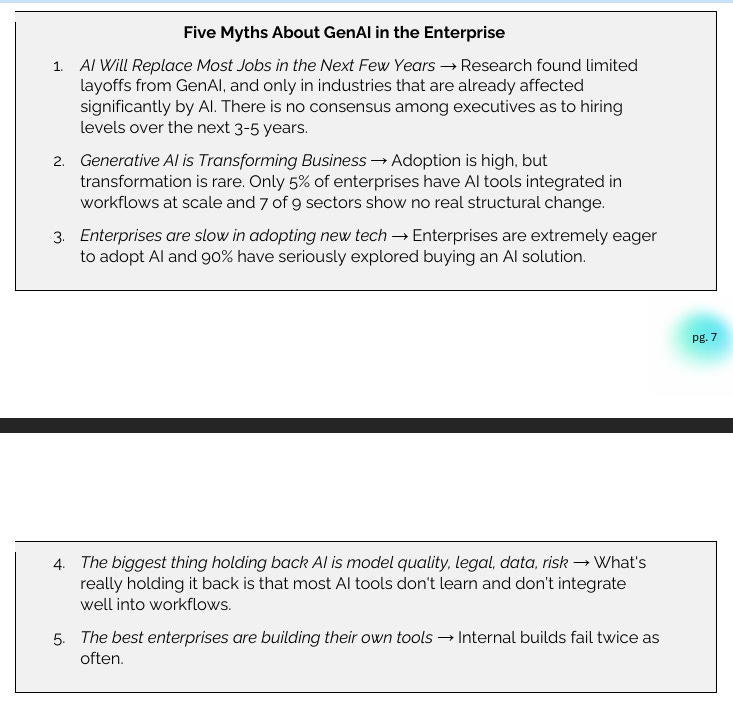

The report's callout of five myths about GenAI in the enterprise was particularly telling, as it takes on the familiar tropes about AI disrupting the workforce or the business, both of which seem to be much more mixed in reality.

One funny thing is that GenAI struggles with the same problems cyber often does. Surveyed executives struggle with how to justify GenAI spending since it “doesn’t directly move more revenue or decrease measurable costs.” Welcome to cybersecurity, folks, where leaders often struggle to justify Return on Security Investment (ROSI) to their leadership.

Betting On Yourself and Building a $40B+ Zero Trust Giant in Zscaler

I’m always looking to learn from the industry leaders who have achieved outsized outcomes and are shaping the cybersecurity ecosystem. A great recent example came from an episode of “Inside the Network” with Mahendra Ramsinghani, Sid Trivedi, and Ross Haleliuk, where they interviewed Zscaler’s CEO and Founder, Jay Chaudhry.

Jay walked through his humble beginnings, from tilling fields with animals to immigrating to the U.S., betting on himself and his wife to start a company, navigating multiple exits, and now leading one of the top-tier cybersecurity companies in Zscaler.

It’s a great peek inside one of the top builders in the cybersecurity industry!

AI

Betting Against the Models

While many security startups are now focused on “security for AI agents,” Shrivu Shankar argues that this is a bubble and a flawed approach because it amounts to betting against the rapid evolution of the models.

In the piece, he makes several predictions, including:

Foundation Model Providers (FMPs) Will Solve Their Own Security Flaws (e.g., prompt injections and jailbreaks)

Restricting an Agent’s Context Defeats Its Purpose

The real threat isn’t the agent, it’s the ecosystem

I agree and disagree with some of the predictions here, or at least their boldness. While I agree that the 6-12 month patch cycle makes third-party products difficult to keep up with, I do not see some classes of vulnerabilities, most notably prompt injections, as becoming irrelevant. They seem to be a fundamental aspect of how LLMs work; unless we see a drastic change in their entire design, we may be dealing with both direct and indirect prompt injection for some time.

I agree that restricting agents' context does limit their utility. I also agree with the author's stance that rampant adoption of coding agents shows organizations are prioritizing productivity over lockdown/rigor, but to be fair, that is always the case, as security is merely one consideration for the business, with competing priorities such as growth, revenue, and productivity often taking priority over security fears. As he writes, the best solutions will be those that enable safe use of maximum context, not those that attempt to restrict it.

He is spot on that looking at agents in isolation for some posture assessment is flawed. Agents are meant to give LLMs “arms and legs” and make them actionable. You need to assess what actions agents are allowed to take and/or are taking to truly understand their potential risks.

This is a very thought-provoking piece, especially when we look at this niche of AI security, focused on securing agents, and the implications for the bets being made with capital investments.

Monitoring MCP Traffic via MCPSpy

By now, most are familiar with the Model Context Protocol (MCP) from Anthropic. It enables countless promising use cases for agentic workflows and the ability to give LLMs "arms and legs" to perform semi- or fully autonomous activities, connect with external tools and data sources, and more.

That said, folks such as Invariant Labs, Christian Posta, Idan Habler, PhD, Vineeth Sai Narajala, and others have raised concerns about the ability to abuse MCP or use it maliciously or as part of attacks.

"MCPSpy," by Alex Ilgayev, is a really cool open-source tool that uses eBPF to monitor MCP traffic. Alex discusses why we should care about MCP, the challenges with MCP observability, and the need to monitor MCP traffic, all of which led him to create this open-source tool for the community.
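To make the observability idea concrete, here is a minimal Python sketch of what you might do with MCP traffic once it has been captured. It is not how MCPSpy works internally (MCPSpy captures traffic with eBPF); it only assumes that captured messages arrive as one JSON-RPC 2.0 object per line, which is how MCP frames messages over stdio. The function name `summarize_mcp_messages` and the sample messages are hypothetical.

```python
import json
from collections import Counter


def summarize_mcp_messages(raw_lines):
    """Count JSON-RPC methods seen and tally tool names from tools/call requests.

    Assumes each element of raw_lines is one captured message (a string).
    """
    methods = Counter()
    tools_called = Counter()
    for line in raw_lines:
        try:
            msg = json.loads(line)
        except json.JSONDecodeError:
            continue  # not JSON, so not an MCP message
        if not isinstance(msg, dict) or msg.get("jsonrpc") != "2.0":
            continue  # MCP messages are JSON-RPC 2.0 objects
        method = msg.get("method")
        if method is None:
            continue  # a response (result/error), not a request or notification
        methods[method] += 1
        if method == "tools/call":
            params = msg.get("params")
            name = params.get("name") if isinstance(params, dict) else None
            tools_called[name or "<unknown>"] += 1
    return methods, tools_called


# Hypothetical captured traffic, for illustration only.
SAMPLE = [
    '{"jsonrpc": "2.0", "id": 1, "method": "tools/list"}',
    '{"jsonrpc": "2.0", "id": 2, "method": "tools/call", '
    '"params": {"name": "read_file", "arguments": {"path": "notes.txt"}}}',
    '{"jsonrpc": "2.0", "id": 2, "result": {"content": []}}',
    "not json at all",
]

methods, tools_called = summarize_mcp_messages(SAMPLE)
```

A tally like `tools_called` is one simple way to see which actions an agent is actually taking, which can then be compared against what it is supposed to be allowed to do.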

Anthropic says a Hacker used AI to automate an “Unprecedented” Crime Spree

Major outlets such as NBC recently broke a story that Anthropic, which created Claude, reported that a hacker used the chatbot to identify, hack, and extort 17 different companies.

The attacker allegedly used Claude to identify companies vulnerable to attack and then create malicious software to steal sensitive data from them. They then used Claude to analyze the stolen files to determine which ones could be used to extort the target companies, analyze their financial documents to determine how much the extortion demands should be, and even help write the extortion emails.

While the companies named aren’t being shared, it is stated that the extortion amounts ranged from $75,000 to more than $500,000.

AppSec

Nx Build System Supply Chain Attack

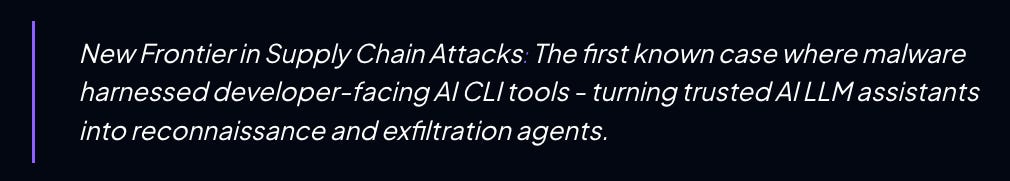

This week, StepSecurity and others, such as Wiz, broke news that the popular Nx build system package was compromised with data-stealing malware. While the malware carried out the usual malicious activities, such as stealing secrets and sensitive data, it was unique in that it weaponized AI CLI tools such as Claude, Gemini, and Q to help look for secrets to exfiltrate.

As StepSecurity noted in their blog, this is the first known case where malware looks to leverage AI CLI and LLM assistants on endpoints and systems for malicious activity. Aside from the novelty of the activity, it also involves a widely used open source package in Nx, which they note is downloaded 4 million times per week.

Once installed, the malware scours infected endpoints for environment variables, hostname, OS details, and platform information. It also looks for cryptocurrency wallets, developer credentials, and sensitive data.

It instructed the LLM assistants to look for sensitive data and dump it into a temporary text file. Then, it created a public GitHub repo and moved the data there. Because the repo was public, the stolen data was exposed not only to the attackers but also to anyone else who came across it. StepSecurity identified thousands of public GitHub repos where exfiltrated credentials are now exposed.

This is a really interesting combination of a software supply chain attack coupled with weaponizing AI against developers and organizations. It shows how LLM assistants can not only improve developer productivity but can also introduce novel attack techniques.

This also emphasizes the need to limit the permissions and access of LLM assistants in case they are abused like this in future attacks. That is a troublesome trade-off, given that tighter restrictions could reduce their utility for developers too.
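As one small illustration of limiting what an assistant can reach, the Python sketch below builds a reduced environment to hand to an AI CLI process, dropping variables whose names look like secrets. This is an assumption-laden example rather than a mitigation recommended by StepSecurity or Wiz: the name patterns and the `sanitized_env` helper are hypothetical, and it does nothing about secrets stored in files, which the Nx malware also went after.

```python
import os
import re

# Hypothetical name patterns for secret-bearing variables; tune for your environment.
SECRET_NAME_PATTERN = re.compile(
    r"(TOKEN|SECRET|PASSWORD|API_?KEY|CREDENTIAL)", re.IGNORECASE
)


def sanitized_env(env=None):
    """Return a copy of the environment without variables whose names look like secrets.

    Uses os.environ when no mapping is supplied.
    """
    source = os.environ if env is None else env
    return {
        name: value
        for name, value in source.items()
        if not SECRET_NAME_PATTERN.search(name)
    }


# Usage sketch ("some-ai-cli" is a placeholder, not a real command):
#   import subprocess
#   subprocess.run(["some-ai-cli", "--help"], env=sanitized_env())
```

Filtering by variable name is a blunt instrument, so treat it as one layer alongside file system and network restrictions rather than a complete control.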

I definitely recommend reading the full blog.

Beyond the Hype: Critical Takeaways from Black Hat and DEFCON

Since I didn’t attend Black Hat this year, I’ve been watching for good takeaways, event summaries, key trends, and observations. One came from my friend James Berthoty in a piece he published on The New Stack. (I’m glad to see James getting this increased exposure and outlet, too, as his insights are usually solid.)

The five key takeaways James summarized in his article are:

Continuous Threat Exposure Management (CTEM) Is the New Cloud Security Posture Management (CSPM)

Innovative Runtime Solutions Add Layer of Vital Visibility

The SOC is HOT

The Code Security Divide is Getting Clearer

Agentic AI Security is Whatever You Say It Is

James points to trends he, I, and others have written about extensively, including the need for vulnerability prioritization with context, the importance of runtime visibility, the gold rush around AI for the SOC, and the confusing hype cycle we’re in around Agentic AI.

Passing the Security Vibe Check: The Dangers of Vibe Coding

Many are watching with skepticism as we see the rise of “vibe coding” or just leaning into AI coding tools and assistants without necessarily conducting due diligence and rigorous validation from a security perspective.

The Databricks team recently published a solid blog post exploring the concept and some of its potential dangers. The blog highlights how AI can generate inherently vulnerable code and how vulnerabilities can be avoided using security-centric prompting.

An Enterprise Guide to Browser Security in 2025

Earlier, in the market dynamics section of this newsletter, I spoke about Palo Alto Networks’ impressive browser security growth. Browser security is a fast-growing category, as many realize how critical the browser is for attackers and defenders alike.

That’s why this piece from Francis Odum was timely, breaking down the state of browser security in 2025. Francis discussed:

The rise of agentic browsers

The need for security when it comes to the agentic era of browsing

How GenAI is a growing catalyst for enterprise browser security

How the browser remains a blind spot in many of today’s security stacks

This is a good primer for understanding the rise of agentic browsers and browser security more broadly.

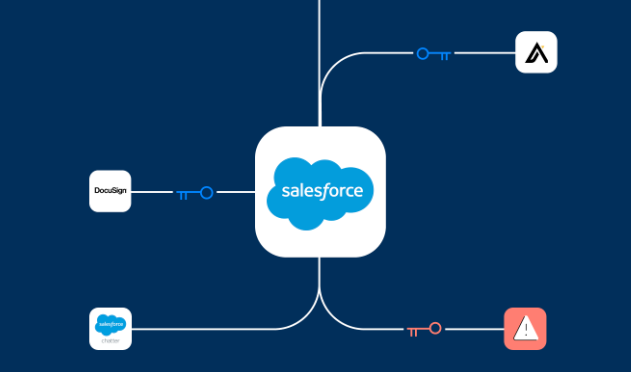

Lessons from The GTIG Advisory on the Salesforce OAuth Token Breach

Google’s Threat Intelligence Group (GTIG) recently issued an advisory on an attack campaign targeting Salesforce instances. Oleg Mogilevsky from Astrix Security published a great, concise piece breaking down the key takeaways from the advisory.

As the blog summarizes, the attack involved a threat actor dubbed “UNC6395,” who used compromised OAuth tokens tied to the Salesloft Drift third-party application to export large volumes of data from Salesforce instances. They looked for credentials and secrets to use in follow-on attacks, which makes subsequent incidents very likely, even though there may be no straightforward way to tie them back to this one.

It’s noted that the vulnerability wasn’t in the Salesforce platform itself, but in a third-party application associated with Salesforce. Salesloft and Salesforce revoked all Drift access tokens, and Salesforce removed the application from its “AppExchange.”

Gartner Got Shift Left Wrong

My friend and “Software Transparency” co-author, Tony Turner, took to LinkedIn recently to point out that Gartner got it wrong when they claimed “Shift Left is Dead.” As Tony rightly points out, the original intent of Shift Left was never to throw a bunch of tooling into a pipeline and dump contextless findings onto developers.

It aimed to integrate security throughout the SDLC, from the earliest activities through deployment and production environments. Unfortunately, it got conflated with a product rather than a process, which is true for many cyber trends. See Zero Trust, for example, which is often viewed as a product: “We have Zscaler, so we’re doing Zero Trust” (not to pick on Zscaler, but you get my point).

We often dilute valid concepts in cyber due to marketing or attempts to sell products, because doing the actual work is hard. Tony points this out by stating that the emphasis was put on tooling rather than activities such as identifying architectural flaws or design weaknesses before development.

“Gartner’s pronouncement that Shift Left is dead stems from their limited definition of what it meant in the first place.”

Tony concludes by arguing that we should reclaim Shift Left with its original intent: security belongs at every stage of the SDLC, security investments made early have a higher ROI, developers need context and not just tools, and functional operations teams deserve emphasis too.