Resilient Cyber Newsletter #56

Cyber Platform Dynamics, Services-as-a-Software Reflections, Impact of AI on Developer Productivity, FedRAMP Vulnerability Management Evolution, & Runtime Reachability Deep Dive

Welcome!

Welcome to issue #56 of the Resilient Cyber Newsletter.

It’s been a really exciting past week with a lot of great resources discussing topics such as the potential for Services-as-a-Software, AI’s intersection with venture capital, and Vulnerability Management, including updates from FedRAMP, deep dives on reachability and more.

So, grab a coffee, here we go!

Interested in sponsoring an issue of Resilient Cyber?

This includes reaching over 45,000 subscribers, ranging from Developers, Engineers, and Architects to CISOs/Security Leaders and Business Executives.

Reach out below!

The Cost of CVEs 2025: How much are CVEs costing your business?

Vulnerabilities are more than just security risks—they’re an expensive, ongoing drain on resources. Chainguard just released an in-depth report, The Cost of CVEs 2025, revealing how vulns are costing organizations of all sizes and industries tens of millions each year. From patch cycles and downtime to compliance overhead and incident response, the report breaks down the true, often overlooked, financial impact of vulnerability management.

Based on data from industry leading security and engineering teams, this report quantifies the organizational toll of chasing CVEs in today's threat landscape. Spoiler: patching everything is neither scalable nor sustainable. It’s time to rethink traditional approaches and consider upstream strategies that eliminate vulnerabilities before they ever make it to production.

This is an excellent report for security leaders to benchmark their current vuln management strategy—and explore how leading teams are shifting left to reduce risk and cut costs.

Cyber Leadership & Market Dynamics

Resilient Cyber w/ Ed Sim - The Intersection of Venture, AI, and Cyber

In this episode, I sat down with Boldstart Ventures Founder and GP Ed Sim. Ed is also the author of “What’s Hot in Enterprise IT/VC,” which I’ve been reading for some time and strongly recommend checking out. We dove into the intersection of Venture Capital, AI, and Cybersecurity.

Ed and I started the conversation discussing Boldstart Ventures, their role as an inception fund, and their recent announcement of a $250M fund for those building the “autonomous enterprise”.

Ed and I explored how AI is having an outsized impact on the investment and venture landscape and why that is, with it poised to be the largest platform shift of our lifetimes.

Ed laid out his 5 P framework for evaluating which teams/founders to bet on and back, and what he’s learned through decades of investment experience.

One of his recent success stories was investing in Protect AI, which recently was acquired by Palo Alto for roughly $700M, with the news breaking around RSA. Ed discussed the origin story of Protect AI and what the acquisition signals for the cybersecurity and AI security market.

One dynamic unfolding right now is the contest between AI Native firms and Incumbents, which is a race between speed of innovation and speed of distribution. We discussed how this race is playing out, how it could play out, and what will help determine who wins or loses.

We wrapped up the conversation discussing the rise of AI driven development with coding agents, copilots, and LLMs and the implications for the AppSec space.

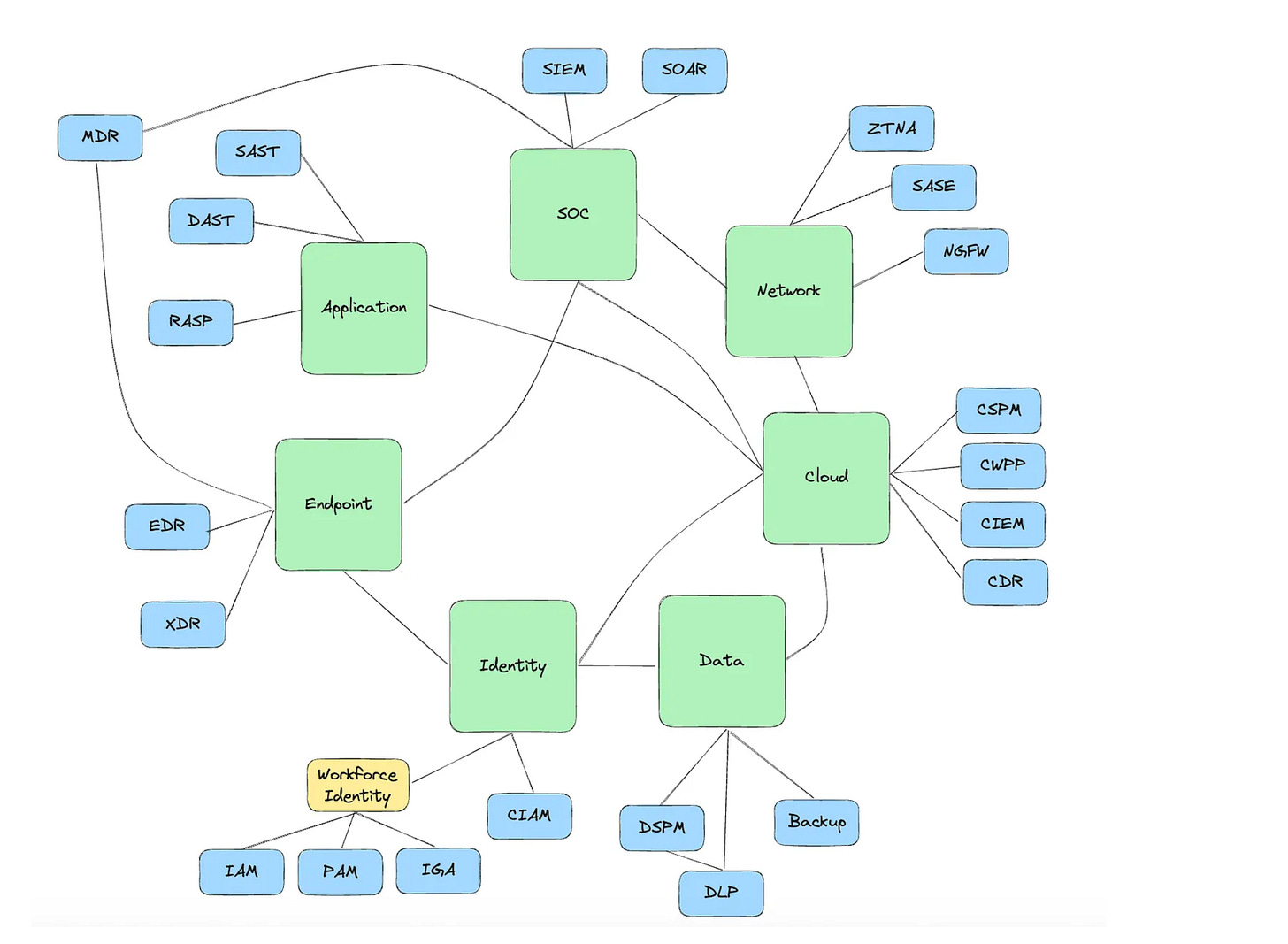

You Don’t Start a Platform, You Earn the Right to Become One

We’ve heard a ton in recent years about the age-old debate in cyber of Platform vs. Point Products, or, framed another way, Best of Breed vs. Consolidation. However, no company starts as a platform and instead is formed around a core competency and capability, and then expands from there.

Ross Haleliuk and Shashwat Sehgal make this point exceptionally well in their recent piece on Venture In Security. They discuss the “Four Stages of Building a Platform”, which they define as:

Stage 1: The one-trick pony that opens doors (Seed to Series A)

Stage 2: Expanding to adjacent use cases (Series B to Series E/Growth Stage)

Stage 3: Becoming a platform by IPO (late stage/pre-IPO)

Stage 4: The $100B+ mega platform (post-IPO/public scale)

However, few make it fully down this path, falling victim to various risks and challenges or not aspiring to make a platform play. Some of the challenges cited in the article include trying to expand too quickly without establishing a core capability and customer base, and never earning the right to do so due to not differentiating themselves in their initially targeted use case.

The image below shows that companies start with a core focus and expand into new feature sets and adjacencies.

The $4.6T Services-as-Software opportunity: Lessons from year one

I wrote a deep dive piece late in 2024 titled “Agentic AI’s Intersection with Cybersecurity: Looking at one of the hottest trends in 2024-2025 and its potential implications for cybersecurity”.

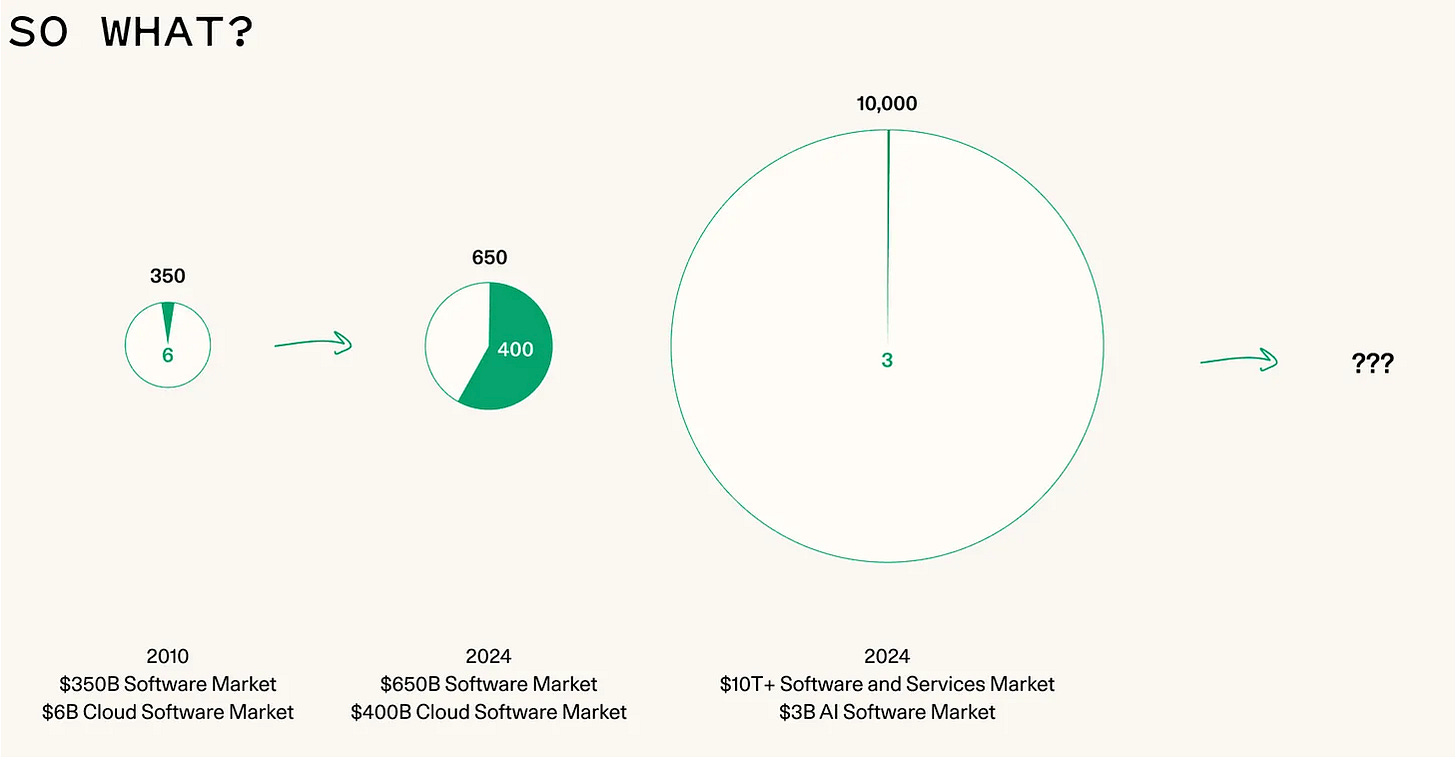

In that piece, I discussed the concept of “Services-as-a-Software”, a phrase that was getting a lot of excitement from venture capital firms such as Sequoia and others. The excitement comes from the fact that the Services market has a much larger Total Addressable Market (TAM) than software, as seen in the image below:

While the software market is measured in billions, the services market is estimated to be in the trillions, making it a massive target and compelling space for venture capital and other investors, startups, founders, and more.

This piece from Ashu Garg and Jaya Gupta of Foundation Capital caught my attention this week. They reflected on the Services-as-a-Software opportunity one year into focusing on the space. As they discuss, thousands of AI-native startup companies have set out to use agents to replace human workers, whether SREs, SDRs, Accountants, etc.

While they don’t cite Cyber workers, the issue is real here, too, with startups aiming at roles across SecOps, GRC, AppSec, and more. Usually, it is phrased as “augment” rather than “replace,” partially because the technology simply isn’t mature and proven, but also to quell (valid) concerns from the workforce that AI is coming for their jobs.

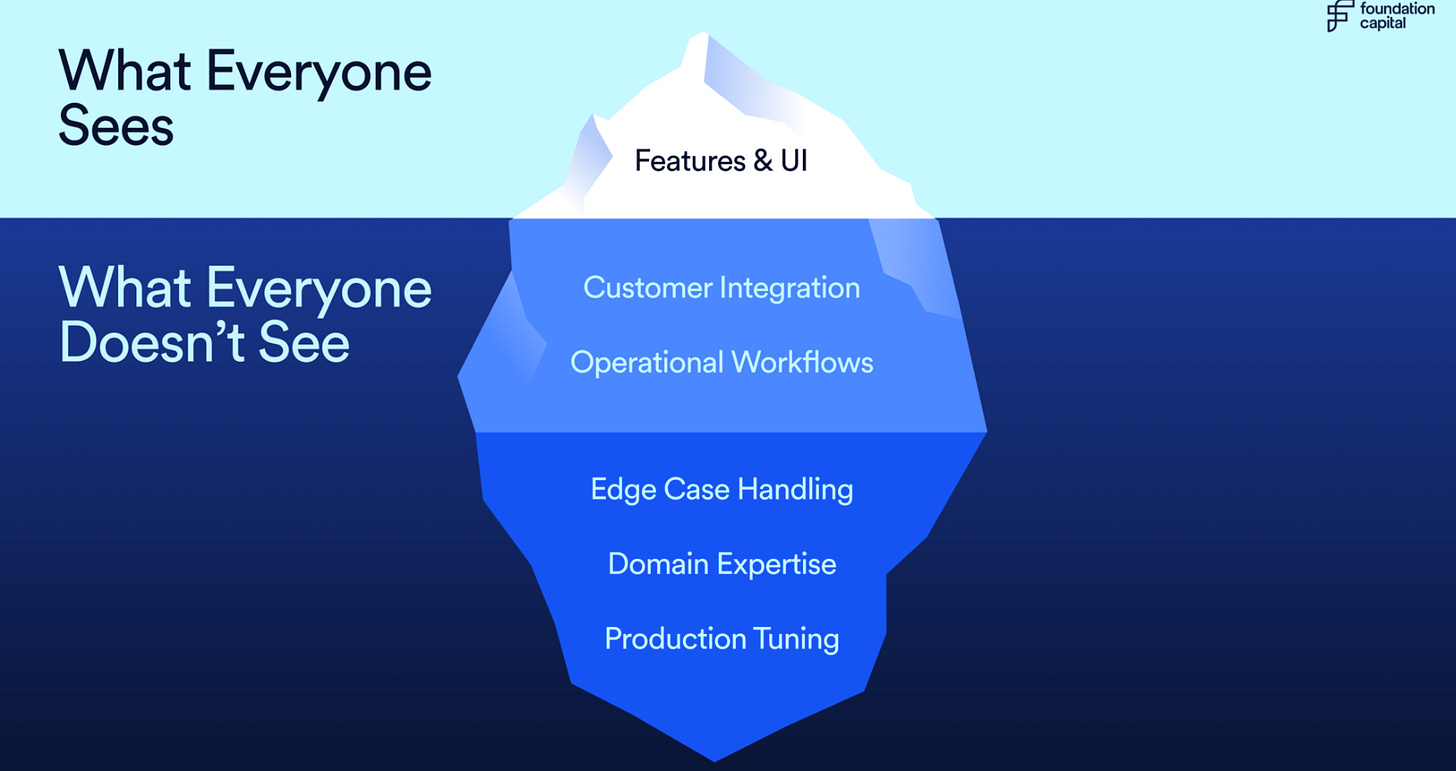

As they discuss, the rise of AI-driven development and LLMs is impacting SaaS companies' ability to differentiate themselves based on features alone. As they say, what you build is no longer your moat; it is how you integrate, embed, and operate that is the differentiation (I would argue that to some extent, it always has been, but nonetheless).

The authors argue that three consistent patterns separate Services-as-a-Software companies with real traction from those just riding the hype.

Product differentiation comes from implementation

The line between pre-sales and post-sales no longer exists

Companies are aligning their pricing with customer outcomes

These patterns include the commoditization of development through AI-driven copilots and LLMs, which forces companies to compete on business outcomes rather than features alone. They cite the rise of forward-deployed engineers (a trend many are copying from Palantir) to help navigate customer environments, manage edge cases, improve feedback loops, and bring insights back to the core product roadmap.

Another major change is companies revising pricing models to focus on customer outcomes, which reflects AI doing the work rather than just serving as a tool to facilitate workflows and business processes. The authors discuss a spectrum moving from Seat/Access-based pricing to Usage-based pricing, Workflow-based pricing, and finally Outcome-based pricing. Each model has unique considerations, such as tokens and queries used, documents processed, or reports written, and at the highest end, business outcomes delivered.

While not cited in the Foundation article, a good talk on this topic came from a Sequoia event a couple of months ago, featuring Paid CEO Manny Medina.

As the article discusses, outcome-based pricing is a high bar, though, due to unique organizational processes, requirements, and more, leaving most vendors orienting around usage and workflow-based pricing for the time being.

They close the article by arguing for a shift toward speed-to-value over “vibe revenue”. Companies need to focus on the loop of gathering feedback and insights from on-the-ground engagement with customers and bringing them back to the product and offering to further optimize it, with an eye on the prize, as described below:

Why fight so hard to perfect that loop? Because the prize isn’t the familiar $200B SaaS pool; it’s the $4.6T enterprises pour each year into salaries and outsourced services – the very labor intelligent agents are now poised to absorb.

AI Agent Security <> Investments

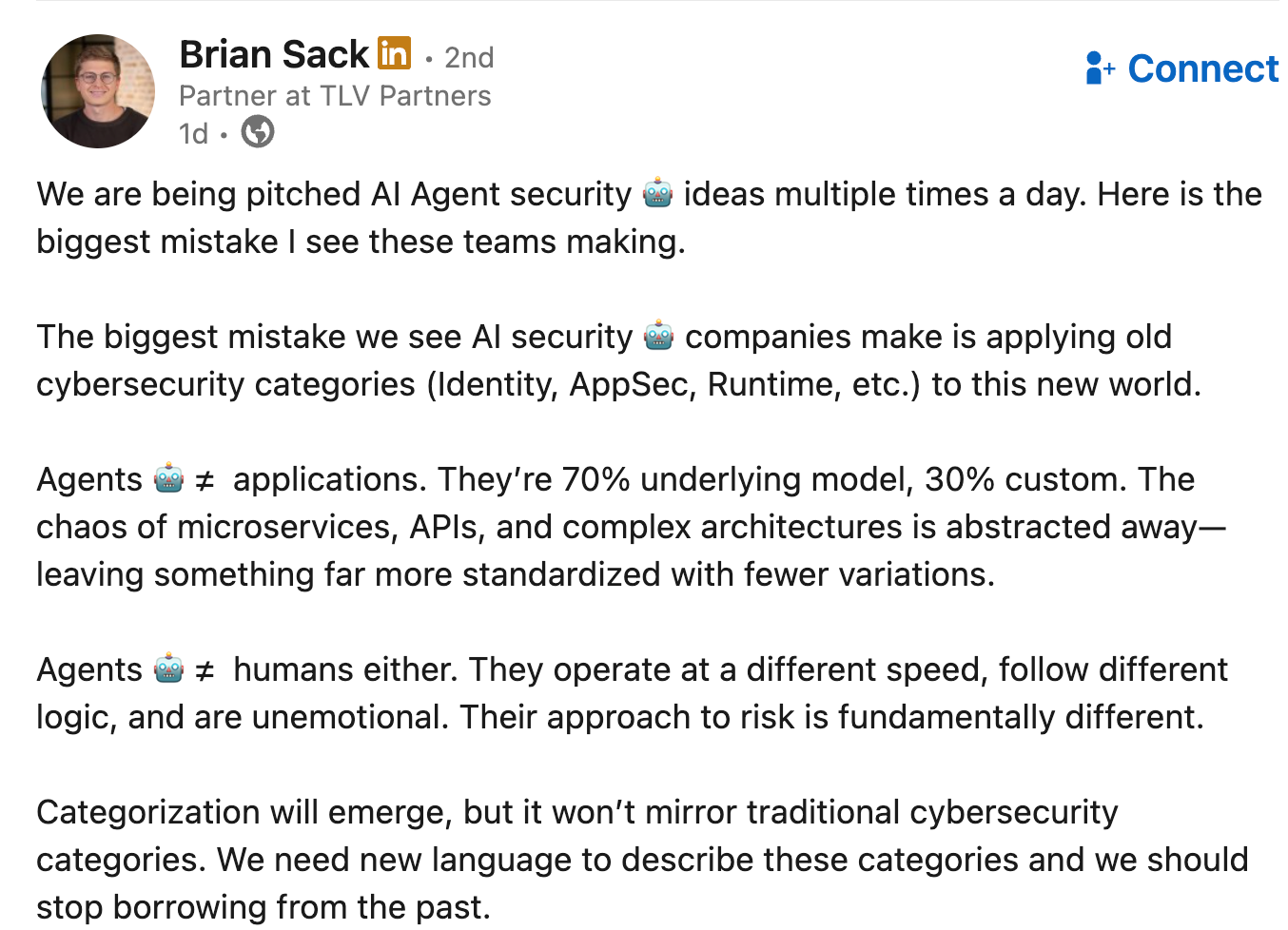

I stumbled across this LinkedIn post from Brian Sack and it caught my attention. No doubt AI and Agentic AI are dominating the landscape when it comes to venture, investments and startups, as discussed by others such as Mike Privette over at Return on Security.

However, this post from Brian highlights some key points that startups pitching investors need to keep in mind. The paradigm and operating model for agentic workflows and innovations is and will be fundamentally different than traditional IT and security workflows.

DHS, National Guard Confirm Salt Typhoon Attacks on Guard Networks

Officials with the National Guard Bureau and DHS both confirmed that the China-based Salt Typhoon hacking group targeted National Guard networks between March and December of 2024. This potentially impacted National Guard unit networks and other critical infrastructure they protect.

This continues a trend of Salt Typhoon impacting U.S. critical infrastructure and, more broadly, of China likely lying in wait to exploit that access in potential future conflicts between the U.S. and China. These scenarios are discussed at length in excellent books such as Dmitri Alperovitch’s “World on the Brink: How America Can Beat China in the Race for the Twenty-First Century”.

AI

Measuring the Impact of Early-2025 AI on Experienced Open-Source Developer Productivity

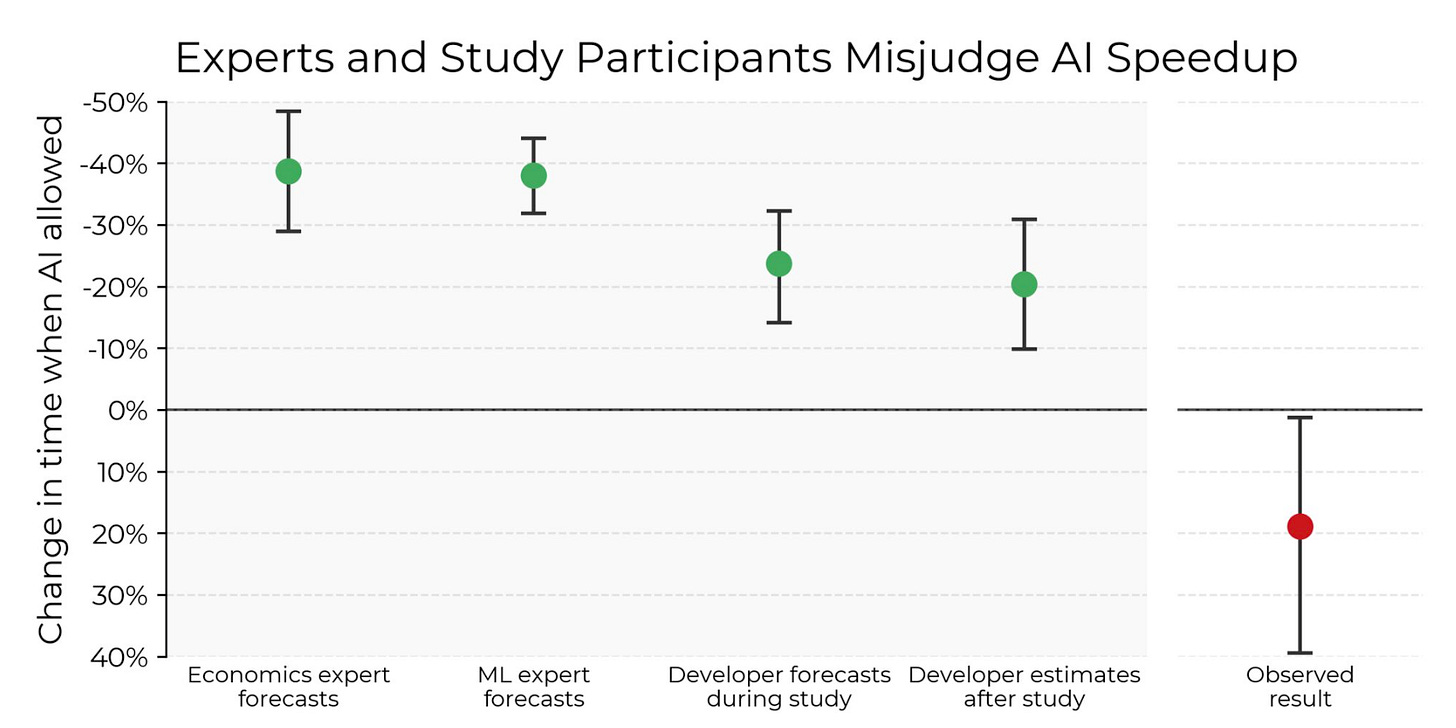

We know the industry has seen rampant adoption of AI coding tools, from LLMs to copilots and coding assistants, with companies seeing rapid revenue growth and an expanding base of developers as customers and users. Much of this is underpinned by claims of productivity gains. However, most of these claims are anecdotal and have not yet been formally measured or researched, which is why this new research paper made headlines this week.

The study involved 16 experienced open-source developers (a small sample size, no doubt) working through 246 tasks. Each developer had roughly 5 years of experience and moderate experience with AI tooling.

The findings are what caught folks' attention. The developers used Cursor Pro with Claude 3.5/3.7 Sonnet and estimated that AI would reduce completion time by 24%. Instead, the study found that using AI increased completion time by 19%, slowing developers down. The researchers point out that these findings contradict not only the developers' own assumptions but also predictions from experts in economics and ML, who projected AI would speed up development by 38%-39%.

Much of the slowdown in the study came not from the code being unusable but from the overhead of prompting, waiting, reviewing, and fixing the code before it could be used in production. Developers spent 9% of their time cleaning up the code AI produced, and the study found only 44% of the code produced by the AI tooling was usable.
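To make the gap between expectation and outcome concrete, here is a minimal arithmetic sketch. The 24% and 19% figures come from the study as summarized above; the 100-minute baseline task and the function name are my own illustrative assumptions, not anything from the paper.

```python
def completion_time(baseline_minutes: float, percent_change: int) -> float:
    """Scale a baseline task time by a signed percentage change."""
    return baseline_minutes * (100 + percent_change) / 100


# Hypothetical task that takes 100 minutes without AI assistance (assumption).
BASELINE_MINUTES = 100

forecast_minutes = completion_time(BASELINE_MINUTES, -24)  # developers' estimate
observed_minutes = completion_time(BASELINE_MINUTES, +19)  # study's finding
gap_minutes = observed_minutes - forecast_minutes

print(f"Forecast with AI: {forecast_minutes:.0f} min")
print(f"Observed with AI: {observed_minutes:.0f} min")
print(f"Gap between expectation and outcome: {gap_minutes:.0f} min")
```

On that hypothetical 100-minute task, the difference between what developers expected and what was measured is larger than either percentage suggests on its own.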

Red Teaming AI Red Teaming

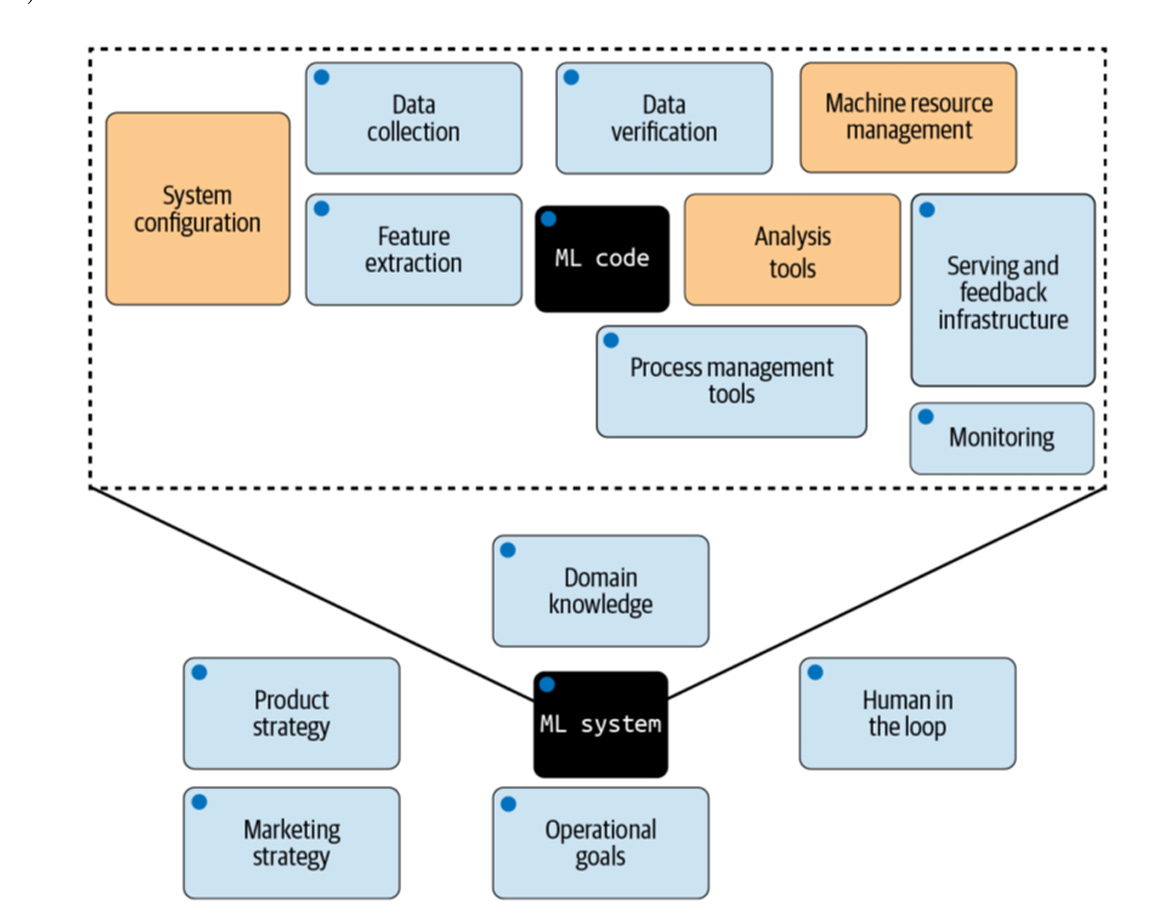

We've heard a lot about "AI Red Teaming" in the last 18-24 months. However, it has overwhelmingly focused on model-centric vulnerabilities. This is problematic, especially with the rise of Agents, enabling protocols (e.g., A2A, MCP, etc.), underlying Cloud infrastructure, organizational context, users, and more.

In short, it's a myopic way to red team AI, as laid out by Brian Pendleton, D.Sc., and team in this new paper titled "Red Teaming AI Red Teaming". They recommend a much more comprehensive approach, including multifunctional teams, a study of systemic vulnerabilities, and the interdependencies of technical and social factors.

Defense in depth is alive and well, or should be!

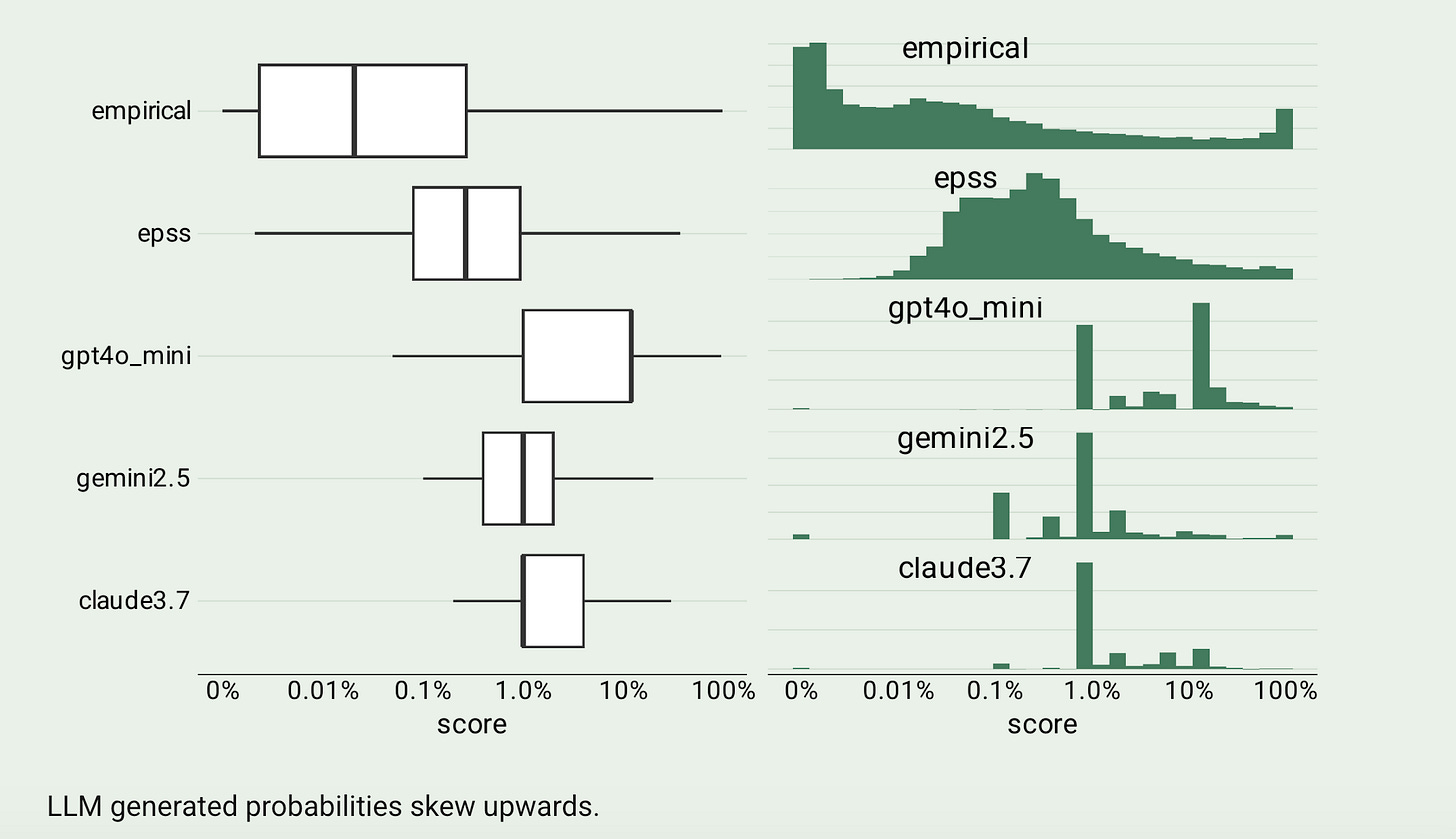

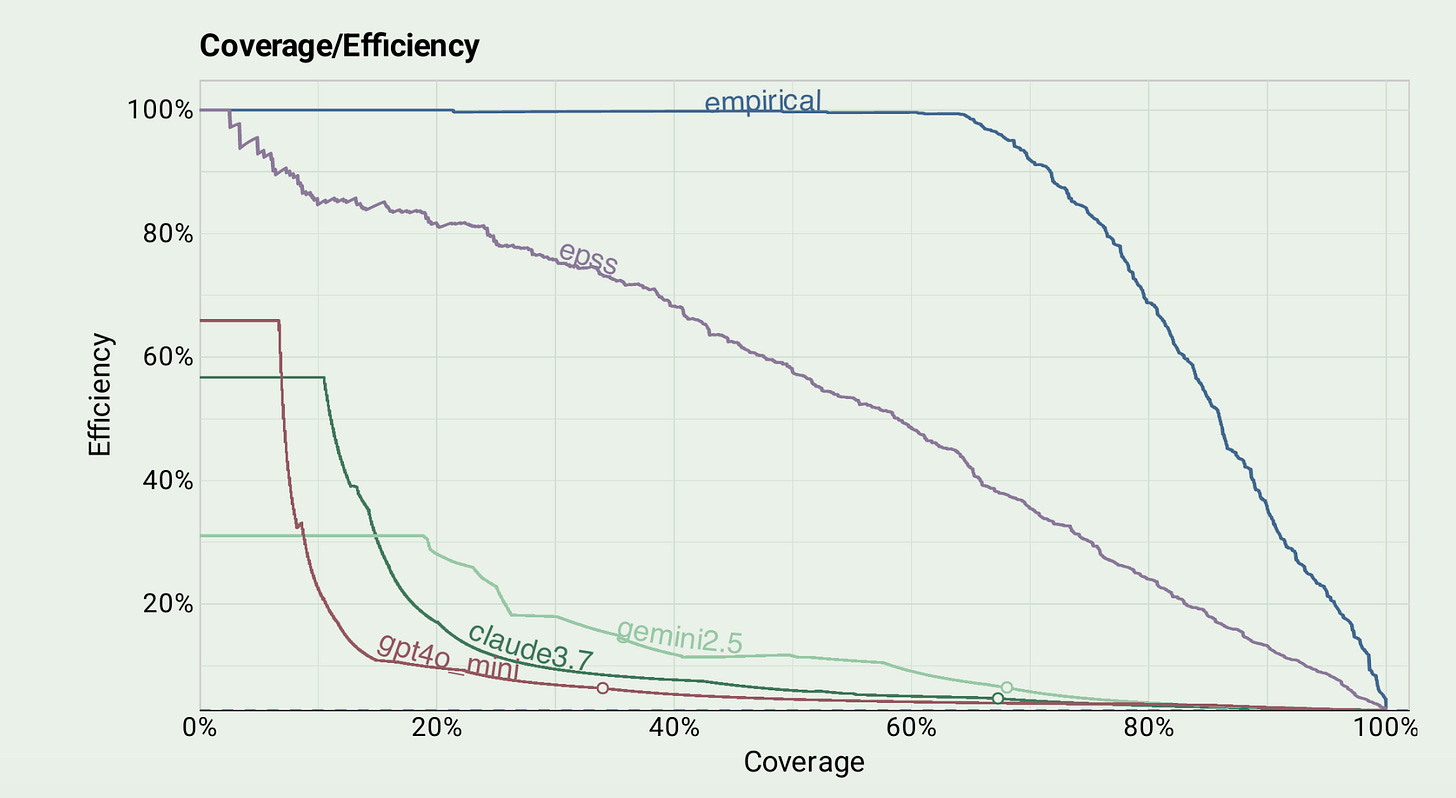

Benchmarking LLMs on the Vulnerability Prioritization Task

We seem to be living in an era where AI and LLMs are treated as the answer to everything…and they shouldn’t be. This recent research from the Empirical Security team is a good reminder of that, as they compared the performance of LLMs to the Exploit Prediction Scoring System (EPSS) and found that LLMs underperform compared to EPSS.

Not only were the LLMs less effective at prediction, but there are also substantial inference costs involved, whereas EPSS is a free community resource. The LLMs generated probabilities that skew upwards relative to actual exploitation. That means they would not only be more costly due to inference, but would also likely lead to more false positives (FPs) and wasted remediation time and toil for developers, the exact thing we need to be moving away from as an AppSec community.
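To illustrate why upward-skewed probabilities translate into wasted remediation effort, here is a minimal sketch. The scores, the exploitation labels, the threshold, and the function name are all made-up toy values for illustration; none of them come from the Empirical Security research or from real EPSS data.

```python
def false_positives(scores, exploited, threshold):
    """Count items flagged for remediation (score >= threshold) that were never exploited."""
    return sum(
        1 for score, was_exploited in zip(scores, exploited)
        if score >= threshold and not was_exploited
    )


# Hypothetical toy data: five vulnerabilities, only the first was actually exploited.
exploited = [True, False, False, False, False]

# Hypothetical well-calibrated scores vs. scores that skew upward.
calibrated_scores = [0.90, 0.05, 0.02, 0.10, 0.01]
skewed_scores = [0.95, 0.40, 0.30, 0.60, 0.20]

THRESHOLD = 0.25  # hypothetical "remediate now" cutoff

calibrated_fp = false_positives(calibrated_scores, exploited, THRESHOLD)
skewed_fp = false_positives(skewed_scores, exploited, THRESHOLD)

print(f"False positives with calibrated scores: {calibrated_fp}")
print(f"False positives with upward-skewed scores: {skewed_fp}")
```

Both score sets catch the one exploited vulnerability in this toy example, but the skewed set also pushes unexploited items over the cutoff, which is the extra toil described above.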

Anthropic Proposes Transparency Framework for Frontier AI Models

Anthropic’s CEO recently announced that the Anthropic team is proposing a targeted framework that involves a series of transparency rules around the safe and secure development of frontier AI models. They’re also aiming for it to be lightweight and flexible so that it does not hamper innovation.

It is being proposed because, while industry, governments, and academia may be working on safety standards and evaluation methods, these will likely take several years to be fully matured, implemented, and adopted or required.

Their proposal would require developers to publicly release a secure development framework which details how they assess and mitigate unreasonable risks, and also publish a system card which summarizes their testing and evaluation procedures.

AppSec, Vulnerability Management and Supply Chain

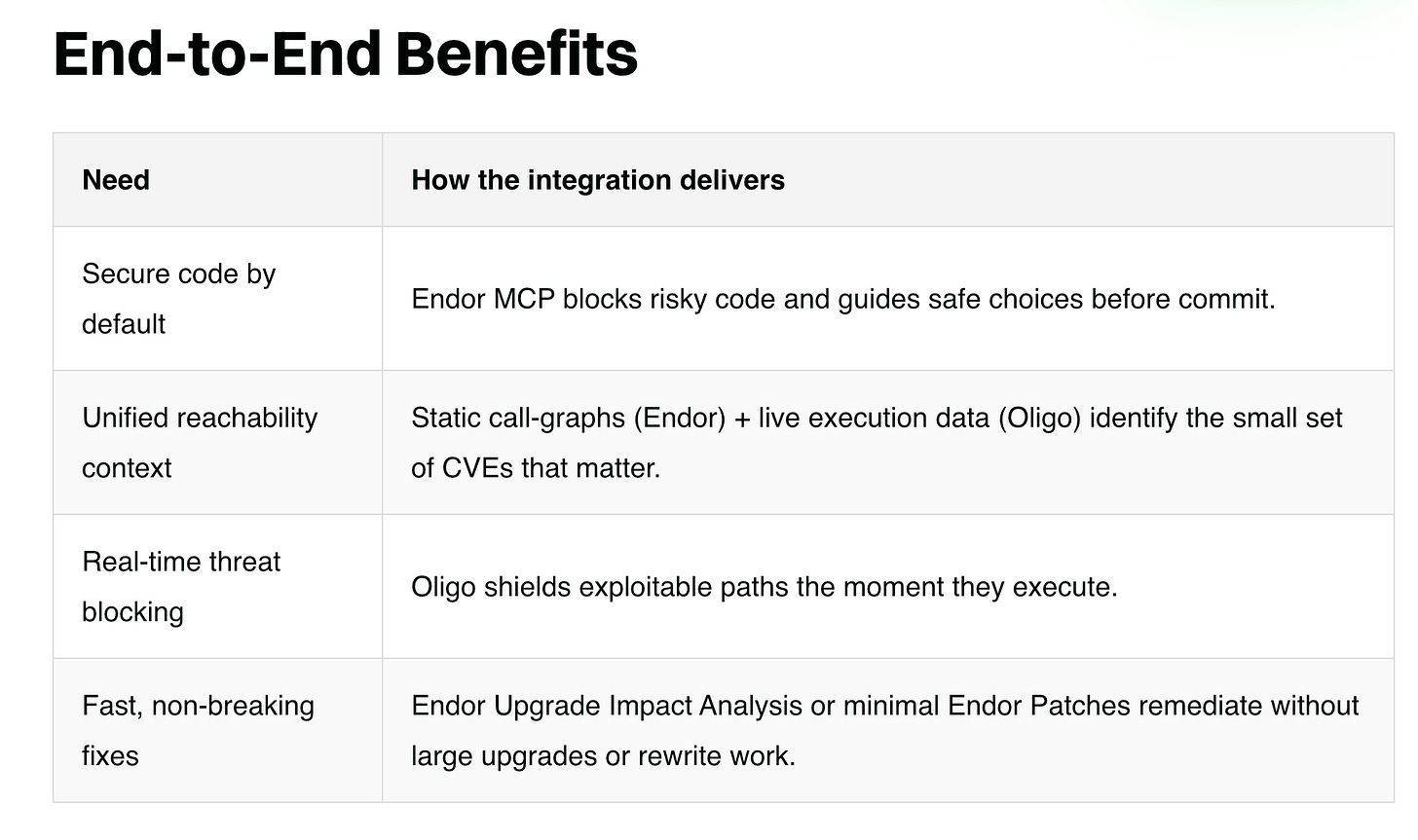

Endor Labs & Oligo: Closing the Loop Between Secure Code and Secure Runtime

If you’ve been following me for a while, you’ve likely heard me mention both teams, Endor Labs and Oligo. I currently serve as the Chief Security Advisor at Endor Labs, and they’re a leader in the Software Composition Analysis (SCA) and AppSec space. In recent years, runtime security has also gotten more attention, as organizations look to not just “shift left” but also remain aware of runtime risks and vulnerabilities as well.

In this joint blog between the teams, they discuss how Endor Labs uses its MCP server to give AI assistants context-rich data, including call graphs, function-level reachability, and signed container images, in leading IDEs such as Cursor. Moving further in the SDLC, Oligo comes into play via the CI to provide an SBOM of what’s actually running, and provides that SBOM to Endor, including package IDs, licensing, and known CVEs, coupled with Endor’s static reachability graph.

This duo of Endor and Oligo provides runtime prevention coupled with context-rich remediation opportunities, providing end-to-end coverage for AppSec teams.

I love to see this partnership between two industry leaders each bringing their unique capabilities and differentiation to bear for AppSec teams to reduce organizational risks.

Continuous Vulnerability Management Standard

Everyone is talking about the latest FedRAMP® Continuous Vulnerability Management Standard and how FedRAMP is modernizing its approach to vulnerability management.

I'm a huge advocate of this, of course, having written a book titled "Effective Vulnerability Management" from Wiley.

That said, before discussing the fundamental changes they're making, I wanted to give a shout-out to Pete Waterman and the FedRAMP team for this line in particular:

"FedRAMP now works with the community to understand the impact of its policies and adjust them based on real-world experiences"

This seems trivial and a given, but the truth is many compliance frameworks and policy makers rarely take the time to truly listen to practitioners in the trenches doing the work, and revise and iterate compliance policies accordingly.

This is often why you have compliance requirements that are out of touch with reality and usually don't make sense, or worse, create more risks than they mitigate. You should read this if you're a Cloud Service Provider (CSP) working with the U.S. Government or DoD.

It demonstrates that FedRAMP is listening to the industry and moving towards factors such as known exploitation, exploitability, reachability, mitigating controls, and organizational context over legacy approaches such as CVSS base scores.
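As a rough illustration of what context-driven prioritization can look like compared to sorting by CVSS base score, here is a minimal sketch. The `Finding` fields, the tier names, the decision rules, and the placeholder CVE IDs are my own hypothetical assumptions; they are not FedRAMP's actual rules or thresholds.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    cve_id: str               # placeholder identifiers, not real CVEs
    cvss_base: float
    known_exploited: bool     # e.g., evidence of exploitation in the wild
    reachable: bool           # vulnerable code can actually be reached
    mitigating_control: bool  # a compensating control is in place


def priority(finding: Finding) -> str:
    """Hypothetical tiering that weighs context ahead of the CVSS base score."""
    exposed = finding.reachable and not finding.mitigating_control
    if finding.known_exploited and exposed:
        return "urgent"
    if finding.known_exploited or exposed:
        return "scheduled"
    return "backlog"


high_cvss_no_context = Finding("CVE-0000-0001", 9.8, False, False, False)
moderate_cvss_exploited = Finding("CVE-0000-0002", 6.5, True, True, False)

for finding in (high_cvss_no_context, moderate_cvss_exploited):
    print(finding.cve_id, finding.cvss_base, priority(finding))
```

In this toy example, the 9.8 lands in the backlog while the 6.5 is urgent, which is the opposite of what a CVSS-only ordering would produce.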

I’ll likely be doing a standalone deep dive piece on this updated FedRAMP Vulnerability Management guidance soon and why it is long overdue, and also helpful, so stay tuned for that.

Miggo Predictive Vulnerability Database

We all know that when it comes to vulnerability management, focusing on context such as known exploitation, exploitability, reachability, business context, and more is key. That is why it is cool to see runtime and Application Detection & Response (ADR) vendor Miggo share their predictive vulnerability database.

The database aims to not just document CVEs but also provide insights such as how a vulnerability works, how it can be exploited, and what defenders can do about it. This includes providing data such as function-level evidence, root cause analysis, exploit condition simulations, and even tailored WAF rules for mitigation.

Much of the data seems to be free, but there also seems to be an aspect of it that allows teams to pay for additional resources (e.g., WAF rules). Even that aside, the free insights are key to helping AppSec teams dig deeper and better understand vulnerabilities and what they can do about them.

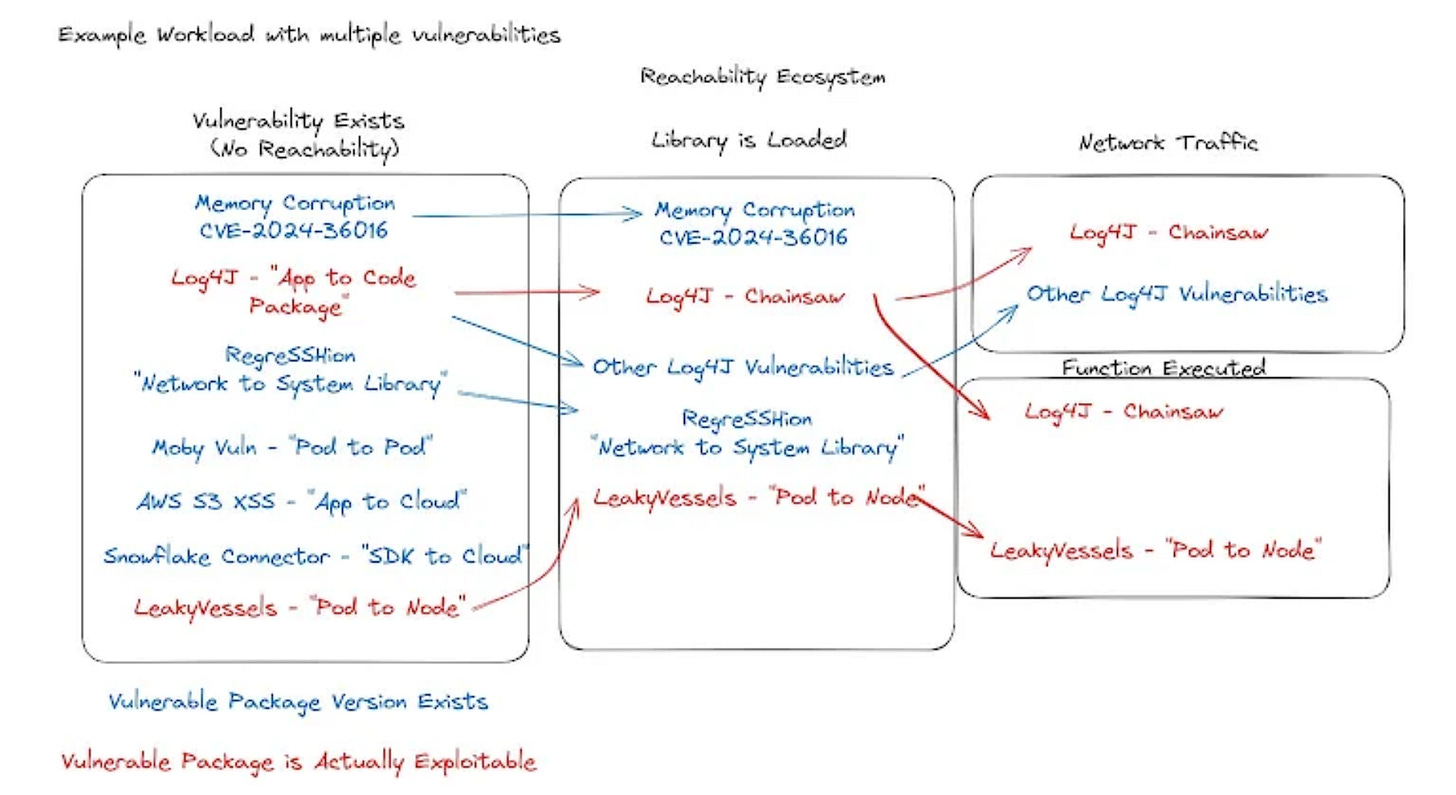

Everything to Know about Runtime Reachability

We’ve seen an industry shift (pun intended) from the hyper-focus on “shift left” to accepting that runtime visibility, reachability, and security are key as well. Production runtime workloads and environments are a core target for attackers, and many teams are fatigued by how poorly shift left has been implemented, with noisy tools and a lack of context.

In this piece on Hacker News, my friend James Berthoty provides a masterclass on “everything you need to know about runtime reachability”.

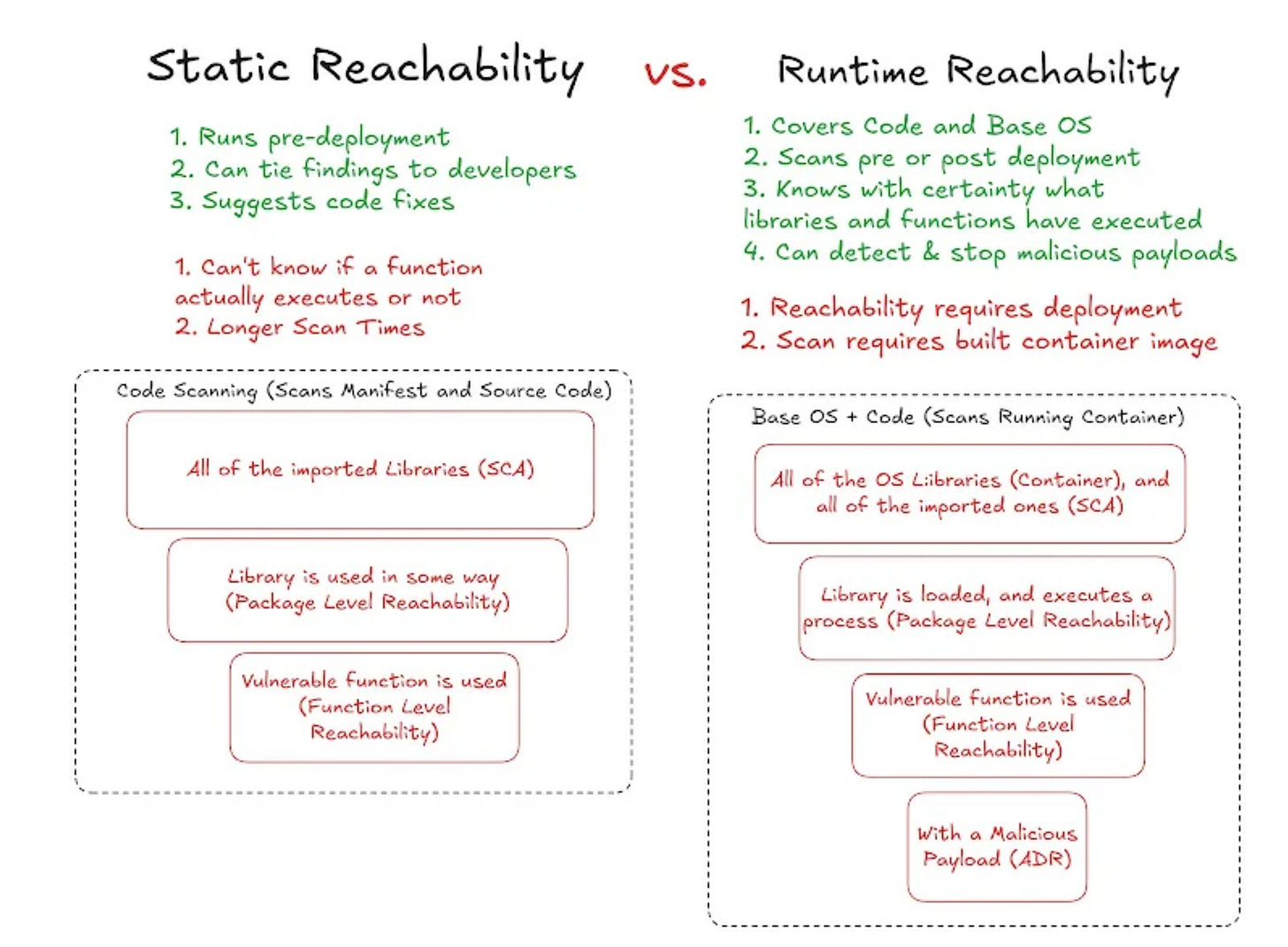

As James describes it, reachability is essentially about showing which vulnerabilities are exploitable (i.e., they can be reached/exploited). He compares static and runtime reachability and even argues that if the goal is to fix exploitable vulnerabilities, then runtime is the way to do so. I won’t go as far as James, because I think there is value in fixing things before they make it to production (and potentially get exploited), as well as earlier in the SDLC, but I understand the sentiment of what James means here.

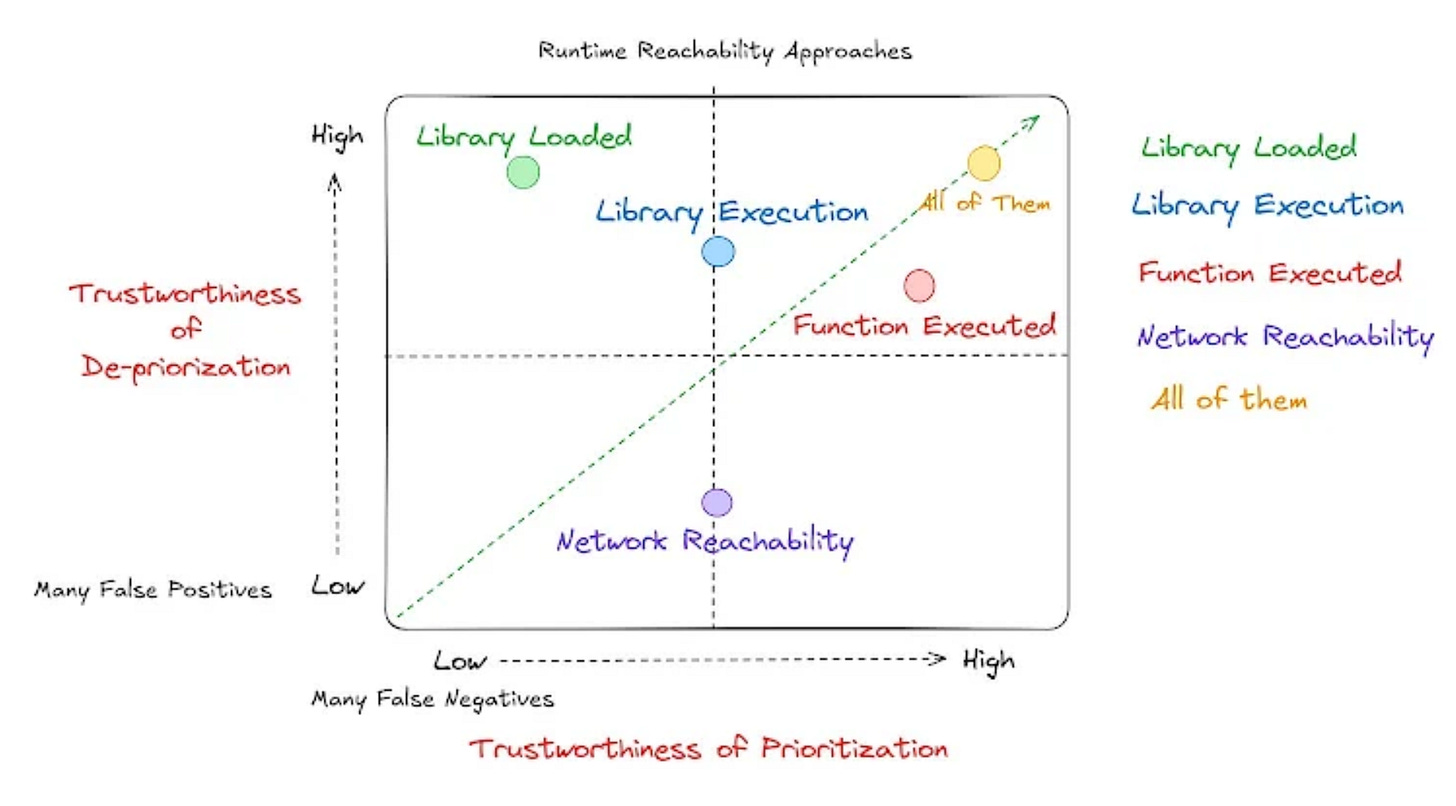

He lays out flavors of runtime reachability, as seen below:

They include a spectrum of trustworthiness of de-prioritization as well as a trustworthiness of prioritization, which is key as organizations want to make sure they focus on the right things and reduce risks to organizations while also not ignoring things which can have an impact.

James provides the below diagram to depict the key differences between Static and Runtime Reachability:

As you can see, the core differences include both when they occur in the SDLC and the types of insights they can (or cannot) provide. As James discusses, the context of exploitability can be complex, involving factors such as where the vulnerability exists in the workload, cloud, or application environments.

In short, reachability is complex and there is no silver bullet. James concludes by emphasizing that you need all of these approaches, depending on the vulnerability.
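One way to picture combining static and runtime signals is the sketch below. The three boolean signals, the tier labels, and the decision order are my own hypothetical simplification for illustration; they are not James's taxonomy or any vendor's implementation.

```python
def reachability_tier(
    statically_reachable: bool,
    loaded_at_runtime: bool,
    executed_at_runtime: bool,
) -> str:
    """Hypothetical tiering that combines static and runtime reachability signals."""
    if executed_at_runtime:
        # Strongest signal: the vulnerable function was observed running.
        return "prioritize"
    if statically_reachable and loaded_at_runtime:
        # A call path exists and the package is loaded, but no execution was observed.
        return "likely reachable"
    if not statically_reachable and not loaded_at_runtime:
        # Both views agree the code is not in play, so de-prioritizing is easier to trust.
        return "de-prioritize"
    # The two views disagree, so a human should take a look.
    return "needs review"


print(reachability_tier(True, True, True))
print(reachability_tier(True, True, False))
print(reachability_tier(False, False, False))
print(reachability_tier(True, False, False))
```

The point of the sketch is that neither signal alone decides every case: agreement between the static and runtime views is what makes prioritization or de-prioritization more trustworthy.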