Resilient Cyber Newsletter #53

Competing with Layer Zero in Cyber, DoD’s RMF Revamp, Using AI for Secure Coding, CVE Cost Conundrums, & AI’s Rise to the Top of Bug Bounty

Welcome!

Welcome to issue #53 of the Resilient Cyber Newsletter.

It’s been quite the heat wave where I live, but despite the heat, the industry continues to be as active as ever. I share a lot of great resources this week, including a look at competing with industry leaders, how to securely leverage AI for development, how an Autonomous Pen Testing company reached the top of one of the leading bug bounty boards, the true costs of CVE management, and more.

So, let’s get to it!

Interested in sponsoring an issue of Resilient Cyber?

This includes reaching over 45,000 subscribers, ranging from Developers, Engineers, and Architects to CISOs/Security Leaders and Business Executives.

Reach out below!

Endor Labs is the modern AppSec platform purpose-built for today’s fast-moving, AI- and open-source-driven software development.

We unify intelligent code reviews, static analysis, risk-based prioritization, and efficient remediation into a single, connected view of your entire software estate. This comprehensive graph spans open source, AI-generated, and human-written code, giving teams the visibility to identify changes, assess risks, and act quickly with clear, actionable fixes.

All of this is powered by flexible policies and APIs designed to scale with your development workflows.

Cyber Leadership & Market Dynamics

Identity Security Evolutions

I recently had a chance to chat with Okta’s Regional CSO, Matthew Immler, on the evolving identity security landscape—and how it’s being reshaped by everything from GenAI to nation-state threats.

One theme stuck with me: Identity is the new perimeter. And as non-human identities (NHIs) and AI agents become more embedded in our systems, the attack surface isn’t just expanding—it’s shifting.

Okta’s approach is interesting. Their “Auth for GenAI” initiative focuses on empowering developers to safely build GenAI features with secure-by-default authentication, without slowing down innovation.

We also explored a darker use case—how GenAI is powering DPRK’s IT contractor scams. Okta’s threat research team has done deep work here, and their recommendations for detection and prevention are worth a closer look.

What stood out most was how Okta leverages its massive global customer base for insights—using trends from real-world environments to shape their roadmap and improve defenses across the board.

Big thanks to the Okta team for the conversation—and for pushing the envelope in identity-first security.

Be sure to check out more of what they're up to!

A Letter to Security Vendors: Stop Making it Hard to Buy Your Product!

If you’ve explored new security products and solutions, you’ve inevitably run into how difficult they can be to procure. Discovery calls, sales engineering engagements, demos, and more often come before you ever get to touch a product, all while you knock out NDAs and jump through contractual and legal hoops.

This piece pleads with security vendors to make their products easier to buy. The procurement and sales process needs to change and adapt to suit the security customer, who is eager to get hands-on with a product and should not have to jump through countless hoops or get bogged down in a lengthy sales process before seeing whether a product is a good fit for their environment and their organizational security needs.

Competing with Layer Zero in Cybersecurity

While the concept of “layer zero” may not be familiar to some, it generally refers to the foundational layer of infrastructure and technology that other tools and software depend on. Think of identity providers, cloud service providers (CSPs), etc. At least, that is how Ross frames it in his latest piece.

Ross lays out the challenges security vendors face when competing with layer zero providers. Layer zero players enjoy several advantages: they are the bedrock that other technologies run on, they often control core capabilities such as identity, compute, networking, and data, and they can bundle their security “offerings” into their existing products and sales channels. That bundling is often a compelling option for those looking to consolidate products and lean into platforms over point solutions.

He also discusses several disadvantages of layer zero providers: security often isn’t their core competency or focus; their use cases are generic rather than purpose-built, given their broad user base; they function as black boxes that customers have limited insight into; they carry vendor lock-in and consolidation risks; and they cannot move as quickly or dynamically, because a broad customer base demands stability.

Ross goes on to lay out useful recommendations for product vendors planning to compete with/alongside layer zero providers. Many layer zero providers aren’t singularly or heavily focused on security, which creates opportunities or gaps that startups can build upon and address for the ecosystem.

Ross closes out the piece by laying out why it is often more valuable to work WITH layer zero than AGAINST it, and I agree with him. Not only can it accelerate your market traction and growth, but the layer zero players often end up acquiring the innovative startups that are filling existing industry gaps. That includes the industry leaders within cyber, which has its own layer zero on top of the broader tech layer zero providers (some inception type sh*t).

You don’t want to bite the hand that feeds you, or eventually will.

Crash (exploit) and burn: Securing the offensive cyber supply chain to counter China in cyberspace

We continue to see nation states bump into one another, leveraging cyber operations to exert geopolitical control and muscle. That said, this really insightful piece from The Atlantic Council examines whether the U.S. has the supply chain and acquisition capabilities to back up this activity, especially compared to China.

The piece emphasizes how zero-day vulnerabilities have now become a strategic resource, being used by military and intelligence services, all of which require a robust offensive cyber supply chain.

See key findings and recommendations from the report below:

DoD Looking to Revamp its Risk Management Framework (RMF)

Some of you may be wondering what the hell RMF is or why I’m sharing this. To put it briefly, the RMF is the process the DoD/Federal Government uses to authorize systems and software to operate on production networks and with production data.

The DoD’s Chief Information Officer (CIO) is currently seeking input from industry on how to revamp the RMF process after years of complaints from industry and DoD leadership themselves about its pain points, including manual cumbersome tasks, a lack of automation, and extended delays in getting innovative products and solutions into the hands of warfighters and DoD System Owners.

This includes significant changes to the DoD’s RMF process for external software, which I’ve written extensively about in an article titled “Buckle Up For the DoD’s Software Fast Track ATSO (SWFT).”

AI

Agentic Security Hub

2025 has been dubbed the year of "Agentic AI."

With that, there have been many discussions and resources around Agentic AI Security, so much so that figuring out where and how to get started can feel overwhelming. That's why this resource from Vineeth Sai Narajala is incredibly timely and helpful!

🗺️ It provides a comprehensive mapping between National Institute of Standards and Technology (NIST)'s AI RMF and OWASP® Foundation AISVS, complete with an interactive graph and integration matrix

🔐 Coverage of OWASP's AISVS and its 13 control categories

🛠️ Interactive AI Agent Architecture Diagrams with examples for: Sequential, Hierarchical, Collaborative Swarm, Reactive, and Knowledge Intensive Reference Architectures

🔥 A collection of comprehensive security controls and mitigations for Agentic AI systems and environments

This is such a cool resource for the community!

Shout out to the folks whose input and efforts helped shape it: Apostol Vassilev, Martin Stanley, CISSP, Matthew Versaggi, and Idan Habler, PhD, among others.

AI Innovative vs. AI Native

We are watching a trend where organizations and vendors are scrambling to adopt AI. For some, this means building from the ground up organically around AI, while others are retrofitting and upgrading existing products and platforms to incorporate AI features and functionality.

This paradigm can be thought of as AI Innovative vs. AI Native. The former includes companies that are making strides in upgrading their existing offerings to leverage AI, while the latter are born in the AI era and organically build around AI from the start.

James produced a simple graphic that helps distinguish between the two well.

James and I also sat down last week to dive into his AI Security Market analysis report, which is a great deep dive into the state of AI Security, including use cases, attack types, a market map and leaders, as well as an AI Security tool buying flow chart. You can catch the full conversation below:

Using AI for Secure Code Creation: Enhancing Software Security

When it comes to secure coding and applications, few have the reputation of Jim Manico. That’s why when I stumbled across a talk he recently gave at the Copenhagen Developers Festival on using AI for secure code creation, I knew I had to give it a listen and share it.

Jim lays out how AI is fundamentally changing software development and must be adopted, or you will likely be out of a job in the future, as those who do adopt it for software development are reaping its massive benefits as a force multiplier.

That said, Jim also emphasized that AI is like a truly amazing junior developer who needs proper guidance and oversight to produce functional and secure code.

Jim discusses some challenges with AI-generated code, emphasizing that models are trained on a large corpus of open source code, much of which is low quality and contains flaws and vulnerabilities. That leads to the same problems in the outputs, especially without proper oversight and secure usage. He lays out how much of this problem has to do with using raw prompts rather than robust and proficient prompt engineering.

This includes identifying security requirements, listing specific security standards the code should adhere to and clearly defining security outcomes in your prompts.
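To make those three ingredients concrete, here is a minimal sketch of a prompt builder that states security requirements, standards, and outcomes up front. It is not taken from Jim's talk: the `SecurePromptSpec` class, the `build_secure_prompt` function, and the example requirements are all hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class SecurePromptSpec:
    """Hypothetical container for the three ingredients named above."""
    task: str
    security_requirements: list[str] = field(default_factory=list)
    standards: list[str] = field(default_factory=list)
    security_outcomes: list[str] = field(default_factory=list)


def _section(title: str, items: list[str]) -> str:
    """Render one titled bullet section, or a placeholder when it is empty."""
    body = "\n".join(f"- {item}" for item in items) if items else "- (none specified)"
    return f"{title}:\n{body}"


def build_secure_prompt(spec: SecurePromptSpec) -> str:
    """Assemble a code-generation prompt that states security expectations up front."""
    return "\n\n".join([
        f"Task: {spec.task}",
        _section("Security requirements", spec.security_requirements),
        _section("Standards to follow", spec.standards),
        _section("Required security outcomes", spec.security_outcomes),
    ])


# Example values are assumptions for illustration only.
spec = SecurePromptSpec(
    task="Write a login handler for a web application.",
    security_requirements=[
        "Validate and length-limit all user input",
        "Use parameterized SQL queries",
    ],
    standards=["OWASP ASVS"],
    security_outcomes=["No secrets or stack traces in error responses"],
)
prompt = build_secure_prompt(spec)
print(prompt)
```

The empty-section placeholder is a deliberate choice: it makes a missing requirement visible in the prompt instead of silently dropping the section.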

There is still a need to perform automated testing (e.g., SAST, SCA, IaC scanning, et al.) to validate the security of the generated code, and Jim even discussed using other models to review the generated code as well.

Throughout the talk, Jim emphasized the importance of deep contextual architectural knowledge and how folks with these skillsets are not only not going to go away but will be in critical demand. One thing I couldn’t help but think as I listened to Jim’s masterclass was how I suspect 90% of people using these AI coding tools will not be providing such verbose and security-conscious prompts, likely perpetuating the massive attack surface the rapidly generated AI code is going to contribute to.

AI Testing Guide

The OWASP® Foundation team continues to be an industry leader in providing helpful and timely resources for AI Security. One of the latest examples is the draft OWASP AI Testing Guide. It's an open-source effort that provides comprehensive, structured methodologies and best practices for testing AI systems.

➡️ It leans into and complements existing efforts such as the GenAI Red Teaming Guide, AI Exchange, AI Security and Privacy Guide, and Top 10 for LLMs, all from OWASP.

➡️ Covers key activities such as Threat Modeling for AI systems and laying out an AI Testing Guide Framework to identify and mitigate risks from prompt injection, data leakage, hallucinations, and more.

There's no denying AI has some unique considerations, from non-deterministic behavior to agentic autonomy.

Shout out to Matteo Meucci and Marco Morana for leading this effort, and they're actively seeking input and contributors!

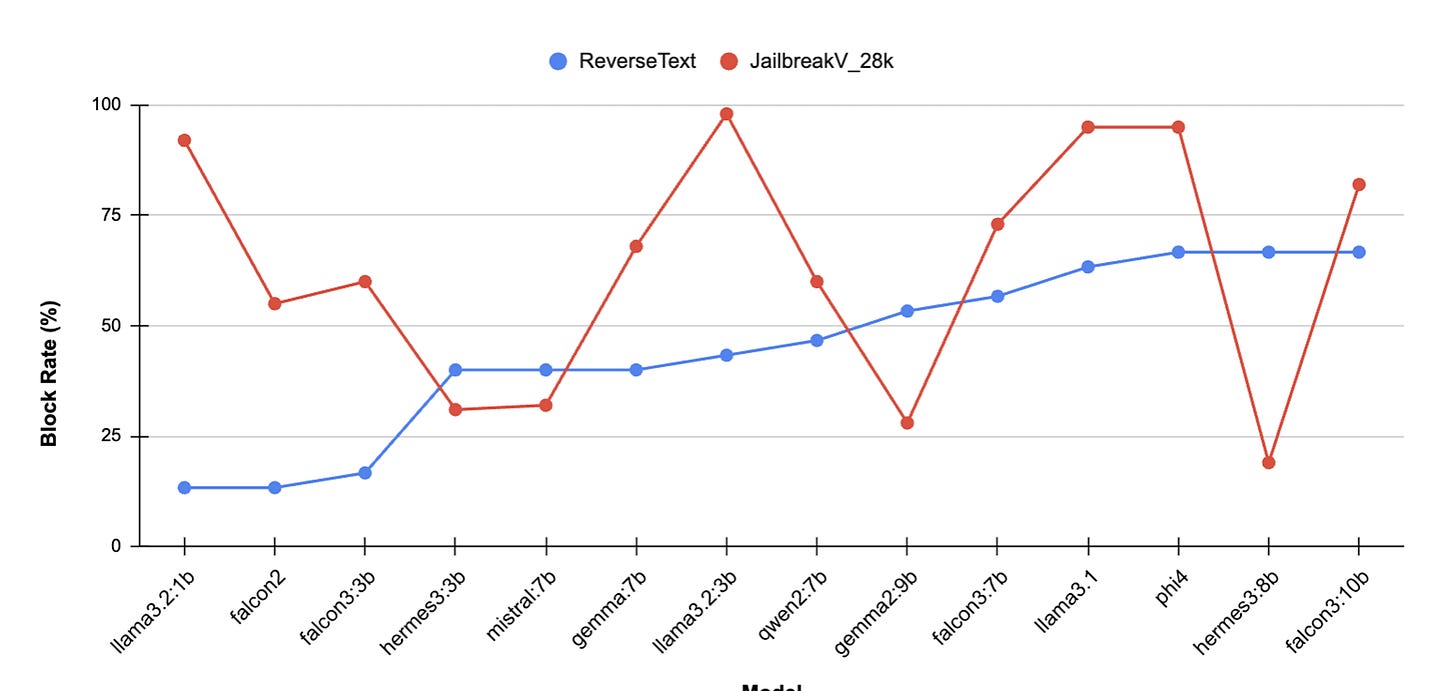

Security Steerability is All You Need 🛞

From a security perspective, one of the main challenges with LLMs is the manipulation of system and user prompts. This often involves prompt injections, jailbreaks, or perturbations to get the models to disclose data they shouldn't or behave in a manner that wasn't intended or authorized. This is an interesting paper by Itay Hazan, Idan Habler, PhD, Ron Bitton, PhD, and Itsik Mantin discussing the concept of "Security Steerability."

Defined as: "An LLM's ability to adhere to and govern the predefined system prompts scope and boundaries that do not fall under universal security."

They used two novel datasets to assess policy adherence and boundaries, testing how various open-source models performed against manipulation and jailbreak attempts, and looked at mitigating these threats.

AppSec, Vulnerability Management, and Software Supply Chain

💰 CVE Cost Conundrums 🤕

Let's face it, Vulnerability Management is a headache. It's time-consuming, burdensome, and distracting from an organization's core competencies of delivering value to customers and stakeholders.

But, just how costly is it?

It's pretty bad, as highlighted in Chainguard's "Cost of CVEs 2025" report.

I break it all down in my latest article, including:

CVEs are seeing double-digit year-over-year (YoY) growth, with organizations struggling to keep up and vulnerability backlogs getting out of control

Chainguard found that organizations that outsourced CVE remediation realized a $2.1 MILLION annual savings

Chainguard lays out the "CVE Doom Cycle", where organizations pull down images/containers, run security scans, dump hundreds and thousands of CVEs on engineering, blocking velocity and business outcomes - rinse and repeat.

The fact that image hardening is...hard, as demonstrated by Chainguard's recent "This Sh*t Is Hard" blog, which covers constantly maintaining hardened images, minimizing dependencies, and meeting SLAs

The business enablement benefits of outsourcing CVE management, unlocking tens of millions in value by focusing on increased revenue, faster innovation, and decreased risks.

Key benefits such as decreased customer escalations and improved customer trust

Many more excellent insights here in this detailed report from Chainguard. It's clear Chainguard's Container offering resonates with customers and is validated by other vendors now looking to provide a similar offering to the market.

The challenge for others, however, is that Chainguard has a first-mover advantage and has demonstrated that it has NO plans to slow down 🚀

Check out the full report → https://lnkd.in/e-inSKrU

Securing the Modern Workspace

In this episode of Resilient Cyber, we chat with Patrick Duffy, Product Manager at Material Security on Securing the Modern Workspace.

The conversation will include discussions about the increased adoption of cloud office suites, limitations of traditional security approaches, and a deep dive into how Material Security is tackling issues such as securing email and data, identity threat detection and posture management.

The Road to Top 1: How XBOW Did It

In a recent issue of the Resilient Cyber Newsletter, I shared how the AI-driven Autonomous Pen Testing company XBOW took the top spot on the HackerOne bug bounty board.

In this detailed write-up from XBOW, they explain how they achieved the top spot. It included rigorous benchmarking, testing XBOW with existing CTF challenges, building internal benchmarks, and looking for zero-day vulnerabilities in open source projects.

From there, they scaled discovery and scoping capabilities with the many potential targets and environments that HackerOne makes available. Knowing false positives from scans are a significant problem in vulnerability management, XBOW created “validators” that automated peer reviews to confirm vulnerabilities that XBOW found.

As this process matured, XBOW’s validated findings reported to HackerOne grew exponentially, see below:

XBOW states that it submitted nearly 1,060 vulnerabilities, all found through fully automated means and then reviewed by its security team before submission to ensure they aligned with HackerOne’s policies around automated tooling and scan submissions.

You can see the status of their submissions below, with some of them pending review, some being triaged, some resolved, and so on:

This informative and transparent piece from XBOW demonstrates how an automated pen testing tool has overtaken human researchers on one of the leading bug bounty boards in the world. This is an exciting time for Cybersecurity and AI's potential to disrupt our ecosystem.

It is also scary, as malicious actors actively use similar capabilities for their nefarious purposes.

Agentic AI Identity Security Cheatsheet

Identity for agents has been a really hot topic of discussion this year so far, with agents poised to drastically outnumber human users, and security practitioners and researchers raising alarms around potential identity security gaps and concerns for agents.

That said, it can be a confusing space to make sense of, with a lot of different vendors looking to tackle the problem as well as various risks and considerations to keep in mind. This Agentic AI Identity Security Cheatsheet is a great quick reference on the topic.

The piece goes on to discuss key considerations when it comes to Agentic AI identity, core capabilities vendors and offerings should have, and a rich set of industry and market resources, as well as calling out vendors and leaders in the space, such as the crew at Astrix Security, which I’m fortunate enough to get to collaborate with!