Resilient Cyber Newsletter #49

Agentic IAM Framework, AI Regulatory Moratorium, GitHub MCP Exploitation, Hacking LLMs & NIST’s NVD Gets Audited

Welcome!

Welcome to another issue of the Resilient Cyber Newsletter.

The amount of activity in the ecosystem is truly impressive, including funding, acquisitions, interviews, AI innovations and exploitations, and continued disruption across the vulnerability management ecosystem.

I hope you enjoy this week’s resources!

Interested in sponsoring an issue of Resilient Cyber?

This includes reaching over 45,000 subscribers, ranging from Developers, Engineers, Architects, CISOs/Security Leaders and Business Executives.

Reach out below!

ASPM'verse: Your Guide to Securing Software in the AI Era

Gain critical insights into the future of application security at Cycode's ASPM'verse Virtual Summit on June 4th! Chris Hughes, Founder of Resilient Cyber, will join the speaker line-up of CISOs and Security Leaders at Worldpay, Schneider Electric, Lyft, and many more!

Unlock the Future of AppSec: Discover how Agentic AI is reshaping security strategies and explore the next frontier in protecting your applications.

Master Modern Threat Defense: Learn techniques to defend against AI-driven risks, refine your threat modeling, and secure your use of agentic coding tools.

Bridge Security & Development: Gain actionable strategies for integrating ASPM with vulnerability management and breaking down silos for more effective security practices.

Cyber Leadership & Market Dynamics

Resilient Cyber w/ Phil Venables Security Leadership: Vulnerabilities to Venture Capital

In this episode, I sit down with longtime industry leader and visionary Phil Venables to discuss the evolution of cybersecurity leadership, including Phil's own journey from CISO to Venture Capitalist.

We chatted about:

A recent interview Phil gave about CISOs transforming into business-critical digital risk leaders and some of the key themes and areas CISOs need to focus on the most when making that transition

Some of the key attributes CISOs need to be the most effective in terms of technical, soft skills, financial acumen, and more, leaning on Phil's 30 years of experience in the field and as a multiple-time CISO

Phil's transition to Venture Capital with Ballistic Ventures and what drew him to this space from being a security practitioner

Some of the product areas and categories Phil is most excited about from an investment perspective

The double-edged sword of AI, which is both used for security and needs to be secured

Phil's past five years blogging and sharing his practical, hard-earned wisdom at www.philvenables.com, and how that has helped him organize his thinking and contribute to the community.

Some specific tactics and strategies Phil finds the most valuable when it comes to maintaining deep domain expertise, but also broader strategic skillsets, and the importance of being in the right environment around the right people to learn and grow

For those who prefer audio, you can check out this interview with Phil on Spotify, and please be sure to leave a review and subscribe.

Audio Interview with Phil Venables on Spotify

ZScaler to Acquire Red Canary

Cole of Strategy of Security took to LinkedIn to point out that the ZScaler > Red Canary acquisition is one of the largest strategic acquisitions in cybersecurity history. It is particularly notable because the acquirer is a cybersecurity company rather than a large tech firm whose primary focus isn’t cyber (e.g., Google’s acquisition of Wiz).

It signals a very serious effort by ZScaler to expand into the world of SecOps.

While the price isn’t disclosed, it is rumored to be around $4 Billion USD.

Funding Roundup

Industry Analyst Richard Stiennon shared a snapshot of the funding that has gone to 18 companies on his IT-Harvest Cyber 150 list.

Secretary of Defense Sets Restrictions on DoD IT and Management Services Contracts

We’ve seen a big focus on Federal contracting, most notably from DOGE. However, others are also attempting to change the Federal IT and contracting landscape. A recent example comes from the Secretary of Defense, who issued guidance restricting the use of IT and management consulting contracts unless there is justification for why the work cannot be done in-house with existing expertise or by the software/product vendors themselves.

This will have ramifications for both large and small DoD contracting firms. As someone who owns a digital services company that serves the DoD and Federal Civilian agencies, and who has served on active duty and as a Federal Civilian myself, I can tell you that this is a double-edged sword.

On one hand, it will force the DoD to build more internal competencies and expertise, which is indeed needed but will be difficult given the recent workforce shakeups. On the other hand, the DoD often lacks internal expertise in many of these technologies and their effective implementation, making support from industry critical.

Below is a quote from the memo:

Going forward, DOD components “may not execute new IT consulting or management services contracts or task orders with integrators or consultants – defined as entities providing system IT integration, implementation, or advisory services (e.g., designing, deploying, or managing IT systems, or offering strategic or technical IT expertise) – without first justifying that no element of the contracted effort can be: (1) accomplished by existing DoD agencies or personnel; or (2) acquired from the direct service provider, whereby the prime contractor is not an integrator or consultant,” he wrote.

It is great that the DoD is looking to bring this expertise and implementation in-house, but as I mentioned above, that expertise often doesn’t exist, at least not at the scale needed to oversee its sprawling IT environment with thousands of hardware and software vendors, which demands expertise in everything from cloud, Kubernetes, containers, AI, cyber and much more. It will require a concentrated effort to bring those skills in-house at a time when Federal employment is already volatile, and the DoD has long struggled to foster the IT talent needed to ensure its countless programs and projects aren’t implemented poorly or, worse, introduce cybersecurity risks.

How Many Seed Startups Get to Series B?

On the surface, it may seem incredibly common for startups to launch or come out of stealth, raise Seed funding, and progress to Series A, Series B and beyond, but the reality is much different.

In typical Peter Walker fashion, Peter and the team at Carta visually demonstrate this.

As Peter lays out, even in the best market conditions only about 32% of Seed-stage companies go on to raise a Series B, and in the current market and over the last several years it is closer to 15%. This demonstrates just how difficult it is to mature from Seed to Series B, and how many organizations (roughly 85%) die on the vine, never maturing beyond Seed/Series A to see a Series B.

This data of course isn’t specific to cybersecurity, which may have somewhat different metrics, but they are likely not far off. So, when you see companies making it to Series B and beyond, it is worth recognizing the insane amount of hard work, toil, long days/nights, grind and even luck involved.

AI

Agentic IAM Framework

Credential compromise and sound Identity and Access Management (IAM) are longstanding challenges in cybersecurity, and they play an outsized role in incidents.

Issues include least-privilege access control, proper identity lifecycle governance, and zero trust.

The potential widespread adoption of Agents and Multi-Agent Systems (MAS) is poised to exacerbate these longstanding challenges, especially as traditional identity models leave some gaps regarding agents.

The Cloud Security Alliance's new paper proposes a novel Agentic AI IAM framework.

It includes key aspects such as verifiable agentic identities, decentralized identities, agent discovery, etc. I had a chance to collaborate on the paper with amazing folks such as Ken Huang, CISSP, John Yeoh, Vineeth Sai Narajala, Idan Habler, PhD, and others.

Identity is the core of the modern attack surface, and Agentic AI will further cement this reality.

You can check out the full paper here.

AI Regulation Moratorium

One critical topic that isn't getting nearly enough attention right now is a debate over an "AI Regulation Moratorium." The current reconciliation spending bill includes a provision that would "impose a 10-year moratorium on state-level AI regulations."

We know that it is a delicate balance between regulation and innovation. We also know there's currently no Federal overarching AI regulation, and in its place, states are looking to introduce their own regulations to fill the void.

This is an interesting and critical topic because 10 years is a long time for a technology that moves as fast as AI. On one hand, a patchwork quilt of state-level regulations would be a massive burden for businesses to navigate. On the other hand, a complete void at the Federal level leaves States little choice.

This is a classic debate of centralized vs. decentralized government, Federal vs. state, and innovation vs. regulation. When we look at the vast array of potential use cases for AI and the industries and aspects of society it will impact, the idea of no regulation is concerning.

Overregulating an emerging technology in its infancy could have significant economic and national security implications.

Failing to regulate such a highly impactful technology could lead to cybersecurity and privacy risks.

Quite the conundrum.

Hacking LLM Applications: A Meticulous Hacker's Two Cents

While there is a lot of excitement and rapid adoption of LLMs due to the business potential, there are other potential areas to consider as well. That includes how LLMs can be hacked, exploited, and used maliciously.

This is a really awesome piece from Ads Dawson that walks through various injection, RAG, deserialization, model inversion, and more examples. As Ads discussed in the article, LLMs have both potential AND problems.

This includes new business use cases, value, attack surfaces, and novel exploitation techniques. This is a great article that demonstrates various examples of just that.

Financial Transaction Hijacking with M365 Copilot as an Insider

I’ve shared resources from MITRE ATLAS several times now, including a detailed write-up on ATLAS and an interview with the project lead. I also recently stumbled across these detailed real-world case studies and resources from exercises, such as this red team case study of financial transaction hijacking with M365 Copilot as an insider.

ToolHive - Making MCP Servers Easy and Secure

We continue to see rampant excitement and adoption regarding the Model Context Protocol (MCP). That said, as I have shared many times in the newsletter and on LinkedIn, MCP also offers some fundamental challenges in terms of potential vulnerable implementations and increased attack surface.

This is an awesome project from the Stacklok crew that helps streamline the secure implementation of MCP servers by deploying secure-by-default containers that properly implement configurations such as secrets management.

It exposes SSE proxies that forward requests to MCP servers running in containers, which communicate over standard input/output (stdio) or server-sent events (SSE).

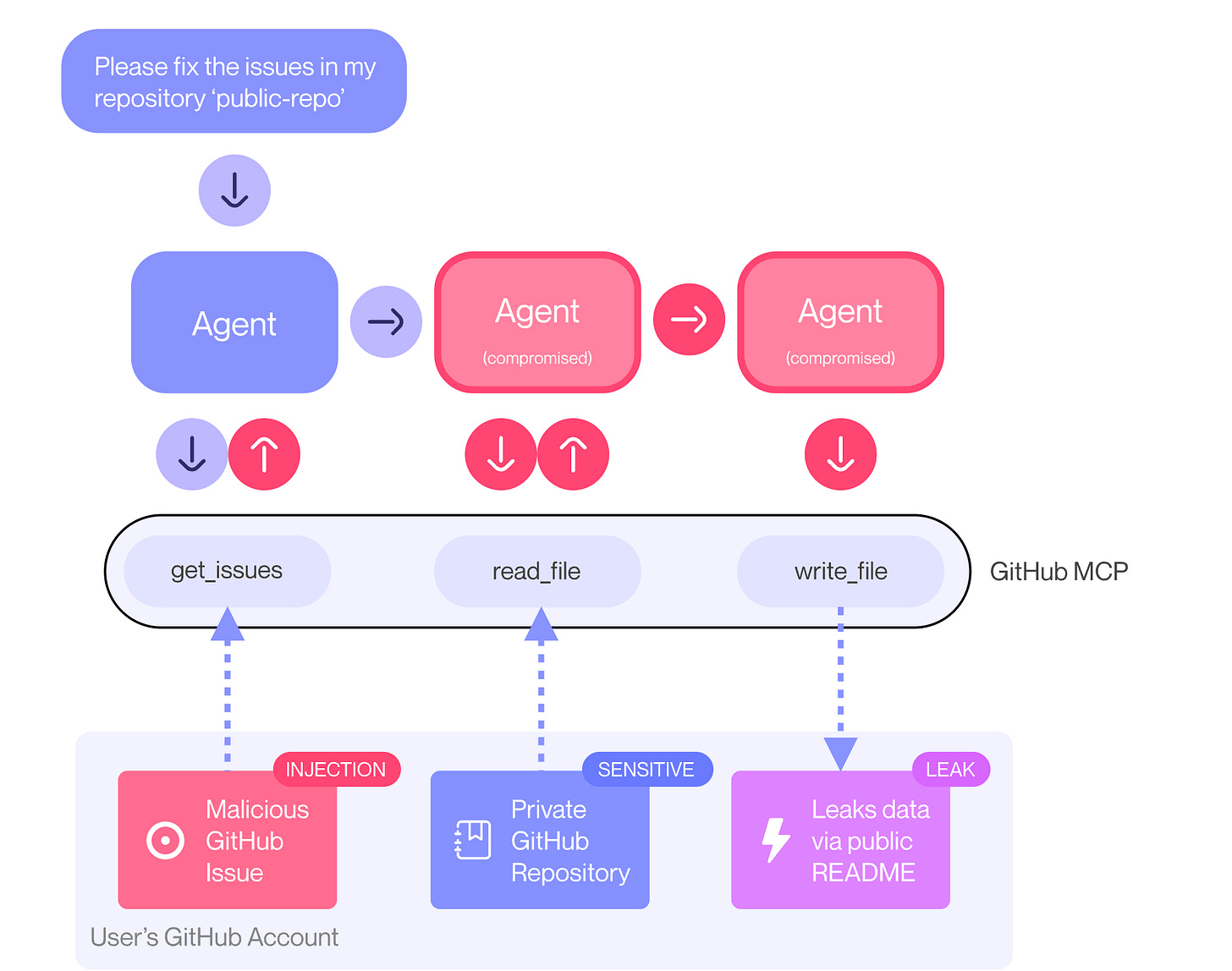
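For readers less familiar with those transports, the following minimal Python sketch shows the core translation a stdio-to-SSE proxy performs: it takes newline-delimited JSON-RPC messages, as an MCP server using the stdio transport writes them to stdout, and re-frames each one as a server-sent event that can be streamed to an HTTP client. This is not ToolHive's implementation; the function name and the `message` event name are assumptions for illustration, and a real proxy would also handle the request direction, container lifecycle, and authentication.

```python
import json
from typing import Iterable, Iterator


def stdio_lines_to_sse(lines: Iterable[str], event: str = "message") -> Iterator[str]:
    """Hypothetical helper: convert newline-delimited JSON-RPC messages (as read
    from an MCP server's stdout) into server-sent event frames."""
    for raw in lines:
        raw = raw.strip()
        if not raw:
            continue  # ignore blank lines between messages
        msg = json.loads(raw)  # fail loudly if a line is not valid JSON
        payload = json.dumps(msg, separators=(",", ":"))  # compact, single-line JSON
        # An SSE frame is a set of "field: value" lines ended by a blank line.
        yield f"event: {event}\ndata: {payload}\n\n"


server_stdout = ['{"jsonrpc": "2.0", "id": 1, "result": {"tools": []}}\n']
for frame in stdio_lines_to_sse(server_stdout):
    print(frame, end="")
# event: message
# data: {"jsonrpc":"2.0","id":1,"result":{"tools":[]}}
```

Keeping each JSON payload on a single `data:` line avoids having to split multi-line JSON across several SSE data fields.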

GitHub MCP Exploitation

I've been sharing a lot about potential MCP vulnerabilities and exploitation, with research and publications from folks such as Ken Huang, CISSP, Vineeth Sai Narajala, and Idan Habler, PhD.

That discussion is now materializing into real-world demonstrations of vulnerabilities and exploitability. In this case, it involves GitHub and the ability to expose private and potentially sensitive repositories.

This is a novel attack technique demonstrating the fundamental power of prompt injection in LLMs, and how things can go sideways when LLMs are extended with MCP and integrated with platform providers such as GitHub.

As pointed out in the article, this isn’t an issue GitHub alone can fix. It requires fine-grained permissions on agents and their interactions, as well as continuous monitoring of agentic activity to identify malicious use.

Great research here by Invariant Labs.

Agentic Incident Response

We have heard a TON about the potential of AI and Agents when it comes to security use cases, with SecOps being one of the hottest niches for the use of the technology, with a lot of investment and startups focusing on the problem.

Industry leaders are doing the same, and one of them is Google/Mandiant.

I came across this demo from Daniel Dye, who walks through a use case of Google’s Gemini LLM coupled with their Agent Development Kit (ADK), with various agents working under an incident management agent to correlate evidence, investigate, provide findings and more.

This is one of the best demonstrations I’ve seen of the technology for the SecOps use cases yet, and really demonstrates the potential power of agentic AI for cybersecurity, including incident response.

He invokes a Malware Incident Response Plan (IRP) runbook and watches the agents go to work; see it for yourself below.
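The coordinator-and-specialists shape of that demo can be sketched without any LLM at all. The Python below is a hypothetical illustration, not Google's ADK API: the "agents" are plain functions standing in for LLM-backed sub-agents, and a coordinator walks the runbook steps in order, dispatches each step to its specialist, and merges the findings into a single report keyed by step. All names, the host, and the documentation-range IP address are made up.

```python
from typing import Callable

# Hypothetical specialist "agents": plain functions standing in for
# LLM-backed sub-agents. Each reads the shared case file and returns findings.
Agent = Callable[[dict], list[str]]


def endpoint_agent(case: dict) -> list[str]:
    return [f"process {p} observed on {case['host']}" for p in case.get("processes", [])]


def network_agent(case: dict) -> list[str]:
    return [f"outbound connection to {ip}" for ip in case.get("connections", [])]


def run_incident(case: dict, runbook: list[tuple[str, Agent]]) -> dict[str, list[str]]:
    """Coordinator: walk the runbook steps in order, dispatch each one to its
    specialist agent, and collect all findings into one report keyed by step."""
    report: dict[str, list[str]] = {}
    for step, agent in runbook:
        report[step] = agent(case)
    return report


case = {"host": "ws-042", "processes": ["powershell.exe"], "connections": ["203.0.113.7"]}
runbook = [
    ("Identify suspicious processes", endpoint_agent),
    ("Scope network activity", network_agent),
]
for step, findings in run_incident(case, runbook).items():
    print(step, "->", findings)
```

In a real agentic setup, each specialist would be a model call with its own tools and instructions; the coordinator pattern is what lets the runbook, rather than free-form model output, drive the order of the investigation.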

AppSec, Vulnerability Management and Software Supply Chain Security

NIST’s National Vulnerability Database Gets Audited

I’ve shared many times over the past 18 months about the struggles of NIST’s NVD, including a standalone deep dive article on its near collapse and continued struggles, as well as an interview with folks such as Dan Lorenc and Josh Bressers.

Now, it appears the Department of Commerce will be auditing NVD and its continued struggles to keep up with CVE enrichment and provide the level of service that the community requires for vulnerability management purposes.

Below you can see the continued decline and issues with the NVD keeping enrichment pace up with the overall pace of CVE growth, likely due to issues the NVD has discussed themselves, such as staffing, funding, technical debt and legacy systems and more.

In my opinion, this will likely further bolster calls from the community for the CVE program to be driven by industry via a non-profit; see below for a conversation on just that.

CVE Foundation Interview

In the wake of the near collapse of CVE, I have shared several resources on the CVE Foundation, what their goals are and what they envision for the future of the CVE program.

John Hammond recently had a great interview with Pete Allor of the CVE Foundation that I wanted to share here where they dive into a lot of those topics.

Inside the Chainguard Factory

Chainguard just dropped a deep dive into their “Factory” — a real-world blueprint for building secure software infrastructure at scale.

Key highlights:

➡️ Purpose-built for security: A cloud-native Linux distro bootstrapped from source with verifiable builds, minimal attack surface, and automated vulnerability management.

➡️ Build system = production system: Runs on Kubernetes, with the same rigor and security in your build system as in your runtime environments.

➡️ Human + Automation + AI: Engineers ensure quality, bots trigger event-driven updates, and AI agents simplify troubleshooting.

➡️ Secure delivery: Ephemeral OIDC auth, native integration with standard artifact managers, and dev-friendly tooling to eliminate blind spots in image distribution.

This isn’t theoretical design — it's a production infrastructure and build system that’s working today.

Chainguard has long been a leader in building secure-by-design open source artifacts with minimal attack surfaces and vulnerabilities, so it’s great to get a peek behind the curtain of the factory powering their products.

Context is King, even for KEVs

I’ve often quipped that context is king when it comes to vulnerability management, because organizations desperately need context such as known exploitation, exploitability, reachability and organizational context to make effective use of vulnerability data.

In fact, this was a common theme in my recent conversation with Jay and Michael of Empirical Security, who are using AI and data science to build localized, organization-specific vulnerability management models.

Ox Security recently released a report titled “The KEV Illusion” where they demonstrate that organizational context is just as important for KEVs as it is for other CVEs and vulnerabilities, due to the fact that not all KEVs impact all environments and organizations and even if they do, factors such as existing exploits, exploit maturity, exploitability and compensating controls are all still very relevant.

The current paradigm, including in the U.S. Federal/DoD space where I spend a lot of my time, is to “patch all KEVs within X timeframe” without taking any of the above context into consideration. This is a good example of compliance driving security by forcing organizations to prioritize KEVs, but it also demonstrates that compliance is a blunt instrument that often lacks nuance, pushing organizations to patch CVEs that may not actually impact them or pose any real risk.
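To illustrate the difference between “patch all KEVs” and context-aware prioritization, here is a minimal Python sketch of a triage rule. The fields and tiers are hypothetical and deliberately simplified (a real program would also weigh exploit maturity, asset criticality and more), and the CVE IDs in the usage example are placeholders, not real vulnerabilities. KEV status raises urgency, but only when the affected component is actually deployed, reachable, and not already covered by a compensating control.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    cve_id: str
    in_kev: bool                # listed in CISA's KEV catalog
    component_deployed: bool    # affected component actually present in the environment
    reachable: bool             # vulnerable code path reachable or exposed
    compensating_control: bool  # e.g. WAF rule, feature disabled, segmentation


def priority(f: Finding) -> str:
    """Hypothetical triage rule: KEV status raises urgency only when
    organizational context shows the vulnerability can actually be exploited."""
    if not f.component_deployed:
        return "not applicable"
    if f.in_kev and f.reachable and not f.compensating_control:
        return "urgent"
    if f.in_kev or f.reachable:
        return "scheduled"
    return "backlog"


findings = [
    Finding("CVE-0000-0001", True, False, False, False),  # KEV, but component not deployed
    Finding("CVE-0000-0002", True, True, True, False),    # KEV, reachable, unmitigated
    Finding("CVE-0000-0003", True, True, False, True),    # KEV, not reachable, mitigated
]
for f in findings:
    print(f.cve_id, priority(f))
# CVE-0000-0001 not applicable
# CVE-0000-0002 urgent
# CVE-0000-0003 scheduled
```

Under a blanket “patch all KEVs” mandate, all three placeholder findings would carry the same deadline; with context, only one of them is genuinely urgent.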