Resilient Cyber Newsletter #38

FedRAMP in the Crosshairs, Vibe Coding Conundrums, Agentic AI Threat Navigator, AI Workforce Implications, & the State of Secret Sprawl

Welcome!

Welcome to another issue of the Resilient Cyber Newsletter.

It feels like things in the industry continue to heat up. Spring is nearly upon us, there’s a lot of talk about events such as RSA, and we’re seeing vendors preparing to make major product announcements and more.

That said, let’s get to it!

Interested in sponsoring an issue of Resilient Cyber?

This includes reaching over 45,000 subscribers, ranging from Developers, Engineers and Architects to CISOs/Security Leaders and Business Executives.

Reach out below!

Elevate Your Security with YubiKeys!

Cyberthreats are more sophisticated than ever. Passwords? They're outdated and can be cracked in a minute. Cybercriminals are intercepting SMS codes and bypassing authentication apps.

While businesses invest in network security, they often overlook the "front door" — the login.

Yubico believes the future is passwordless. YubiKeys offer unparalleled protection against phishing for individuals, SMBs, and enterprises. They deliver a fast, frictionless experience that users love.

Yubico is offering Resilient Cyber Followers a limited-time Buy-one-Get-one Offer.

Say NO to modern cyber threats.

Cyber Leadership & Market Dynamics

FedRAMP In the Crosshairs

As Federal programs increasingly come under scrutiny, the latest example is FedRAMP, the program responsible for authorizing commercial cloud service providers/offerings for use by U.S. Federal agencies and mission owners.

It’s being reported that the FedRAMP program is being reduced to “statutory minimums”, including a significant reduction or elimination of the contract staff supporting the FedRAMP PMO at GSA.

This is an interesting evolution, given that we know FedRAMP has struggled with velocity for years, with only ~375 approved service offerings in a market of tens of thousands, significantly hindering the Government’s ability to access commercial cloud services. A drastic reduction in support staff, without significant changes to the process and the introduction of automated assessment innovations, will only further exacerbate this challenge, which seems to be at odds with the administration’s push to streamline and expedite the Government’s access to commercial technology.

This article from MeriTalk also notes that some CSPs reported their monthly Continuous Monitoring (ConMon) syncs with the FedRAMP PMO have been cancelled or rescheduled due to the disruptions. That means the implications go beyond authorizations: the risks associated with the SaaS/Cloud services the Government consumes could also go unmonitored if ConMon activities don’t continue. Alternatively, the intent may be to have Federal agencies monitor the CSPs themselves, which would diminish the value of FedRAMP authorizations and the PMO.

Ironically, the article mentions that a report internal to TTS/GSA discusses the need to “unlock more throughput” for FedRAMP authorizations to get the government access to more software, but a reduction of the staff supporting the PMO is likely to do the opposite and exacerbate longstanding challenges.

7 Key Trends Defining the Cyber Market Today

While I’m not a huge fan of articles on trends, given everyone has a different perspective and many will focus on trends that align with their agenda, whether as a practitioner, vendor, investor and so on, this article does discuss some trends I think are relevant, along with their potential implications, including:

Increased M&A activity in support of platforms

Market leaders are gaining market share

The cyber VC pipeline remains strong

Platforms vs. Point Products - Why not both?

Prospects for standalone SIEM products are dim

AI/ML systems become new attack surfaces requiring protection

The rise of single-vendor SASE

The article cites that enterprise security budgets are supposed to increase roughly 15% in 2024, although I am unsure where they got this figure from, as we have seen mixed figures and projections. Nonetheless, it touches on several key trends, such as the large vendors in the ecosystem leaning into the platform narrative around security tool sprawl and potential cost consolidation, while VC investment in security startups remains strong as well.

We also have the reality that there is no single winner in the argument for Platforms vs. Point products and each will remain viable and part of enterprise tech stacks as long as security exists.

Did Trump Admin Order U.S. Cyber Command and CISA to Stand Down on Russia

Last week a heavily polarizing story broke (what isn’t these days) on whether or not the Trump administration ordered U.S. Cyber Command and CISA to stand down when it came to Russian planning and offensive cyber activities.

Kim Zetter wrote an excellent article diving into the story, tying together multiple sources, discussing potential implications and more, for those who have been following it.

State of Startups 2024/2025: Everything You Need to Know

By now, you know I’m a fan of Carta’s Peter Walker and all of the amazing insights he shares related to startups, investments, scaling and more. He recently joined a show to discuss Carta’s “State of Startups” report for 2024/2025, and it is full of awesome insights!

AI

Resilient Cyber w/ Lior Div & Nate Burke - Agentic AI and the Future of Cyber

In this episode, we sit down with Lior Div and Nate Burke of 7AI to discuss Agentic AI, Service-as-Software, and the future of Cybersecurity. Lior is the CEO/Co-Founder of 7AI and a former CEO/Co-Founder of Cybereason, while Nate brings a background as a CMO with firms such as Axonius, Nagomi, and now 7AI.

Lior and Nate bring a wealth of experience and expertise from various startups and industry-leading firms, which made for an excellent conversation.

We discussed:

The rise of AI and Agentic AI and its implications for cybersecurity.

Why the 7AI team chose to focus on SecOps in particular and the importance of tackling toil work to reduce cognitive overload, address workforce challenges, and improve security outcomes.

The importance of distinguishing between Human and Non-Human work, and why the idea of eliminating analysts is the wrong approach.

Being reactive and leveraging Agentic AI for threat hunting and proactive security activities.

The unique culture that comes from having the 7AI team in-person on-site together, allowing them to go from idea to production in a single day while responding quickly to design partners and customer requests.

Challenges of building with Agentic AI and how the space is quickly evolving and growing.

Key perspectives from Nate as a CMO regarding messaging around AI and getting security to be an early adopter rather than a laggard when it comes to this emerging technology.

Insights from Lior on building 7AI compared to his previous role, founding Cybereason, which went on to become an industry giant and leader in the EDR space.

DOGE Has Deployed Its GSAi Custom Chatbot for 1,500 Federal Workers

One group that has dominated and continues to dominate the headlines is the U.S. Department of Government Efficiency (DOGE), tied to massive layoffs, RIFs, investigating potential Fraud, Waste and Abuse (FWA), and being a politically polarizing entity all at once.

It is now being reported that DOGE has deployed a custom chatbot to thousands of Federal workers as they look to automate tasks, starting with deployment/use at the General Services Administration (GSA). The article discusses how they intend to eventually use the tool to analyze contract and procurement data, which isn’t surprising given the group has been applying significant scrutiny to existing and new contracts, as well as employee and contractor activities, at a scale that inevitably demands automation.

It is stated that GSA users are able to use models such as Claude Haiku 3.5 and Meta LLaMa 3.2, depending on what they are trying to accomplish. Being in cyber, my mind immediately jumps to the potential security concerns of the tool. The article does state that employees received a memo about how to use the product, informing them that non-public Federal data should not be put into the tool. However, as we all know, memos are far from an actual security control and users will do what users do.

25% of Startups in YC’s Current Cohort have Codebases Almost Entirely AI-generated

A story broke this week about how a quarter of Y Combinator’s current cohort of startups have codebases that are almost entirely (95%) AI-generated. This comes on the back of what is being called “vibe coding”, where developers (whether we want to call them that remains a question) use LLMs and describe what they want created without actually writing most or any of the code themselves.

I caught this comment as part of a discussion on YC’s YouTube channel, in an episode titled “Vibe Coding is the Future”, which is worth listening to, as the guests discuss the reality of startups heavily relying on LLMs to develop their code/apps, and also the implications for things such as speed to market and competition.

Security folks of course took to socials this week to discuss the implications of applications being created by individuals who in some cases don’t know how to code, or the sprawl of potentially vulnerable code that comes from being fully reliant on LLMs, given we know LLMs are heavily trained on OSS, which often inherently has vulnerabilities.

I would love to see the results of these codebases when put under the microscope of AppSec scanning tools, to see how vulnerable they are, or aren’t.

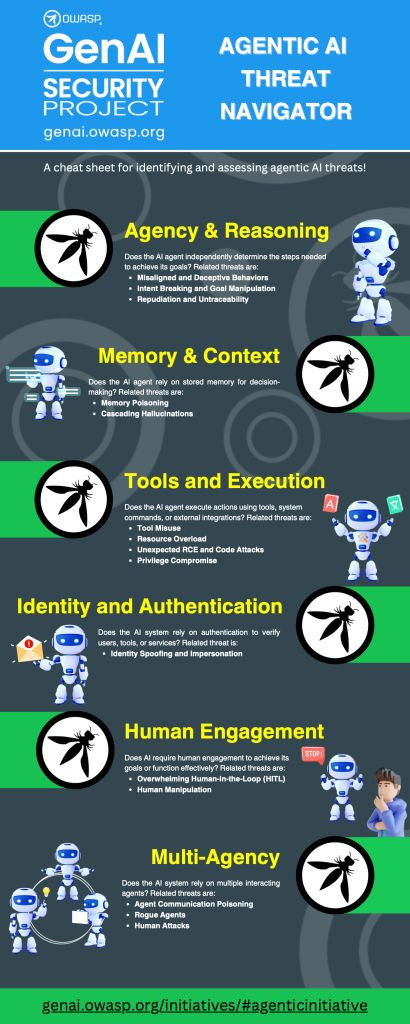

Agentic AI Threat Navigator

Agentic AI continues to be the buzz of 2025 (and beyond) and I have previously shared resources from OWASP’s GenAI Security Project, such as the Agentic AI Threats and Mitigations publication.

The team recently released this awesome visualization that consolidates some of the key attack surface areas and considerations for AI Agents, including:

Reasoning - the AI agent’s decision making process

Memory - how the AI retains and recalls information/context

Tools - usage of external tools and APIs by the AI

Identity - authentication and identity management of the agent

Human Oversight - Human-in-the-Loop supervision and control

Multi-Agent Interactions - dynamics and risks when multiple AI agents collaborate or compete

VulnWatch: AI-Enhanced Prioritization of Vulnerabilities

Organizations continue to explore and innovate with ways to use AI for various cyber use cases. That includes vulnerability prioritization, with a good example coming from industry leader Databricks.

They published a blog covering:

How they use AI to automatically identify and rank vulnerabilities in third-party libraries

The impact of AI on reducing manual effort for the security team

Key benefits of prioritizing vulnerabilities based on severity and relevance to Databricks infrastructure

They discuss the state of vulnerabilities, as the numbers continue to climb and become unrealistic for organizations to keep pace with, as well as efforts around prioritization, given that fewer than 5% of all vulnerabilities are ever exploited.

Their full process includes various activities such as gathering and processing vulnerability data, analyzing relevant features, using the AI to get CVE data, and scoring CVEs based on severity, all the way through creating tickets for follow up for the team.

They take advantage of various vulnerability sources and add enrichment based on factors such as the availability of patches, exploits, social media activity and more. They go on to lay out a complex scoring methodology they employ to rank severities based on the services impacted by a library.
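To make the idea of enrichment-based ranking concrete, here is a minimal sketch. It is not Databricks’ methodology: the `Vuln` fields, the weights and the placeholder IDs are all hypothetical, chosen only to show how severity can be blended with signals such as exploit availability, patch availability and the number of services impacted by a library.

```python
from dataclasses import dataclass


@dataclass
class Vuln:
    cve_id: str             # placeholder identifier, not a real CVE
    cvss: float             # base severity on a 0.0-10.0 scale
    exploit_public: bool    # enrichment: is exploit code known to exist?
    patch_available: bool   # enrichment: has a fix been published?
    services_affected: int  # internal services that depend on the library


def priority_score(v: Vuln) -> float:
    """Blend severity with enrichment signals (illustrative weights only)."""
    score = v.cvss / 10.0                         # normalize severity to 0-1
    if v.exploit_public:
        score += 0.5                              # a known exploit raises urgency
    if v.patch_available:
        score += 0.2                              # a fix exists, so it is actionable
    score += min(v.services_affected, 10) * 0.05  # relevance, capped at 10 services
    return round(score, 3)


def rank(vulns: list[Vuln]) -> list[Vuln]:
    """Return vulnerabilities ordered from highest to lowest priority."""
    return sorted(vulns, key=priority_score, reverse=True)


findings = [
    Vuln("CVE-EXAMPLE-1", cvss=9.8, exploit_public=False,
         patch_available=False, services_affected=0),
    Vuln("CVE-EXAMPLE-2", cvss=7.5, exploit_public=True,
         patch_available=True, services_affected=4),
]
for v in rank(findings):
    print(v.cve_id, priority_score(v))
```

In this toy data the lower-severity finding ranks first because it is exploitable, patchable and actually in use, which is the core argument for prioritizing on more than a base severity score.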

They even dive into choosing the LLM(s) you employ and how to handle scoring at scale. Really fascinating piece!

How AI Tools are Reshaping the Coding Workforce

We have seen a lot of disruption in the developer community with the rapid adoption of AI coding tools, from “productivity” boosts, to the NVIDIA CEO saying he wouldn’t advise early career entrants to learn to code, to organizations stating that they plan to scale back their developer hiring significantly.

This piece from WSJ looks at some of the impacts of AI coding tools on the developer workforce. This includes offerings such as GitHub’s Copilot being adopted by nearly 80,000 organizations in just ~2 years, and more and more organizations either actively slimming down their development teams or looking to do so, due to the tooling’s ability to maximize coding capabilities and output.

In the piece, leaders are cited as stating the tooling can replace some percentage of the entry-level workforce now, and may eventually be able to replace all entry-level code generation (which I think is a stretch, but it shows you where we may be headed).

Developers are shifting their focus to how to use the AI tools and honing their prompting skills, as opposed to a heavy focus on traditional programming. The piece cites Alphabet’s CEO, who stated that more than 25% of the new code they generate is via AI. We’re even seeing the unemployment rate in IT rise above the 4% figure for the economy as a whole, and some attribute it to the growing use of AI.

There are some interesting and concerning side effects of the widespread use of AI coding tools, which are discussed in this article I recently came across titled “New Junior Developers Can’t Actually Code”. The author discusses how nearly every junior dev they talk to is using these tools and has apps and code that work, but when asked why something works a particular way, or about edge cases and more, the developers often can’t explain it, given they didn’t really write it and may not understand what they used the tools to build.

This has some real implications for the future of debugging, troubleshooting, vulnerability management and more and ties to the piece we discussed above about YC’s cohort leaning into AI coding tools and “vibe coding”.

Using MSFT’s Copilot to Expose Thousands of Private GitHub Repos

While productivity may be up, it isn’t without its own novel risks and challenges as well. AI security firm Lasso recently reported how they were able to use MSFT’s Copilot to expose thousands of private GitHub repos.

They demonstrate that, through the combination of ChatGPT and Bing (which is used for indexing), data from repositories could be retrieved even though those repositories were private at the time of the inquiry. They referred to this as “zombie data”, which is data from repos that are now private or deleted but still accessible due to indexing by Bing and ChatGPT.

They called this the “Wayback Copilot Flow”, showing how indexing can surface information that was intended to be private, even if the repo was only made public briefly.

Lasso then goes on to automate identifying the “zombie” repos, and the findings are alarming, especially if a large portion of the repos were intended to be private and either were originally mistakenly created as public, or inadvertently set to public before being made private.

This brings a new meaning to the commonly used saying that once you put something on the Internet, it is forever. This is even more complicated and nuanced since GitHub is the largest developer platform in the world and of course ties back to MSFT, as does Copilot.

They reported their findings to MSFT, but the vendor categorized it as a Low finding, despite the potential that many of the repos may have sensitive data that can still be weaponized or exploited, such as tokens, keys and secrets.

AI In Cybersecurity: Looking Beyond the SOC

While much of the early attention on AI in Cyber has focused on the SOC, there are many other potential use cases as well, such as GRC and AppSec among others. This article from Michael Roytman, who recently founded his own startup named Empirical Security, looks at just that.

The overall theme of Michael’s piece looks to move from reactive (SOC) use cases to more proactive approaches when it comes to security. Michael makes the case that the SOC has made an early easy target for AI use cases, as organizations look to drive down metrics such as mean-time-to-detect (MTTD) and mean-time-to-respond (MTTR) to show early value with AI adoption for security, but that we must move to more proactive approaches with AI for cyber as well.

Failing to do so keeps security in a reactive rather than proactive mode, and contributes to the age old saying of “bolted on not built in” to some extent, although it doesn’t mean we shouldn’t look to use AI for SecOps use cases too.

Michael goes on to discuss leveraging AI for forecasting potential threats, rather than just responding to them faster, and focusing on areas such as vulnerabilities, misconfigurations and the potential for exploitation.

Michael closes the piece discussing five ways to extend AI beyond the SOC, which are:

Closing the loop (on repeated findings and issues)

Adopting integrated platforms

Fostering cross-functional collaboration

Automating cyber hygiene

Aligning budgets and metrics

AppSec, Vulnerability Management and Software Supply Chain Security (SSCS)

State of Secret Sprawl

Secret sprawl continues to be a challenge for organizations, as secrets are increasingly playing a role in security incidents and breaches. I've shared GitGuardian's State of Secret Sprawl report over the last few years. It is always full of excellent insights, and the latest edition is no different.

They found that:

23,770,171 new secrets were detected in PUBLIC GitHub commits in 2024, which is a 25% increase from just the year prior

70% of VALID secrets detected in public repos in 2022 are still active today

15% of ALL commit authors leaked a secret

35% of ALL private repos contain hardcoded secrets as well

The challenges with secrets sprawl and management continue to grow year-over-year (YoY), with organizations struggling with proper secret hygiene and posture management, making secrets a ripe target for exploitation and abuse by attackers.
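For readers who haven’t seen what “hardcoded secret” detection looks like in practice, below is a minimal sketch of pattern-based scanning. This is not GitGuardian’s engine. It assumes just two publicly documented token formats (AWS access key IDs starting with `AKIA` and classic GitHub personal access tokens starting with `ghp_`), and the `find_secrets` helper and sample text are hypothetical. Real scanners use far more detectors plus validity checks.

```python
import re

# Two well-known token formats; production scanners cover hundreds more.
PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "github_pat": re.compile(r"\bghp_[A-Za-z0-9]{36}\b"),
}


def find_secrets(text: str) -> list[tuple[int, str]]:
    """Return (line number, detector name) for each line matching a pattern."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in PATTERNS.items():
            if pattern.search(line):
                hits.append((lineno, name))
    return hits


# The key below is AWS's documented example value, not a live credential.
sample = 'region = "us-east-1"\naws_key = "AKIAIOSFODNN7EXAMPLE"\n'
print(find_secrets(sample))
```

Running a check like this in a pre-commit hook catches the easy cases before they reach a repo, though it says nothing about whether a matched secret is still valid.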

Software Development Teams Struggle as Security Debt Reaches Critical Levels

AppSec company Veracode’s 15th annual “State of Software Security (SoSS)” report was recently released and continues to show organizations are struggling to keep up when it comes to third party code, open source, their supply chain and vulnerability management.

Their report is incredibly comprehensive, looking at 1.3 million unique applications with over 1267 million raw findings across SAST, DAST and SCA. Some of the key findings include:

The average time to fix security flaws continues to grow, reaching 252 days on average, which is a 47% increase in the last 5 years, and a 327% rise since the report began 15 years ago.

This continues to demonstrate that organizations can’t keep pace, and vulnerabilities continue to mount, sitting ripe for exploitation by malicious actors

70% of critical security debt is tied to third-party code and software supply chains

The typical organization takes five months to fix just half of all detected security flaws
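As a quick sanity check on the time-to-fix figures above, the percentages can be worked backwards into implied baselines. This is back-of-the-envelope arithmetic, assuming both percentages are measured against the same average time-to-fix metric; the `implied_baseline` helper is purely illustrative and the results are not figures quoted from the report.

```python
def implied_baseline(current: float, pct_increase: float) -> float:
    """Value that, after growing by pct_increase percent, yields current."""
    return current / (1 + pct_increase / 100)


current_days = 252
five_years_ago = implied_baseline(current_days, 47)    # 47% increase in 5 years
at_report_start = implied_baseline(current_days, 327)  # 327% rise in 15 years

print(f"~{five_years_ago:.0f} days five years ago")
print(f"~{at_report_start:.0f} days when the report began")
```

Under that assumption, the average fix time was roughly 171 days five years ago and roughly 59 days when the report began.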

Some of the challenges they cited that they believe are contributing to these metrics include expanding codebases and complexity, competing priorities, distributed architectures and a shortage of skilled AppSec resources.

They also cite the challenges of the “productivity” boost with AI-generated code and copilots, leading to more code and, as a result, more vulnerabilities. I have been discussing this trend, and how many of the LLMs are trained on open source, which is rife with vulnerabilities, only accelerating the attack surface expansion.

Evaluating the AI AppSec Engineering Hype

If you’re like me, you’ve likely been watching the AI wave with a mix of excitement and skepticism about its potential and impact. This piece from James Berthoty of Latio Tech provides insight into a recent guide he wrote discussing “auto-fixing” and the various tiers, from LLM Integrations and Highly Agentic to Determinism + AI.

The guide looks at the different technical approaches vendors are taking to integrate AI into AppSec products and to address AppSec challenges around vulnerabilities. James covers both using AI to do static code analysis and using AI to create fixes for discovered issues.

As we look to see the impact AI will have on AppSec, and what vendors are doing in terms of capabilities and innovations, this is a really excellent report shedding light on the art of the possible.