Resilient Cyber Newsletter #35

Zombie “Unicorns,” AI Opportunity vs. AI Safety, Securing Agentic AI, Supply Chains & AI, & 2024 Trends in Vulnerability Exploitation

Welcome!

Welcome to issue #35 of the Resilient Cyber Newsletter.

We’ve got a lot of great insights this week, including zombie “unicorn” startups headed to the graveyard, a fiery speech by the U.S. Vice President on AI opportunities vs. safety, a handful of AI security publications from OWASP, and insights into how Developers view AppSec.

So here we go!

Interested in sponsoring an issue of Resilient Cyber?

This includes reaching over 30,000 subscribers, ranging from Developers, Engineers, and Architects to CISOs/Security Leaders and Business Executives.

Reach out below!

Cortex Cloud: Real-Time Security from Code to Cloud to SOC

Cloud threats are evolving fast. With the majority of security exposures occurring in the cloud and attacks surging, traditional security models just can’t keep up.

Introducing Cortex Cloud, the next version of Prisma Cloud merged with best-in-class Cortex CDR to deliver real-time cloud security. Built on Cortex, organizations can seamlessly adopt natively integrated capabilities as part of the world’s most complete enterprise-to-cloud SecOps platform.

Security teams gain a context-driven defense that delivers real-time cloud security – continuous protection from code to cloud to SOC.

Don’t wait days to resolve security incidents while attackers move in minutes. Stop attacks with real-time cloud security.

Cyber Leadership & Market Dynamics

The Unicorn Boom Is Over, and Startups Are Getting Desperate

We continue to hear how the heyday of zero interest rates (ZIRP), COVID, and other unique factors are over, and startups now face a different landscape. This was a key takeaway from my recent conversation with Mike Privette of Return on Security on his 2024 State of the Cyber Market Report.

This piece from Bloomberg really hammers the point home.

It points out how, in 2021, well over 1,000 VC-backed startups had reached valuations above $1 Billion (aka “Unicorn” status). However, as we have seen in recent years, times have changed; there is more emphasis on profitability and sustainability than just growth at all costs, interest rates have remained persistently higher, and the IPO “window” has all but closed for many tech and cyber companies.

The piece, from Katie Roof at Bloomberg, discusses how several among that group have now been acquired for less than $1 Billion, and some have outright gone under. It calls this the era of the “zombie unicorn,” where a record 1,200 VC-backed unicorns have yet to go public or get acquired, and some are starting to take desperate measures, such as accepting unfavorable terms.

We saw IPOs peak in 2021 with a massive spike from 2020, followed by a sharp drop that has yet to recover to anywhere close to 2021 levels, let alone prior windows.

Katie points out that almost all of the unicorns from 2021 have now taken “down rounds,” where their valuations are lower than in the past. Fewer than 30% have raised any financing in the past three years, likely due to difficulties in doing so. The article discusses how the challenges of down rounds and layoffs run contrary to the rosy picture of growth and momentum that founders and startups paint for investors, causing fundraising to be even more difficult.

This is a vicious cycle that impacts recruiting and retention, too. Startups leverage equity as a heavy, enticing portion of the compensation, and as momentum is lost, that equity angle loses its luster.

We continue to see the timeline from unicorn valuation to IPO grow, too. Some suspect that may shift with the change in administrations and the accompanying economic excitement, but that remains to be seen.

The article closes with a damning quote on where many of the previously shiny and exciting “unicorns” may be headed.

“Unless we have another irrational valuation environment created by zero interest rates” like we had in the pandemic, he says, many of these zombie unicorns are “going to wind up in the graveyard.”

For some great additional context, Peter Walker, Head of Insights at Carta, made a LinkedIn post providing insights into the numbers and the options startups have. If you aren’t already following Peter, I strongly suggest you do!

Among his points is the fact that there were 616 unicorns on Carta in 2021. Since then, 14 have shut down entirely, and nearly half of those that have fundraised since 2021 have taken a down round.

Ouch.

WTF? Why the cybersecurity sector is overrun with acronyms

Everyone in cyber knows about the acronym soup. FedRAMP, HIPAA, HITRUST, SOC2, CNAPP, CSPM, CWPP, EDR, CVSS, EPSS, KEV, and the list goes on and on (literally). This article examines why the industry is overrun with acronyms and some of the challenges it causes.

Key challenges include complicated communications, which lead to confusion and outright headaches. This can surface during incident response, in a crisis, or in organizational policies and processes.

The article discusses many valid reasons why we use acronyms and what led to their creation.

UK Orders Apple to Build a Backdoor in Encrypted iCloud Backups - and U.S. Responds

The UK recently ordered Apple to build a backdoor into encrypted iCloud backups, which should be alarming to cyber and privacy advocates everywhere. Luckily, the U.S. government doesn’t seem on board with this invasive push, as Sen. Ron Wyden (D-Ore.) is leading an effort to propose legislation to better safeguard U.S. citizens’ digital communications.

Sen. Wyden wants to reform the Clarifying Lawful Overseas Use of Data (CLOUD) Act.

31 Myths and Realities Around Platformization Versus Best-of-Breed

The heated debate around Platforms vs. Best-of-Breed continues.

I personally think it is a false dichotomy, and I have written about the true reality: all companies generally start as point products, then either evolve organically to expand capabilities or acquire others to add to their portfolio of capabilities. Some continue growing market share and presence on this path, while others get acquired by industry leaders and rolled into existing platforms. New entrants tackling new threats or industry gaps emerge, and the cycle continues indefinitely.

That said, there are some nuances and this article has some interesting perspectives from some of my friends in the security industry discussing key myths and realities around the debate.

The Point Product vs. Platform debate is best articulated in this image from my friend Ross at Venture in Security, in his article “Platform vs Best of Breed is a Wrong Way of Looking at the Industry”.

As you can see above, it is a cycle, and it repeats indefinitely: new companies emerge, companies evolve, some get acquired, others develop more organically, and then rinse and repeat.

AI

Focusing on AI Opportunity Not Just Risks

I’ve discussed previously how the Trump administration quickly revoked the prior AI Executive Order (EO) under Biden, which, much like the EU’s approach, had a big focus on safety and security.

Trump’s team published a new AI EO titled “Removing Barriers to American Leadership in Artificial Intelligence,” which focused on unleashing the private sector’s ability to innovate with AI and removing what The White House dubbed unnecessarily burdensome requirements when it comes to AI.

Vice President JD Vance delivered a speech recently in the EU directly tackling the topic of AI opportunity vs. safety and discussing the EU’s emphasis on being a regulatory superpower and hindering AI before it even has a chance to get going.

He tied AI to everything from economic prosperity to national security, while also emphasizing AI’s ability to improve countless aspects of society and included discussions about the workforce and potential impacts there as well.

For the risk averse, this talk will certainly be unsettling, but for those focused on innovation, speed to market, and the full potential of AI, the talk is likely an exciting one.

JD emphasized that safety shouldn’t go out the window, but it can’t overshadow the emphasis on opportunity. This was a visibly uncomfortable talk for many in the EU who hold the opposing viewpoint that safety and security of AI should be the primary focus.

LLM And GenAI Data Security Best Practices

Data security is a fundamental part of any sound security program. This includes LLMs and GenAI as well. That is why it is awesome to see the OWASP Top 10 for LLM Apps & GenAI Data Security Initiative publish their “LLM and GenAI Data Security Best Practices” paper.

It covers key topics such as:

Traditional vs. LLM-Specific Data Security

Key Risks of Data Security in LLMs

Practical LLM Data Security Principles

Future Trends & Challenges in LLM Data Security

Secure Deployment Strategies for LLMs

LLM Data Security Governance

I’m honored to be listed among the contributors to the paper. As I have said many times recently, the pace and quality of the GenAI Security publications and guidance coming out of OWASP is second to none!

Supply Chains ⛓️‍💥 & AI

The topic of supply chain security has gotten less attention lately due to the AI craze.

That said, supply chain risks are still very relevant in the age of AI too. This is an excellent paper from Google that covers:

Development lifecycles for traditional and AI software

AI supply chain risks

Controls for AI supply chain security

Guidance for practitioners

There are a lot of parallels between software supply chain concerns and AI, especially in the open-source realm and on platforms such as Hugging Face.

Governing AI Agents 🤖

We continue to hear about the hype of Agentic AI or AI Agents, which take the promise of LLMs and empower them to act either semi- or fully autonomously. While there is tremendous potential and nearly unlimited use cases, there are also key security considerations and challenges.

This is an excellent paper covering:

👉 AI Agents: Beyond LLMs, Goals, Plans, Actions and Concerns

👉 Evergreen Agency Problems such as information asymmetry, authority, loyalty, and delegation

👉 Limits of agency law and theory, including incentive design, monitoring, and enforcement

👉 Implications for AI design and regulation, which include inclusivity, visibility, and liability

I found this to be an incredibly comprehensive and well-researched paper on the challenges surrounding AI agents. We must address many agentic governance issues as we prepare to see pervasive agent use and implementation.

Agentic AI - Threats and Mitigations 🤖

I keep emphasizing that the pace of the OWASP Top 10 for LLM Apps team is impressive, and I’m not joking. This week I have another publication to share from the team, this one focused on Agentic AI.

2025 has been dubbed the year of "Agentic AI" by leading firms such as Sequoia Capital and others. Everywhere you turn, organizations are investing in Agentic AI startups, innovators are looking to disrupt countless industries, and enterprises are looking to leverage agents' potential.

But what about Agentic AI Threats? That's where the new OWASP® Foundation Agentic AI - Threats and Mitigations publication comes in.

It covers:

👨‍🏫 Fundamentals of AI Agents

🏛️ Agentic AI Reference Architecture

😈 Agentic AI Threat Model

🗺️ Agentic Threats Taxonomy Navigator

🦺 Mitigation Strategies

This is the most comprehensive paper I've seen on Agentic AI Threats and Mitigations. I'm continuously impressed by the OWASP Top 10 For Large Language Model Applications & Generative AI team. I'm also humbled to get to participate and be listed among the reviewers with this amazing group of passionate security leaders 🙏

There is much more to come from this team in 2025, so stay tuned!

AppSec, Vulnerability Management, and Software Supply Chain

AppSec Industry Analysis & Trends

In this episode of Resilient Cyber, we catch up with Katie Norton, an Industry Analyst at IDC who focuses on DevSecOps and Software Supply Chain Security. We will dive into all things AppSec, including 2024 trends and analysis and 2025 predictions.

Katie and I discussed:

Her role with IDC and transition from Research and Data Analytics into being a Cyber and AppSec Industry Analyst and how that background has served her during her new endeavor.

Key themes and reflections in AppSec through 2024, including disruption among Software Composition Analysis (SCA) and broader AppSec testing vendors.

The age-old Platform vs. Point Product debate, and the iterative and constant cycle of new entrants and innovations that grow, add capabilities, and either become platforms or are acquired by larger platform vendors, after which the cycle continues indefinitely.

Katie's key research areas for 2025 include Application Security Posture Management (ASPM), Platform Engineering, SBOM Management, and Securing AI Applications.

The concept of a “Developer Tax” and the financial and productivity impact legacy security tools and practices are having on organizations while also building silos between us and our Development peers.

The role of AI when it comes to corrective code fixes and the ability of AI-assisted automated remediation tooling to drive down remediation timelines and vulnerability backlogs.

The importance of storytelling, both as an Industry Analyst, and in the broader career field of Cybersecurity.

What Developers Think About Security in 2025 - and Why it Matters

Security teams often struggle to get developers fully engaged with application security. But why? Jit’s latest survey dives into the developer perspective, and the findings make it clear: DevX is everything when it comes to making AppSec work.

📊 Key takeaways:

🔹 Security isn’t a cultural priority for most dev teams.

🔹 Complexity, lack of training, and time constraints are the biggest blockers.

🔹 Automated security testing is preferred, but noisy results and poor integrations slow teams down.

🔹 AI tools like Copilot rank among the least trusted methods for securing code.

I’ve had the chance to sit down with the Jit team before, and I was really impressed with their approach—focusing on developer-first security by making security seamless, integrated, and actually usable in real workflows. Their extensibility, prioritization engine, and gamified approach to security buy-in stood out.

This survey reinforces why shifting security left isn’t just about tools—it’s about enabling developers without disrupting them.

2024 Trends in Vulnerability Exploitation

It is always helpful to reflect on the previous year's vulnerability management and examine trends related to exploitation, incidents, overall vulnerability growth, and more. This piece from my friend Patrick Garrity at VulnCheck does exactly that, and it is full of great insights.

Some of the key takeaways are below:

These are some very concerning trends, including double-digit growth in exploitation before the CVEs are publicly disclosed, let alone have a patch available.

This puts organizations in an incredibly challenging position, given they can’t possibly remediate vulnerabilities before they are publicly known or without an accompanying patch. This means that organizations' best hope is to implement compensating controls, and even that is after they find out about the vulnerability, which, as shown above, could be after it is already being actively exploited.

The final bullet also highlights the disparate and complicated vulnerability ecosystem when it comes to vulnerability disclosures and reporting. While it is great to see so much involvement from security companies, government agencies, and non-profits, it is also impractical for the average organization to actively monitor all of these sources to stay up-to-date on the latest vulnerability disclosures.

Patrick also highlighted that just 1% of CVEs published in 2024 were exploited, once again emphasizing that organizations need to align their vulnerability prioritization efforts accordingly to avoid wasting time on a massive number of vulnerabilities that are never exploited, let alone exploitable.

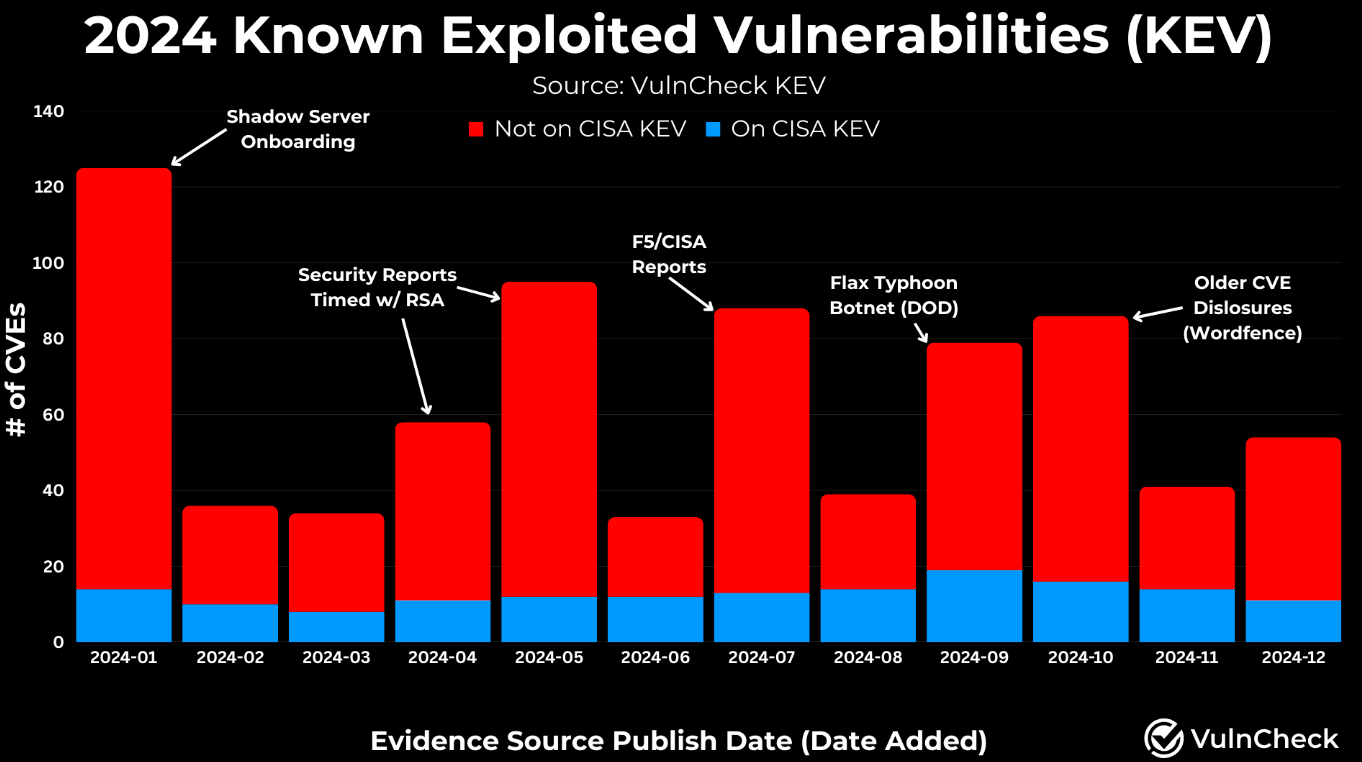
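To make the prioritization point above concrete, here is a minimal sketch, not a definitive implementation. It assumes you already have a list of findings and one or more feeds of known-exploited CVE IDs. The `Finding` class, the `prioritize` function, the feed names, and the placeholder CVE IDs and scores are all hypothetical and purely illustrative; none of them come from the VulnCheck report.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    cve_id: str
    cvss: float  # base severity score, 0.0-10.0


def prioritize(findings, exploited_sources):
    """Order findings so known-exploited CVEs come first, then by CVSS."""
    # Union every feed: relying on a single catalog can miss exploited CVEs.
    exploited = set().union(*exploited_sources.values())
    return sorted(
        findings,
        # False sorts before True, so exploited CVEs lead the list;
        # within each group, higher CVSS comes first.
        key=lambda f: (f.cve_id not in exploited, -f.cvss),
    )


# Placeholder IDs and scores, purely illustrative (not real data).
findings = [
    Finding("CVE-0000-0001", 9.8),
    Finding("CVE-0000-0002", 6.5),
    Finding("CVE-0000-0003", 7.2),
]
exploited_sources = {
    "cisa_kev": {"CVE-0000-0002"},
    "other_feed": {"CVE-0000-0003"},
}

ordered = [f.cve_id for f in prioritize(findings, exploited_sources)]
print(ordered)
```

In this toy data, the critical-severity finding with no exploitation evidence lands last, behind the two lower-scored findings that appear in an exploited feed. A real program would likely layer in asset criticality, exposure, and compensating controls as well.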

He goes on to take a look at 2024 Known Exploited Vulnerabilities (KEV). While many automatically associate KEV with the CISA KEV, the reality is that the CISA KEV only captures a small fraction of the overall number of known exploited vulnerabilities in the wild. This is problematic, as many use the CISA KEV as their authoritative source for prioritizing known exploited vulnerabilities. As you can see from the image below, you would be missing a TON of known exploited vulnerabilities if you solely rely on the CISA KEV.

While knowing what is exploited is key, it is also helpful to understand how quickly vulnerabilities are being exploited. Patrick provides just that in the below diagram. Most alarming in the chart is the fact that nearly 1/4 of known exploited vulnerabilities were exploited on or before the day their CVEs were publicly disclosed.

What is wild is this is actually a decrease from 2023’s 27%. Nonetheless, roughly a quarter of known exploited vulnerabilities are exploited on or before the day they are publicly disclosed. In those cases, organizations may not even know the vulnerabilities exist, let alone have done anything to mitigate their risks.

Unpacking my comments above about the disparate ecosystem for vulnerability disclosure, Patrick cites 112 unique sources that provided initial evidence of exploitation.

This includes security companies, non-profits, product companies, government agencies, and social media/blogs. The idea that organizations and vulnerability management programs can track all of these sources is unrealistic. We also know there is a lag time between vulnerability disclosure and CVE publication, leaving organizations blind to the risks in the interim.

Overall, this report from Patrick and VulnCheck provides some excellent insights into the vulnerability ecosystem, which remains as problematic as ever. I suspect the situation will only worsen in 2025 with more code, vulnerabilities, exploitation, and incidents.

Securing AI Supply Chain: Like Software, Only Not

While much of the focus around AI security has been on topics such as prompt injection, data leakage, and vulnerabilities, supply chain risks are still very relevant to AI, too.

This recent publication and blog from Google Cloud examine this less-discussed topic, including how AI supply chain risks are real today. It shows examples of compromised models in 2023 and 2024.

It covers:

Development lifecycles for traditional and AI software

AI supply chain risks

Controls for AI supply chain security

Guidance for practitioners

This great publication dives deep into key considerations around AI supply chain security.

Doing More in AppSec by Doing Less

We all know the feeling of trying to do “all” the things in AppSec. But how much truly needs to be done, and are we seeing diminishing returns by trying to do too much rather than focusing on what actually matters?

This is a great talk from CISO and industry leader John Heasman from BSides Knoxville. He discusses how often we end up doing a lot of non-value-added activities and adding burden to our Development and Engineering peers. Perhaps it is time for a different approach.