Resilient Cyber Newsletter #24

Worst Telecom Hack in U.S. History, CISA Red Team Report, State of GenAI in the Enterprise, AI Red Teaming, and Getting Real about AI Governance

Welcome!

Welcome to another issue of the Resilient Cyber Newsletter, this time the Thanksgiving Week edition.

I’m feeling incredibly thankful this year, for the health and happiness of my family, and our fifth little one, who just turned 3 weeks old this week.

I hope everyone has a great holiday season with their friends and family and takes some time to recharge and focus on those they love.

That said, let’s dive into all the great resources this week!

Interested in sponsoring an issue of Resilient Cyber?

This includes reaching over 7,000 subscribers, ranging from Developers, Engineers, and Architects to CISOs/Security Leaders and Business Executives.

Reach out below!

Cybersecurity Leadership & Market Dynamics

The Bipartisan Nature of Cybersecurity Lends itself to Continuity

At least that is the perspective of the Cyber leaders cited in this CSO Online article. Many have anxiety regarding potential changes with the forthcoming administration and their implications for various Federal agencies, efforts, and more.

That said, several leaders (including James Dempsey, whom I interviewed last week) suspect we will largely see continuity between President Biden’s and President Trump’s approaches to cybersecurity, especially in relation to national security.

What CISOs Need to Know about SEC Breach Disclosure Rules

The focus on CISO liability and involvement in regulatory oversight and reporting continues to increase. One of the most notable examples, of course, is the SEC breach disclosure rules. These cover incidents categorized as an unauthorized occurrence, or a series of unauthorized occurrences, on or conducted through a registrant’s IT systems that jeopardizes the confidentiality, integrity, or availability of those systems and/or the data they contain.

This is a good high-level article from CSO Online discussing some of the key considerations, including understanding “materiality”, which is a key consideration for incidents that require reporting.

Simple Sabotage Field Manual

"Toil kills purpose faster than mission can replace it"

Such a stellar piece from Alexis Bonnell on bureaucratic sabotage and the impact it has on organizational outcomes. Alexis discusses a World War II "Simple Sabotage Field Manual" from the predecessor organization of the CIA. So much of what she discusses feels applicable to the current state of Cybersecurity and Compliance in most large, highly regulated industries and enterprises:

Tactic 1: The Tyranny of Bureaucracy, emphasizing rigid protocols and procedures, approvals, forms, and signatures, all of which impede timely decision-making or workflows around access, software usage, and deployments.

The epitome of "security theater" personified, grinding organizational change and progress to a screeching halt.

Tactic 2: Committees and the Endless Cycle of Deliberation, leading to no decisive action, endless debating, and an ever-elusive consensus 🔁

We all know this in the form of Change/Configuration Control Boards, Committees, and more, often consisting of people without actual competence or expertise in the things they're governing. From their ivory-tower organizational perch, they act as the arbiters of risk and ensure the status quo never changes, despite having little to no context for the decisions they're expected to make.

Tactic 3: Haggle Over Words: The Paralysis of Precision

Tactic 6: Apply Regulations Rigidly: The Inflexibility of Compliance

"But the control says x!" 🗞️ Getting wrapped around the axle on security framework terminology, phrasing, and minutiae, which often have no actual impact on real risks.

There's much, much more in this piece, including countermeasures to limit the impact of self-sabotage, and it is well worth the read.

We need more digital leaders like Alexis in the public and private sectors, especially in cyber. Our career field suffers from an inherent risk-averse mindset that creates more risk than it mitigates and ultimately impedes organizational outcomes.

There's a reason Harvard studies have quoted Developers calling Security a "soul-withering chore".

We've identified the enemy - and it's us 🤕

Examining the Worst Telecom Attack in U.S. History

In what is now being called the “worst” telecom hack in U.S. history, an incident involving multiple U.S. telecommunications companies, including T-Mobile, is continuing to make waves. U.S. Senate Intelligence Committee Chairman Mark Warner has called it the worst in U.S. history “by far”.

A group dubbed “Salt Typhoon” hacked into U.S. telecom providers and intercepted surveillance data intended for law enforcement, as well as sensitive data of high-profile individuals including those in the U.S. government and politics, at a time when politics is already tense.

Claims include not just access to call records but also the ability to listen to phone conversations and read text messages. The group responsible uses custom malware, as well as known vulnerabilities and misconfigurations in publicly exposed assets, to compromise services such as Microsoft Exchange.

Key government officials have now begun calling for an investigation by the Cyber Safety Review Board (CSRB) into the incident, its origins, and its impact, as well as how the U.S. can bolster critical infrastructure, including telecommunications.

Insights from CISA’s Red Team Assessment of a U.S. Critical Infrastructure Sector Organization

In a timely coincidence with the above-mentioned incident, CISA published a document capturing insights from recent Red Team assessments it has conducted of U.S. Critical Infrastructure Organizations.

CISA routinely conducts these, often at the request of the organization being assessed, to help identify deficiencies and provide recommendations to bolster security.

It should come as no surprise that there were findings, and several of the findings are similar to what the telecoms in the above incident experienced. This particular case involved vulnerabilities, lateral movement, gaps in detection and response, and an over-reliance on EDR that didn’t properly identify the malicious activity. The organization also lacked sufficient network-layer protections to mitigate lateral movement.

The report overall is a great read to understand how critical infrastructure organizations can be (and are being) exploited and how they can mitigate their risk.

RSA’s Innovation Sandbox - Startups Must Accept $5 Million Investment

News broke last week that participants in the RSA Innovation Sandbox will now be required to accept a $5 Million investment. RSA Conference is, of course, owned by Crosspoint Capital Partners, which is playing a part. It was stated:

“As part of being a Top 10 Finalist, Applicants are required to accept the $5M RSAC ISB SAFE”

Each year 10 early-stage startups are chosen to appear at the RSA Conference Innovation Sandbox. These companies are often some of the best and most promising the industry can offer. It is in its 20th year, and the finalists have seen over 75 acquisitions and raised more than $16.4 billion in investments.

For a really detailed breakdown of the RSA Innovation Sandbox and the performance of the companies that participate, I recommend this piece “Signal vs. Noise in the RSA Innovation Sandbox” by Mike Privette at Return on Security.

There have been a lot of takes on the announcement, including a well-thought-out one from Sid Trivedi, Partner at Foundation Capital. As Sid mentioned, it will be interesting to see how founders and their existing investors perceive the investment. He did note that the high-level terms are very founder-friendly, structured as a YC SAFE with no valuation cap and a sliding scale discount (5-25%) based on a time interval.

There are a lot of great comments in Sid’s post, with some saying it sounds like a pay-to-play scenario, forcing founders to allow Crosspoint to invest in their startups. It could, of course, go both ways. Some may need or want the $5M investment and/or the exposure that the Innovation Sandbox brings, while others may be reluctant to add Crosspoint as an investor.

One thing is for sure: it benefits Crosspoint, letting them leverage their ownership of the RSA Conference to ensure they get investments in some of the most promising startups in the industry.

AI

State of GenAI in the Enterprise

Venture Capital (VC) firm Menlo Ventures recently published an incredible report that is packed full of great data and insights on the state and future of GenAI adoption in the enterprise.

It shows that organizations are moving from pilots to production, with AI spending surging from $2.3 billion in 2023 to $13.8 billion in 2024 (a 6x increase).

There are also several key insights that I think have cybersecurity implications, which I discuss below:

Code Generation is dominating AI adoption use cases. This is being driven by the push for Developers and organizations to be more productive, enable rapid feature and product releases, and outpace competitors.

This is great, but more code inevitably means potentially more bugs, especially based on studies we've seen showing the typical hygiene and posture of Co-Pilot and AI-driven code generation and the inherent trust Developers give the outputs, often without validation.

This will drive the need for more effective security testing, automation, and higher fidelity training data for AI Co-Pilot development tooling.

Agents and Agentic AI are proliferating, as organizations look to capitalize on the Services-as-a-Software era, looking to capture the multi-trillion dollar services market.

In-house development of AI solutions rose to 47%, from just 20% in 2023, as organizations build more comfort and competence in AI.

This must be accompanied by AI governance, security, secure architectural considerations, and AI Security Engineering.

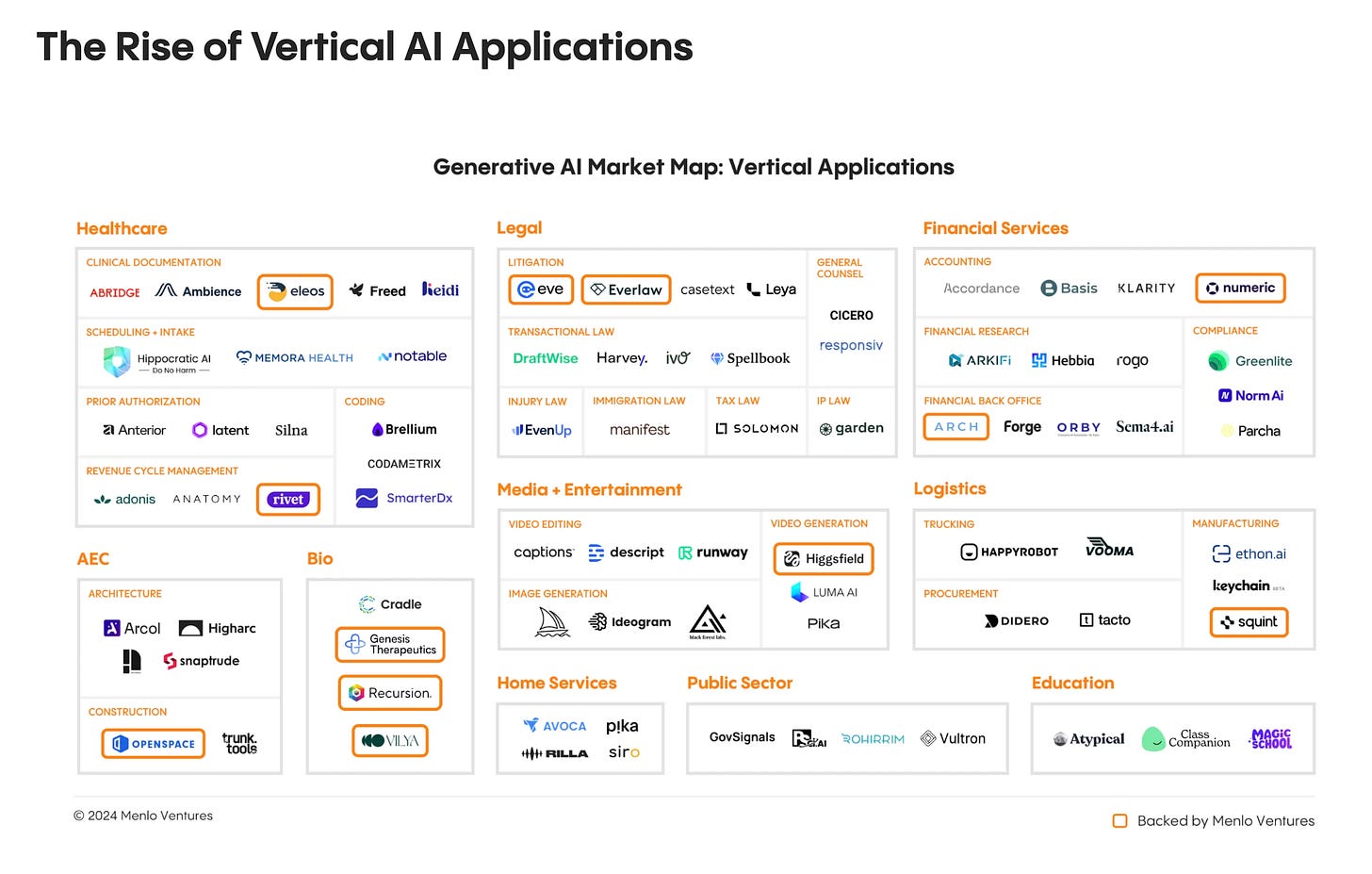

Vertical AI applications are growing rapidly, including in traditional industries that are laggards and late adopters, such as Healthcare, Legal, and the Public Sector.

Key considerations here are data sensitivity and the exposure of PHI, PII, CUI, and other regulated data types.

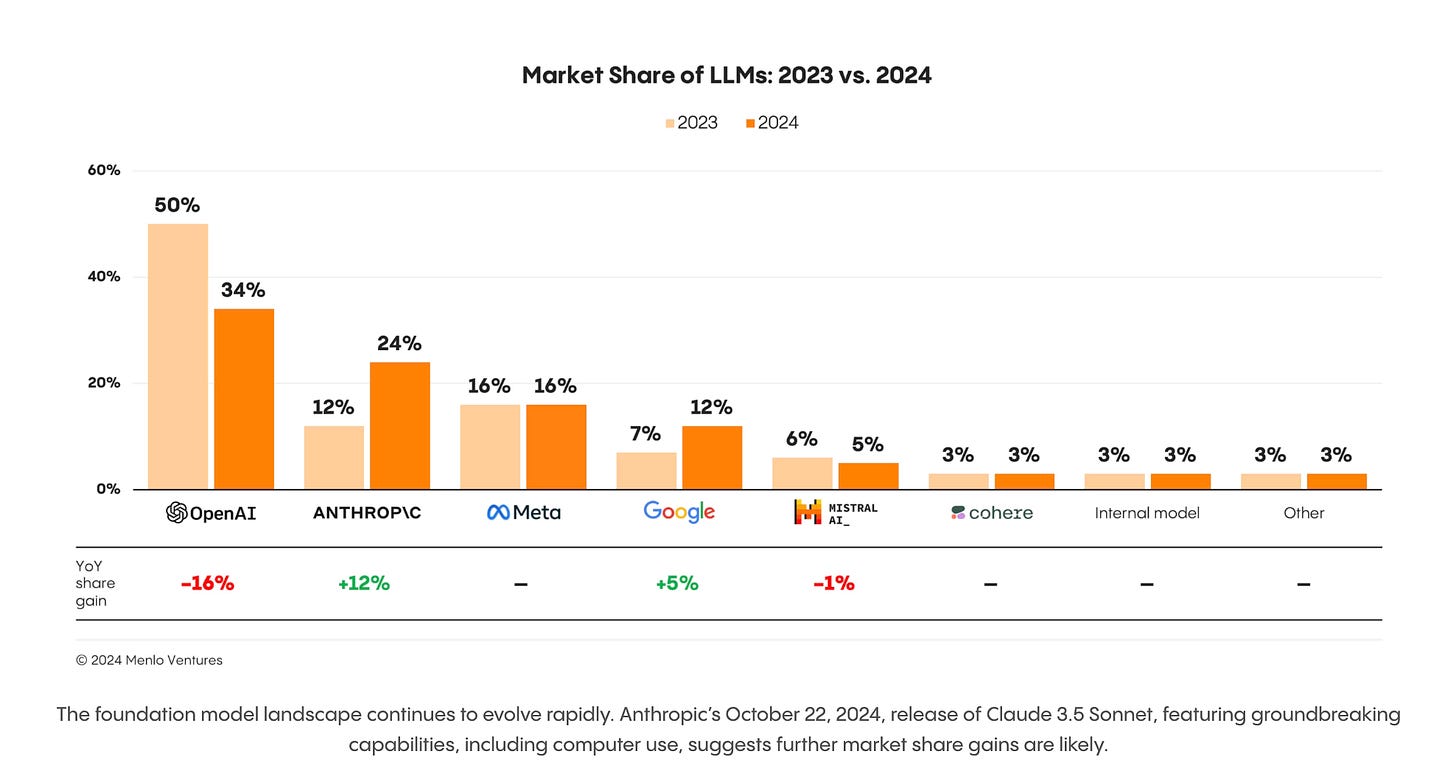

Incumbents like OpenAI are losing ground to others such as Anthropic.

Despite the excitement around open-source models in AI, commercial vendors and models still dominate the landscape with an 81% market share.

This means organizations need competence and context for how the various models function, service provider considerations, supply chain, etc.

This space continues to evolve quickly, and it's key that security practitioners and security product vendors alike are watching these trends and preparing accordingly.

Applying LLMs and GenAI to Cyber

Keeping up with the volume of LLM and GenAI resources, guidance, and tools coming out can be incredibly daunting.

That’s why this resource from Dylan Williams is awesome and worth bookmarking.

It includes:

The LLM Bible (Must Read Resources)

Free Training and Courses

Daily LLM Toolkit

Intro to LLMs and Building with LLMs Video Collection

LLM Blogs and Deep Dives that are Cyber focused

An excellent resource to keep on hand!

Implementing AI Governance w/ Walter Haydock

In this episode, we sit down with StackAware Founder and AI Governance Expert Walter Haydock. Walter specializes in helping companies navigate AI governance and security certifications, frameworks, and risks. We will dive into key frameworks, risks, lessons learned from working directly with organizations on AI Governance, and more.

We discussed Walter’s pivot with his company StackAware from AppSec and Supply Chain to a focus on AI Governance and from a product-based approach to a services-oriented offering and what that entails.

Walter has been actively helping organizations with AI Governance, including helping them meet emerging and newly formed standards such as ISO 42001. Walter provides field notes, lessons learned and some of the most commonly encountered pain points organizations have around AI Governance.

Organizations have a ton of AI Governance and Security resources to rally around, from OWASP, Cloud Security Alliance, NIST, and more. Walter discusses how he recommends organizations get started and where.

The U.S. and EU have taken drastically different approaches to AI and Cybersecurity, from the EU AI Act, U.S. Cyber EO, Product Liability, and more. We discuss some of the pros and cons of each and why the U.S.’s more relaxed approach may contribute to economic growth, while the EU’s approach to being a regulatory superpower may impede their economic growth.

Walter lays out key credentials practitioners can explore to demonstrate expertise in AI security, including the IAPP AI Governance credential, which he recently took himself.

You can find out more about Walter Haydock by following him on LinkedIn, where he shares a lot of great AI Governance and Security insights, as well as on his company website, www.stackaware.com

Getting Real about AI Governance

Speaking of AI Governance, the Cloud Security Alliance (CSA) recently published a concise and good read on the topic titled “Don’t Panic, Getting Real about AI Governance”.

It takes a look at:

The importance of explaining AI and accountability

Unique AI Challenges

Key Factors in AI Adoption

Relevant frameworks such as the NIST AI Risk Management Framework (AI-RMF)

Breaking Down the AI Hype Cycle, and Unlocking AI’s Untapped Potential

There are a lot of takes on the state of hype around AI and its potential. That said, Databricks is uniquely positioned as one of the companies valued in the tens of billions and seeing tremendous growth as part of this wave, and as a company that has been at it since before it got “hot”.

I found this discussion with Databricks CEO Ali Ghodsi really insightful on the topic of Super AGI, how organizations can and will unlock AI’s untapped potential, the aspects of AI adoption Databricks sees across its large customer base, and more.

AI Red Teaming in 2024 and Beyond

As it turns out, I have listened to several insightful conversations around AI and Cyber this week. Among those is an episode of the AI Cybersecurity Podcast by Caleb Sima and Ashish Rajan (if you aren’t subscribed to the show, you should be).

In this particular episode, they have Daniel Miessler of Unsupervised Learning (another strong resource I subscribe to) and Joseph Thacker, a Principal AI Engineer from AppOmni, on the show to discuss AI Red Teaming.

They bring a lot of great insights and perspectives on AI Red Teaming, where it stands now, and where it is headed. AI Red Teaming is a hot topic that has gotten a lot of attention, including emphasis in the U.S. AI Executive Order (AI EO).

AI Governance - What Boards and Leaders Need to Know

I’ve been really enjoying the perspectives shared by Vijaya Kaza on LinkedIn lately. Her latest article discussing AI Governance and what boards and leaders need to know is another great one.

She lays out five pillars in an acronym she dubs “FIPSS”:

Fairness

Interpretability

Privacy

Safety

Security

She goes into detail on each of the pillars and key considerations. She also discusses regulatory compliance and risk management, with examples such as the EU AI Act and state-specific AI regulations. Lastly, she discusses governance from the perspective of business alignment as well as the actual technical implementation of AI.

AppSec, Vulnerability Management and Software Supply Chain

The Future Application Security Engineer

There’s no doubt that the field of AppSec is changing. From historical trends of Cloud, DevSecOps, and Shift Left to the current evolution of GenAI and LLMs, the career field is a dynamic one.

This is a great piece from Jeevan Singh, one of the sharpest AppSec folks I know. He discusses the future of AppSec Engineers and how current AppSec engineers need a strong foundation in areas such as vulnerability management, IAM, secure code reviews, and tooling.

In the future, the four key skills he sees involve:

AppSec

Software Development

Influence Skills

Program Management

While the first two should come as no surprise, the latter two speak to the need for social skills: being able to coordinate, collaborate, and draw support from peers, as well as run a functional AppSec program, as AppSec becomes more and more integral to modern businesses and the way they deliver value to customers.

I had a chance to interview Jeevan a while back on “Scaling AppSec Programs” and the overall field of AppSec, which can be found below:

NIST NVD Woes Continue

The NIST NVD continues to struggle, this time with challenges impacting the NVD API, resulting in the below message. This, of course, builds on a slew of issues with the NVD throughout 2024, including an outright halting of vulnerability enrichment and the subsequent growth of a backlog of unanalyzed CVEs.