Resilient Cyber #63

DoD Cyber Overhaul, Salesloft Incident Spirals, AI Security M&A Spree Continues, AI-driven Exploitation & SOC Visibility Triad is Now a Quad

Welcome!

Welcome to issue #63 of the Resilient Cyber Newsletter.

I’ve got a lot of great resources and discussions to share this week, from the continued M&A spree around AI Security to AI’s impact on the workforce, including software engineering, updates to resources such as the SOC Visibility Quad, and more.

As always, I hope you find these helpful, and please be sure to subscribe and share with a friend!

Interested in sponsoring an issue of Resilient Cyber?

This includes reaching over 45,000 subscribers, including Developers, Engineers, Architects, CISOs/Security Leaders, and Business Executives.

Reach out below!

Doxxing and social engineering start with exposed PII

From executive impersonation to targeted phishing, most social engineering attacks start with publicly available personal data. And data brokers are making that data accessible to anyone, threat actors included.

DeleteMe helps organizations reduce their attack surface by removing employee PII from hundreds of high-risk data sources. Security teams use it to protect executives, high-risk staff, and entire workforces from identity-based threats.

We also offer individual plans, so you can take control of your own privacy. Use code resilientcyber for 20% off personal coverage.

Cyber Leadership & Market Dynamics

DoD CISO Hints at Major Changes

The DoD CISO recently took to LinkedIn to hint at some major changes coming out of the DoD CIO office, focused on the Risk Management Framework (RMF) and the way the DoD authorizes software and systems for production environments.

Above is the visualization from an internal DoD CIO office ideation session she shared, showing a strong focus on modernizing the RMF process, moving away from static snapshot-in-time assessments, paper-based artifacts, and legacy risk management methodologies.

While it remains to be seen what the future of the DoD RMF and broader risk management practices will look like, the fact that these conversations are happening at the highest levels of leadership and being shared publicly is refreshing and promising to those of us who have spent decades dealing with legacy methodologies that fail to reflect actual risk management.

For a deeper dive into my thoughts on this topic, you can see my article:

Salesloft Incident Continues to Spiral, Impacting Zscaler and Palo Alto Networks Among Others

The Salesloft incident originally tied to Salesforce now continues to spiral, with leading security firms Zscaler and Palo Alto Networks announcing they have been impacted as well.

Salesloft Drift is a SaaS product, and the incident involved stolen OAuth tokens and data exfiltration. Google’s Mandiant advised all Salesloft Drift customers to treat any tokens associated with the product as potentially compromised. Many have potentially been impacted, and several, such as Zscaler and PANW, have stated that the compromised credentials allowed limited access to some Salesforce/Customer data.

That said, the incident has been a trending topic in the cyber community, with many pointing out challenges around SaaS, supply chains, OAuth, and more. For example:

Okta published “The Salesloft Incident: A wake-up call for SaaS security and IPSIE adoption”.

SaaS Security firm AppOmni published “Salesloft Drift-Salesforce Breach: Why Salesforce OAuth Integrations are a Growing Risk”.

Elad Erez even hinted that the incident may have exposed cybersecurity sales pipelines, since the exfiltrated data involved “Opportunity Records” (see below):

Unsurprisingly, Brian Krebs has some of the most detailed coverage on the incident. He published a comprehensive blog titled “The Ongoing Fallout from a Breach at AI Chatbot Maker Salesloft,” where he breaks down the incident and gives insights into communications from the attackers involved.

AI Adoption Linked to 13% Decline in Jobs for U.S. Workers, Stanford Study Reveals

We’ve heard a ton about AI's potential impact on the workforce. While some write it off as corporate greed and organizations using AI as a scapegoat to cut costs (and workers), which is undoubtedly true in some cases, it does appear there is merit to the impact that AI is having, and will have, on the workforce, too.

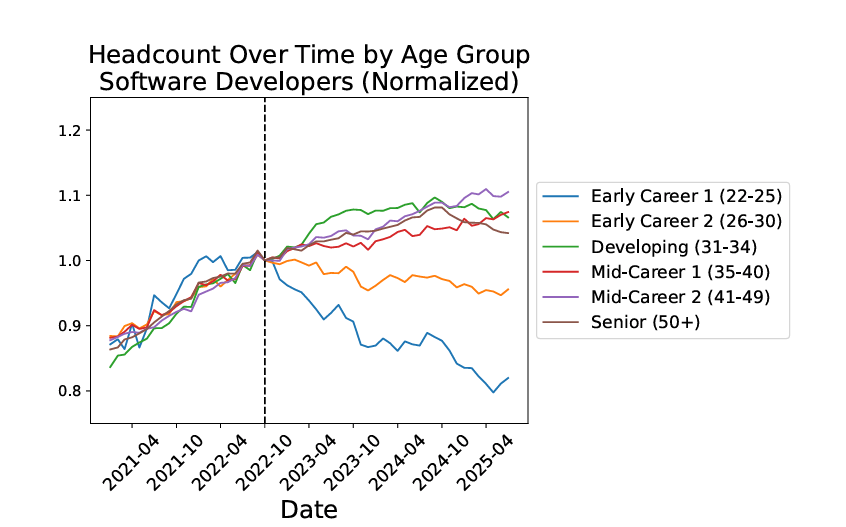

A recent Stanford study found that workers between the ages of 22 and 25 have experienced a 13% relative decline in employment since 2022, most notably in occupations exposed to AI.

These include career fields such as customer service, accounting, and software development, the last of which should not surprise anyone watching the impact of GenAI and LLMs on software development.

The report notes that overall employment remains robust. Still, the effects are notable for early-career individuals in the age range noted, along with those in AI-exposed career fields. It also states that younger, more junior workers are affected most because AI’s most significant effect is hitting areas that rely on “codified knowledge or book learning”, and it is less impactful in areas that involve experience and expertise gained over time, which carry more nuance.

This does raise some interesting questions about the future of tech and software: who will replace the seniors if the juniors aren’t being hired at the same rate? As folks age out and/or retire, someone with that wisdom and first-hand experience must take their place.

For an incredible deep dive on the broader state of software engineering, including the role of AI, I would strongly recommend this recent piece from Gergely Orosz titled “State of the Software Engineering Job Market in 2025: A deepdive into today’s tech jobs market, with exclusive data on tech jobs, AI, engineering, Big Tech recruitment, and the growing importance of location and more”.

Varonis Acquires SlashNext for $150 Million in AI Email Security Push

Varonis recently announced that they are acquiring SlashNext, which provides AI-enabled email security solutions focused on stopping phishing attacks. Varonis itself is valued at $6 billion.

Many are eyeing the email security market as one poised for disruption. New startups are emerging, building on the previous wave of leaders such as Sublime, Material, and others. The email security market is projected to grow from $5.2 billion in 2025 to $10.7 billion by 2032.

CrowdStrike to Acquire Onum to Transform How Data Powers the Agentic SOC

In other acquisition news, CrowdStrike announced last week that they were acquiring Onum, a vendor focusing on real-time telemetry pipeline management, which will help extend the CrowdStrike Falcon platform. In their announcement, CrowdStrike touted Onum’s ability to filter, enrich, and optimize data as it streams, making it actionable quickly, versus needing to be stored and then analyzed to make it actionable, as has historically been the case.
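To make the "filter and enrich as it streams" idea concrete, here is a minimal sketch of an in-flight telemetry pipeline built from Python generators. It is not Onum's or CrowdStrike's implementation; the event fields, the `ASSET_OWNERS` lookup, and the function names are all hypothetical and chosen only for illustration.

```python
from typing import Dict, Iterable, Iterator

Event = Dict[str, str]

# Hypothetical asset inventory used for enrichment (an assumption, not a vendor API).
ASSET_OWNERS = {"10.0.0.5": "payments-team", "10.0.0.9": "hr-team"}


def filter_noise(events: Iterable[Event]) -> Iterator[Event]:
    """Drop low-value events before they ever reach storage."""
    for event in events:
        if event.get("severity") != "debug":
            yield event


def enrich(events: Iterable[Event]) -> Iterator[Event]:
    """Attach owner context while the event is still in flight."""
    for event in events:
        owner = ASSET_OWNERS.get(event.get("src_ip", ""), "unknown")
        yield {**event, "owner": owner}


def pipeline(events: Iterable[Event]) -> Iterator[Event]:
    """Compose the stages lazily, so each event is handled one at a time."""
    return enrich(filter_noise(events))


sample_events = [
    {"src_ip": "10.0.0.5", "severity": "high", "msg": "suspicious login"},
    {"src_ip": "10.0.0.9", "severity": "debug", "msg": "heartbeat"},
    {"src_ip": "10.0.0.7", "severity": "low", "msg": "port scan"},
]

processed = list(pipeline(sample_events))
for event in processed:
    print(event)
```

Because each stage is a generator, events are filtered and enriched one by one as they arrive, rather than being stored first and analyzed afterwards.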

The announcement also indicates CrowdStrike’s direction, focusing on the SOC in the era of Agentic AI and touting potential benefits such as faster customer onboarding to Falcon Next-Gen SIEM, improved SOC efficiency, and agentic security operations. CrowdStrike also doubled down, citing its intent to build the “platform of the future,” echoing similar efforts from leaders such as Palo Alto, who are making a big platform push.

The AI Security M&A Spree Continues - Aim Security is joining Cato Networks

Following up on significant acquisitions in the AI security space previously, such as Palo Alto’s acquisition of Protect AI and SentinelOne’s acquisition of Prompt Security, AI Security company Aim Security announced that Cato Networks has acquired it.

Speaking of the AI Security M&A spree, CTech recently had a great piece titled “From Prompt to Aim: AI and the Cyberstartups Caught in an Acquisition Frenzy”.

Forbes Cloud 100 List Is Live

The Forbes Cloud 100 for 2025 was recently published, and as you can guess, it is dominated by AI, much like every other headline and discussion in the software and technology ecosystem.

It also features some cyber companies, such as Netskope, Tanium, 1Password, Vanta, Island, Chainguard, and others!

AI

A CISO’s Guide to Vetting AI Security Vendors

By now, it is clear that we're in a supercycle being driven by AI (some are even saying an AI bubble). This means sales teams are using AI phrases and buzzwords in full effect. Agentic, autonomous, agents, automated - all-out AI enshittification.

This makes it incredibly important for CISOs and security leaders to be equipped to navigate vendor interactions and procurement activities and to have the right questions and topics to cover.

Caleb Sima and Edward Wu wrote a great "CISO's Guide to Vetting AI Security Vendors." It involves a three-pillar framework focused on: The Problem, The Proof, and The Practicality. This includes asking fundamental questions such as what pain the product is solving, whether AI is even necessary, whether it actually works, what the true cost of ownership is, regulatory alignment, and more.

This is an excellent resource for folks considering purchasing products that claim to leverage AI to improve security outcomes, or cyber products more broadly.

Colorado AI Act Delayed (Slightly)

News recently broke that the Colorado AI Act, one of the more rigorous state-level AI regulations in the U.S., is being pushed back slightly, with a five-month delay. This is being done to “placate” private industry and allow more time for compliance and alignment from the private sector with the emerging AI regulation.

This is part of a broader dialogue underway. There was a push for a Federal “moratorium” on AI Regulations, while states such as CA, NY, and CO moved ahead with their respective AI regulatory efforts. Because most software vendors operate nationwide, these state-level regulations essentially become the “high water mark” that vendors must meet, regardless of what federal regulation does or doesn’t require.

Similar trends are underway globally, with regions such as the EU leading the pack with their EU AI Act, forcing vendors to align with it due to the global nature of modern commerce.

Agentic AI: A CISO’s Security Nightmare in the Making?

We know 2025 has been dubbed the year of “Agents” or “Agentic AI.” The industry has continued to advance from the initial surge of GenAI adoption to semi- or fully autonomous use cases and implementations around agentic AI and its supporting protocols, such as the Model Context Protocol (MCP).

But will Agentic AI become a massive headache for CISOs? This piece, with perspectives from various security leaders, argues that it certainly has the potential to pose a lot of trouble for CISOs and security teams, from integrations to tool calls to potential sensitive data exposure.

Multi-Agent Pen Testing AI for the Web

One of the most interesting aspects of the rise of LLMs and Agentic AI is their impact on the economics of cybersecurity. Yesterday, I shared how researchers developed "Auto Exploit" and created exploits for CVEs in as little as 15 minutes and $1.

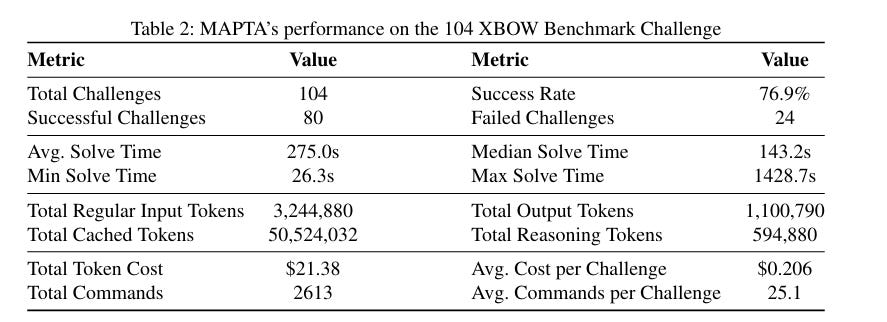

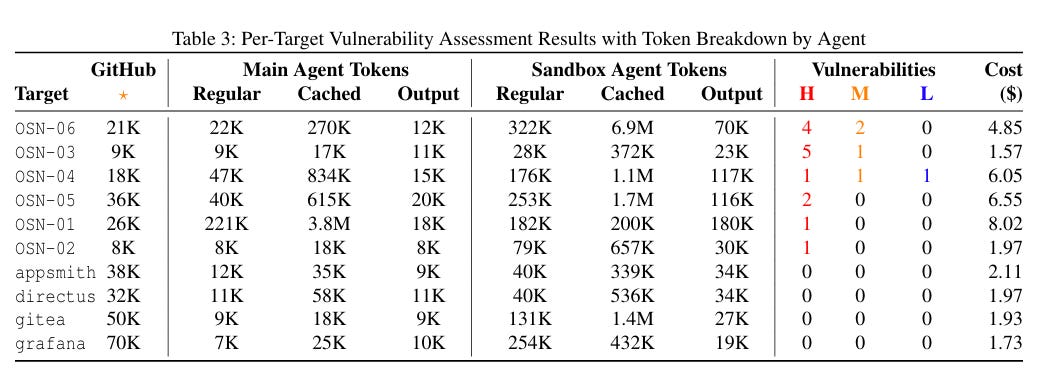

Today, I was checking out this paper, "Multi-Agent Penetration Testing AI for the Web," that Snehal Antani brought to my attention. It has a similar tone. The researchers proposed a multi-agent system for autonomous web app security assessments, using LLMs, agents, and tool calls.

Not only did it perform impressively against a 104-challenge benchmark, but it also saw high success rates against SSRF, misconfigurations, injection attacks, and more. They did all of it at a total cost of $21.38, with a median cost of $0.073 for successful attempts, $0.0357 for failures, and only $0.30 per challenge.

These cost dynamics have many implications. There are potential ramifications for the services industries, as testing can be commoditized and costs driven down. It could make robust testing and assessments more attainable for SMBs, who generally live below the "cybersecurity poverty line" (cc: Wendy Nather).
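As a quick sanity check on these economics, the reported total and benchmark size can be turned into a mean cost per challenge. This is a minimal sketch using only the two figures quoted above; the mean is a different statistic from the medians and the per-challenge figure the researchers report, and the function name is illustrative.

```python
TOTAL_COST_USD = 21.38   # total cost quoted above
CHALLENGE_COUNT = 104    # benchmark size quoted above


def mean_cost_per_challenge(total_usd: float, challenges: int) -> float:
    """Return the simple average cost per challenge."""
    if challenges <= 0:
        raise ValueError("challenges must be a positive integer")
    return total_usd / challenges


mean_cost = mean_cost_per_challenge(TOTAL_COST_USD, CHALLENGE_COUNT)
print(f"Mean cost per challenge: ${mean_cost:.3f}")
```

The average works out to roughly $0.21 per challenge, which underlines how cheap this kind of automated testing has become.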

Of course, it also makes attacks, exploits, and malicious activities far cheaper for adversaries. Shout out to Arthur Gervais on the publication.

AppSec

Proof-of-Concept in 15 Minutes? AI Turbocharges Exploitation

I recently shared a link in a prior newsletter about researchers who could use AI to quickly develop exploits for CVEs, in as little as 15 minutes, as part of a project dubbed “Auto Exploit”. This piece from Dark Reading discusses those implications.

This includes enterprises' inability to keep up with the pace of vulnerabilities and exploitation, a problem that existed even before LLMs arrived to accelerate exploitation activities.

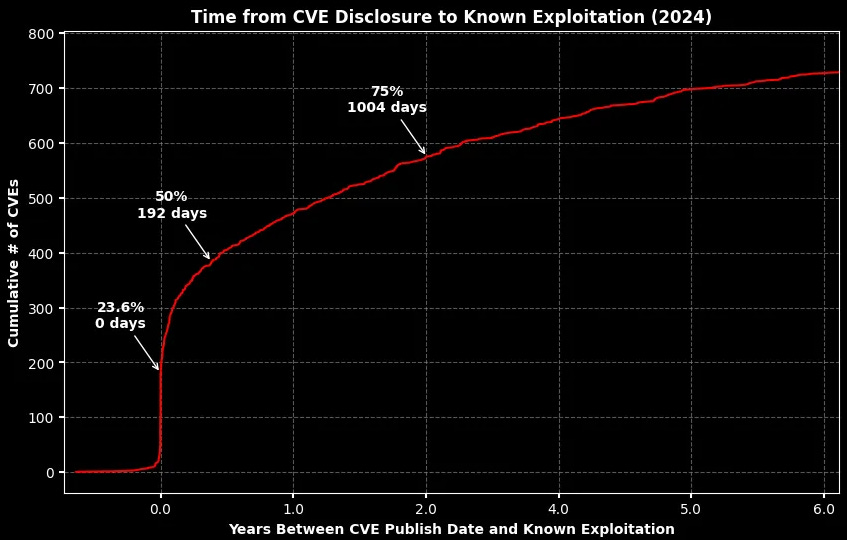

They shared recent data from Patrick Garrity and VulnCheck, showing that the median time to exploit a vulnerability in 2024 was 192 days. In the years to come, it will be interesting to see how far attackers using AI drive this metric down.

The research also speaks to the unfortunate economics of cybersecurity. The researchers demonstrated how it costs as little as $1 to develop exploits, and we know it certainly costs more than that on average to defend against them, as organizations have to do vulnerability prioritization, triage, work with system owners to remediate findings, and much more.

The Compliance Era of Vulnerability Management

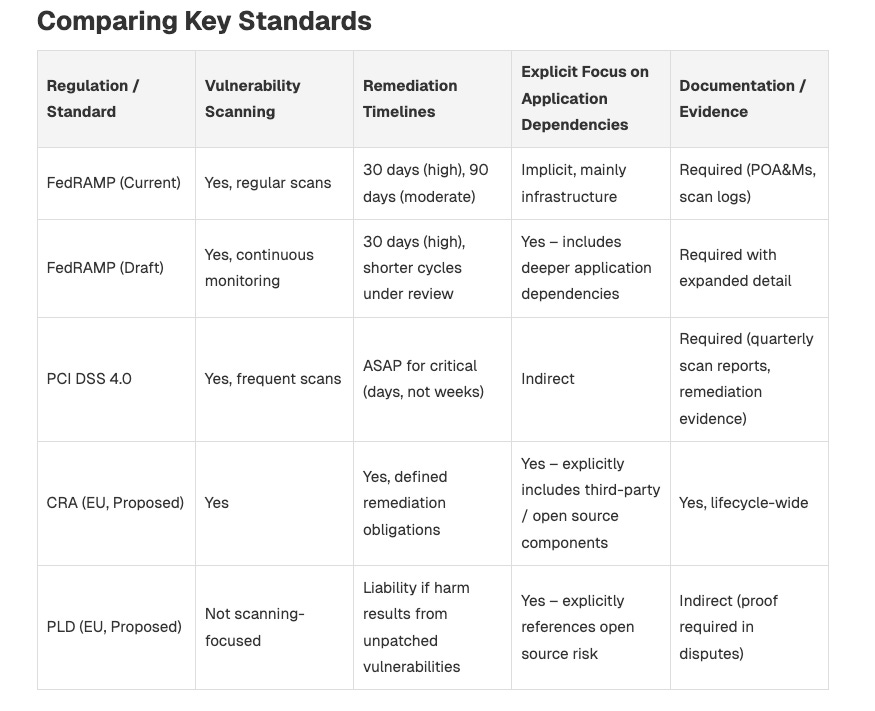

While Vulnerability Management (VulnMgt) has been a longstanding area of cyber, it is seeing increased focus and attention as compliance requirements drive it forward. This is a good piece from Resolved Security discussing frameworks like FedRAMP, PCI DSS, CRA, and others with rigorous remediation timelines and broader VulnMgt requirements, forcing teams to mature in this space.

The article argues that VulnMgt is now at the top of executives' minds due to compliance and regulatory requirements. They are forced to ensure their organizations detect vulnerabilities quickly across their technology portfolios and demonstrate they have remediation capabilities and documentation aligning with their regulatory requirements.

This means organizations must be equipped to speak to their VulnMgt practices when interfacing with regulators and compliance assessors and report internally to their leadership on how they meet these requirements.
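One way to picture what "speaking to your remediation timelines" looks like in practice is a small check that flags findings past their due date. This is a minimal sketch under stated assumptions: the `SLA_DAYS` windows are hypothetical placeholders rather than values taken from FedRAMP, PCI DSS, or the CRA, and the `Finding` structure and identifiers are made up for illustration.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List

# Hypothetical remediation windows in days; real values come from the
# applicable framework or internal policy, not from this sketch.
SLA_DAYS = {"critical": 15, "high": 30, "medium": 90, "low": 180}


@dataclass
class Finding:
    identifier: str   # illustrative ID, not a real CVE
    severity: str
    detected_on: date


def due_date(finding: Finding) -> date:
    """Remediation deadline based on severity and detection date."""
    return finding.detected_on + timedelta(days=SLA_DAYS[finding.severity])


def overdue(findings: List[Finding], today: date) -> List[Finding]:
    """Findings whose remediation deadline has already passed."""
    return [f for f in findings if due_date(f) < today]


findings = [
    Finding("FINDING-1", "critical", date(2025, 1, 1)),
    Finding("FINDING-2", "high", date(2025, 1, 15)),
]

late = overdue(findings, today=date(2025, 2, 1))
for f in late:
    print(f.identifier, "was due", due_date(f).isoformat())
```

A report like this, generated on a schedule, gives both assessors and internal leadership the same evidence of whether remediation commitments are being met.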

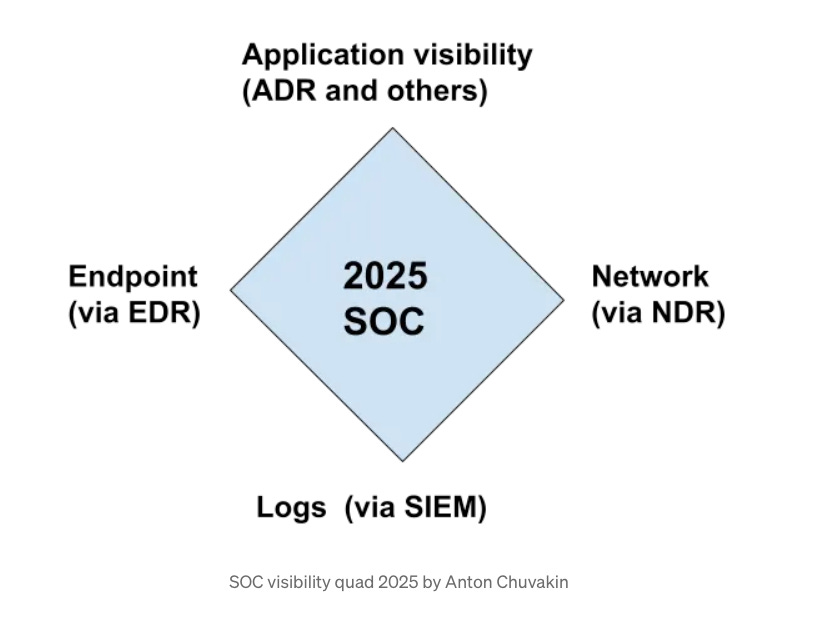

SOC Visibility Triad is Now a Quad - SOC Visibility Quad 2025

Longtime industry analyst and leader Anton Chuvakin recently updated his SOC Visibility Triad, making it a quad. The resource originally cited three key areas for good SOC visibility: Logs (L), Endpoints (E), and Network (N) resources.

Anton makes the case that the original trio of areas is still relevant, but the need for application context warrants extending the triad to a quad. I, of course, agree with Anton, given I’ve spoken about this topic to some extent in various pieces, such as: