A Zero Trust-centric Approach to AI Security

A look at Zscaler's Secure AI launch

By now its no surprise that AI security is top of mind for most CISOs, security leaders and teams. AI has dominated headlines for the last several years, from the initial excitement around GenAI to the cautious optimism around Agentic AI more recently.

We’ve seen a lot of innovation in the ecosystem, both from existing incumbents as well as startups looking to help organizations enable secure AI adoption and transformation.

One of the largest leaders in the ecosystem is now making their Secure AI Launch, looking to help define how organizations securely use and build with AI. While the launch is new, and I’ll be diving into a lot of the details in this blog, Zscaler isn’t starting from scratch and they’re building on top of an unparalleled footprint and unique position within the ecosystem.

Additionally, there were early signs of their Secure AI Launch for those who were paying attention too, such as Zscaler CEO Jay Chaudhry’s talk at a major Cloud Security Alliance (CSA) event towards the end of 2025.

Unpacking Zscaler’s Secure AI Launch

During that talk, Jay walked through how AI was fundamentally changing the way organizations operate and laid out a vision for how Cloud and Network Security needed to evolve along with this major technological paradigm shift.

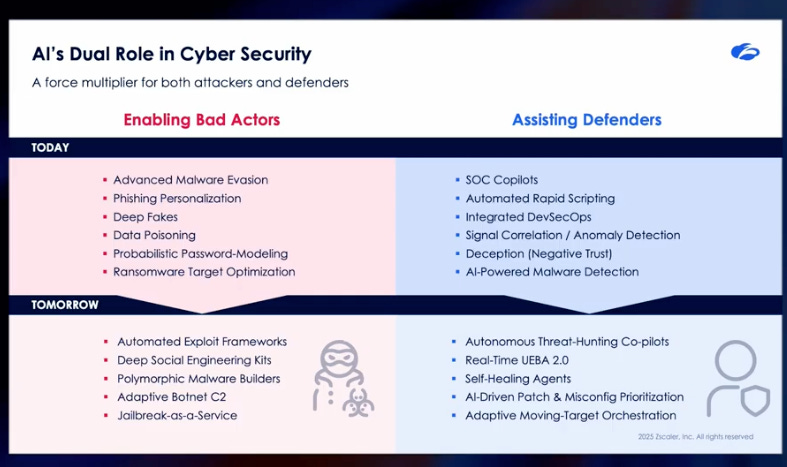

Jay rightly points out that AI has a dual-role in cybersecurity, acting as a force multiplier in cybersecurity for both defenders and attackers alike. This is a point echoed recently by OpenAI’s Sam Altman as well.

Jay made the case that for defenders to have a chance to keep pace with attackers, they have to overcome organizational inertia and adopt modern technologies and platforms that leverage the same technology and bring a holistic picture of their organizations thread landscape.

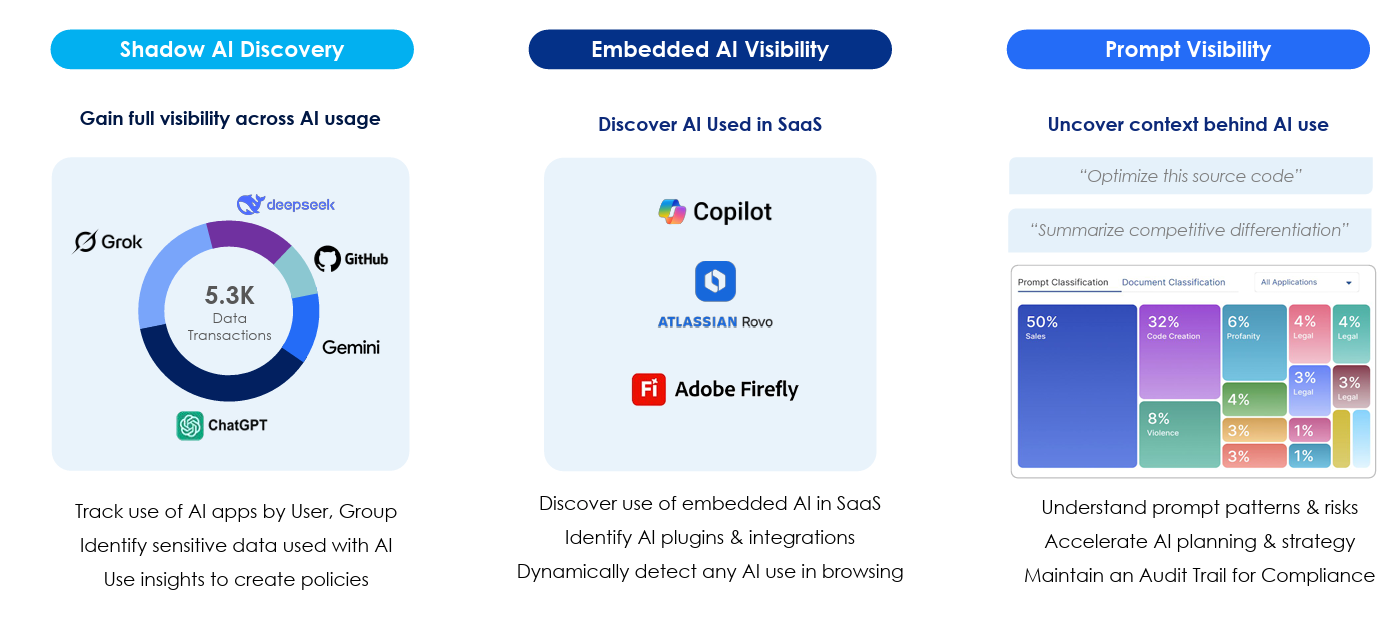

A key part of that involves understanding where your organization is using AI to begin with. There’s a reason we have sayings such as “you can’t secure what you don’t see or know exists” and asset inventory being a critical control in sources such as CIS. Few are better positioned to capture the rampant GenAI shadow usage than Zscaler due to their unique role in network security and their massive footprint worldwide.

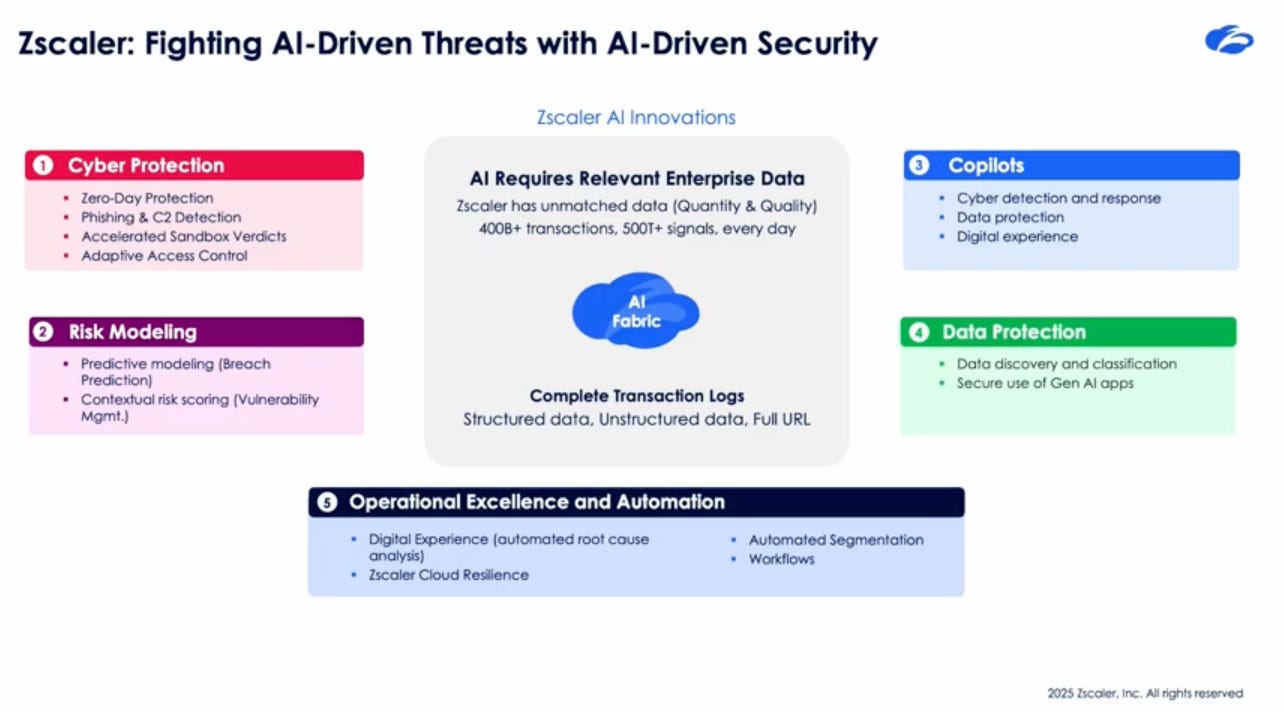

As seen above, Zscaler processes a mind boggling 400 billion transactions and over 500 trillion signals - every single day.

Zscaler is able to do this due to sitting in the traffic path of enterprise users with their longstanding network-centric approach to security.

As modern AI has evolved, with LLMs, Gen AI and Agents, so has enterprise usage and security patterns. This is something Zscaler’s Head of Product Strategy, Dhawal Sharma recently walked through with SiliconANGLE’s theCUBE, where he discussed organizations maturity curve, from an initial risk averse reaction of looking to block usage, to looking to get visibility and governance over usage, expand to cover emerging protocols and even look to get visibility, guardrails and enforcement around prompting and sensitive data risks. Dhawal also covers the reality that AI is being widely embedded across organizations SaaS portfolio as well.

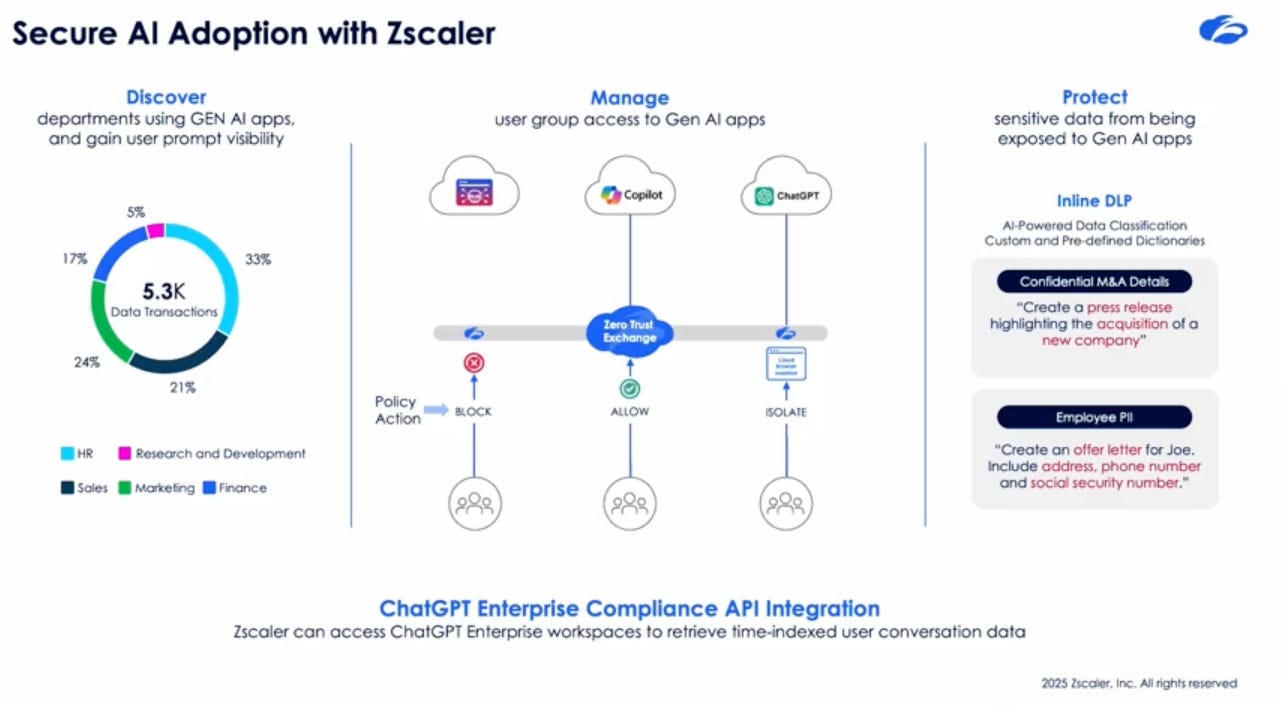

I’ve long talked about topics such as SaaS Governance and Security and laid out three fundamental pillars of Discover, Manage and Secure. I’ve written about these concepts before in articles such as “The Why and How of SaaS Governance”, and helped lead CSA’s publication “SaaS Governance Best Practices for Cloud Customers”. However, the SaaS landscape much like the broader software ecosystem has changed significantly since we published that CSA guidance on SaaS Governance.

Now, nearly every major SaaS provider is embedding AI into their service offerings, amplifying the challenges of organizations looking to govern their AI consumption, and understand where AI is being used, including in their SaaS portfolio. To do so requires not only shadow AI discovery and visibility, included in embedded AI within SaaS, but also capturing context behind prompt patterns and the risks they present to the organization.

Zscaler takes an approach aligned with the major principles I discussed above, using their network path position to help organizations get visibility over GenAI consumption from public cloud service providers, as well as SaaS providers with embedded AI capabilities.

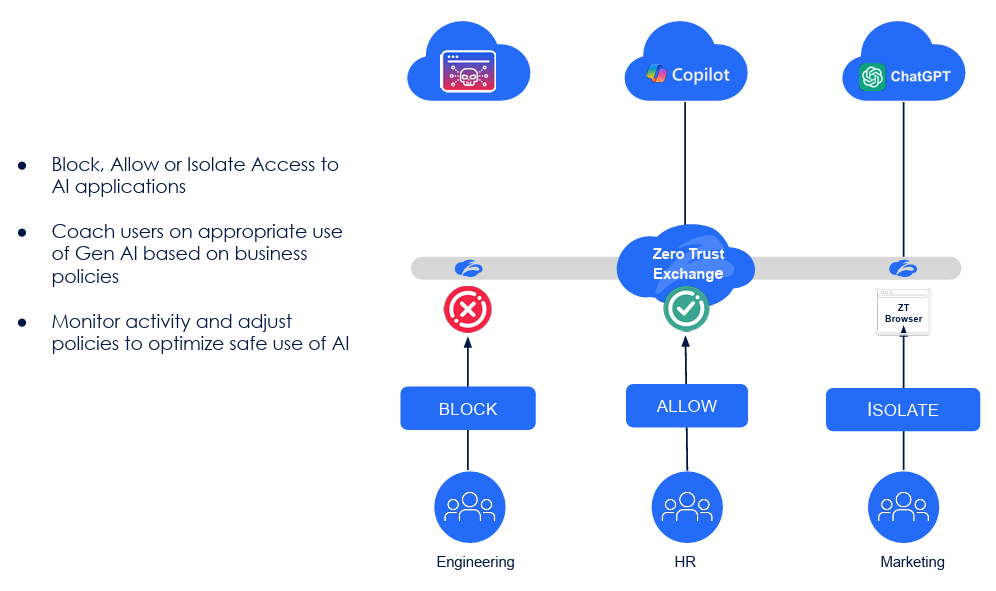

They help govern and manage that GenAI consumption across popular tools such as Copilot and ChatGPT by making organizational security policies actionable, allowing teams to Block, Allow or Isolate that network traffic. Lastly, they aim to help organizations secure the using with native inline DLP capabilities that can mitigate sensitive data being sent to GenAI providers, which is a leading concern for CISOs.

As I mentioned above, Jay advocates that security must leverage this same emerging technology to keep pace with attackers, and that is what Zscaler is providing with their AI-powered data classification capabilities that come with custom and pre-defined dictionaries to mitigate the loss of sensitive data types such as IP or PII.

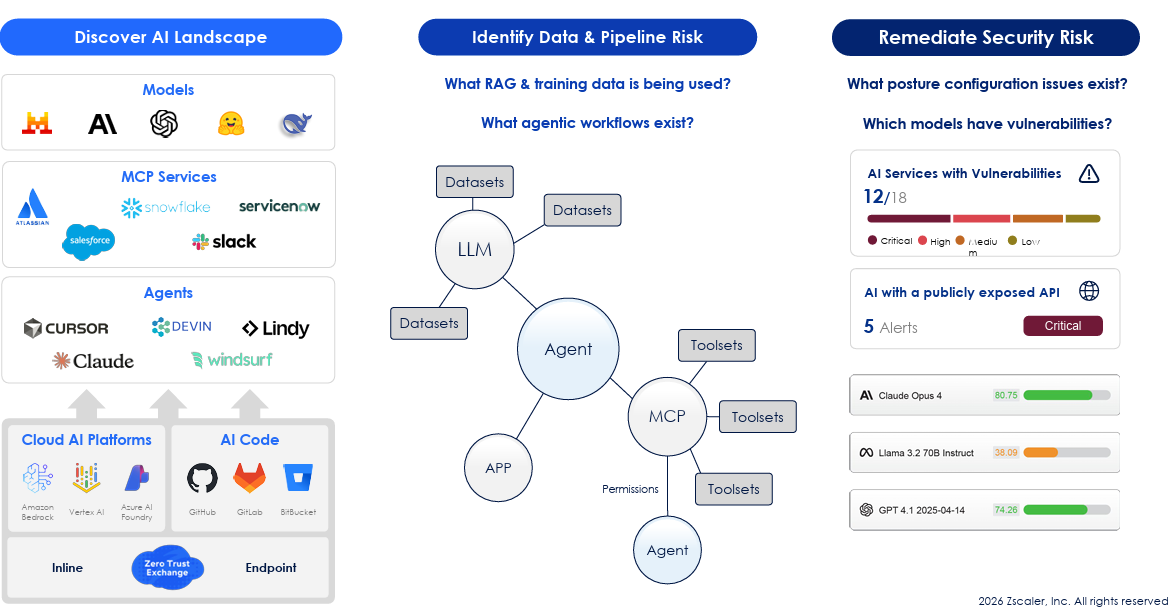

It isn’t just public GenAI providers that security is concerned with though when it comes to AI consumption. There is also the rise of AI coding tools, and the need to have visibility of AI workloads running in cloud environments or using critical AI dependencies in their source code management (SCM) systems. Zscaler is looking to provide a unified dashboard to give organizations full visibility of their AI landscape and usage.

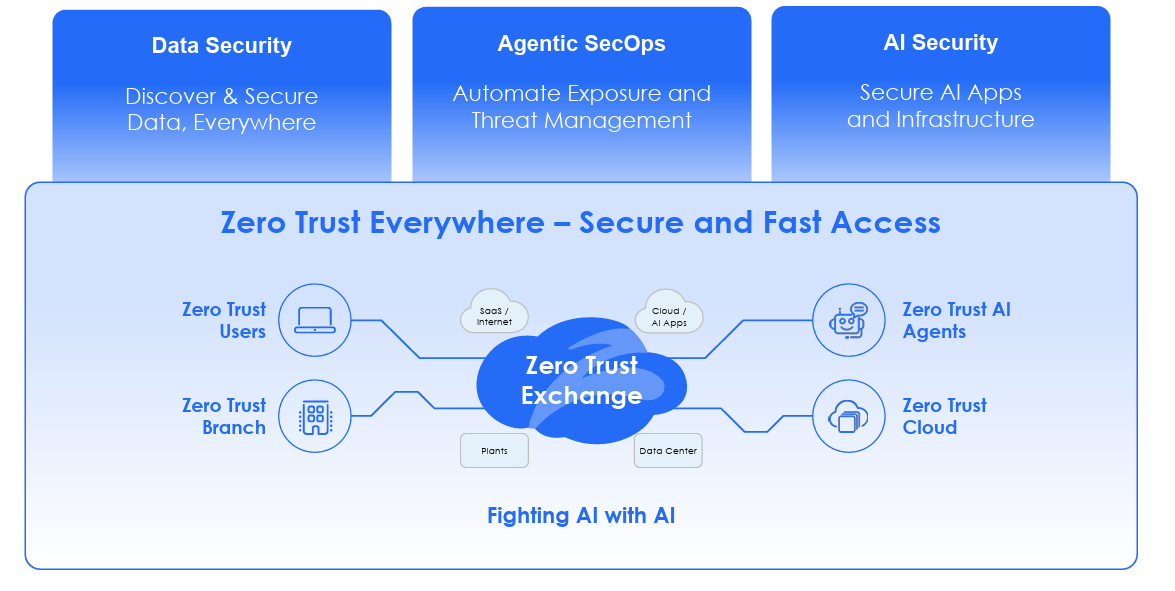

This convergence of rampant SaaS consumption, opaque cloud workloads using AI and now an unprecedented level of AI consumption across frontier model providers, AI coding tools and more is creating challenges for organizations when it comes to AI sprawl, posture and inspection. This is why Zscaler is building on their foundations in Zero Trust and looking to extend those principles to the modern landscape of AI.

This includes helping organizations understand their AI footprint and risks when it comes to asset management, securing access to those AI applications to enable safe and responsible use aligned with organizational policies and also secure AI applications and infrastructure the organization is hosting in addition to consuming.

What makes AI asset management more complex than traditional IT and SaaS is the complexity of its footprint. This includes models, MCP services, agents and the various cloud AI platforms and code dependencies that are involved. It also needs to provide visibility of implementations such as RAG, agentic workflows and the datasets being used in training for organizations doing post-training activities on custom models internally.

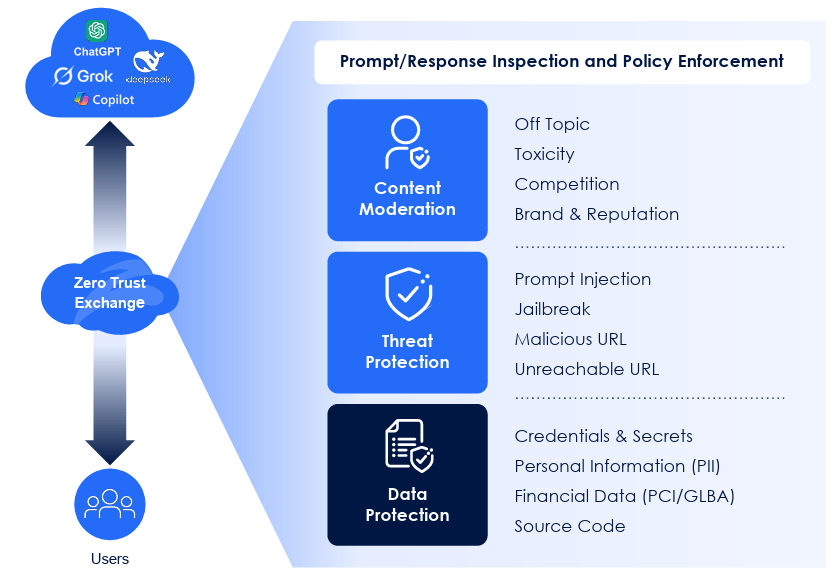

Visibility and governance are necessary and critical steps for AI security but organizations ultimately want to prevent potential incidents and risks with effective protections. That is why a key part of Zscaler’s Secure AI launch includes inline controls, where organizations can inspect traffic and prevent major risks such as sensitive data exfiltration or even moderating content with intent-based policies.

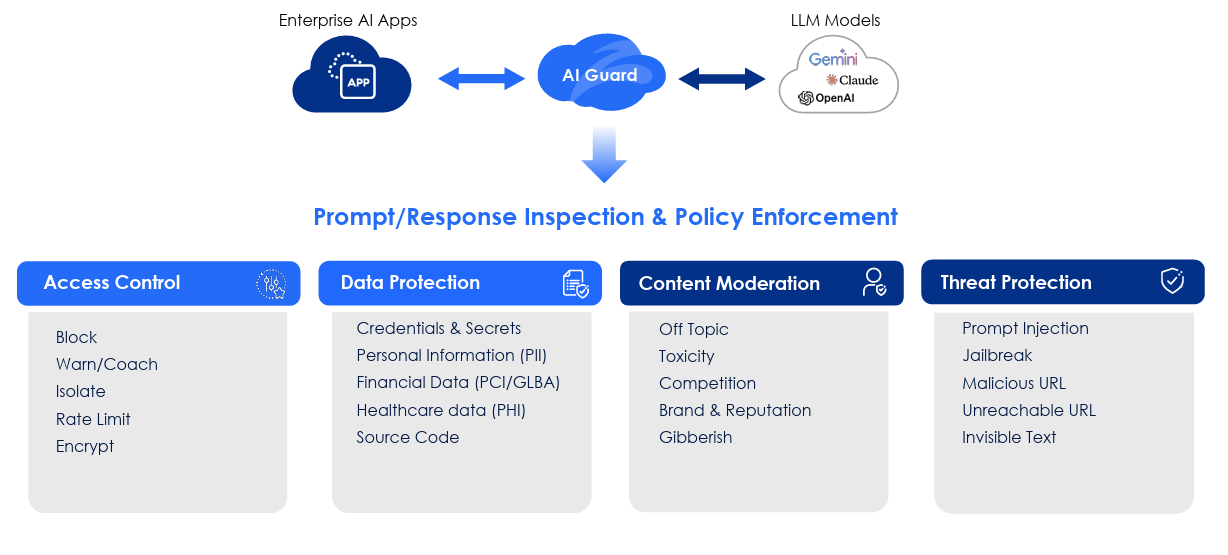

All of this is driven as part of Zscaler’s comprehensive zero trust platform with the implementation of AI Guard, which functions as a prompt/response inspection and policy enforcement engine covering areas such as Access Control, Data Protection, Content Moderation and Threat Protection. This includes for internal enterprise AI applications as well as externally consumed foundation models as well.

Attack Driven Defense

Zscaler joined the ranks of major cyber leaders making AI security acquisitions in 2025, when it was announced that they were acquiring SPLX. For those unfamiliar, SPLX focuses on automated red teaming, which is simulating attacks to find vulnerabilities in AI systems. This includes potential attacks involving techniques such as prompt injections, jailbreaks, and sensitive data exposure among others.

If you’re like me, you likely watch these major acquisitions and look to see how leading cyber players integrate the acquired teams and capabilities into their existing offerings and platforms. The acquisition of SPLX was done with intent, with Zscaler’s CEO Jay Chaudhry mentioning in the press release:

“By combining SPLX’s technology with the intelligence of the Zscaler Zero Trust Exchange and its native data protection that classifies, governs, and prevents loss of sensitive data across prompts, models, and outputs, Zscaler will secure the entire AI lifecycle on one platform. This will strengthen our industry leadership and give customers the confidence to safely embrace AI.”

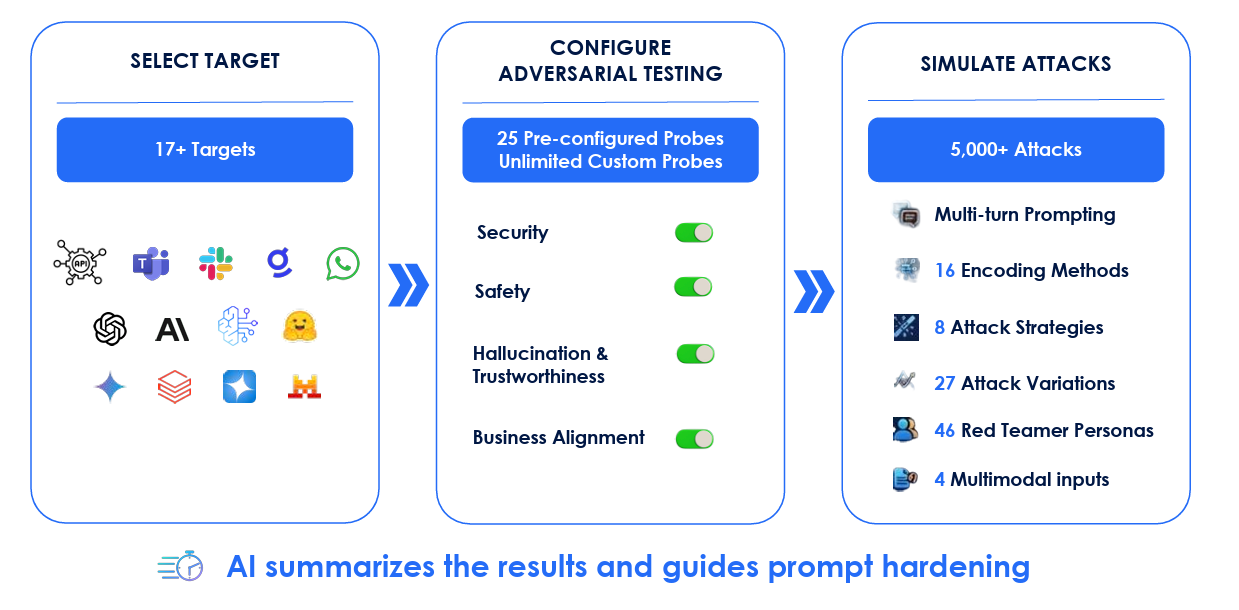

But what does that look like in practice, and how does it tie into Zscaler’s Secure AI launch? Zscaler is using the addition of SPLX to their platform to help organizations close AI vulnerabilities with continuous red teaming.

This includes coverage for tens of existing targets, such as OpenAI, DataBricks, Gemini and HuggingFace among others and being able to configure adversarial testing, either through preconfigured probes or customized use cases to meet organizational needs. From there, organizations can simulate thousands of potential attacks, using different attack strategies, red team personas and even have coverage for multimodal inputs, which are poised to rise, such as voice and video.

Zscaler is looking to capitalize on the insights from AI Red Teaming by integrating it with AI Guard to help close vulnerabilities that were discovered and using offensive security to inform defensive operations and security posture.

Resources

For those looking to accelerating their AI initiatives, Zscaler stood up a hub of resources with various discussions and additional resources for those looking to learn more, be sure to check it out.

If you’re interested in learning more, you can check out Zscaler’s upcoming webinar “Securing AI Apps and Infrastructure”. They’ll be covering:

How to configure and execute continuous AI red teaming on target systems, leveraging probes that cover security, safety, hallucinations & trustworthiness and business alignment

How to remediate discovered vulnerabilities and harden system prompts

How to deploy intent-based detectors to block malicious attacks, like prompt injection, jailbreak, malicious URLs and more

How to ensure the responsible use of AI with content moderation on responses governing off topic, toxicity, brand and reputational damage and more.

Closing Thoughts

As I opened the article, many have been curious to see how one of the security industry leaders in Zscaler was going to approach AI Security. That answer is here now, as they bring their deep expertise in Zero Trust to the world of AI, launching AI Guard, to help organizations Discover, Manage and Secure their AI Footprint.

This includes focusing on key areas such as enterprise applications and models, and potential risks across access control, data, workloads and more. The acquisition of SPLX and integration into their approach lets them utilize automated red teaming across some of the most popular AI platforms and providers to take a threat informed approach to defending enterprise environments and bolstering security postures across AI ecosystems.

Zscaler will definitely be one of the major players in the space to keep an eye on moving forward as we see AI adoption continue to mature and evolve due to their unparalleled telemetry, existing coverage and capabilities, ability to execute and now building on all of that to specifically focus on AI security.

This is an exceptionally comprehensive breakdown of Zscaler's AI security strategy and it really highlights how zero trust principles are evolving for the AI era. The integration of SPLX for automated red teaming is particullarly smart—most orgs struggle with manual testing at scale and this kinda continuous adversarial approach is exactly what's needed. I've been working with enterprise clients on their AI governance frameworks and the visibility gap around shadow AI usage (especialy embedded in SaaS) is massive, so Zscaler's network position gives them a huge advantage here.

Great piece. Although not sure if red teaming a frontier model is enough. AI security is more about managing the newly formed probabistic risk surface - by securing systems and data flows that are touched by LLMs