A Look at the UK's National Cyber Security Centre's Vulnerability Management Guidance

The UK’s National Cyber Security Centre (NCSC) recently published vulnerability management guidance with some forward-leaning recommendations worth taking note of.

They open the guidance by pointing out that vulnerabilities take many different forms. These include inherent characteristics of the software itself (e.g., flaws and defects) as well as configuration issues, either of which may present an opportunity for exploitation by malicious actors. Additionally, some vulnerabilities may be known to the vendor, while others aren’t and have no mitigations available (e.g., zero-days).

The guidance lays out key principles for establishing effective vulnerability management processes and notes how easy it is to get overwhelmed by the sheer number of vulnerabilities. An effective prioritization scheme is therefore critical to focusing on the most relevant and critical risks.

There’s also a specific call-out that vulnerability management cannot exist in a silo and needs to be an organization-wide, cross-collaborative effort. This includes awareness and input from groups such as senior leadership, and even reporting to the board for visibility into posture. For boards, they provide the “Cybersecurity Toolkit for Boards” as a resource to help boards more effectively govern cyber risk.

The guidance goes on to lay out what they dub their “Vulnerability Management Principles”, which we will touch on below:

Vulnerability Management Principles

Put in place a policy to update by default

This one is fairly simple: it emphasizes the need to apply updates (e.g., patches) as quickly as possible. We know this is critical because, on average, malicious actors weaponize vulnerabilities faster than defenders remediate them (their mean time to remediate). This is highlighted in reports such as Qualys’ 2023 TruRISK Research Report.

So not only do defenders struggle to remediate most vulnerabilities, but malicious actors also outpace their remediation timelines by roughly 11 days. Given that most organizations work five-day weeks, this means malicious actors have two work weeks to exploit, abuse, establish a foothold, pivot laterally, exfiltrate data and more before defenders even remediate a vulnerability. By then, remediation could be moot if the attacker has already established persistent access or carried out their nefarious activities.

This is likely why the NCSC recommends that organizations put a policy in place to apply updates automatically by default. That said, some organizations will, of course, pause at this idea, given reliability and operational concerns about disruption from updates, as well as the difficulty of testing thoroughly when updates are applied automatically.

The guidance makes several recommendations on rolling out updates, including testing them on your own systems through phased and staged rollouts and canary deployment models, both to minimize disruption to production systems and to accelerate patching. It also recommends using Infrastructure-as-Code (IaC) and automation to accelerate patching and updates and to ease the administrative burden. Obviously, these recommendations are less practical in disconnected, air-gapped, on-premises and other environments that aren’t as cloud-native.
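To make the phased-rollout idea more concrete, here is a minimal Python sketch of ring-based, canary-first deployment. It assumes a hypothetical three-ring layout (`canary`, `early`, `broad`) with made-up fleet percentages and a made-up 2% failure-rate gate. None of these values come from the NCSC guidance, and in practice this logic would usually live inside your deployment or IaC tooling.

```python
import hashlib

# Hypothetical ring layout: (ring name, cumulative share of the fleet).
# Names and percentages are illustrative assumptions, not NCSC values.
RINGS = [("canary", 0.05), ("early", 0.25), ("broad", 1.0)]


def assign_ring(host_id: str, rings=RINGS) -> str:
    """Deterministically place a host into a rollout ring.

    Hashing the host ID yields a stable bucket between 0 and 1, so a host
    stays in the same ring from one update cycle to the next.
    """
    digest = hashlib.sha256(host_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    for name, cumulative_share in rings:
        if bucket < cumulative_share:
            return name
    # Guards against float rounding at the very top of the range.
    return rings[-1][0]


def group_by_ring(host_ids, rings=RINGS) -> dict:
    """Group hosts by ring, in rollout order (canary first)."""
    plan = {name: [] for name, _ in rings}
    for host in host_ids:
        plan[assign_ring(host, rings)].append(host)
    return plan


def ready_to_promote(failed: int, patched: int, max_failure_rate: float = 0.02) -> bool:
    """Gate: widen the rollout only if the current ring's failure rate is acceptable."""
    if patched == 0:
        return False  # nothing observed yet, so no evidence the update is safe
    return failed / patched <= max_failure_rate


if __name__ == "__main__":
    plan = group_by_ring([f"host-{i:03d}" for i in range(200)])
    for ring, hosts in plan.items():
        print(ring, len(hosts))
    print(ready_to_promote(failed=0, patched=len(plan["canary"])))
```

Hashing the host ID rather than picking hosts at random keeps ring membership stable between update cycles. As a result, the same small canary group sees every update first.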

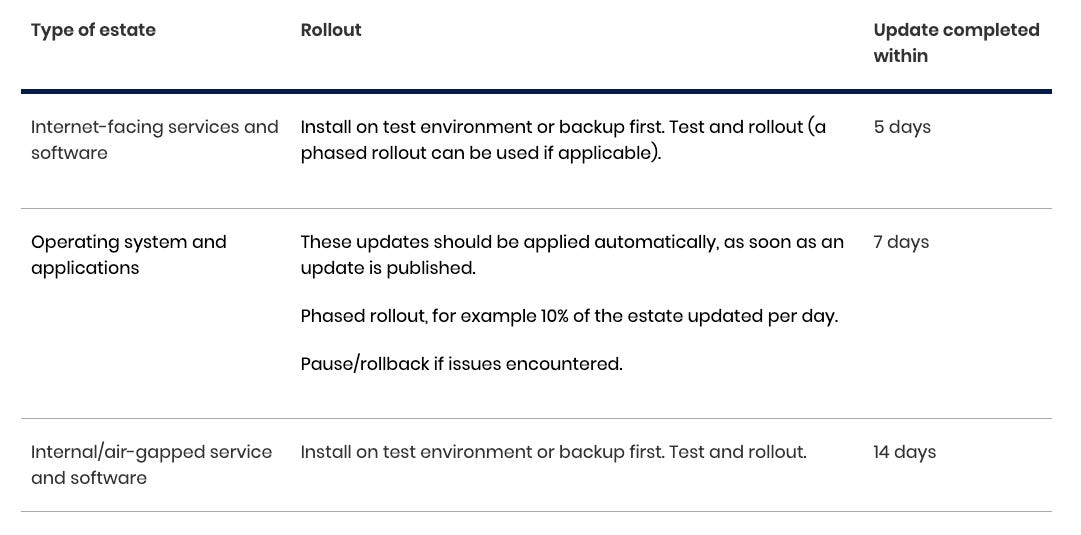

NCSC makes specific recommendations as depicted below for business/mission-critical systems.

Source: Patrick Garrity

This shows that publicly facing systems require the most expedited updates, given their ease of access and exposure to malicious actors, with slightly less aggressive timelines for operating systems, applications and internal air-gapped software. That said, organizations shouldn’t draw a false sense of security from internal systems. The legacy perimeter-based security model is antiquated and should be replaced with a zero trust model, so don’t assume internal systems are less susceptible to exploitation.

The guidance goes on to note that these timelines apply when conditions are normal (are they ever?), and that there are situations where exploitation will drive deviations from them. Resources such as the CISA Known Exploited Vulnerabilities (KEV) catalog tell us when exploitation is already active, while the Exploit Prediction Scoring System (EPSS) helps gauge whether exploitation is likely or imminent.

Organizations should also pay close attention to vendor advisories for specific insights on the vulnerability, known exploitation and recommended mitigations. This matters most when a patch isn’t available yet and compensating controls are required.
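The sketch below illustrates one way default timelines and exploitation signals might combine. It computes an update due date per system category and shortens that date when a CVE is in the KEV catalog or has an EPSS score above a cut-off. The day counts in `EXAMPLE_SLA_DAYS`, the two-day `EXPEDITED_DAYS` window and the 0.1 EPSS threshold are illustrative placeholders, not NCSC's figures, so substitute the values from the NCSC table and your own policy. The `kev_ids` set and `epss_scores` mapping are assumed to be loaded elsewhere, for example from CISA's published KEV data and FIRST's EPSS data. The CVE IDs used are hypothetical.

```python
from datetime import date, timedelta

# PLACEHOLDER maximum update windows (calendar days) per system category.
# Illustrative only: replace with the NCSC timelines or your own policy.
EXAMPLE_SLA_DAYS = {
    "internet_facing": 7,
    "operating_system": 21,
    "application": 21,
    "internal_air_gapped": 30,
}
EXPEDITED_DAYS = 2    # hypothetical window when exploitation is known or likely
EPSS_THRESHOLD = 0.1  # hypothetical EPSS cut-off (EPSS scores range from 0 to 1)


def needs_expedite(cve_id: str, kev_ids: set, epss_scores: dict,
                   threshold: float = EPSS_THRESHOLD) -> bool:
    """True if the CVE is known exploited (KEV) or likely to be exploited (EPSS)."""
    return cve_id in kev_ids or epss_scores.get(cve_id, 0.0) >= threshold


def update_due_date(cve_id: str, category: str, released: date,
                    kev_ids: set, epss_scores: dict,
                    sla_days: dict = EXAMPLE_SLA_DAYS) -> date:
    """Latest date to apply an update: normal window, shortened under active threat."""
    if category not in sla_days:
        raise ValueError(f"unknown system category: {category!r}")
    days = sla_days[category]
    if needs_expedite(cve_id, kev_ids, epss_scores):
        days = min(days, EXPEDITED_DAYS)
    return released + timedelta(days=days)


if __name__ == "__main__":
    kev = {"CVE-0000-0001"}  # hypothetical IDs for illustration
    epss = {"CVE-0000-0002": 0.42, "CVE-0000-0003": 0.01}
    for cve in ("CVE-0000-0001", "CVE-0000-0002", "CVE-0000-0003"):
        print(cve, update_due_date(cve, "application", date(2024, 1, 1), kev, epss))
```

With these placeholder values, the KEV-listed and high-EPSS CVEs both come due on 2024-01-03. The low-EPSS CVE keeps the normal 21-day application window and comes due on 2024-01-22.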

Asset Identification

The second section of the NCSC guidance discusses asset identification. This comes as no surprise. Hardware and software asset inventories have been among the top SANS/CIS Critical Security Controls for years, and the community has well-known phrases such as “you can’t protect what you don’t know you have” (or “can’t see,” in the push around cloud and observability).

The guidance also correctly points out that it is important to know not just what assets you have, but who they belong to.

A vulnerability without an owner goes un-remediated.

Organizations need to know what assets they have, who they belong to and the expectations of asset owners when it comes to vulnerability management. This includes activities such as reporting, remediation, prioritization and more.
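The ownership point lends itself to a simple check. This minimal sketch uses hypothetical `Asset` and `Finding` types. It routes scanner findings to each asset's owner and collects anything on an unowned or uninventoried asset into a separate bucket for escalation, so those findings don't quietly go unremediated.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Asset:
    asset_id: str
    owner: Optional[str]  # accountable team or person; None if nobody is assigned


@dataclass
class Finding:
    asset_id: str
    cve_id: str


def route_findings(findings: List[Finding],
                   inventory: List[Asset]) -> Tuple[Dict[str, List[Finding]], List[Finding]]:
    """Group findings by asset owner; collect orphaned findings separately.

    A finding is orphaned if its asset is missing from the inventory
    (you can't protect what you don't know you have) or has no owner.
    """
    assets = {a.asset_id: a for a in inventory}
    by_owner: Dict[str, List[Finding]] = {}
    orphaned: List[Finding] = []
    for finding in findings:
        asset = assets.get(finding.asset_id)
        if asset is None or not asset.owner:
            orphaned.append(finding)
        else:
            by_owner.setdefault(asset.owner, []).append(finding)
    return by_owner, orphaned
```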

NCSC recommends their “Guidance for Organizations about Asset Management” as an accompanying resource.

There is also the need to deal with obsolete and end-of-life software and products. While “apply patches” works for products that are actively supported, it doesn’t work so well for obsolete and end-of-life products. Yet these products exist in most large enterprise environments for several reasons, including resource constraints on upgrading or migrating to other products and their ties to critical systems or operations.

NCSC recommends the use of their “Guidance for Obsolete Products” for a deeper dive on the topic.

While the guidance doesn’t specifically call out open source software (OSS), from my perspective there is even more nuance here. It can be hard to tell what is “end-of-life,” since project maintainers may never make a formal declaration, and much of the OSS ecosystem stays in use without receiving active updates and maintenance.
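For OSS without a formal end-of-life declaration, one rough heuristic is release recency. The sketch below assumes a hypothetical staleness threshold of roughly 18 months (540 days). A quiet project is not necessarily abandoned, since some mature libraries rarely need releases, so treat the output as a prompt for human review rather than a verdict.

```python
from datetime import date
from typing import Optional

STALE_AFTER_DAYS = 540  # hypothetical threshold, roughly 18 months


def maintenance_status(last_release: date, today: date,
                       declared_eol: Optional[date] = None,
                       stale_after_days: int = STALE_AFTER_DAYS) -> str:
    """Classify a dependency as 'end-of-life', 'possibly-unmaintained' or 'active'.

    A formal EOL date wins when one exists; otherwise release recency is used
    as a rough proxy, which needs human review before acting on it.
    """
    if declared_eol is not None and today >= declared_eol:
        return "end-of-life"
    if (today - last_release).days > stale_after_days:
        return "possibly-unmaintained"
    return "active"
```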

NCSC stresses the role of configuration management and recommends, where possible, the use of Infrastructure-as-Code and Configuration-as-Code (IaC/CaC) to help automate implementation and remediation at scale.

Carrying out assessments by triaging and prioritizing

While updating and patching is ideal, there are plenty of situations where a fix isn’t available or doesn’t completely resolve the vulnerability or the potential for exploitation.

This is where additional activities such as triage and prioritization are required.

NCSC recommends ongoing vulnerability assessments, at least monthly, across an organization’s entire estate. While they don’t specifically use the term, organizations are, and should be, moving towards active Attack Surface Management (ASM), which Palo Alto Networks defines as:

“the process of continuously identifying, monitoring and managing all internal and external internet-connected assets for potential attack vectors and exposures”
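A monthly assessment cadence is easy to track mechanically. This sketch assumes a hypothetical mapping of asset IDs to their last assessment date. It lists assets that have never been assessed or whose last assessment is older than 31 days, an assumed stand-in for “monthly.”

```python
from datetime import date, timedelta
from typing import Dict, List, Optional


def assessment_gaps(last_assessed: Dict[str, Optional[date]], today: date,
                    max_age_days: int = 31) -> List[str]:
    """Return asset IDs never assessed, or last assessed over max_age_days ago."""
    cutoff = today - timedelta(days=max_age_days)
    return sorted(asset_id for asset_id, when in last_assessed.items()
                  if when is None or when < cutoff)
```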

Additionally, while NCSC doesn’t call out penetration testing or activities such as Purple Teaming and Adversary Emulation in this section, mature organizations are implementing these practices in addition to basic activities such as regular vulnerability scanning.

NCSC recommends that software suppliers have defined vulnerability disclosure programs (VDPs) and provides its “Vulnerability Disclosure Toolkit” as a resource for suppliers.

It is also worth noting that defined VDPs are gaining traction in the U.S. They appear in guidance such as NIST’s Secure Software Development Framework (SSDF) and are being called for in emerging Federal requirements for suppliers selling to the U.S. Federal government.

When it comes to vulnerability triage, NCSC points out that not all vulnerabilities can or will be remediated immediately. Organizations may face resource constraints or prioritization challenges, or the supplier may not have released a fix yet. Additionally (and thankfully), NCSC notes that CVSS base severity scores may help inform prioritization, but organizations should also consider the business impact and risk of a vulnerability rather than relying on CVSS scores alone.
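To illustrate weighing business context alongside CVSS, here is a toy scoring function. The criticality weights and the internet-facing multiplier are hypothetical values chosen only for this example. NCSC doesn’t prescribe a formula, and real programs would calibrate any such weights against their own risk appetite.

```python
# Hypothetical weights for illustration; NCSC does not prescribe a formula.
CRITICALITY_WEIGHT = {"low": 0.5, "medium": 1.0, "high": 1.5}
INTERNET_FACING_MULTIPLIER = 1.2


def contextual_priority(cvss_base: float, criticality: str, internet_facing: bool) -> float:
    """Scale a CVSS base score by business criticality and exposure."""
    if not 0.0 <= cvss_base <= 10.0:
        raise ValueError("CVSS base score must be between 0.0 and 10.0")
    score = cvss_base * CRITICALITY_WEIGHT[criticality]
    if internet_facing:
        score *= INTERNET_FACING_MULTIPLIER
    return round(score, 2)
```

With these made-up weights, a 6.5 on a high-criticality, internet-facing asset scores 11.7. That outranks a 9.8 on a low-value internal asset, which scores 4.9. This is exactly the kind of reordering a CVSS-only approach misses.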

NCSC lays out three options for a triage process, which are:

Fix

Acknowledge

Investigate

Fix is straightforward: implement a fix when possible, or implement compensating controls. Alternatively, some organizations may simply acknowledge a vulnerability and, depending on the severity, capture it in a risk register (or a Plan of Action and Milestones (POA&M), in U.S. Federal-speak), along with planned review or remediation dates.

This is essentially risk acceptance, at least until, or if, remediation occurs.

Lastly, there is the option to investigate, for when the organization doesn’t have enough information on the specific cost or method of remediation.
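The sketch below shows one possible reading of the three triage options as a decision function. The boolean inputs are hypothetical simplifications: NCSC describes the options, not this logic.

```python
from enum import Enum


class Triage(Enum):
    FIX = "fix"                  # apply the fix or a compensating control
    ACKNOWLEDGE = "acknowledge"  # record in a risk register / POA&M with a review date
    INVESTIGATE = "investigate"  # gather more information on cost or method first


def triage(remediation_understood: bool, fix_or_control_available: bool,
           business_accepts_risk: bool) -> Triage:
    """Map a vulnerability to one of the three triage outcomes."""
    if not remediation_understood:
        return Triage.INVESTIGATE
    if fix_or_control_available and not business_accepts_risk:
        return Triage.FIX
    # Either the business has accepted the risk, or nothing can be applied yet:
    # acknowledge it and track it until it can be remediated or re-reviewed.
    return Triage.ACKNOWLEDGE
```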

The organization must own the risks

My personal favorite aspect of the NCSC guidance is the explicit call out that “the organization must own the risks of not updating”.

Let’s read that again, slower, for those who struggle with the concept:

“THE BUSINESS OWNS THE RISK”

This is a concept many in our community simply don’t grasp. While security can, and often does, explain, advise, recommend and plead, ultimately the business OWNS the decisions around risk.

Anyone who has been a cyber practitioner for some time knows this scenario all too well. You work with engineering, development and business peers to explain the risk of doing some “thing” or making a decision. The advice falls on deaf ears or is ignored (or “noted,” to put it more politely), and the business presses on anyway.

This is due to the reality that cybersecurity is but one risk the organization must consider, not the only risk. There are other factors such as speed to market/mission, revenue, market share, competitive advantage and more, all of which must be considered alongside security.

Now, some may rightly contend that cybersecurity has been, and continues to be, placed below those other considerations, and that argument often has merit. But it doesn’t change the fundamental premise that security is one consideration among many for the business.

Of course, this argument gets much trickier when we discuss software suppliers/manufacturers who decide to ship subpar, insecure products and essentially externalize the cost of insecurity onto the market and consumers. That, however, ventures into the territory of software liability. I have covered that topic extensively in articles, as well as in a recent discussion with researchers and scholars Chinmayi Sharma and Jim Dempsey on my Resilient Cyber show.

So, as the guidance states, “The decision not to fix an issue is, at root, a senior-level business risk decision, not an IT problem, and every organization has its own risk appetite”.

NCSC provides a robust set of considerations for deciding whether to fix an issue, such as:

Risk-based prioritization (including resources such as CVSS and CISA KEV along with Threat Intel)

Potential impacts to the system and service

Reputational damage

Resources and cost to fix an issue

Cost of incident response and recovery, as well as regulatory or legal ramifications (which may become a much more relevant consideration if concepts such as software liability for suppliers materialize)

If risk acceptance decisions are made, the organization should thoroughly document those decisions, the rationale behind them and the resulting residual risk.
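A documented risk acceptance can be captured as a structured record so that gaps are easy to spot. This sketch uses a hypothetical `RiskAcceptance` record. Its rationale and residual-risk fields mirror what the guidance asks organizations to document. The accountable business owner and the review date are assumed additions.

```python
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass(frozen=True)
class RiskAcceptance:
    cve_id: str
    asset_id: str
    business_owner: str  # senior business owner accepting the risk, not the security team
    rationale: str       # why the decision not to fix was made
    residual_risk: str   # what exposure remains as a result
    review_by: date      # when the decision must be revisited

    def problems(self, today: date) -> List[str]:
        """Return a list of documentation gaps; an empty list means none found."""
        issues = []
        for field_name in ("business_owner", "rationale", "residual_risk"):
            if not getattr(self, field_name).strip():
                issues.append(f"missing {field_name}")
        if self.review_by <= today:
            issues.append("review is due")
        return issues
```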

They emphasize the point again to close out this section:

“It’s important that the business OWNS the risk, not the security team, and that it is visible to senior leaders”

Verify and regularly review your vulnerability management process

NCSC closes out its guidance by stressing that vulnerability management processes should constantly evolve due to the dynamic nature of organizations, threats and vulnerabilities.

This requires regular review of the vulnerability management plan/process, as well as verification of its effectiveness.

Penetration tests do make an appearance in this section, as a way to verify that the vulnerability management process is working as intended, and NCSC provides separate “guidance on penetration testing”.

Conclusion

To close, let’s recap. Organizations must have a defined, documented, regularly verified and continually iterated vulnerability management process in place. It should lean into concepts such as updating by default and automating patching where possible. And without effective asset inventory and identification, any vulnerability management effort will falter.

Organizations should conduct regular vulnerability assessments coupled with organizationally defined triaging and prioritization processes.

And most importantly of all, the business must OWN the risk of not remediating vulnerabilities, not security.

This means our job in security is to help empower the business to make risk-informed decisions.

Below is a comprehensive checklist created by Patrick Garrity of VulnCheck.

(Shout out to my friend Patrick Garrity who created some of the images I share above in the article, and for those looking for a video recap of the NCSC guidance, he has one here).